Affective Postures Classification using Bones Rotations Features from Motion Capture Data

Conference Paper · November 2013

Lukman Zaman∗†

∗Informatics Engineering Department ∗Sekolah Tinggi Teknik Surabaya (STTS)

Surabaya, Indonesia

Email: [email protected], [email protected]

Surya Sumpeno†, Mochamad Hariadi† †Electrical Engineering Department †Institut Teknologi Sepuluh Nopember (ITS)

Surabaya, Indonesia Email:{surya,mochar}@ee.its.ac.id

Abstract—Emotion recognition based on affective body postures can be quite a challenging task. The challenge arises mainly from the characteristics of the features that must be computed. Although the number of bones in the skeletal model from a motion capture system is relatively small compared to a real human, each bone has its own geometric attributes, i.e. its position and transformations along the 3D axes. There are also many variations between one person's postures and another's, even when they portray the same emotion. This paper explores the possibility of classifying affective postures into four basic emotions based on bone rotations. The marker positions from the motion capture system were mapped onto a skeletal model and converted into bone rotations. Each motion has an apex frame that acts as the most representative posture for a particular emotion. The classifiers use the bone rotations in these apex frames as features. Experiments show that Multilayer Perceptron (MLP) and Sequential Minimal Optimization (SMO) with a Radial Basis Function (RBF) kernel are able to classify these postures with classification accuracy of up to 80%. We also find that postures for anger are the most difficult to distinguish accurately.

Index Terms—Affective computing, motion capture, posture classification, Naive Bayes, k-NN search, Multilayer Perceptron, MLP, Support Vector Machine, SVM

I. INTRODUCTION

Affective computing focuses on machine learning that is able to recognize human emotion [1]. For nonverbal interactions, facial expressions and body gestures can be used as input for emotion recognition. This is based on the fact that facial expressions, as well as body gestures, are related to emotions, as shown in [2], [3], [4] and [5].

According to [6], human emotion can be categorized into a set of basic emotions. This set comprises six emotions: fear, anger, happiness, sadness, surprise and disgust. Such categorization greatly simplifies the recognition task because, instead of describing emotions from a set of facial or body features, machine learning systems only need to assign each sample to one of a finite set of classes.

Machine learning systems also use visual data as input to mimic the human ability to recognize emotion from visual cues. This input can be either 2D data (e.g. photographs) or 3D data from a motion capture (mocap) system.

The challenges in emotion recognition from body gestures lie mainly in the complexity and variation of the features. A bone configuration has more degrees of freedom than facial features.

Studies have pointed out that cultural differences must also be considered. People from different cultures sometimes express the same emotion in different ways, and they may recognize the same posture as different emotions [7].

Compared to facial expressions, there is currently only a small amount of research on affective computing from body gestures. Examples of such work are [8], [9] and [10]. The authors of [8] used hierarchical neural detectors, based on a visual cortex model, to recognize emotion from photographs. The input consists of photographs of several posing actors displaying basic emotions. Their system can classify those photographs into seven basic emotions (including a neutral pose) with an accuracy of 82%, compared to 87% for classification done by humans.

Emotion classification using 3D data of body gestures was carried out in [10]. The authors used a Vicon mocap system to acquire motion data. This mocap system yields a set of marker positions in 3D space that represent the actor's posture. The marker positions are recorded at a certain frame rate, and motions are defined by the transformations of each marker over a sequence of frames. Machine learning methods including Naive Bayes, decision tree, SMO SVM and multilayer perceptron were used to classify the motion data into four basic emotions. SMO SVM was found to perform better in the classification task than the other methods. Their proposed method gives classification accuracy between 84% and 92%.

Projections of body postures onto three orthonormal planes are used as kinematic features in [9]. These features were selected based on motions in dancing. An incremental categorization process on these features was used to recognize happy, angry and sad emotions. In later work [11], these postural features are used to recognize emotions in affective dimensions. Unlike Ekman's discrete emotions, these dimensions are Likert-type scales in terms of valence, arousal, potency and avoidance. With this method, 70% of the postures can be recognized correctly.

As an alternative to acquiring data ourselves, there are a number of repositories that provide data for this kind of research. For affective body postures, BEAST (The Bodily Expressive Action Stimulus Test) contains a collection of photographs of affective poses. These poses were performed by actors portraying four basic emotions: happy, sad, fear and anger [12].

978-1-4673-6278-8/13/$31.00 ©2013 IEEE

The UCLIC Affective Body Posture and Motion Database provides useful data for research on emotion detection from body gestures, as it contains human motion data showing affective gestures. This database consists of two parts. One part, called the acted corpus [7], contains body gestures performed by nonprofessional actors portraying angry, fear, happy and sad emotions. The other part, called the naturalistic corpus [13], contains body motions of 11 people playing a Wii sports game. General body movement data are also publicly available from the CMU Graphics Lab Motion Capture Database [14]. This repository contains thousands of body motions captured by a mocap system. The collection covers a wide range of human motions such as walking, dancing and other daily activities.

Based on the research above, our work explores the possibility of classifying affective body gestures into basic emotions. We use bone rotations derived from mocap data as the features. In the rest of the paper, we first describe the data that we used. Feature extraction and selection from the data are explained next. The selected features are then classified using several classification algorithms, and finally the classification results are discussed.

II. MOTION DATABASE AND FEATURE EXTRACTION

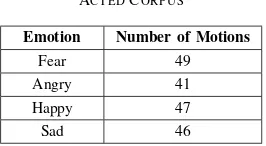

We use the acted corpus from the UCLIC database for our work. The corpus contains 183 sequences of human motion covering four basic emotions (Table I). Each sequence has a different number of frames. Some were recorded at 120 fps, the others at 250 fps.

TABLE I
ACTED CORPUS

| Emotion | Number of Motions |
|---|---|
| Fear | 49 |
| Angry | 41 |
| Happy | 47 |
| Sad | 46 |

These motion data are in CSM format, which contains an array of frames, each holding the positions of the Vicon mocap markers. Unlike [10], which used these marker positions to derive speed information, we retarget the data to a skeletal model prior to classification. Using the popular BVH format as a reference, we use a skeletal model that has 18 bones in hierarchical order (Fig. 1). This process involves deriving bone translations and rotations from the marker positions. With this skeletal model, a unique human posture can be defined by a unique bone configuration. Each bone has its own transformations, namely translation and rotation, which are relative to the parent bone. In this model, rotation information is stored for each bone in each frame, but the position of a bone in world coordinates must be calculated from its rotation value combined with the transformations of its parent bones (Eq. 1).

$$M = M_{self} \times M_{parent} \times M_{grandparent} \times \dots \times M_{root} \tag{1}$$

Fig. 1. Skeletal Model

In Eq. 1, each $M$ is a transformation matrix in the form of Eq. 2.

$$M = \begin{bmatrix} R & 0 \\ T & 1 \end{bmatrix} \tag{2}$$

The $R$ part, which also forms three orthonormal vectors, can be computed from the cross products of three marker positions [15]. The $T$ part is the translation component, computed from the bone's length.
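The chained product in Eq. 1 can be sketched in plain Python as follows. This is a minimal illustration, not the implementation used in this work. It assumes a row-vector convention (a point multiplies the matrix from the left), so that the product order self, parent, ..., root matches Eq. 1 as written. The helper names and the two-bone chain with its offsets and angles are hypothetical.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform(rot3, offset):
    """Build a 4x4 matrix [[R, 0], [T, 1]] (row-vector layout, an assumption)."""
    m = [list(row) + [0.0] for row in rot3]
    m.append(list(offset) + [1.0])
    return m

def rot_z(deg):
    """3x3 rotation about Z for row vectors (transpose of the column form)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]

def world_matrix(chain):
    """Eq. 1: M = M_self x M_parent x ... x M_root, with chain listed self-first."""
    m = chain[0]
    for ancestor in chain[1:]:
        m = mat_mul(m, ancestor)
    return m

def world_position(chain):
    """World position of the bone origin: the row vector [0, 0, 0, 1] times M."""
    return world_matrix(chain)[3][:3]

# Hypothetical chain: a child bone offset by one unit along X from a root
# that sits at (2, 0, 0) and is rotated 90 degrees about Z.
child = transform(rot_z(0), (1.0, 0.0, 0.0))
root = transform(rot_z(90), (2.0, 0.0, 0.0))
print(world_position([child, root]))  # approximately [2.0, 1.0, 0.0]
```

Because the root rotation turns the child's X offset into a Y offset, the child ends up one unit above the root, which illustrates why bone positions can be recovered from rotations once the bone lengths are fixed.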

As in the BVH format, Euler rotations are used. As visual features, Euler rotations represent bone rotation more intuitively than alternatives such as quaternions.
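To make the meaning of the three Euler angles concrete, the sketch below converts them into a 3x3 rotation matrix. The composition order is an assumption: BVH files declare the channel order per joint, and the order Z, X, Y used here (for column vectors) is only one common choice. The function names are illustrative.

```python
import math

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def euler_to_matrix(x_deg, y_deg, z_deg):
    """Compose R = Rz * Rx * Ry (assumed order) from angles in degrees."""
    x, y, z = (math.radians(v) for v in (x_deg, y_deg, z_deg))
    return mat_mul(rot_z(z), mat_mul(rot_x(x), rot_y(y)))

# A 90 degree rotation about Z maps the X axis onto the Y axis:
# the first column of R is then approximately (0, 1, 0).
R = euler_to_matrix(0.0, 0.0, 90.0)
```

Whatever order is chosen, the rows of the resulting matrix stay orthonormal, which is the property the $R$ part of a bone transformation relies on.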

Each motion sequence is several seconds long, recorded at either 120 fps or 250 fps. This means that each sequence has hundreds of frames, which leaves plenty of room for variation. Fortunately, the database comes with information about the apex frame of each sequence. These apex frames were selected by human observers as the most representative pose for a particular emotion. Figure 2 illustrates some of these poses as presented by the skeletal model. The emotions depicted there are fear (2a), happy (2b), angry (2c) and sad (2d).

Bone rotation data from these apex frames are used as input for the classifiers. Because the same skeletal model is used for every posture, bone positions can be discarded, as they can be computed from the rotations. With this setup, a body posture can be defined by Eq. 3.

$$\text{posture} = \{R_1 \times B_1,\; R_2 \times B_2,\; R_3 \times B_3,\; \dots,\; R_n \times B_n\} \tag{3}$$

where

$n$ = number of bones in the skeletal system

$R_{1..n}$ = Euler rotation for bone $1..n$

$B_{1..n}$ = bone $1..n$

Each Euler rotation consists of three angle values that specify the rotation around the X, Y and Z axes.
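As a small illustration of Eq. 3, a posture can be stored as one flat list of angles, three per bone. The sketch below assumes the bones are always listed in the same fixed order. The function name and the all-zero pose are hypothetical.

```python
N_BONES = 18  # number of bones in the skeletal model described above

def posture_features(bone_rotations):
    """Flatten per-bone (x, y, z) Euler angles into one feature vector."""
    features = []
    for rx, ry, rz in bone_rotations:
        features.extend((rx, ry, rz))
    return features

# Hypothetical apex pose in which every bone is unrotated.
apex_pose = [(0.0, 0.0, 0.0)] * N_BONES
vector = posture_features(apex_pose)
print(len(vector))  # 54
```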

III. THE CLASSIFIERS

Fig. 2. Apex pose examples: (a) Fear, (b) Happy, (c) Angry, (d) Sad

Each classifier uses bone rotations as input. Because each bone contributes three rotation values and there are 18 bones, 54 values are regarded as features for each posture. All 183 postures from the apex poses are used for learning and testing with 10-fold cross-validation (CV10) and leave-one-out cross-validation (LOOCV).
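The two evaluation schemes differ only in the number of folds, which the following sketch makes explicit. It is an illustration under assumptions: the shuffling is plain and unstratified, the seed is arbitrary, and the function name is hypothetical. The toolkit used for the experiments may split the data differently.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of nearly equal size.
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

N_POSTURES = 183
cv10 = list(k_fold_indices(N_POSTURES, 10))           # 10 folds of 18 or 19 postures
loocv = list(k_fold_indices(N_POSTURES, N_POSTURES))  # 183 folds of one posture each
```

LOOCV is simply the limiting case in which every posture is held out once, so it needs 183 training runs instead of 10.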

The Naive Bayes classifier is a statistical model that assumes each feature is independent of the other features [16]. We use this simple classifier as a common baseline for comparison with the other methods. It is also useful for obtaining an initial estimate of how classifiable the samples are.
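The independence assumption can be sketched as follows. This version assumes a Gaussian model for each numeric feature, which is one common choice and not necessarily the exact variant used in the experiments. The function names, the variance floor `eps` and the toy data are illustrative.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(samples, labels, eps=1e-6):
    """Estimate a log prior and per-feature mean and variance for each class."""
    by_class = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)
    model = {}
    for y, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # eps keeps the variance positive when a feature is constant.
        variances = [sum((v - m) ** 2 for v in col) / n + eps
                     for col, m in zip(columns, means)]
        model[y] = (math.log(n / len(samples)), means, variances)
    return model

def predict_gaussian_nb(model, x):
    """Pick the class with the highest log posterior under independence."""
    def log_posterior(params):
        log_prior, means, variances = params
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances))
    return max(model, key=lambda y: log_posterior(model[y]))

# Toy two-feature data standing in for the 54 rotation features.
toy_x = [[0.0, 0.0], [1.0, 1.0], [10.0, 10.0], [11.0, 11.0]]
toy_y = ["sad", "sad", "happy", "happy"]
nb = fit_gaussian_nb(toy_x, toy_y)
```

Because the per-feature log likelihoods are simply added, correlations between the rotations of neighboring bones are ignored, which is the simplification the classifier's name refers to.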

k-NN uses the distances between a sample and its k nearest neighbors in feature space. These distances are used to judge which class the sample belongs to. With our data, the best result is achieved with k=1 and the Manhattan distance as the distance function.
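A minimal sketch of nearest-neighbor classification with the Manhattan distance is given below. The function names and the toy vectors are illustrative, and the majority vote used for k greater than 1 is an assumption about how several neighbors would be combined.

```python
def manhattan(a, b):
    """Sum of absolute coordinate differences between two feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b))

def knn_predict(train_x, train_y, query, k=1):
    """Return the majority label among the k training samples nearest to query."""
    ranked = sorted(zip(train_x, train_y),
                    key=lambda pair: manhattan(pair[0], query))
    votes = {}
    for _, label in ranked[:k]:
        votes[label] = votes.get(label, 0) + 1
    return max(votes, key=votes.get)

# Toy three-feature vectors standing in for 54-dimensional postures.
train_x = [[0.0, 0.0, 0.0], [10.0, 10.0, 10.0], [0.0, 20.0, 0.0]]
train_y = ["Sad", "Happy", "Fear"]
print(knn_predict(train_x, train_y, [1.0, 2.0, 0.0]))  # Sad
```

With k=1 the classifier simply copies the label of the single closest apex posture.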

MLP is a feedforward artificial neural network that can classify nonlinearly separable data [16]. It uses backpropagation in the training process. Using one hidden layer with 54 units (the same number as the input features) and a learning rate of 0.3, convergence can be achieved in 500 epochs.
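The network shape described above can be sketched in plain Python. This is a simplified illustration, not the implementation used in the experiments: sigmoid activations, a squared-error loss, per-sample updates, the weight initialization range and the absence of momentum are all assumptions, and the class name `TinyMLP` is hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyMLP:
    """One hidden layer of sigmoid units, trained by plain backpropagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Each row holds the weights of one unit; the last entry is its bias.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
                   for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                   for _ in range(n_out)]

    def forward(self, x):
        hidden = [sigmoid(sum(w * v for w, v in zip(row, list(x) + [1.0])))
                  for row in self.w1]
        output = [sigmoid(sum(w * v for w, v in zip(row, hidden + [1.0])))
                  for row in self.w2]
        return hidden, output

    def train_step(self, x, target, lr=0.3):
        hidden, output = self.forward(x)
        # Output deltas for squared error with sigmoid activations.
        d_out = [(o - t) * o * (1 - o) for o, t in zip(output, target)]
        # Hidden deltas: propagate the output deltas back through w2.
        d_hid = [h * (1 - h) * sum(d * self.w2[k][j] for k, d in enumerate(d_out))
                 for j, h in enumerate(hidden)]
        for k, d in enumerate(d_out):
            for j, v in enumerate(hidden + [1.0]):
                self.w2[k][j] -= lr * d * v
        for j, d in enumerate(d_hid):
            for i, v in enumerate(list(x) + [1.0]):
                self.w1[j][i] -= lr * d * v

    def predict(self, x):
        output = self.forward(x)[1]
        return output.index(max(output))

# 54 inputs, 54 hidden units and one output unit per emotion class.
net = TinyMLP(n_in=54, n_hidden=54, n_out=4)
```

Training would call `train_step` once per posture with a one-hot target, repeated for the chosen number of epochs.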

SMO is used in Support Vector Machine (SVM) training to solve the optimization problem efficiently [17]. SVM itself is a classifier that constructs a hyperplane separating two classes of samples with maximal margin. Multiclass classification can be done with SVM by reducing it to multiple binary classification problems [18]. To make separation easier, the sample points usually must be mapped onto a higher-dimensional space using the kernel trick [19]. In this experiment, the Radial Basis Function (RBF) kernel with parameters C = 36 and γ = 0.146 gives the best classification result.
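For reference, the RBF kernel can be written in a few lines. The sketch assumes the common form K(x, y) = exp(-γ‖x − y‖²); whether the features are scaled before the kernel is applied is not stated here, so no scaling is performed. The function names are illustrative.

```python
import math

def rbf_kernel(x, y, gamma=0.146):
    """K(x, y) = exp(-gamma * squared Euclidean distance between x and y)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def gram_matrix(samples, gamma=0.146):
    """Pairwise kernel values, the only view of the data an SVM solver needs."""
    return [[rbf_kernel(a, b, gamma) for b in samples] for a in samples]

# Identical postures have similarity 1; the value decays as postures differ.
same = rbf_kernel([0.0, 0.0], [0.0, 0.0])
apart = rbf_kernel([0.0, 0.0], [3.0, 4.0])
```

A larger γ makes the similarity fall off faster with distance, while C controls how strongly margin violations are penalized during training.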

IV. EXPERIMENT RESULTS

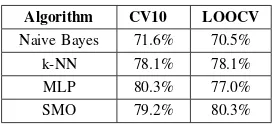

Average accuracies for each classifier are shown in Table II. MLP and SMO perform better than the other classifiers. However, SMO can be considered the most robust classifier, as it gives results more quickly.

TABLE II
CLASSIFICATION RESULTS

| Algorithm | CV10 | LOOCV |
|---|---|---|
| Naive Bayes | 71.6% | 70.5% |
| k-NN | 78.1% | 78.1% |
| MLP | 80.3% | 77.0% |
| SMO | 79.2% | 80.3% |

If we examine the sample poses visually, we can see that anger postures are less distinctive than, for example, happy postures. Slight raising of the hands and lowering of the head are common in non-happy postures. For comparison with recognition results by human observers, readers are referred to [7].

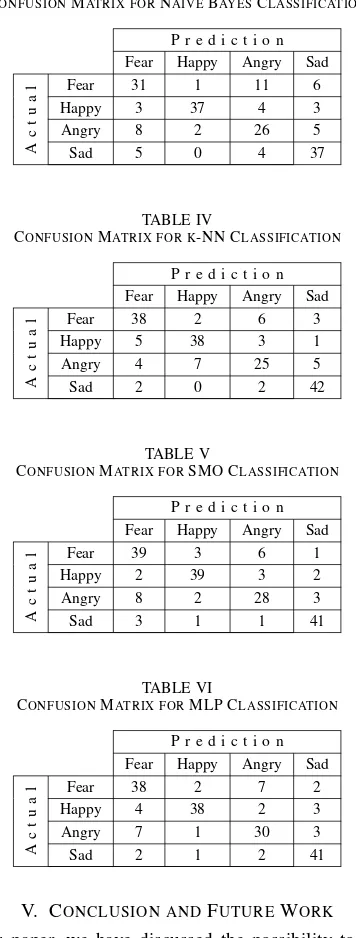

TABLE III
CONFUSION MATRIX FOR NAIVE BAYES CLASSIFICATION

CONFUSION MATRIX FOR K-NN CLASSIFICATION

CONFUSION MATRIX FOR SMO CLASSIFICATION

CONFUSION MATRIX FOR MLP CLASSIFICATION

| Actual \ Prediction | Fear | Happy | Angry | Sad |
|---|---|---|---|---|
| Fear | 38 | 2 | 7 | 2 |
| Happy | 4 | 38 | 2 | 3 |
| Angry | 7 | 1 | 30 | 3 |
| Sad | 2 | 1 | 2 | 41 |
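The overall accuracy and the per-class recognition rates can be recomputed from the confusion matrix values listed above. The sketch assumes that rows are the actual classes and columns the predictions, which is consistent with the row sums matching the class sizes in Table I. The function names are illustrative.

```python
LABELS = ["Fear", "Happy", "Angry", "Sad"]
CONFUSION = [
    [38, 2, 7, 2],   # actual Fear
    [4, 38, 2, 3],   # actual Happy
    [7, 1, 30, 3],   # actual Angry
    [2, 1, 2, 41],   # actual Sad
]

def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

def recall_per_class(matrix):
    """Correctly recognized share of each actual class."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]

print(f"accuracy = {accuracy(CONFUSION):.1%}")   # accuracy = 80.3%
for label, r in zip(LABELS, recall_per_class(CONFUSION)):
    print(f"{label}: {r:.1%}")
# Fear: 77.6%
# Happy: 80.9%
# Angry: 73.2%
# Sad: 89.1%
```

The 147 correct predictions out of 183 give 80.3%, and anger has the lowest per-class rate, in line with the observation that anger postures are the hardest to distinguish.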

V. CONCLUSION AND FUTURE WORK

In this paper, we have discussed the possibility of classifying affective postures by emotion. With classification accuracy of up to 80%, we conclude that bone rotation data are a good discriminating feature.

For future work, the following aspects must be considered to improve classifier performance. Sometimes the same emotion is represented by completely different gestures. More samples must be collected to determine whether these postures should be considered outliers or not. Statistical analysis can also be employed to filter out redundant and irrelevant features (i.e. bones), or to determine whether the bone selection in the skeletal model is already optimal. Another point to consider is that the bones themselves are in hierarchical order, but the present algorithm does not yet use this information to help the classification process.

REFERENCES

[1] J. Tao and T. Tan, “Affective computing: A review,” in Affective Computing and Intelligent Interaction, pp. 981–995, Springer, 2005.

[2] P. Ekman et al., “Facial expression and emotion,” American Psychologist, vol. 48, pp. 384–384, 1993.

[3] H. K. Meeren, C. C. van Heijnsbergen, and B. de Gelder, “Rapid perceptual integration of facial expression and emotional body language,” Proceedings of the National Academy of Sciences of the United States of America, vol. 102, no. 45, pp. 16518–16523, 2005.

[4] J. Grezes, S. Pichon, and B. De Gelder, “Perceiving fear in dynamic body expressions,” Neuroimage, vol. 35, no. 2, pp. 959–967, 2007.

[5] M. V. Peelen and P. E. Downing, “The neural basis of visual body perception,” Nature Reviews Neuroscience, vol. 8, no. 8, pp. 636–648, 2007.

[6] P. Ekman and W. V. Friesen, Facial Action Coding System. Consulting Psychologists Press, Stanford University, Palo Alto, 1977.

[7] A. Kleinsmith, P. R. De Silva, and N. Bianchi-Berthouze, “Cross-cultural differences in recognizing affect from body posture,” Interact. Comput., vol. 18, pp. 1371–1389, Dec. 2006.

[8] K. Schindler, L. V. Gool, and B. de Gelder, “Recognizing emotions expressed by body pose: A biologically inspired neural model,” Neural Networks, vol. 21, no. 9, pp. 1238–1246, 2008.

[9] N. Bianchi-Berthouze and A. Kleinsmith, “A categorical approach to affective gesture recognition,” Connection Science, vol. 15, no. 4, pp. 259–269, 2003.

[10] A. Kapur, A. Kapur, N. Virji-Babul, G. Tzanetakis, and P. F. Driessen, “Gesture-based affective computing on motion capture data,” in Proceedings of the First International Conference on Affective Computing and Intelligent Interaction, ACII’05, (Berlin, Heidelberg), pp. 1–7, Springer-Verlag, 2005.

[11] A. Kleinsmith and N. Bianchi-Berthouze, “Recognizing affective dimensions from body posture,” in Affective Computing and Intelligent Interaction, pp. 48–58, Springer, 2007.

[12] B. De Gelder and J. Van den Stock, “The Bodily Expressive Action Stimulus Test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions,” Frontiers in Psychology, vol. 2, 2011.

[13] A. Kleinsmith, N. Bianchi-Berthouze, and A. Steed, “Automatic recognition of non-acted affective postures,” Systems, Man, and Cybernetics, Part B: Cybernetics, IEEE Transactions on, vol. 41, no. 4, pp. 1027–1038, 2011.

[14] CMU, “CMU Graphics Lab Motion Capture Database,” May 2013.

[15] G. Guerra-Filho, “Optical motion capture: theory and implementation,” Journal of Theoretical and Applied Informatics, vol. 12, no. 2, pp. 61–89, 2005.

[16] I. H. Witten, E. Frank, and M. A. Hall, Data Mining: Practical Machine Learning Tools and Techniques. Morgan Kaufmann, 2011.

[17] J. C. Platt, “Sequential minimal optimization: A fast algorithm for training support vector machines,” tech. rep., Advances in Kernel Methods - Support Vector Learning, 1998.

[18] K. bo Duan and S. S. Keerthi, “Which is the best multiclass SVM method? An empirical study,” in Proceedings of the Sixth International Workshop on Multiple Classifier Systems, pp. 278–285, 2005.