On the stability of monotone discrete selection dynamics with inertia

Herbert Dawid*

Department of Management Science, University of Vienna, Brünnerstraße 72, A-1210 Vienna, Austria

Received 10 February 1997; received in revised form 1 March 1998; accepted 1 May 1998

Abstract

In this paper we study the learning behavior of a population of boundedly rational players who interact by repeatedly playing an evolutionary game. A simple imitation-type learning rule for the agents is suggested, and it is shown that the evolution of the population strategy is described by the discrete time replicator dynamics with inertia. Conditions are derived which guarantee the local stability of a Nash equilibrium with respect to these dynamics, and for a quasi-strict ESS with a support of three pure strategies the crucial level of inertia which ensures stability and avoids overshooting is derived. These results are illustrated by an example and generalized to the class of monotone selection dynamics. © 1999 Elsevier Science B.V. All rights reserved.

Keywords: Stability; Monotone discrete selection dynamics; Inertia

JEL classification: C72

1. Introduction

Adaptive learning in games has been a major topic in recent economic research. In particular, the behavior of a whole population playing an evolutionary game has been studied using several different stochastic and deterministic models (see e.g. Blume, 1997; Kandori and Rob, 1995; Friedman, 1991; Ellison and Fudenberg, 1995). One of the most popular approaches to modelling learning in an evolutionary context is the replicator dynamics (Taylor and Jonker, 1976), which was first introduced as a model of biological evolution but may also be interpreted in economic applications as a highly stylized description of the effects of imitation in a population of agents. It is well known that there is a considerable difference in behavior between the continuous time and the discrete time formulations of the replicator dynamics. Whereas any evolutionarily stable strategy is stable with respect to the continuous time replicator dynamics (see e.g. van Damme, 1991), this does not necessarily hold true for the discrete time version. Moreover, there is no criterion comparable to ESS which would guarantee stability under the discrete time replicator dynamics. Furthermore, the property that only rationalizable strategies (Bernheim, 1984) can survive in the long run holds true for the continuous time dynamics (Samuelson and Zhang, 1992) but fails for the discrete time analogue (Dekel and Scotchmer, 1992). Both of these discrepancies are due to the same phenomenon, called overshooting, which may of course also be observed for other learning dynamics (e.g. Dawid, 1999).

*Corresponding author. Tel.: +43 1 29128 513; fax: +43 1 29128 504. E-mail address: [email protected] (H. Dawid)

In this paper we propose a very simple learning model describing the boundedly rational choice of strategy by agents playing an iterated game. We show that the evolution of the population strategy in this model is determined by a replicator dynamics with inertia, where some fraction of the population sticks to its old strategy whereas the choice of the rest of the population is determined by the discrete time replicator dynamics. It is quite intuitive that the properties of this dynamics lie somewhere between those of the continuous and the discrete time replicator dynamics. It is the aim of this paper to make this intuition precise and to provide lower bounds on the level of inertia which guarantee the stability of the learning dynamics near equilibria which are asymptotically stable with respect to the continuous time replicator dynamics. We also extend these results by considering a more general class of imitation models with inertia and show that the same criterion as in the case of the replicator dynamics ensures the existence of a crucial level of inertia which avoids instability due to overshooting.

The existence of inertia in a population of boundedly rational agents seems to be a very plausible assumption and has been used in several game theoretic learning models (e.g. Samuelson, 1994; Ellison and Fudenberg, 1995). Confronted with a situation where gathering the information needed for sensible decision making might be costly and, on the other hand, is by no means a guarantee of higher payoffs, an agent might decide just to stick to his previous action. Such behavior could also be interpreted as ‘acting out of habit’ (see also Day (1984) for a discussion of imitational and habitual behavior), a mode of boundedly rational behavior which has also been detected in experiments (Day and Pingle, 1996). The results derived in this paper suggest that a high propensity to show this kind of habitual behavior might allow a population to settle down at an equilibrium where a population of ‘more rational’ agents, showing a higher propensity to adapt their strategy, does not converge. Observations of this kind do not crucially depend on the actual structure of the learning rule but hold for different types of models, and simply restate the fact that inertia can avoid overshooting.

The paper is organized as follows. In Section 2 we describe our model and point out its relation to the continuous and discrete time replicator dynamics. A local stability analysis is carried out in Section 3, and conditions ensuring stability near equilibria with a support of three or fewer pure strategies are derived. We illustrate these results using the example of a generalized Rock-Scissors-Paper game in Section 4. In Section 5 we briefly deal with the more general case of monotone imitation dynamics, and we conclude with some remarks in Section 6.

2. Replicator dynamics with inertia

We consider a situation where a large number of economic agents (we will proceed as if the number of agents is infinite) interact with each other by repeatedly playing an evolutionary game. In every period each agent chooses a pure strategy $i \in I$, where $n = |I|$ is the number of pure strategies, and receives a payoff of

$$P(e_i, s) = e_i' A s,$$

where $A$ is an $n \times n$ payoff matrix and $s \in \Delta^n = \{x \in \mathbb{R}^n \mid x_i \ge 0,\ \sum_{i=1}^n x_i = 1\}$ is the mixed population strategy generated by the choices of all agents. We assume that all payoffs are non-negative.

Furthermore, we assume that the agents do not know the exact structure of their payoff function and have to base their choice of strategy on very limited information. Every period the average population payoff is made public and thus is known by all agents. Furthermore, every period each agent meets a randomly chosen other agent and learns the strategy this agent used in the last period as well as the resulting payoff. Let us denote the strategy of the agent he meets by $i \in I$. The agent compares the payoff of $i$ with the average population payoff and adopts strategy $i$ with a probability proportional to the ratio of the payoff of $i$ and the average population payoff. Thus, the probability that an arbitrary agent adopts strategy $i$ in the next period due to a meeting with another agent who used $i$ is given by

$$\chi\,\frac{e_i'As}{s'As}\,s_i,$$

where $\chi\,\frac{e_i'As}{s'As}$ is the probability that he chooses $i$ given that he meets an agent who used $i$, and $s_i$ is the probability to meet such an agent.² Note that the parameter $\chi$ has to be sufficiently small to guarantee that $\chi\,\frac{e_i'As}{s'As} \le 1$ for all $i \in I$ and $s \in \Delta^n$. Furthermore, the probability that an arbitrary agent uses $i$ in the next period because he used it in the last period and did not adopt a new strategy is

$$s_i\Bigl(1 - \chi\sum_{j=1}^{n} s_j\,\frac{e_j'As}{s'As}\Bigr) = (1-\chi)\,s_i.$$

This shows that $\alpha := 1-\chi$ is the probability that an agent sticks to his old strategy. We call this parameter the level of inertia in the population. Since we assume that the number of agents is infinite, the population strategy at time $t+1$ is given by the expected population strategy given $s_t$. Thus, the evolution of the population strategy is given by the following dynamical system:

$$s_{t+1} = \alpha\,s_t + (1-\alpha)\,\mathrm{diag}(s_t)\,\frac{A s_t}{s_t' A s_t}. \qquad (1)$$

² Those readers who feel uncomfortable with the fact that the admissible range of $\chi$ depends on the payoff matrix $A$ could assume that the imitation probability is actually given by $\min\bigl\{1, \chi\,\frac{e_i'As}{s'As}\bigr\}$. We always […]

We call this dynamical system the replicator dynamics with inertia, since the case $\alpha = 0$ corresponds to the discrete time replicator dynamics (see e.g. Weibull, 1995). The replicator dynamics has been analyzed in great detail in the game theory literature (see e.g. Hofbauer and Sigmund, 1988; Weissing, 1991; Weibull, 1995); however, it is mainly motivated by biological rather than by economic models. Here we provide an interpretation of the replicator dynamics with inertia as an imitation dynamics in the spirit of word of mouth learning (see Ellison and Fudenberg, 1995; Dawid, 1999). Note, however, that a certain level of inertia always has to be present if the dynamics is interpreted according to the model presented above. Cabrales and Sobel (1992) consider the dynamics Eq. (1) in a more technical context, without giving a specific economic interpretation of the model and without restricting the range of $\alpha$. Of course, the dynamics can also be motivated by a model where all agents have information about the payoffs of all other agents in the population and with probability $1-\alpha$ choose some other agent to imitate, where again the probability to be imitated is proportional to past success.
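The step from the individual imitation rule to Eq. (1) can be checked numerically. The following Python sketch (standard library only) is an illustration under our own assumptions, not code accompanying the paper: the helper names are hypothetical, and payoffs are assumed to make $s'As > 0$. It computes the sticking probability of the imitation model and one iteration of Eq. (1).

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def payoffs(A: Matrix, s: Vector) -> Vector:
    """Pure-strategy payoffs e_i' A s for every strategy i."""
    return [sum(a_ij * s_j for a_ij, s_j in zip(row, s)) for row in A]


def sticking_probability(A: Matrix, s: Vector, chi: float) -> float:
    """1 - chi * sum_j s_j e_j'As / s'As from the imitation model.

    Since sum_j s_j e_j'As = s'As, this should equal 1 - chi for every s.
    """
    f = payoffs(A, s)
    avg = sum(s_i * f_i for s_i, f_i in zip(s, f))  # s'As, assumed > 0
    return 1.0 - chi * sum(s_j * f_j / avg for s_j, f_j in zip(s, f))


def inertia_step(A: Matrix, s: Vector, alpha: float) -> Vector:
    """One step of Eq. (1): alpha * s + (1 - alpha) * diag(s) A s / (s'As)."""
    f = payoffs(A, s)
    avg = sum(s_i * f_i for s_i, f_i in zip(s, f))
    return [alpha * s_i + (1.0 - alpha) * s_i * f_i / avg for s_i, f_i in zip(s, f)]
```

With a hypothetical non-negative cyclic matrix such as `[[1, 0, 3], [3, 1, 0], [0, 3, 1]]`, `sticking_probability` returns $1-\chi$ (up to rounding) for any interior $s$, and `inertia_step` maps the simplex into itself, leaving the uniform mixture and the vertices fixed.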

It is well known that any Nash equilibrium of the symmetric game with payoff matrix $A$ is a fixed point of both the discrete time replicator dynamics and its continuous time counterpart

$$\dot s = \mathrm{diag}(s)\,[As - \mathbf{1}\,s'As], \qquad (2)$$

where $\mathbf{1}$ denotes the vector of ones.

However, there are fixed points which are not equilibria, for example all vertices of the simplex. As already pointed out in the introduction, every evolutionarily stable strategy is locally asymptotically stable with respect to the continuous time replicator dynamics. The discrete time replicator dynamics, however, may diverge from an equilibrium even if it is evolutionarily stable, because the equilibrium may be ‘overshot’ by such a wide margin that the trajectory departs more and more from the equilibrium (Weissing, 1991). Since the notion of overshooting is a central point of the present paper, we would like to make precise what we mean by this term. We say that a discrete time dynamics shows overshooting near an equilibrium if the fixed point is unstable with respect to the discrete time dynamics but stable with respect to Eq. (2). Note, however, that even if the discrete time dynamics is stable, the convergence speed varies with the level of inertia. In particular, there might be oscillating converging paths where the oscillations could be dampened by increasing the level of inertia. On the other hand, a very high level of inertia causes tiny approach steps and accordingly slow convergence. It is the aim of this paper to characterize the crucial level of inertia which avoids instability due to overshooting.

3. Local stability analysis

dynamics. In particular, we prove that the stability of an equilibrium with respect to the continuous time dynamics guarantees that this equilibrium is stable with respect to the discrete time dynamics with inertia if the level of inertia is sufficiently high. Afterwards, we derive conditions which guarantee that a quasi-strict equilibrium with a support of at most three pure strategies is locally attracting under the continuous time dynamics.

The first Lemma establishes that the eigenvalues of the linearization of the replicator dynamics with inertia are closely related to the eigenvalues of the continuous time dynamics.

Lemma 1. Let $\mu$ be a symmetric Nash equilibrium of the game $G$. Then $\mu$ is a fixed point of Eq. (1), and the eigenvalues $\lambda_i$ of the Jacobian of Eq. (1) at $\mu$ are given by

$$\lambda_i = 1 + \frac{1-\alpha}{v}\,\nu_i,$$

where $v$ is the game value at the equilibrium $(\mu,\mu)$ and $\{\nu_i\}_{i=1}^{n}$ is the spectrum of the Jacobian of the continuous time replicator dynamics at $\mu$. Furthermore, the external eigenvalue of the discrete time dynamics corresponds to the external eigenvalue of the continuous time dynamics.

Proof. Note first that Eq. (1) can be written as

$$s_{t+1} = f(s_t) = s_t + \frac{1-\alpha}{s_t'As_t}\,\mathrm{diag}(s_t)\bigl(As_t - \mathbf{1}\,s_t'As_t\bigr).$$

Further, $\mathrm{diag}(s)(As - \mathbf{1}\,s'As)$ is the right-hand side of the continuous time replicator dynamics. Let us denote the Jacobian of this expression at $\mu$ by $J(\mu)$. Since $\mathrm{diag}(\mu)(A\mu - \mathbf{1}\,\mu'A\mu) = 0$, the term arising from differentiating the factor $1/(s'As)$ vanishes, and calculating the differential at $\mu$ yields

$$Df(\mu) = I + \frac{1-\alpha}{\mu'A\mu}\,J(\mu) = I + \frac{1-\alpha}{v}\,J(\mu).$$

Since the eigenvectors of both dynamics coincide, it is obvious that $\lambda_i$ is the external eigenvalue of the linearization of Eq. (1) if and only if $\nu_i$ is the external eigenvalue of the linearization of Eq. (2). Thus, we get the lemma. □

Lemma 1 says that every eigenvalue of the discrete time dynamics with inertia lies somewhere on the straight line segment between $1$ and $1 + \nu_i/v$, where $\nu_i$ is an eigenvalue of the continuous time dynamics. In particular, this shows the well-known fact that the discrete time dynamics (even with inertia) can never be locally stable unless the continuous time dynamics is stable. On the other hand, it is obvious from Lemma 1 that if all $\nu_i$ have negative real parts, there exists a value $\alpha^*$ such that all eigenvalues of the discrete time dynamics with inertia $\alpha \ge \alpha^*$ lie within the unit circle. We state this fact in Corollary 1.
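Lemma 1 turns the search for $\alpha^*$ into a scalar condition per eigenvalue: with $k = (1-\alpha)/v > 0$, $|1 + k\nu|^2 = 1 + 2k\,\mathrm{Re}\,\nu + k^2|\nu|^2 < 1$ holds exactly when $k < -2\,\mathrm{Re}\,\nu/|\nu|^2$. The following Python sketch applies this to a list of relevant eigenvalues. The function names are hypothetical, and the eigenvalues $\nu = -1/6 \pm i\sqrt{3}/2$ with $v = 4/3$ used in the check belong to a hypothetical cyclic game $A = [[1,0,3],[3,1,0],[0,3,1]]$ at $\mu = (1/3,1/3,1/3)$, not to an example from the paper.

```python
from typing import Iterable


def crucial_inertia(nus: Iterable[complex], v: float) -> float:
    """Smallest alpha* such that every lambda = 1 + (1 - alpha) * nu / v (Lemma 1)
    lies inside the unit circle for all alpha in (alpha*, 1).

    Assumes v > 0 and Re(nu) < 0 for every relevant eigenvalue nu.
    """
    alpha_star = 0.0
    for nu in nus:
        nu = complex(nu)
        if nu.real >= 0:
            raise ValueError("Re(nu) >= 0: the continuous time dynamics is not stable")
        # With k = (1 - alpha) / v > 0:  |1 + k nu| < 1  <=>  k < -2 Re(nu) / |nu|^2
        k_max = -2.0 * nu.real / abs(nu) ** 2
        alpha_star = max(alpha_star, 1.0 - v * k_max)
    return alpha_star


def modulus(nu: complex, v: float, alpha: float) -> float:
    """|lambda| for the eigenvalue of Eq. (1) associated with nu (Lemma 1)."""
    return abs(1 + (1 - alpha) * nu / v)
```

For the hypothetical eigenvalues above the sketch gives $\alpha^* = 3/7$; purely real negative eigenvalues with $\nu > -2v$ give $\alpha^* = 0$, since then even $\alpha = 0$ keeps $|\lambda| < 1$.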

Corollary 1. Assume the symmetric Nash equilibrium $\mu$ is a hyperbolic, locally asymptotically stable fixed point of the continuous time replicator dynamics Eq. (2). Then there is a level of inertia $\alpha^*$ such that $\mu$ is locally asymptotically stable with respect to the discrete time replicator dynamics with any level of inertia $\alpha \ge \alpha^*$.

behavior for lower levels of inertia qualitatively looks like that of the discrete time replicator dynamics, whereas it resembles the behavior of the continuous time replicator dynamics for larger inertia. Thus, to determine the stability of the discrete time replicator dynamics with inertia, it is important to derive conditions guaranteeing the stability of an equilibrium with respect to the continuous time replicator dynamics. In order to derive such conditions we have to determine the spectrum of the linearization of the continuous time replicator dynamics. It is impossible to give a closed form analytical expression for the spectrum in general, but in Lemma 2 we reduce the problem to an eigenvalue problem of a simpler matrix $M_\mu$. We denote by $\hat A_\mu$ the submatrix of $A$ consisting of all rows and columns corresponding to the pure strategies in the support of $\mu$, and by $\hat\mu \in \Delta^{|C(\mu)|}$ the vector consisting only of the positive elements of $\mu$.

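Lemma 2. Let $\mu$ be a symmetric Nash equilibrium with support $C(\mu)$ and let $v$ be the equilibrium payoff. The spectrum of $J(\mu)$ is given by

$$\Upsilon = \{-v\} \cup \bigl(\mathcal{J}\setminus\{0\}\bigr) \cup \mathcal{P}, \qquad (3)$$

where $-v$ is the external eigenvalue, $\mathcal{J}$ is the spectrum of $M_\mu = \mathrm{diag}(\hat\mu)\bigl(\hat A_\mu - \mathbf{1}\,\hat\mu'\hat A_\mu\bigr)$ (one zero eigenvalue of which is removed in (3)), and $\mathcal{P} = \{e_j'A\mu - v \mid j \notin C(\mu)\}$.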

Proof. Since the simplex is invariant under the replicator dynamics, there is a single external (or transversal) eigenvalue whose eigenvector is not an element of the tangent space $t = \{x \in \mathbb{R}^n \mid \sum_{i=1}^n x_i = 0\}$. It is easy to see that the external eigenvalue is given by $-v$, where the corresponding right-eigenvector is $\mu$. If we reorder the strategies such that the first $|C(\mu)|$ strategies are in the support of $\mu$, we realize that the first $|C(\mu)|$ rows of the first matrix in the sum

$$J(\mu) = \mathrm{diag}\bigl(A\mu - \mathbf{1}\,\mu'A\mu\bigr) + \mathrm{diag}(\mu)\bigl(A - \mathbf{1}\,\mu'(A + A')\bigr)$$

are zero, whereas the last $n - |C(\mu)|$ rows of the second matrix are zero. Accordingly, $J(\mu)$ has the form

$$J(\mu) = \begin{pmatrix} J_1 & J_2 \\ 0 & \mathrm{diag}\bigl(e_j'A\mu - v\bigr)_{j \notin C(\mu)} \end{pmatrix},$$

so the elements of $\mathcal{P}$ are eigenvalues of $J(\mu)$. Furthermore, this implies that the remaining eigenvalues of $J(\mu)$ coincide with the eigenvalues of $J_1$.³ We have

$$J_1 = \mathrm{diag}(\hat\mu)\bigl(\hat A_\mu - \mathbf{1}\,\hat\mu'\hat A_\mu - v\,\mathbf{1}\mathbf{1}'\bigr) = M_\mu - v\,\mathrm{diag}(\hat\mu)\,\mathbf{1}\mathbf{1}'.$$

Note further that the simplex containing all mixed strategies with support $C(\mu)$ is also invariant under the replicator dynamics, which implies that all eigenspaces of $J_1$ with the exception of the external eigenvector lie in the tangent space $\hat t = \{x \in \mathbb{R}^{|C(\mu)|} \mid \sum_{i \in C(\mu)} x_i = 0\}$. However, for any vector $x \in \hat t$ we have

$$J_1 x = M_\mu x,$$

which implies that the remaining eigenvalues of $J(\mu)$ coincide with the eigenvalues of $M_\mu$. Noting that $\hat\mu$ is a right-eigenvector of $M_\mu$ for eigenvalue $0$ establishes the claim of the lemma. □

³ These facts have already been observed by several researchers in this field; see e.g. Lemma 45 in Bomze and Pötscher (1989).

Taking Lemma 2 into account, we only have to calculate the eigenvalues of $M_\mu$ to determine the stability of an equilibrium with respect to the continuous time replicator dynamics and to find the level of inertia which avoids overshooting in the discrete time dynamics. In cases where the support of the equilibrium contains at most three pure strategies, the related calculations can be carried out without specifying the payoff matrix $A$. We provide these results in the remaining part of this section. To avoid the case where the equilibrium is a non-hyperbolic fixed point, we restrict our attention to quasi-strict Nash equilibria (see e.g. van Damme, 1991).
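The matrices of Lemma 2 are easy to form explicitly, which makes the two key facts of its proof checkable: $M_\mu\hat\mu = 0$, and $J_1 x = M_\mu x$ for every tangent vector $x$ while $J_1\hat\mu = -v\hat\mu$. The Python sketch below is illustrative only; the function names are hypothetical, and the test games (a 2×2 game with mixed equilibrium $(2/3, 1/3)$ and a cyclic 3×3 game with uniform equilibrium) are our own choices, not the paper's.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mat_vec(M: Matrix, x: Vector) -> Vector:
    """Matrix-vector product M x."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def lemma2_matrices(A_hat: Matrix, mu_hat: Vector) -> Tuple[Matrix, Matrix, float]:
    """Return (M_mu, J_1, v) for the support payoff matrix A_hat and the
    positive part mu_hat of a symmetric Nash equilibrium:
        M_mu = diag(mu)(A - 1 mu'A),   J_1 = M_mu - v diag(mu) 1 1'.
    """
    k = len(mu_hat)
    mu_A = [sum(mu_hat[r] * A_hat[r][c] for r in range(k)) for c in range(k)]  # mu'A
    v = sum(mu_A[c] * mu_hat[c] for c in range(k))  # equilibrium payoff mu'A mu
    M = [[mu_hat[i] * (A_hat[i][j] - mu_A[j]) for j in range(k)] for i in range(k)]
    # (diag(mu) 1 1')_{ij} = mu_i, so J_1 subtracts v * mu_i from every entry of row i
    J1 = [[M[i][j] - v * mu_hat[i] for j in range(k)] for i in range(k)]
    return M, J1, v
```

Note that $M_\mu\hat\mu = 0$ relies on $\hat A_\mu\hat\mu = v\mathbf{1}$, i.e. on $\mu$ being an equilibrium; the identity $J_1 x = M_\mu x$ only uses $\mathbf{1}'x = 0$.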

Every quasi-strict Nash equilibrium in pure strategies is strict and accordingly locally asymptotically stable with respect to both the continuous time and the discrete time replicator dynamics. If the support of $\mu$ contains two pure strategies, we easily get the following characterization.

Proposition 1. Let $\mu$ be a quasi-strict Nash equilibrium with $C(\mu) = \{i_1, i_2\}$. Then $\mu$ is locally asymptotically stable with respect to the dynamics Eq. (2) if

(C1) $a_{i_1 i_1} - a_{i_1 i_2} - a_{i_2 i_1} + a_{i_2 i_2} < 0$.

Proof: Since $\mu$ is a quasi-strict equilibrium, we have

$$e_j'A\mu < v \quad \forall\, j \notin C(\mu),$$

and accordingly all elements of $\mathcal{P}$ in Eq. (3) are negative. Considering the elements of $\mathcal{J}$, we know that one of them is zero and accordingly the other one is given by $\mathrm{tr}(M_\mu)$. Simple calculations show

$$\mathrm{tr}(M_\mu) = \mu_{i_1}\mu_{i_2}\,\bigl(a_{i_1 i_1} - a_{i_1 i_2} - a_{i_2 i_1} + a_{i_2 i_2}\bigr),$$

and the positivity of $\mu_{i_1}$ and $\mu_{i_2}$ implies the proposition. □
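The trace formula in the proof of Proposition 1 can be confirmed by building $M_\mu = \mathrm{diag}(\hat\mu)(\hat A_\mu - \mathbf{1}\hat\mu'\hat A_\mu)$ directly. In this Python sketch the function names are hypothetical, and the test game $\hat A = [[0,4],[1,2]]$, whose mixed equilibrium is $(2/3, 1/3)$ with $v = 4/3$, is an assumption chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def trace_M_two(A_hat: Matrix, mu: List[float]) -> float:
    """tr(M_mu) computed entrywise from M_mu = diag(mu)(A - 1 mu'A) for a 2x2 support."""
    mu_A = [mu[0] * A_hat[0][c] + mu[1] * A_hat[1][c] for c in range(2)]  # row vector mu'A
    return sum(mu[i] * (A_hat[i][i] - mu_A[i]) for i in range(2))


def c1_value(A_hat: Matrix) -> float:
    """Left-hand side of (C1): a_11 - a_12 - a_21 + a_22 on the support."""
    return A_hat[0][0] - A_hat[0][1] - A_hat[1][0] + A_hat[1][1]
```

For the hypothetical game, (C1) evaluates to $-3 < 0$ and $\mathrm{tr}(M_\mu) = \tfrac{2}{9}\cdot(-3) = -\tfrac{2}{3}$, so the nonzero element of $\mathcal{J}$ is negative and the mixed equilibrium is stable under Eq. (2).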

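To formulate the stability conditions for an equilibrium with a support of three pure strategies, we introduce the following notation. Let $[a]^{ij}_{kl}$ denote the $2 \times 2$ submatrix of $A$ consisting of rows $i$ and $j$ and columns $k$ and $l$:

$$[a]^{ij}_{kl} = \begin{pmatrix} a_{ik} & a_{il} \\ a_{jk} & a_{jl} \end{pmatrix}.$$

Further, let

$$S(A) = \sum_{i=1}^{n}\sum_{j>i}\sum_{k=1}^{n}\sum_{l>k} (-1)^{i+j+k+l}\,\det\bigl([a]^{ij}_{kl}\bigr)$$

and

$$D_\mu = \mathrm{diag}(\hat\mu)\,\hat A_\mu.$$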

Proposition 2. Let $\mu$ be a symmetric quasi-strict Nash equilibrium of the game with support $C(\mu) = \{i_1, i_2, i_3\}$. Then $\mu$ is locally asymptotically stable with respect to the continuous time replicator dynamics if and only if the following two conditions are satisfied:

(C2) $v > \mathrm{tr}(D_\mu)$,

(C3) $S(\hat A_\mu) > 0$.

Proof: According to Lemma 2 the eigenvalues of $J(\mu)$ are given by Eq. (3). Just as in the proof of Proposition 1, we conclude that all elements of $\mathcal{P}$ are negative, which leaves us with showing that all elements of $\mathcal{J}$ except the eigenvalue $0$ have negative real parts. The elements of $\mathcal{J}$ are given by the solutions of the equation

$$\lambda^3 - \mathrm{tr}(M_\mu)\,\lambda^2 + W(M_\mu)\,\lambda - \det(M_\mu) = 0,$$

where $W(M_\mu)$ is the sum of the principal $2 \times 2$ minors of $M_\mu$. Since we have shown that $0$ is an eigenvalue of $M_\mu$, we have $\det(M_\mu) = 0$. This leaves us with showing that the real parts of the two solutions of

$$\lambda^2 - \mathrm{tr}(M_\mu)\,\lambda + W(M_\mu) = 0$$

are negative. It is well known that this holds if and only if $W(M_\mu) > 0$ and $\mathrm{tr}(M_\mu) < 0$. Tiresome but straightforward calculations establish further that

$$\mathrm{tr}(M_\mu) = \mathrm{tr}(D_\mu) - v, \qquad W(M_\mu) = \mu_{i_1}\mu_{i_2}\mu_{i_3}\,S(\hat A_\mu).$$

If at least one of the two inequalities holds the other way round, at least one of the two eigenvalues has a positive real part and accordingly $\mu$ is unstable. If either (C2) or (C3) holds as an equality, $(\mu_{i_1}, \mu_{i_2}, \mu_{i_3})$ is a non-hyperbolic fixed point of the replicator dynamics on the simplex spanned by $\{i_1, i_2, i_3\}$. It follows from the classification in Bomze (1983) that no non-hyperbolic interior fixed point can be asymptotically stable with respect to the replicator dynamics for three or fewer pure strategies. □
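The ‘tiresome but straightforward’ identities $\mathrm{tr}(M_\mu) = \mathrm{tr}(D_\mu) - v$ and $W(M_\mu) = \mu_{i_1}\mu_{i_2}\mu_{i_3}S(\hat A_\mu)$ can at least be spot-checked numerically. The Python sketch below computes $S$ straight from its definition and $W$ as the sum of principal $2\times2$ minors of $M_\mu$. The function names and the cyclic test game $[[1,0,3],[3,1,0],[0,3,1]]$ (uniform equilibrium, $v = 4/3$) are hypothetical; `is_stable_continuous` presupposes a quasi-strict equilibrium with a three-strategy support, as in Proposition 2.

```python
from itertools import combinations
from typing import List, Tuple

Matrix = List[List[float]]


def det2(a: float, b: float, c: float, d: float) -> float:
    """Determinant of [[a, b], [c, d]]."""
    return a * d - b * c


def S(A: Matrix) -> float:
    """S(A): signed sum of det([a]^{ij}_{kl}) over i < j and k < l."""
    n = len(A)
    total = 0.0
    for i, j in combinations(range(n), 2):
        for k, l in combinations(range(n), 2):
            # 0-based indices shift i+j+k+l by 4, so the parity (and sign) is unchanged
            sign = -1.0 if (i + j + k + l) % 2 else 1.0
            total += sign * det2(A[i][k], A[i][l], A[j][k], A[j][l])
    return total


def prop2_quantities(A_hat: Matrix, mu: List[float]) -> Tuple[float, float, float, float, float]:
    """Return v, tr(D_mu), S(A_hat), tr(M_mu), W(M_mu) for a three-strategy support."""
    mu_A = [sum(mu[r] * A_hat[r][c] for r in range(3)) for c in range(3)]  # mu'A
    v = sum(mu_A[c] * mu[c] for c in range(3))
    tr_D = sum(mu[i] * A_hat[i][i] for i in range(3))  # D_mu = diag(mu) A_hat
    M = [[mu[i] * (A_hat[i][j] - mu_A[j]) for j in range(3)] for i in range(3)]
    tr_M = sum(M[i][i] for i in range(3))
    W_M = sum(det2(M[i][i], M[i][j], M[j][i], M[j][j]) for i, j in combinations(range(3), 2))
    return v, tr_D, S(A_hat), tr_M, W_M


def is_stable_continuous(A_hat: Matrix, mu: List[float]) -> bool:
    """Conditions (C2) and (C3) of Proposition 2."""
    v, tr_D, s_val, _, _ = prop2_quantities(A_hat, mu)
    return v > tr_D and s_val > 0
```

For the hypothetical game the sketch gives $v = 4/3$, $\mathrm{tr}(D_\mu) = 1$, $S(\hat A_\mu) = 21$ and $W(M_\mu) = 7/9$, so (C2) and (C3) hold. Both identities are purely algebraic (they only use that the entries of $\mu$ sum to one), so they can also be checked at non-equilibrium points.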

Another static criterion for dynamic stability under the continuous time replicator dynamics, based on the definiteness of a matrix derived from the payoff matrix and the support of the equilibrium, was given by Haigh (1975). However, our approach also enables us to conveniently express the actual eigenvalues of the linearization of Eq. (2), which in turn allows us to compute the level of inertia needed to avoid overshooting. Measuring the level of inertia from real data seems to be impossible, which might suggest that calculating the exact value of the crucial level of inertia is not of high relevance. However, since the derivation of the crucial level of inertia yields, as a corollary, a stability criterion for the discrete time replicator dynamics and also provides some insight into the qualitative behavior of the dynamics with inertia, we present this derivation for equilibria with a support of three pure strategies.

Proposition 3. Let $\mu$ be a symmetric quasi-strict Nash equilibrium with support $C(\mu) = \{i_1, i_2, i_3\}$. If (C2) and (C3) hold, then there is a level of inertia $\alpha^*$ such that $\mu$ is locally asymptotically stable with respect to the dynamics Eq. (1) for every $\alpha > \alpha^*$. If $\alpha < \alpha^*$, the equilibrium $\mu$ is unstable.

Proof: We have to show that all eigenvalues $\lambda_i$ of the linearization of Eq. (1) at $\mu$ have a modulus smaller than one. Let $\lambda_i$ be an arbitrary relevant eigenvalue. Then we know from Lemma 1 that

$$\lambda_i = 1 + \frac{1-\alpha}{v}\,\nu_i.$$

Considering the two eigenvalues in $\mathcal{J}\setminus\{0\}$, we distinguish between the case where the eigenvalues of $M_\mu$ are real and the case where they are complex. Assume first that they are real (i.e. $\mathrm{tr}(D_\mu) \le v - 2\sqrt{\mu_{i_1}\mu_{i_2}\mu_{i_3}S(\hat A_\mu)}$). Denote by $\nu$ the smaller of the two real relevant eigenvalues of $M_\mu$ […] which implies by (C2) that $\lambda_i \in [0,1)$ for all $\alpha \in [0,1)$. Obviously, we also have $\lambda_j \in [0,1)$ for the eigenvalue corresponding to the larger of the two real relevant eigenvalues of $M_\mu$. Thus, in such a case we should have $\alpha^* = 0$, and indeed, using the condition that the eigenvalues are real and (C2), we get […]

[…] check that the modulus of the eigenvalue $\lambda_i = 1 + \frac{1-\alpha}{v}\,\nu_i$ is smaller than 1, where $\nu_i$ and $\bar\nu_i$ are the two relevant eigenvalues of $M_\mu$. We have […]

Putting together the two cases establishes the proposition. □
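For the complex case the modulus condition can be written out explicitly. The display below is our own reconstruction from Lemma 1 and the expressions for $\mathrm{tr}(M_\mu)$ and $W(M_\mu)$ in the proof of Proposition 2, offered as a reading aid rather than as the paper's own (partly lost) display. It assumes $v > 0$ and $\alpha < 1$; the maximum with $0$ reflects the real case, where the text concludes $\alpha^* = 0$ (there $W(M_\mu) \le (v - \mathrm{tr}(D_\mu))^2/4 \le v\,(v - \mathrm{tr}(D_\mu))$ because $\mathrm{tr}(D_\mu) \ge 0$ for non-negative payoffs, so the second argument of the maximum is non-positive).

```latex
\begin{aligned}
|\lambda_i|^2 &= 1 + \frac{2(1-\alpha)}{v}\,\mathrm{Re}\,\nu_i + \frac{(1-\alpha)^2}{v^2}\,|\nu_i|^2,
  \qquad \lambda_i = 1 + \frac{1-\alpha}{v}\,\nu_i,\\
\mathrm{Re}\,\nu_i &= \tfrac12\,\mathrm{tr}(M_\mu) = \tfrac12\bigl(\mathrm{tr}(D_\mu) - v\bigr),
  \qquad |\nu_i|^2 = \nu_i\bar\nu_i = W(M_\mu) = \mu_{i_1}\mu_{i_2}\mu_{i_3}\,S(\hat A_\mu),\\
|\lambda_i| < 1 &\iff 2\,\mathrm{Re}\,\nu_i + \frac{1-\alpha}{v}\,|\nu_i|^2 < 0
  \iff (1-\alpha)\,\mu_{i_1}\mu_{i_2}\mu_{i_3}\,S(\hat A_\mu) < v\bigl(v - \mathrm{tr}(D_\mu)\bigr),\\
\alpha^* &= \max\Bigl\{0,\; 1 - \frac{v\,\bigl(v - \mathrm{tr}(D_\mu)\bigr)}{\mu_{i_1}\mu_{i_2}\mu_{i_3}\,S(\hat A_\mu)}\Bigr\}.
\end{aligned}
```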

Since the ‘traditional’ discrete time replicator dynamics corresponds to the case $\alpha = 0$, we get as an immediate corollary the following characterization of equilibria with a support of three strategies which are locally asymptotically stable with respect to the discrete time replicator dynamics.

Corollary 2. Under the assumptions of Proposition 3, an equilibrium $\mu$ is stable with respect to the discrete time replicator dynamics (without inertia) if

$$\mu_{i_1}\mu_{i_2}\mu_{i_3}\,S(\hat A_\mu) < v\,\bigl(v - \mathrm{tr}(D_\mu)\bigr). \qquad (4)$$

If the inequality holds the other way round, $\mu$ is unstable.
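Proposition 3 and Corollary 2 can be illustrated by simulation. The Python sketch below assumes that the crucial level takes the form $\alpha^* = \max\{0,\ 1 - v(v - \mathrm{tr}(D_\mu))/(\mu_{i_1}\mu_{i_2}\mu_{i_3}S(\hat A_\mu))\}$, which is what Lemma 1 together with $\mathrm{tr}(M_\mu) = \mathrm{tr}(D_\mu) - v$ and $W(M_\mu) = \mu_{i_1}\mu_{i_2}\mu_{i_3}S(\hat A_\mu)$ gives in the complex case. It then iterates Eq. (1) from a small perturbation of the equilibrium. The function names and the cyclic test game $[[1,0,3],[3,1,0],[0,3,1]]$ are hypothetical; this is not the extended RSP game of Section 4, whose payoff matrix is not reproduced here.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def inertia_step(A: Matrix, s: Vector, alpha: float) -> Vector:
    """One step of Eq. (1): alpha * s + (1 - alpha) * diag(s) A s / (s'As)."""
    f = [sum(a * x for a, x in zip(row, s)) for row in A]
    avg = sum(x * fx for x, fx in zip(s, f))
    return [alpha * x + (1.0 - alpha) * x * fx / avg for x, fx in zip(s, f)]


def crucial_alpha(v: float, tr_D: float, mu_prod_S: float) -> float:
    """Assumed closed form alpha* = max(0, 1 - v (v - tr D_mu) / (mu_1 mu_2 mu_3 S)).

    Presupposes (C2) v > tr(D_mu) and (C3) S > 0.
    """
    return max(0.0, 1.0 - v * (v - tr_D) / mu_prod_S)


def distance_after(A: Matrix, s0: Vector, alpha: float, steps: int, target: Vector) -> float:
    """Euclidean distance to `target` after iterating Eq. (1) `steps` times from s0."""
    s = list(s0)
    for _ in range(steps):
        s = inertia_step(A, s, alpha)
    return sum((x - y) ** 2 for x, y in zip(s, target)) ** 0.5
```

For the hypothetical game ($v = 4/3$, $\mathrm{tr}(D_\mu) = 1$, $\mu_1\mu_2\mu_3 S = 7/9$), condition (4) fails at $\alpha = 0$ because $7/9 > 4/9$, and the sketch gives $\alpha^* = 3/7$. A perturbation of the uniform equilibrium then shrinks for $\alpha = 0.6$ but grows for $\alpha = 0.2$, which is the overshooting described in Section 2.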

It is further interesting to notice that the reasoning in the proof of Proposition 3 shows that, in the case of an equilibrium with a support of three pure strategies, overshooting can never appear if all eigenvalues are real (in this case all eigenvalues are positive). In other words, if the equilibrium is approached monotonically rather than in spirals, the discrete dynamics never overshoots the equilibrium. However, overshooting with real eigenvalues might occur if the support of the equilibrium consists of only two pure strategies.

4. An example

Consider the following extension of a circular Rock-Scissors-Paper (RSP) game to a […]⁶

There are three symmetric Nash equilibria of the game: m 53(1,1,1,0), m 5(0,0,0,1)

3 1 2 3

andm 5(0.2, 0.2, 0.2, 0.4). Whereasm andm are ESS the equilibrium m is no ESS

7

and unstable with respect to the continuous time replicator dynamics which in turn implies that it is unstable with respect to Eq. (1) for any level of inertiaa. As long as there is relatively large and equal weight on the first three strategies these three strategies have a higher payoff than the fourth one. However, if the frequencies of the first three strategies differ decisively the payoff of the fourth strategy becomes above average and it increases in frequency. This, in turn decreases the payoff of all strategies but the effect on the payoff of the first three strategies is larger than the effect on the

1 2

fourth strategy. Accordingly, instability of the equilibriumm leads to the selection ofm as the long run outcome of the learning process. It follows from corrollary 2 (and also

1

from Weissing (1991) results on circular RSP games) thatm is unstable with respect to

1

the discrete time replicator dynamics. However, since m is an ESS it is stable with respect to the continuous time replicator dynamics, and therefore there exists a crucial

1

level of inertia – using proposition 3 we calculate this level asa*50.649 – such thatm is locally asymptotically stable with respect to the dynamics with inertia ifa .a* and unstable otherwise. In this game the level of inertia determines for a large set of initial

1 2

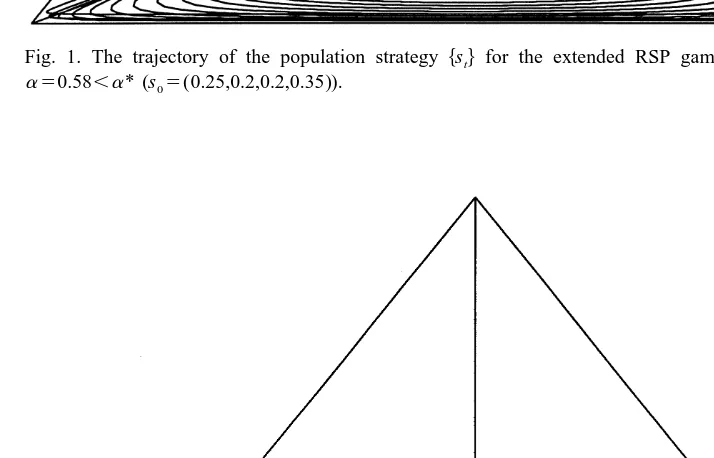

strategies s whether the process will end up at0 m or at m . In Fig. 1 we show the trajectory of the learning process initialized at s05(0.25, 0.2, 0.2, 0.35) for a 50.58.

It can be clearly seen that initially the payoff of $e_4$ is below average and the population strategy spirals down towards the subsimplex characterized by $s_4 = 0$. For $\alpha < \alpha^*$ the equilibrium $\mu^1$ is locally unstable and $\{s_t\}$ approaches the boundary of the subsimplex, where the payoff of $e_4$ is above average. Hence, $s_4$ increases again and eventually the population strategy is driven towards the pure strategy equilibrium $\mu^2$. Things change dramatically if we increase the probability that an agent sticks to his old strategy in a given period by 0.12 (to $\alpha = 0.7$). Now the equilibrium $\mu^1$ is locally asymptotically stable, and a trajectory initialized as above approaches the subsimplex $s_4 = 0$, but this time spirals inwards and converges towards $\mu^1$ (Fig. 2).

This shows that the level of inertia determines the dynamic equilibrium selection of the process for some initial values $s_0$. Note, however, that the process converges towards $\mu^2$ for all initial strategies $s_0$ with $s_{0,4} > 0.4$, regardless of the value of $\alpha$. It follows from our reasoning that for $\alpha < \alpha^*$ this equilibrium is almost a global attractor: only trajectories with either $s_{0,4} = 0$ or $s_0 = \mu^3$ do not eventually converge towards $\mu^2$. Since the equilibrium $\mu^1$, with equilibrium payoff $v = 1/3$ for the agents, Pareto dominates $\mu^2$, with $v = 0.2$, a higher level of inertia in the population can, for a large set of initial population distributions, significantly increase the long run payoff of all agents in the population.

⁶ Dekel and Scotchmer (1992) use a similar game to show that the discrete time replicator dynamics does not necessarily eliminate, even in the long run, strategies which are dominated by a combination of other strategies in the game.

Fig. 1. The trajectory of the population strategy $\{s_t\}$ for the extended RSP game and a level of inertia $\alpha = 0.58 < \alpha^*$ ($s_0 = (0.25, 0.2, 0.2, 0.35)$).

Fig. 2. The trajectory of the population strategy $\{s_t\}$ for the extended RSP game and a level of inertia […]

5. Monotone selection dynamics with inertia

In the previous sections we always considered the discrete time replicator dynamics with inertia, motivated by an imitation model where the agents imitate another agent with a probability proportional to the relative payoff of this agent's current strategy. Let us now consider the more general case where the probability that an agent currently using strategy $i$ imitates another agent currently using $j$ is given by $\chi\,p_{ij}(s)$, where $p_{ij}$ is non-negative and

$$p_{ij}(s) > p_{kj}(s) \Rightarrow e_i'As < e_k'As \quad\text{and}\quad p_{ij}(s) > p_{ik}(s) \Leftrightarrow e_j'As > e_k'As \qquad (5)$$

hold for all $i, j, k \in I$. This means that the probability to imitate an agent increases with his payoff and either decreases or is constant with one's own payoff. The corresponding population dynamics reads

$$s_{i,t+1} = s_{i,t} + \chi\,s_{i,t}\sum_{j \in I} s_{j,t}\bigl(p_{ji}(s_t) - p_{ij}(s_t)\bigr). \qquad (6)$$
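Eq. (6) can be iterated for any imitation rule supplied as a function. In the Python sketch below the names `imitation_step` and `replicator_rule`, as well as the calling convention `p(i, j, s)` for $p_{ij}(s)$, are hypothetical; neither the monotonicity condition (5) nor the scaling condition (7) is checked by the code. With $p_{ij}(s) = e_j'As/s'As$ the step coincides with Eq. (1) for $\alpha = 1 - \chi$, which the accompanying check confirms numerically.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical convention: rule(i, j, s) returns p_ij(s), the unscaled probability
# that an agent currently using i imitates an agent currently using j.
ImitationRule = Callable[[int, int, Vector], float]


def imitation_step(s: Vector, chi: float, p: ImitationRule) -> Vector:
    """One step of Eq. (6): s_i + chi * s_i * sum_j s_j * (p_ji(s) - p_ij(s))."""
    n = len(s)
    return [
        s[i] + chi * s[i] * sum(s[j] * (p(j, i, s) - p(i, j, s)) for j in range(n))
        for i in range(n)
    ]


def replicator_rule(A: Matrix) -> ImitationRule:
    """p_ij(s) = e_j'As / s'As, the rule underlying Eq. (1)."""
    def p(i: int, j: int, s: Vector) -> float:
        f = [sum(a * x for a, x in zip(row, s)) for row in A]
        return f[j] / sum(x * fx for x, fx in zip(s, f))
    return p
```

Because inflows and outflows in Eq. (6) cancel when summed over $i$, the step preserves $\sum_i s_i = 1$ for any rule $p$.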

Similar dynamics in continuous time have recently been analyzed in Hofbauer (1995). Note also that the replicator dynamics with inertia is a special case of this type of dynamics with $p_{ij}(s) = \frac{e_j'As}{s'As}$. We call this kind of dynamics a smooth monotone imitation dynamics with inertia $\alpha = 1 - \chi$ if all $p_{ij}(s)$ are continuously differentiable on $\Delta^n$, satisfy Eq. (5), and the scaling condition

$$\frac{1}{|\Delta^n|}\int_{s \in \Delta^n} \sum_{i \in I}\sum_{j \in I} s_i\,s_j\,p_{ij}(s)\,ds = 1 \qquad (7)$$

holds, where $|\Delta^n|$ denotes the volume of $\Delta^n$. Contrary to the case of the replicator dynamics, the probability to stick with the old strategy, given by $1 - \chi\sum_{j \in I} s_j\,p_{ij}(s)$, depends on both the currently used strategy $i$ and the population state $s$ in this more general formulation. The scaling condition Eq. (7) guarantees that $\alpha = 1 - \chi$ is the average (measured over the […]

The monotone imitation dynamics Eq. (6) are a special case of a monotone selection dynamics

$$s_{t+1} = s_t + \mathrm{diag}(s_t)\,g(s_t), \qquad (8)$$

where $g: \Delta^n \to \mathbb{R}^n$ is continuously differentiable and satisfies $s'g(s) = 0$ for all $s \in \Delta^n$ and

$$g_i(s) > g_j(s) \Leftrightarrow e_i'As > e_j'As. \qquad (9)$$

Cressman (1997) showed in a continuous time framework that the stability properties of an interior rest point with respect to a smooth monotone selection dynamics are basically the same as with respect to the continuous time replicator dynamics. Using this result it is easy to show that the conditions (C2) and (C3) ensure that there is a crucial level of inertia for every smooth monotone imitation dynamics with inertia. Again, a quasi-strict equilibrium with the support of at most three pure strategies is locally asymptotically stable with respect to these dynamics if the level of inertia is larger than this crucial level. We state this formally in the next proposition.

Proposition 4. Let $\mu$ be a symmetric quasi-strict Nash equilibrium with support $C(\mu) = \{i_1, i_2, i_3\}$ and assume that (C2) and (C3) hold. Then for every smooth monotone imitation dynamics with inertia there exists a crucial level of inertia $\alpha^* < 1$ such that $\mu$ is locally asymptotically stable with respect to this dynamics for any level of inertia larger than $\alpha^*$.

Proof: We assume here that $\mu$ is an interior fixed point of the dynamics with three pure strategies. The extension to quasi-strict equilibria with a support of three pure strategies is the same as in the derivation of Eq. (3). The Jacobian of the dynamics Eq. (6) at $\mu$ is

$$V = I + \chi K,$$

where $K$ is the linearization of the continuous time dynamics

$$\dot s_i = s_i \sum_{j \in I} s_j\bigl(p_{ji}(s) - p_{ij}(s)\bigr). \qquad (10)$$

It is easy to see that Eq. (10) is a smooth monotone selection dynamics as defined in Cressman (1997), and in the same paper it is shown that the Jacobian of any smooth selection dynamics at an interior hyperbolic fixed point is a positive multiple of the Jacobian of the continuous time replicator dynamics at this fixed point. This means that there exists a scalar $c > 0$ such that

$$K = cJ,$$

where $J$ is the linearization of Eq. (2) at $\mu$. Denoting by $\lambda_1, \lambda_2$ the two relevant eigenvalues of $V$, we have

$$\lambda_i = 1 + \chi c\,\nu_i, \qquad i = 1, 2,$$

where $\nu_i$ are again the two relevant eigenvalues of $J$. Since we know from Proposition 2 that (C2) and (C3) imply that the real parts of both $\nu_i$ are negative, it is obvious that there exists a $\chi^* > 0$ such that the moduli of both $\lambda_i$ are smaller than one if $\chi < \chi^*$; the claim follows with $\alpha^* = 1 - \chi^*$. □
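The bound $\chi^*$ in the proof of Proposition 4 is explicit once $c$ and the relevant eigenvalues are known: $|1 + \chi c\nu|^2 < 1$ holds exactly when $\chi < -2\,\mathrm{Re}\,\nu/(c|\nu|^2)$. The Python sketch below evaluates this; the function names are hypothetical, and the eigenvalues $\nu = -1/6 \pm i\sqrt{3}/2$ used in the check come from a hypothetical cyclic game, not from the paper. In the replicator case Lemma 1 gives $c = 1/v$ (so $c = 3/4$ when $v = 4/3$), which reproduces $\alpha^* = 3/7$ for that game.

```python
import math
from typing import Iterable


def crucial_chi(nus: Iterable[complex], c: float) -> float:
    """Largest chi* such that |1 + chi * c * nu| < 1 for all 0 < chi < chi*
    and every relevant eigenvalue nu. Assumes c > 0 and Re(nu) < 0."""
    chi_star = math.inf
    for nu in nus:
        nu = complex(nu)
        if nu.real >= 0:
            raise ValueError("Re(nu) >= 0: no level of inertia stabilizes this point")
        chi_star = min(chi_star, -2.0 * nu.real / (c * abs(nu) ** 2))
    return chi_star


def crucial_alpha_from_chi(chi_star: float) -> float:
    """alpha* = 1 - chi*, clipped at 0 since alpha is a probability."""
    return max(0.0, 1.0 - chi_star)
```

A smaller scaling factor $c$ (a ‘slower’ imitation rule) raises $\chi^*$ and thus lowers the inertia required; once $\chi^* \ge 1$, no inertia is needed at all.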

Note that the actual value of the crucial level of inertia depends on the functions $p_{ij}$, and accordingly we cannot give a general expression here. However, it is interesting to notice that the conditions (C2) and (C3) are very general conditions in the sense that they ensure stability of any smooth monotone imitation dynamics as long as the level of inertia is sufficiently large. Finally, we would like to mention that imitation rules of the form $p_{ij}(s) = C(e_j'As - e_i'As)$, where $C$ is non-negative and increasing (e.g. Hofbauer, 1995), also fit into our framework, and similar results hold there too.

6. Conclusions

The results of the present paper can be seen from two different perspectives. From a mathematical point of view, we have provided an easily computable criterion ensuring that a Nash equilibrium is stable with respect to the continuous time replicator dynamics, and also a stability criterion for the discrete time replicator dynamics (with inertia). To our knowledge, no such criterion for the discrete time replicator dynamics exists in the literature for general normal form games, but only for special cases like RSP games. From an economic point of view, the results suggest that a higher fraction of non-adaptive agents in the population can facilitate the convergence of the population strategy towards a certain equilibrium. We have shown in an example that this might result in higher payoffs for all individuals; however, this obviously does not hold in general. In our opinion the largest deficit of the present analysis lies in the fact that we only get analytical stability criteria if the support of the considered equilibrium contains at most three strategies. We hope to extend these results to more general cases in future research.

Acknowledgements

References

Bernheim, B.D., 1984. Rationalizable strategic behavior. Econometrica 52, 1007–1028.

Blume, L.E., 1997. Population games. In: Arthur, W.B., Durlauf, S.N., Lane, D.A. (Eds.), The Economy as an Evolving Complex System II. Addison-Wesley, Reading, MA, pp. 425–460.

Bomze, I., 1983. Lotka-Volterra equation and the replicator dynamics: a two-dimensional classification. Biological Cybernetics 48, 31–59.

Bomze, I., Pötscher, B., 1989. Game Theoretical Foundations of Evolutionary Stability. Springer, Heidelberg.

Cabrales, A., Sobel, J., 1992. On the limit points of discrete selection dynamics. Journal of Economic Theory 57, 407–419.

Cressman, R., 1997. Local stability of smooth selection dynamics for normal form games. Mathematical Social Sciences 34, 1–19.

Dawid, H., 1999. On the dynamics of word of mouth learning with and without anticipations. Annals of Operations Research, forthcoming.

Day, R., 1984. Disequilibrium economic dynamics: a post-Schumpeterian contribution. Journal of Economic Behavior and Organization 5, 57–76.

Day, R., Pingle, M., 1996. Modes of economizing behavior: experimental evidence. Journal of Economic Behavior and Organization 29, 196–209.

Dekel, E., Scotchmer, S., 1992. On the evolution of optimizing behavior. Journal of Economic Theory 57, 392–406.

Ellison, G., Fudenberg, D., 1995. Word-of-mouth communication and social learning. Quarterly Journal of Economics CX, 93–125.

Friedman, D., 1991. Evolutionary games in economics. Econometrica 59, 637–666.

Haigh, J., 1975. Game theory and evolution. Advances in Applied Probability 7, 8–11.

Hofbauer, J., 1995. Imitation Dynamics for Games. Working Paper, University of Vienna.

Hofbauer, J., Sigmund, K., 1988. The Theory of Evolution and Dynamical Systems. Cambridge University Press, Cambridge.

Kandori, M., Rob, R., 1995. Evolution of equilibria in the long run: a general theory and applications. Journal of Economic Theory 65, 383–414.

Nachbar, J.H., 1990. Evolutionary selection dynamics in games: convergence and limit properties. International Journal of Game Theory 19, 59–89.

Samuelson, L., 1994. Stochastic stability in games with alternative best replies. Journal of Economic Theory 64, 35–65.

Samuelson, L., Zhang, J., 1992. Evolutionary stability in asymmetric games. Journal of Economic Theory 57, 363–391.

Taylor, P.D., Jonker, L.B., 1976. Evolutionary stable strategies and game dynamics. Mathematical Biosciences 40, 145–156.

van Damme, E., 1991. Stability and Perfection of Nash Equilibria. Springer, Heidelberg.

Weibull, J.W., 1995. Evolutionary Game Theory. MIT Press, Cambridge, MA.