54 1541-1672/15/$31.00 © 2015 IEEE IEEE INTELLIGENT SYSTEMS

Published by the IEEE Computer Society

A Robot Learns How to Entice an Insect

Ji-Hwan Son and Hyo-Sung Ahn, Gwangju Institute of Science and Technology

Using a camera to recognize a biological insect and its heading angle, a robot can spread a specific odor source to entice the insect to travel a given trajectory.

Robot technology has achieved significant growth in recent years, to the point where many expect robots to interact seamlessly with human environments. To perfect this ability, robots must recognize various elements, make feasible decisions, and carry out proper actuation. However, the real world consists of uncertain and unpredictable elements that make it difficult for robots to interact properly with our environment. The key issue in this challenging task is how a robot learns: it can learn by imitation or demonstration from its own observation or from a teacher,1,2 through trial and error,3 or via its own evolutionary skill4 or reasoning ability based on acquired data.5 Regardless of the learning method, however, the robot needs the ability to recognize and concentrate on a target, decide how to achieve the optimal outcome, and exercise control to execute its chosen actions. On top of this, the robot should consistently and continuously acquire useful knowledge as part of its learning process.

Motivated by these challenges, we asked whether a learning capability could be embedded into a robot. It’s extremely difficult to realize a robot with learning abilities in a real environment, because the environment can affect the robot while it learns. Moreover, the environment itself can change through its interactions with the robot and through other stimuli, such as wind, odor, and temperature. Thus, instead of providing a universal solution for general robot intelligence, we seek to implement a learning ability in an idealized situation. Specifically, we studied the interaction between a robot and a living insect in a regulated space, with the robot spreading a specific odor to attract the insect indirectly. Insect behavior is much simpler than human behavior, making it relatively easy for a robot to understand and interact with. Nevertheless, an insect’s behavior includes uncertain and unpredictable elements (it has enough intelligence to survive in nature), so this wasn’t a trivial problem.

Research on the interaction between robots and animals falls into two classes. The first is physical contact-based interaction: for example, researchers have implanted electrodes into the nervous systems of a moth,6,7 a beetle,8 and a cockroach,9 and then controlled the insects’ motions via electric stimuli. The second class is indirect stimuli-based interaction, in which robots rely on indirect stimuli, such as sex pheromones to interact with a moth,10 pheromones to influence a group of cockroaches,11 and internally embedded cricket substances to interact with a living cricket.12 Further examples of indirect stimuli-based interaction include a mobile robot herding a flock of ducks toward a specific goal

… visual stimuli can control a beetle’s flight direction via LEDs attached to its head.17 Attaching movable cylinders to a turtle’s shell can also steer the turtle: when an obstacle moves close to its head, the turtle moves in the desired direction to avoid it.18 However, all these interaction mechanisms depend on preprogrammed commands or human operators.

Some of our earlier works studied insect and robot interactions.19,20 In those experiments, we assumed that the insect’s position and heading angle were known exactly from a camera mounted above the platform, and the robot only needed to entice the insect to a specific spot in a defined area. In this article, however, the robot must find the insect using a camera attached to the robot itself to recognize the insect’s position and heading angle. The robot needs to know the insect’s position at all times to entice it along a predefined trajectory. Our previous experiments used fuzzy-logic-based reinforcement learning and expertise measurement for cooperative learning; here, we entice the insect to follow the given trajectory by using hierarchical reinforcement learning.

Methodologies

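In our case, reinforcement learning21,22 means a trial-and-error-based algorithm inspired by the learning behavior of animals. To implement reinforcement learning, we use Q-learning,23 an algorithm that learns an optimal action-selection policy. Because it doesn’t require a model of the environment, Q-learning is well suited to systems without a known model, such as an insect. The main Q-learning equation is

$$Q(s, a) \leftarrow (1 - \alpha)\,Q(s, a) + \alpha\left[r + \Gamma \max_{a'} Q(s', a')\right], \qquad (1)$$

where s and a are the current state and action, s' is the next state reached under action a, α is the learning rate (0 < α ≤ 1), Γ is the discount factor (0 ≤ Γ < 1), and r is the immediate reward.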
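A minimal Python sketch of the update the text calls Equation 1 follows. It assumes the standard Watkins form of tabular Q-learning23 with a dictionary-backed Q table; the state labels are hypothetical, and the default learning rate (0.9) and discount factor (0.85) are the values reported in the Results section. It illustrates the rule, not the authors’ code.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, alpha=0.9, gamma=0.85):
    """One tabular Q-learning step in the standard Watkins form.

    q: mapping (state, action) -> value; a defaultdict reads missing entries as 0.0.
    alpha: learning rate; gamma: discount factor (Gamma in the text).
    Returns the updated value of (state, action).
    """
    best_next = max(q[(next_state, a)] for a in actions)  # max over a' of Q(s', a')
    target = reward + gamma * best_next
    q[(state, action)] = (1 - alpha) * q[(state, action)] + alpha * target
    return q[(state, action)]

# Hypothetical example: the five insect motions serve as actions.
ACTIONS = ["left", "left_ahead", "ahead", "right_ahead", "right"]
q = defaultdict(float)
value = q_update(q, "s3", "ahead", 1.0, "goal", ACTIONS)
print(round(value, 3))  # 0.9
```

Starting from an all-zero table, one rewarded step moves the entry to alpha times the reward, which is why a high learning rate makes early experience count heavily.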

From the current state, the robot selects the action a that maximizes the Q-value, which is the total expected accumulated reward, and moves to the resulting next state. Based on the learning algorithm, the robot finds its own goal and updates the state; this is the exploitation process. However, to find a goal efficiently, the learning process frequently requires the robot to choose an action randomly to explore the states (the exploration process). Exploitation and exploration therefore involve a trade-off. As a criterion for selecting between the two, the learning process generates a random value in the range [0, 1) at every repetition. If the random value is less than a predefined value ε, the learning process chooses an action randomly; otherwise, it chooses the action that maximizes the Q-value. The predefined value ε decreases as the number of iterations increases, so the probability of choosing a random action also decreases.
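The selection rule above is the familiar ε-greedy scheme. The sketch below shows it with a decaying ε; the exponential schedule and its parameters (`eps0`, `decay`, `eps_min`) are hypothetical, since the text only says that ε decreases with the number of iterations.

```python
import random

def choose_action(q, state, actions, epsilon, rng=random):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:          # random value in [0, 1) below epsilon: explore
        return rng.choice(actions)
    # Exploit: action with the largest Q-value (ties go to the first listed).
    return max(actions, key=lambda a: q.get((state, a), 0.0))

def decayed_epsilon(iteration, eps0=1.0, decay=0.99, eps_min=0.05):
    """Hypothetical schedule: epsilon shrinks geometrically, floored at eps_min."""
    return max(eps_min, eps0 * decay ** iteration)

rng = random.Random(0)
q = {("s", "ahead"): 0.5, ("s", "left"): 0.2}
actions = ["left", "ahead", "right"]
print(choose_action(q, "s", actions, epsilon=0.0, rng=rng))   # ahead
print([round(decayed_epsilon(k), 3) for k in (0, 100, 1000)])  # [1.0, 0.366, 0.05]
```

Keeping a small floor on ε is a common design choice so that exploration never stops entirely; whether the authors used a floor is not stated.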

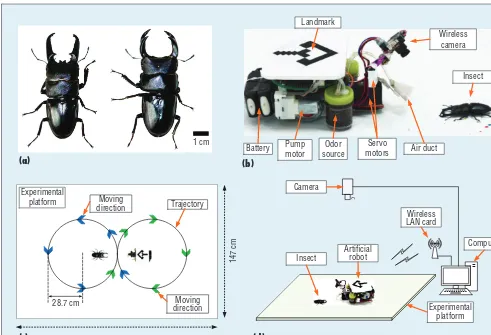

a computer. To verify that the robot can entice the insect in various directions, we chose a trajectory composed of two circles (see Figure 1c). The robot attempts to entice the insect toward the left in the left circle, toward the right in the right circle, and forward at the intersection point. Using this trajectory, the robot learns through repetition how to entice the insect in various ways.

To entice the insect along the trajectory, the robot needs to know its own position, so we attached a camera to the ceiling, facing the experimental platform. A wireless camera attached to the robot is used to detect the insect and compute its position and heading with respect to the robot. In its interaction with the insect, the robot’s size is a crucial factor: we needed a robot of similar size to the insect, which meant the robot couldn’t carry its own computing hardware. We therefore borrow those abilities from a host computer, which controls the robot’s movement remotely by wireless signal, performs real-time image processing, and stores the acquired data. Humans were involved only in building the robot, the experimental platform, and the related programs in advance. All of the robot’s processes, such as recognition, decision, learning, and control, were performed autonomously during the experiment without human aid.

We chose two species of living stag beetle as the insect: Dorcus titanus castanicolor and Dorcus hopei binodulosus (see Figure 1a). These insects are strong enough to endure repeated experiments, move well over flat surfaces, and exhibit


a two- to three-year life span. To determine interaction mechanisms between the insect and the robot, we performed various experiments using stimuli such as light, vibration, airflow, robot movement, physical contact, and sound. The insects’ reactions to these stimuli weren’t strong enough to achieve our goal, but we observed that they used three groups of antennae on their heads to monitor the environment. After further experiments, we found that these insects react strongly to the specific odor of sawdust from their own habitats.19

To get the robot to entice the insect through odor, we equipped it with two air pump motors and two bottles containing specific odor sources. The wireless camera, mounted on two servomotors, tracks the insect so that it can be recognized in real time. An ATmega128 microcontroller controls the air pump motors and servomotors, and a 7.4-V Li-Po battery powers the whole robot system.

Experiment

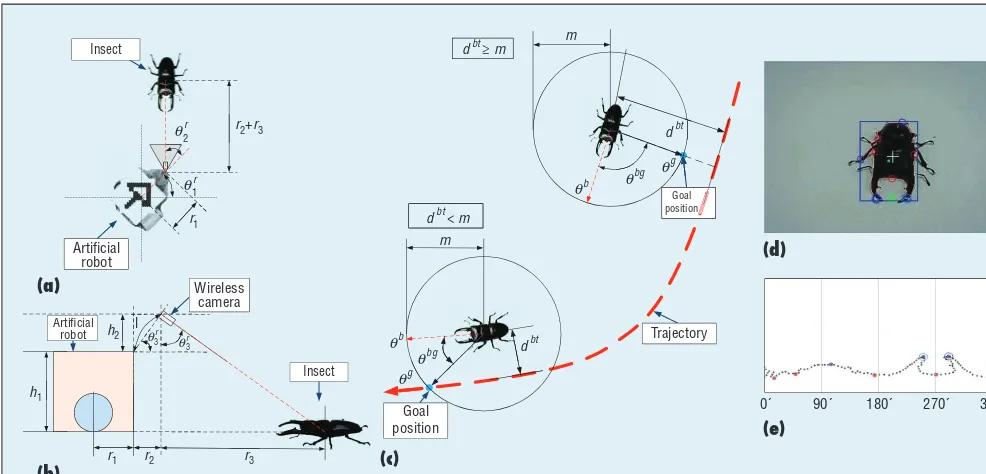

At the beginning, the robot doesn’t know where the insect is, so it tries to find it by rotating its heading and increasing the wireless camera’s elevation angle. Once the robot finds the insect, it approaches and recognizes the insect’s position and heading angle (see Figure 2). Based on the acquired position of the robot (rx, ry), the insect’s position (bx, by) is calculated as

$$b_x = r_x + r_1\cos\theta_1^r + (r_2 + r_3)\cos(\theta_1^r + \theta_2^r)$$

and

$$b_y = r_y + r_1\sin\theta_1^r + (r_2 + r_3)\sin(\theta_1^r + \theta_2^r),$$

where $r_2 = l\cos\theta_3^r$, $h_2 = l\sin\theta_3^r$, $r_3 = (h_1 + h_2)\tan(90^\circ - \theta_3^r)$, $\theta_1^r$ is the robot’s heading angle, $\theta_2^r$ and $\theta_3^r$ are the camera’s azimuth and elevation angles, and $h_1$, $h_2$, $l$, $r_1$, $r_2$, and $r_3$ are distances, as illustrated in Figures 2a and 2b.
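The position computation can be sketched directly in Python. This assumes the position equations read bx = rx + r1 cos θ1r + (r2 + r3) cos(θ1r + θ2r) and by = ry + r1 sin θ1r + (r2 + r3) sin(θ1r + θ2r), with r2 = l cos θ3r, h2 = l sin θ3r, and r3 = (h1 + h2) tan(90° − θ3r), which is our reading of the extracted formula; the function name and argument order are hypothetical.

```python
import math

def insect_position(rx, ry, th1, th2, th3, r1, h1, l):
    """Insect position (bx, by) from the robot pose and the camera angles.

    th1: robot heading, th2: camera azimuth, th3: camera elevation (degrees).
    r1, h1, l: fixed robot geometry (same length unit as rx, ry).
    """
    t3 = math.radians(th3)
    r2 = l * math.cos(t3)                                  # horizontal reach of camera arm
    h2 = l * math.sin(t3)                                  # vertical rise of camera arm
    r3 = (h1 + h2) * math.tan(math.radians(90.0) - t3)     # ground distance seen by camera
    heading = math.radians(th1)
    bearing = math.radians(th1 + th2)                      # direction the camera points
    bx = rx + r1 * math.cos(heading) + (r2 + r3) * math.cos(bearing)
    by = ry + r1 * math.sin(heading) + (r2 + r3) * math.sin(bearing)
    return bx, by

# Robot at (10, 20) facing +y, camera straight ahead and tilted 45 degrees.
print(insect_position(10.0, 20.0, 90.0, 0.0, 45.0, 2.0, 5.0, 0.0))  # about (10.0, 27.0)
```

Note that r3 grows quickly as θ3r approaches 0°, so small elevation-angle errors matter most when the insect is far from the robot.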

Figure 1. (a) The stag beetle. (c) The trajectory composed of two circles. The figure also labels the experimental platform (147 cm), computer, wireless LAN card, wireless camera, landmark, and artificial robot (camera, servomotors, air duct, odor source, pump motor, and battery), along with the insect’s moving direction and the trajectory direction.

The robot must use a visual recognition process to detect the insect, and this technology is covered in great detail in the literature. For example, one active object-recognition process uses viewpoints,24 whereas a scene category-recognition algorithm uses spatial pyramid matching via feature extraction.25 A convolutional deep belief network framework, inspired by the structure of the human brain’s visual recognition,26 uses unlabeled images in an unsupervised learning process. The scale-invariant feature transform (SIFT) algorithm for object recognition27 uses key feature vectors. Another method for extracting distinctive invariant features from image data28 uses a fast nearest-neighbor algorithm. To recognize objects and scenes, one approach uses a color descriptor-based recognition process and the SIFT algorithm.29 A naive Bayesian classification framework for the recognition process uses simple binary features and models class posterior probabilities,30 whereas one shape-matching and object-recognition process31 uses shape contexts by finding correspondences between shapes. The speeded-up robust features (SURF) algorithm32 incorporates a scale- and rotation-invariant detector and descriptor via Hessian matrix-based interest points. To find related similarities in object and scene recognition, a database classification process compares 80 million labeled images.33

In contrast to these previous works, we detect the insect by using contours. As Figure 1a shows, the stag beetle has prominent jaws that the robot can easily detect by analyzing the insect’s contour. To recognize the insect and its heading angle in real time, we use the image from the wireless camera attached to the robot. Working from the OpenCV library (http://opencv.org), a recognition algorithm finds several contours as potential candidates by using pre-entered size and color information. Then, the points of each contour candidate are transformed from Cartesian coordinates into polar coordinates about the center of the contour image. Using the distance ratio and angle relation of the prominent jaws, the recognition algorithm finds the insect and its heading angle (see Figure 2e).
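The polar-space step can be illustrated in plain Python. This sketch assumes a candidate contour is already available as an ordered list of (x, y) points (for example, from OpenCV contour extraction, not shown). The jaw-tip rule (the two largest radial peaks) and the thresholds `ratio_min`, `sep_min_deg`, and `sep_max_deg` are hypothetical stand-ins for the “distance ratio and angle relation” the text mentions.

```python
import math

def jaw_heading(contour, ratio_min=0.7, sep_min_deg=15.0, sep_max_deg=90.0):
    """Estimate a heading in degrees [0, 360) from an ordered, closed contour of
    (x, y) points, or return None if no jaw-like pair of tips is found."""
    n = len(contour)
    if n < 3:
        return None
    # Centre of the contour, taken as the mean of its points.
    cx = sum(x for x, _ in contour) / n
    cy = sum(y for _, y in contour) / n
    # Cartesian -> polar coordinates about that centre: (radius, angle).
    polar = [(math.hypot(x - cx, y - cy), math.atan2(y - cy, x - cx))
             for x, y in contour]
    # Local radius maxima along the closed contour are jaw-tip candidates.
    peaks = [polar[i] for i in range(n)
             if polar[i][0] > polar[i - 1][0] and polar[i][0] >= polar[(i + 1) % n][0]]
    if len(peaks) < 2:
        return None
    (r_a, a), (r_b, b) = sorted(peaks, reverse=True)[:2]
    # Distance-ratio test: both jaws should protrude by a similar amount.
    if r_b / r_a < ratio_min:
        return None
    # Angle-relation test: smallest angular gap between the two tips.
    gap = abs((a - b + math.pi) % (2 * math.pi) - math.pi)
    if not math.radians(sep_min_deg) <= gap <= math.radians(sep_max_deg):
        return None
    # Heading = bisector of the two tips (circular mean of their angles).
    heading = math.atan2(math.sin(a) + math.sin(b), math.cos(a) + math.cos(b))
    return math.degrees(heading) % 360.0
```

For a round body outline with two equal spikes at 70° and 110°, the function returns roughly 90°; with spikes 180° apart it returns None because the angular test fails.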

To entice the insect to the desired trajectory, we first define two cases according to whether the shortest distance between the insect and the trajectory is within a predefined radius. Let dbt be the shortest distance between the insect and the trajectory, and define a circle of radius m centered at the insect’s position. The radius m designates the insect’s maximum moving distance at each iteration step. If dbt ≥ m, the robot tries to entice the insect toward the trajectory, with the goal position located on the circle. If dbt < m, the robot entices the insect toward the intersection point between the circle and the trajectory, which serves as the goal position. (Here, the moving direction means the direction of the goal location toward which the robot needs to entice the insect.)
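The two-case goal rule can be sketched as follows. The trajectory geometry is hypothetical: two touching circles of radius 30 cm, sampled at 360 points each, traversed left loop first and then right loop, both passing the junction heading +y. In the near case, the intersection point is approximated by the first trajectory sample ahead of the nearest point that lies outside the circle of radius m.

```python
import math

def two_circle_trajectory(radius=30.0, n=360):
    """Hypothetical two-circle trajectory: a left loop (counterclockwise) then a
    right loop (clockwise). The circles touch at the origin, and both loops pass
    through it heading +y, so the insect goes forward at the junction."""
    left = [(-radius + radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]
    right = [(radius - radius * math.cos(2 * math.pi * k / n),
              radius * math.sin(2 * math.pi * k / n)) for k in range(n)]
    return left + right  # ordered in the direction of travel

def select_goal(insect_xy, traj, m):
    """Goal position for one step, following the two cases in the text.
    m is the insect's maximum moving distance per iteration."""
    bx, by = insect_xy
    dists = [math.hypot(x - bx, y - by) for x, y in traj]
    i = min(range(len(traj)), key=dists.__getitem__)  # nearest trajectory sample
    d_bt = dists[i]
    if d_bt >= m:
        # Far case: goal on the circle of radius m, toward the nearest point.
        nx, ny = traj[i]
        return (bx + m * (nx - bx) / d_bt, by + m * (ny - by) / d_bt)
    # Near case: walk forward along the trajectory until it leaves the circle;
    # the first sample outside approximates the forward intersection point.
    for step in range(1, len(traj)):
        j = (i + step) % len(traj)
        if dists[j] >= m:
            return traj[j]
    return traj[i]  # degenerate: the whole trajectory lies inside the circle

traj = two_circle_trajectory()
print(select_goal((0.0, 0.0), traj, 5.0))     # a point about 5 cm ahead, y > 0
print(select_goal((0.0, -100.0), traj, 5.0))  # 5 cm step toward the nearest loop
```

Walking forward along the ordered samples, rather than taking any intersection, is what makes the near-case goal lie in the direction of travel.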

To help the robot learn how to en-tice the insect to the trajectory, we

Figure 2. Finding the insect. (a) and (b) Note the geometric relation between the robot and the insect. (c) To make the insect follow the given trajectory, we define two cases: if the insect is far from the trajectory, the goal position will be the direction toward the trajectory requiring the least movement, and if it’s near, the goal will be the forward position in the inner circle. (d) Captured image of the insect by the wireless camera. (e) The heading angle from contour data of the acquired image.

Insect

Goal position

Goal position

Trajectory

0´ 90´ 180´ 270´ 360´

d m

Artificial robot

Artificial robot

Wireless camera

r1

h2

h1

r1 r2 r3

l

b θ

b θ

bg θ

g θ

g θ

bg

bt dbt< m

θ r

1

r

3 θ

θ r

3 θ (a)

(d)

(e)

58 www.computer.org/intelligent IEEE INTELLIGENT SYSTEMS

R O B O T I C S

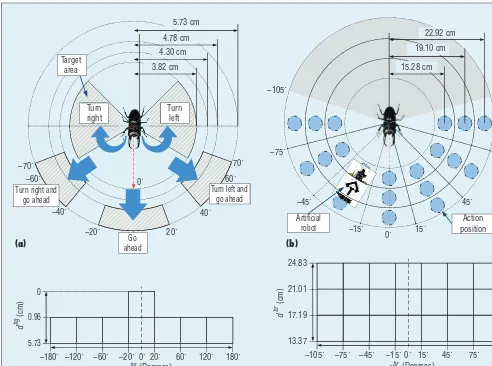

defined two types of state sets. The first set is behavior states, the objec-tive of which is to decide the motion required to entice the insect to the current goal position. This set has eight states (see Figure 3c), with seven angular sections between the insect’s heading angle and the goal direction. Behavior states are defined in the po-lar space formed by the angle ranges between the insect’s heading angle and goal direction and the distance ranges between the goal itself and the insect. Here, we only consider the two

distance ranges in the central angular section because the range between the insect and the goal position can ap-proach 0 if the insect’s heading angle aligns with the goal direction. We fur-ther defined five specific motions for the insect: turn left, turn left and go ahead, go ahead, turn right and go ahead, and turn right (see Figure 3a). When the robot finds the related goal position near the insect, as in Figure 2c, it decides the action for the cur-rently recognized behavior state based on Q-learning. If it needs to move the

insect toward the chosen state, then the chosen direction is the selected motion. Five possible directions (left, up-left, up, up-right, and right) are symmetrically connected to the five motions (turn left, turn left and go ahead, go ahead, turn right and go ahead, and turn right), respectively.
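A sketch of the behavior-state lookup follows. The section boundaries (±20°, ±60°, ±120°) and the 0.96-cm split of the central section are read from the plot residue of Figure 3c and may not match the authors’ implementation exactly; the angle sign convention and the state numbering 0 to 7 are hypothetical.

```python
# Section boundaries as read from Figure 3c (an assumption; see lead-in).
ANGLE_EDGES = (-120.0, -60.0, -20.0, 20.0, 60.0, 120.0)   # degrees
CENTRAL_SPLIT_CM = 0.96                                     # cm

# Five behavior-state actions, in left-to-right order (Figure 3a).
MOTIONS = ("turn left", "turn left and go ahead", "go ahead",
           "turn right and go ahead", "turn right")

def wrap_deg(angle):
    """Wrap an angle in degrees to the interval (-180, 180]."""
    a = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if a == -180.0 else a

def behavior_state(theta_bg, d_bg):
    """Map the angle between insect heading and goal direction (degrees) and
    the insect-goal distance (cm) to one of eight behavior states, 0..7."""
    section = sum(wrap_deg(theta_bg) > edge for edge in ANGLE_EDGES)  # 0..6
    if section < 3:
        return section
    if section == 3:  # central section, split by distance to the goal
        return 3 if d_bg < CENTRAL_SPLIT_CM else 4
    return section + 1  # sections 4..6 become states 5..7

def greedy_motion(q, state):
    """Motion with the highest Q-value for a behavior state (ties: leftmost)."""
    return max(MOTIONS, key=lambda motion: q.get((state, motion), 0.0))

print(behavior_state(0.0, 0.5), behavior_state(0.0, 3.0), behavior_state(150.0, 2.0))
```

Splitting only the central section by distance keeps the table small: away from the center, the angular error alone determines which turning motion is useful.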

If the distance dbg between the insect and the goal position is less than a specific value and the insect’s heading angle θbg lies within the goal direction, the state is updated with a value of 1 and becomes the goal of the behavior states. The robot

Figure 3. States. (a) To entice the insect, we define five specific motions: turn left, turn left and go ahead, go ahead, turn right and go ahead, and turn right. The robot learns which motion is necessary to make the insect move toward the found goal position by using the behavior state. (b) To entice the insect to enact the chosen motion for the behavior state, the robot finds a suitable action position to spread the odor source near the insect. (c) There are seven angular sections between the insect’s heading angle and the goal direction, but at the central angular section, we consider two additional cases according to the distance ranges between the goal and insect. (d) The set of action states combines the seven angular sections between the insect’s heading angle and the robot’s direction and the three distance ranges between the insect and the robot.

Figure 3 axes: (c) θbg in degrees (boundaries at ±20°, ±60°, ±120°, and ±180°) versus dbg in cm (0, 0.96, and 5.73); (d) θbr in degrees (boundaries at ±15°, ±45°, ±75°, and ±105°) versus dbr in cm (13.37, 17.19, 21.01, and 24.83), with action positions at 15.28, 19.10, and 22.92 cm.

a chosen specific motion. This set contains five states, each related to a specific motion: action state 1 turns left, action state 2 turns left and goes ahead, action state 3 goes ahead, action state 4 turns right and goes ahead, and action state 5 turns right. The set of action states combines seven angular sections between the insect’s heading angle and the robot’s direction with three distance ranges between the insect and the robot (see Figure 3d). The action positions lie at the center of each action state’s cell. If a specific motion is chosen for a behavior state, the robot finds a suitable action position from which to spread the odor source near the insect (see Figure 3b). To find a suitable action position, the robot explores the chosen action states through an internal process in which it virtually selects among nine subactions: moving in one of eight directions (up, down, left, right, up-left, up-right, down-left, and down-right) or selecting an action position. In this subiteration process, the robot tries to find a suitable action position in the chosen set of action states. It might find a goal easily, but if the chosen action states contain insufficient information about the goal, the robot needs more time to find it. To keep the insect continuously following the predefined trajectory, the robot must find an available action position within limited time, which is why we limit the number of subiteration steps.
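The action-state grid and the bounded internal search can be sketched together. Everything here is an assumption layered on the text: cell centers are read from Figure 3 (angle sections centered at 0°, ±30°, ±60°, ±90°; distances 15.28, 19.10, and 22.92 cm), “left/right” moves change the angle index and “up/down” moves change the distance index, moves are clamped at the grid edges, and selecting a cell earns a reward of 1 only if that cell was previously marked as a goal. The names `action_position` and `virtual_search` are hypothetical.

```python
import math
import random

# Cell centres of one 7 x 3 action-state grid, as read from Figure 3 (assumption).
ANGLE_CENTERS = (-90.0, -60.0, -30.0, 0.0, 30.0, 60.0, 90.0)  # degrees from heading
DIST_CENTERS = (15.28, 19.10, 22.92)                            # cm from the insect

# Eight virtual moves: (angle-index step, distance-index step).
MOVES = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0),
         "up-left": (-1, 1), "up-right": (1, 1),
         "down-left": (-1, -1), "down-right": (1, -1)}
SUBACTIONS = tuple(MOVES) + ("select",)  # nine subactions in total

def action_position(insect_xy, insect_heading_deg, cell):
    """World coordinates of the action position at the centre of grid cell
    (angle index 0..6, distance index 0..2) around the insect."""
    i, j = cell
    ang = math.radians(insect_heading_deg + ANGLE_CENTERS[i])
    d = DIST_CENTERS[j]
    return insect_xy[0] + d * math.cos(ang), insect_xy[1] + d * math.sin(ang)

def virtual_search(q, goals, start, max_steps=20, eps=0.1,
                   alpha=0.9, gamma=0.85, rng=random):
    """Bounded internal search over one motion's action-state grid.

    q maps (cell, subaction) -> value and is updated in place; goals is the
    set of cells previously marked successful. Returns the chosen cell, or
    the current cell once the subiteration budget runs out."""
    cell = start
    for _ in range(max_steps):
        if rng.random() < eps:
            sub = rng.choice(SUBACTIONS)  # explore
        else:
            sub = max(SUBACTIONS, key=lambda s: q.get((cell, s), 0.0))  # exploit
        if sub == "select":
            # Selecting ends the virtual episode: reward 1 only on a goal cell.
            target = 1.0 if cell in goals else 0.0
            nxt = cell
        else:
            di, dj = MOVES[sub]
            nxt = (min(max(cell[0] + di, 0), 6), min(max(cell[1] + dj, 0), 2))
            target = gamma * max(q.get((nxt, s), 0.0) for s in SUBACTIONS)
        q[(cell, sub)] = (1 - alpha) * q.get((cell, sub), 0.0) + alpha * target
        if sub == "select" and cell in goals:
            return cell
        cell = nxt
    return cell
```

The `max_steps` budget plays the role of the limited number of subiteration steps: with an empty table the search may simply run out of budget, whereas a table that already knows a goal cell returns it almost immediately.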

If the robot has selected an action position through its internal process, it moves to the selected action position and spreads the specific odor

dates as 1, and the position becomes a goal of that action state.

Before conducting our experiments, we needed to find suitable predefined values. If the moving distance is too long, the robot can’t precisely entice the insect along the predefined trajectory, even with sufficient knowledge for interacting with the insect. Conversely, if the moving distance is too short, the robot can’t clearly observe the insect’s behavior, because the insect detects the odor source only a few seconds after the robot spreads it. In addition, the robot can’t observe the insect’s reaction if the actuation time is too short; if it’s too long, the experiment’s total duration increases because the robot must spread the odor source for a long time. We chose suitable values in advance through several trials before conducting the official experiment.

To make the insect follow a chosen motion, the robot tries to find available action positions in the related set of action states. To find the best position among the available positions, the robot calculates each spot’s success rate by counting the number of

Results

In our experiment, the learning rate for both behavior and action states was 0.9, and the discount factor for both was 0.85. To obtain more interaction opportunities between the robot and the insect, each experiment started near the center of the experimental platform, using the insect that showed the best reactions among 12 Dorcus titanus castanicolor and 4 Dorcus hopei binodulosus individuals. If the insect’s reactions declined, or if it collided with the robot, we stopped the experiment. If the insect or robot left the experimental platform, we temporarily stopped the experiment.

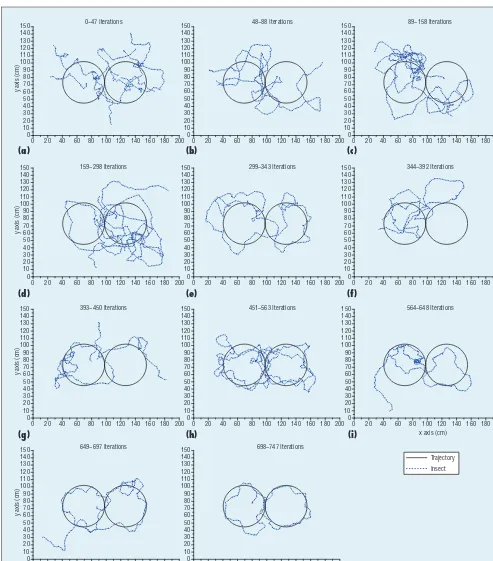

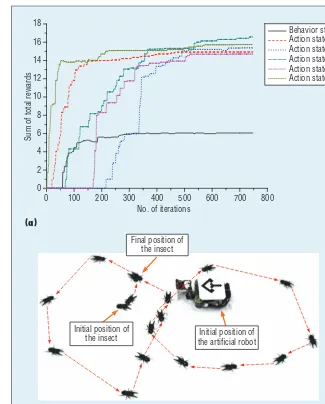

After we placed the insect and robot near the center of the experimental platform again, the experiment resumed. The robot tried to entice the insect toward the predefined trajectory. If it lost the insect, the robot tried to find it again and re-entice it, following the shortest distance between the insect and the predefined trajectory. Figure 4 shows the experimental results after learning through several iterations.

We performed 747 iterations of the experiment, for a total duration of 11,708 seconds (http://dcas.gist.ac.kr/bioinsect). At the beginning (Figures 4a through 4d), the insect’s moving path didn’t follow the predefined trajectory. As the number of iterations increased, the insect’s path became more similar to the shape of the given trajectory. As Figure 4k shows, we eventually obtained a path close to the predefined trajectory.

Figure 5b shows the captured image of the insect’s moving path corresponding to Figure 4k. The sum of the total rewards of each defined state set can be regarded as the amount of knowledge the robot has acquired, a criterion showing that the robot is learning. Here, the amount of knowledge means the total accumulated reward of each defined state set. As mentioned in the previous section, we defined several

Figure 4 panel titles: 0–47, 48–88, 89–158, 159–298, 299–343, 344–392, 393–450, …, 649–697, and 698–747 iterations.

the learning process, it updates the related set of states using Equation 1. The values increased as the iterations increased (see Figure 5a) and then converged stably to specific optimal values.
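The “amount of knowledge” is simply the sum of a state set’s Q-values. As a hedged illustration of the qualitative shape described for Figure 5a (a rise followed by convergence), the toy below applies the same kind of Q-learning update in repeated sweeps over a hypothetical five-state chain whose goal is worth a reward of 1. It uses the learning rate and discount factor from the Results section but does not reproduce the experimental data.

```python
def knowledge(q):
    """'Amount of knowledge' of one state set: the sum of its Q-values."""
    return sum(q.values())

def toy_run(n_states=5, sweeps=200, alpha=0.9, gamma=0.85):
    """Hypothetical chain task: moving right from the last state reaches a goal
    worth reward 1. Every state-action pair is updated once per sweep, and the
    knowledge value is recorded after each sweep."""
    q = {(s, a): 0.0 for s in range(n_states) for a in ("left", "right")}
    history = []
    for _ in range(sweeps):
        for s in range(n_states):
            for a in ("left", "right"):
                nxt = max(s - 1, 0) if a == "left" else s + 1
                if nxt == n_states:  # reached the goal: terminal, reward 1
                    target = 1.0
                else:
                    target = gamma * max(q[(nxt, "left")], q[(nxt, "right")])
                q[(s, a)] = (1 - alpha) * q[(s, a)] + alpha * target
        history.append(knowledge(q))
    return history

history = toy_run()
print(round(history[0], 3), round(history[-1], 3))
```

Because all values start at zero and rewards are non-negative, the sum can only grow toward its fixed point, which mirrors the rise-then-plateau pattern the text reports.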

Discussions

The total time (11,708 seconds) included not only time for learning but also supporting time, which comprises the time spent moving to each action position in every iteration and the interaction time needed to detect the insect’s motion. (In this experiment, the robot can spread the odor source for a maximum of 15 seconds.) The experiment’s total learning time wasn’t the crucial factor; the number of iterations was.

At the beginning, the insect’s moving path was random because the robot couldn’t yet entice it. However, we found that the robot continuously acquired knowledge during the experiment (iterations 1 to 298). Figure 5 shows that the sums of total rewards for the set of behavior states, the set of action state 1, and the set of action state 5 approached their optimal values; the other sets’ values increased as well. Later in the experiment (iterations 299 to 563), the robot kept learning, and the total rewards for action states 2, 3, and 4 reached near-optimal values (Figure 5a). As the iterations increased, the robot gradually learned how to entice the insect in various situations, with each path getting closer to the predefined trajectory until they began to match. We can therefore argue that a robot equipped with a camera as an eye can detect an insect autonomously and gradually learn to entice it along a predefined trajectory without human aid. Each moving path in the experiment shows how the robot learns by trial and error.

Our experimental results can’t be compared directly with other related works, because those works mainly focus on methods for controlling an insect’s or animal’s movement. Our study demonstrates not only control of the insect’s movement but also the robot’s improving control through its own learning progress via reinforcement learning.

During our experiments, the insect occasionally exhibited uncertain and complex behavior. For instance, while the robot was enticing the insect, it suddenly changed its moving direction but didn’t respond to the odor source, so we removed it from the experimental platform. Such behaviors made it difficult to proceed with the experiment. In addition, the insect’s reactivity to a specific odor source varied from day to day: one insect didn’t respond, even though it had responded well to the same odor source in previous experiments.

Unfortunately, we found no clues as to why these behaviors happened. One hypothesis is that insects rely on their antennae for sensing. To sense the odor source in the air, the insect might need to take a break to groom its antennae,34 and its reactivity to an odor source can differ according to the antennae’s condition. Another hypothesis is that an insect might come to characterize the odor source as “not valuable” from previous interactions. One insect species has an organ in its brain for learning and memory, which might interfere with experimental results.35 Several types of experiments using specific odor sources showed that the cockroach has an olfactory learning system composed of short- and long-term memory.36–38 Bees have a learning structure for foraging that uses visual and olfactory learning processes to distinguish odor, shape, and color.39,40 Crickets and flies have similar olfactory and visual memory structures.41,42 In addition, several studies have reported that beetles also have learning and memory organs in their brains.43,44 In our experiments, the insect didn’t receive an actual reward: an attractive odor source simply made the insect follow the robot. Therefore, it might have learned that the odor source itself was useless. In addition, the insect rarely responded to the robot’s movement. In our previous experiment,19 the insect didn’t respond to any robot or light source movement, so visual stimuli might not affect insect behavior.

Figure 5. (a) Sum of total rewards versus number of iterations (0 to 800). (b) The insect’s moving path, marking the initial positions of the insect and the artificial robot and the final position of the insect.

So far, we’ve only conducted our experiments in idealized situations, so we can’t yet claim that a robot can entice an insect in a real environment. The current robot moves on a flat surface, and the related algorithms consider only a 2D space. The interaction mechanism could be affected by weather and other unknown properties of a real environment, most likely resulting in more unexpected and complex behaviors. Conducting our experiment in a real environment is a goal of our future work.

Acknowledgments

This work was supported by the National Research Foundation of Korea under grant NRF-2013R1A2A2A01067449.

References

1. B. Argall et al., “A Survey of Robot Learning from Demonstration,” Robotics and Autonomous Systems, vol. 57, 2009, pp. 469–483.

2. P. Liu et al., “How to Train Your Robot: Teaching Service Robots to Reproduce Human Social Behavior,” Proc. 23rd IEEE Int’l Symp. Robot and Human Interactive Communication, 2014, pp. 961–968.

3. V. Gullapalli et al., “Acquiring Robot Skills via Reinforcement Learning,” IEEE Control Systems, vol. 14, no. 1, 1994, pp. 13–24.

4. S. Yamada, “Evolutionary Behavior Learning for Action-Based Environment Modeling by a Mobile Robot,” Applied Soft Computing, vol. 5, no. 2, 2005, pp. 245–257.

5. J.B. Lee, M. Likhachev, and R.C. Arkin, “Selection of Behavioral Parameters: Integration of Discontinuous Switching via Case-Based Reasoning with Continuous Adaptation via Learning Momentum,” Proc. IEEE Int’l Conf. Robotics and Automation, vol. 2, 2002, pp. 1275–1281.

6. W.M. Tsang et al., “Remote Control of a Cyborg Moth Using Carbon Nanotube-Enhanced Flexible Neuroprosthetic Probe,” Proc. IEEE 23rd Int’l Conf. Micro Electro Mechanical Systems, 2010, pp. 39–42.

7. A. Bozkurt et al., “Insect-Machine Interface Based Neurocybernetics,” IEEE Trans. Biomedical Eng., vol. 56, no. 6, 2009, pp. 1727–1733.

8. H. Sato et al., “Radio-Controlled Cyborg Beetles: A Radio-Frequency System for Insect Neural Flight Control,” Proc. IEEE 22nd Int’l Conf. Micro Electro Mechanical Systems, 2009, pp. 216–219.

9. R. Holzer and I. Shimoyama, “Locomotion Control of a Bio-robotic System via Electric Stimulation,” Proc. IEEE/RSJ Int’l Conf. Intelligent Robots and Systems, vol. 3, 1997, pp. 1514–1519.

10. Y. Kuwana et al., “Synthesis of the Pheromone-Oriented Behaviour of Silkworm Moths by a Mobile Robot with Moth Antennae as Pheromone Sensors,” Biosensors and Bioelectronics, vol. 14, no. 2, 1999, pp. 195–202.

11. J. Halloy et al., “Social Integration of Robots into Groups of Cockroaches to Control Self-Organized Choices,” Science, vol. 318, no. 5853, 2007, pp. 1155–1158.

12. K. Kawabata et al., “Active Interaction Utilizing Micro Mobile Robot and On-Line Data Gathering for Experiments in Cricket Pheromone Behavior,” Robotics and Autonomous Systems, vol. 61, no. 12, 2013, pp. 1529–1538.

13. R. Vaughan et al., “Experiments in Automatic Flock Control,” Robotics and Autonomous Systems, vol. 31, no. 1, 2000, pp. 109–117.

14. M. Böhlen, “A Robot in a Cage,” Proc. Int’l Symp. Computational Intelligence

THE AUTHORS

Ji-Hwan Son is a postdoctoral fellow of the Distributed Control and Autonomous Systems Laboratory at the Gwangju Institute of Science and Technology. His research interests include autonomous systems, reinforcement learning, multi-agent systems, and machine learning. Son has a PhD in mechatronics from the Gwangju Institute of Science and Technology.

Locomotion,” J. Royal Soc. Interface, vol. 9, no. 73, 2012, pp. 1856–1868.

16. Q. Shi et al., “Modulation of Rat Behaviour by Using a Rat-Like Robot,” Bioinspiration & Biomimetics, vol. 8, no. 4, 2013, article no. 046002.

17. H. Sato et al., “A Cyborg Beetle: Insect Flight Control through an Implantable, Tetherless Microsystem,” Proc. IEEE 21st Int’l Conf. Micro Electro Mechanical Systems, 2008, pp. 164–167.

18. S. Lee et al., “Remote Guidance of Untrained Turtles by Controlling Voluntary Instinct Behavior,” PLoS ONE, vol. 8, no. 4, 2013, article no. e61798.

19. J.-H. Son and H.S. Ahn, “Bio-insect and Artificial Robot Interaction: Learning Mechanism and Experiment,” Soft Computing, vol. 18, 2014, pp. 1127–1141.

20. J.-H. Son et al., “Bio-insect and Artificial Robot Interaction Using Cooperative Reinforcement Learning,” Applied Soft Computing J., vol. 25, 2014, pp. 322–335.

21. R.S. Sutton and A.G. Barto, Reinforcement Learning: An Introduction, MIT Press, 1998.

22. L.P. Kaelbling, M.L. Littman, and A.W. Moore, “Reinforcement Learning: A Survey,” arXiv preprint cs/9605103, 1996.

23. C.J. Watkins and P. Dayan, “Q-Learning,” Machine Learning, vol. 8, nos. 3–4, 1992, pp. 279–292.

24. H. Borotschnig et al., “Appearance-Based Active Object Recognition,” Image and Vision Computing, vol. 18, no. 9, 2000, pp. 715–727.

25. S. Lazebnik et al., “Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories,” Proc. IEEE Computer Soc. Conf. Computer Vision and Pattern Recognition, 2006, pp. 2169–2178.

27. D.G. Lowe, “Object Recognition from Local Scale-Invariant Features,” Proc. 7th IEEE Int’l Conf. Computer Vision, 1999, pp. 1150–1157.

28. D.G. Lowe, “Distinctive Image Features from Scale-Invariant Keypoints,” Int’l J. Computer Vision, vol. 60, no. 2, 2004, pp. 91–110.

29. K.E.A. van de Sande et al., “Evaluating Color Descriptors for Object and Scene Recognition,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 32, no. 9, 2010, pp. 1582–1596.

30. M. Ozuysal et al., “Fast Keypoint Recognition Using Random Ferns,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 32, no. 3, 2010, pp. 448–461.

31. S. Belongie et al., “Shape Matching and Object Recognition Using Shape Contexts,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 24, no. 4, 2002, pp. 509–522.

32. H. Bay et al., “Speeded-up Robust Features (SURF),” Computer Vision and Image Understanding, vol. 110, no. 3, 2008, pp. 346–359.

33. A. Torralba et al., “80 Million Tiny Images: A Large Data Set for Nonparametric Object and Scene Recognition,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 30, no. 11, 2008, pp. 1958–1970.

34. K. Böröczky et al., “Insects Groom Their Antennae to Enhance Olfactory Acuity,” Proc. Nat’l Academy of Sciences, vol. 110, no. 9, 2013, pp. 3615–3620.

35. Y. Li and N.J. Strausfeld, “Morphology and Sensory Modality of Mushroom Body Extrinsic Neurons in the Brain of the Cockroach, Periplaneta Americana,” J. Comparative Neurology, vol. 387, no. 4, 1997, pp. 631–650.

36. M. Sakura and M. Mizunami, “Olfactory Learning and Memory in the

in the American Cockroach Periplaneta Americana,” J. Experimental Biology, vol. 207, no. 2, 2004, pp. 369–375.

38. S. Decker, S. McConnaughey, and T.L. Page, “Circadian Regulation of Insect Olfactory Learning,” Proc. Nat’l Academy of Sciences, vol. 104, no. 40, 2007, pp. 15905–15910.

39. M. Hammer and R. Menzel, “Learning and Memory in the Honeybee,” J. Neuroscience, vol. 15, no. 3, 1995, pp. 1617–1630.

40. M. Giurfa, “Behavioral and Neural Analysis of Associative Learning in the Honeybee: A Taste from the Magic Well,” J. Comparative Physiology A, vol. 193, no. 8, 2007, pp. 801–824.

41. S. Scotto-Lomassese et al., “Suppression of Adult Neurogenesis Impairs Olfactory Learning and Memory in an Adult Insect,” J. Neuroscience, vol. 23, no. 28, 2003, pp. 9289–9296.

42. M. Heisenberg, “Mushroom Body Memoir: From Maps to Models,” Nature Reviews Neuroscience, vol. 4, no. 4, 2003, pp. 266–275.

43. M.C. Larsson, B.S. Hansson, and N.J. Strausfeld, “A Simple Mushroom Body in an African Scarabid Beetle,” J. Comparative Neurology, vol. 478, no. 3, 2004, pp. 219–232.

44. S.M. Farris and N.S. Roberts, “Coevolution of Generalist Feeding Ecologies and Gyrencephalic Mushroom Bodies in Insects,” Proc. Nat’l Academy of Sciences, vol. 102, no. 48, 2005, pp. 17394–17399.