It is useful at this stage to consider multiple-choice items in some detail, as they are undoubtedly one of the most widely used types of items in objective testing. The multiple-choice item is also one of the most difficult and time-consuming types of item to construct, and numerous poor multiple-choice tests exist as a result.

The results of this study are expected to help teachers determine the validity, reliability, item difficulty and item discrimination of the items in an English test.

Types of Tests

In a norm-referenced test, participants' mastery is assessed by comparing them with one another, using a norm calculated internally from the scores that all participants obtained on the same test. In connection with the implementation of a learning program, a pretest is held before or at the beginning of the program. As mentioned earlier, a posttest is held after or at the end of the program.19

They depend on identifying the correct key rather than on what the test takers produce themselves.

Criteria of Good Test

Concurrent and predictive validity are both concerned with the empirical relationship between test scores and a criterion, but they are distinguished by the time at which the criterion data are collected. If in your language teaching you attend to the practicality, reliability and validity of your tests, whether they are classroom tests covering part of a lesson, final exams or proficiency tests, then you are well on your way to making accurate judgments about the competence of the learners with whom you work.25

If a test is administered to the same candidates on different occasions (with no language practice taking place between these occasions), then to the extent that it produces different results, it is not reliable.

We know that the results would have been different if the test had been given the previous or the following day. The more similar the results would have been, the more reliable the test is said to be. The difficulty index (or facility value) of an item simply shows how easy or difficult the item in question proved to be in the test.
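When the same test is given twice (test-retest), the similarity of the two sets of results is commonly expressed as a correlation coefficient. The sketch below computes a Pearson correlation between two administrations; the function name `pearson_r` and the score lists are hypothetical illustrations, not data from this study.

```python
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary on both occasions")
    return sxy / sqrt(sxx * syy)

# Hypothetical totals for the same five candidates tested on two days
day_one = [40, 35, 28, 45, 30]
day_two = [42, 33, 30, 44, 29]
print(round(pearson_r(day_one, day_two), 2))  # 0.96
```

A value close to 1 means the candidates kept nearly the same relative standing on both occasions, which is what "more reliable" means here.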

Item discrimination (ID) is a statistic that indicates the degree to which an item separates students who did well from those who did poorly on the overall test. The process begins by identifying which students scored in the top group on the overall test and which students scored in the bottom group. The discrimination index (D) tells us whether the students who did well on the entire test tended to do well or poorly on each item in the test.
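The discrimination index is often computed as D = (U - L) / n, where U and L are the numbers of students in the top and bottom groups who answered the item correctly and n is the number of students in one group (the two groups are assumed to be the same size). A minimal sketch; the function name is hypothetical.

```python
def discrimination_index(upper_correct: int, lower_correct: int, group_size: int) -> float:
    """D = (U - L) / n for equal-sized top and bottom groups.

    D runs from -1.0 (only the bottom group answered correctly)
    to +1.0 (only the top group answered correctly)."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    if not (0 <= upper_correct <= group_size and 0 <= lower_correct <= group_size):
        raise ValueError("correct counts must lie between 0 and group_size")
    return (upper_correct - lower_correct) / group_size

# Hypothetical item: 10 of 13 top students and 4 of 13 bottom students correct
print(round(discrimination_index(10, 4, 13), 2))  # 0.46
```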

It is assumed that the total score on the test is a valid measure of the student's ability (i.e., good students tend to do well on the test as a whole and poor students do poorly). On this basis, the score on the whole test is accepted as the criterion, and it therefore becomes possible to compare the performance of the "good" students and the "bad" students on individual items.
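Because the total score serves as the criterion, the top and bottom groups can be formed by ranking students on their totals. The sketch below assumes a 0/1 score matrix (one row per student, one column per item) and takes a fixed fraction of students from each end. The 27% default is a common convention assumed here, not a figure from this study; ties at the cut-off are broken by the original row order. Function names are hypothetical.

```python
def split_groups(scores, fraction=0.27):
    """Return (upper, lower) row indices after ranking students by total score.

    `fraction` (assumed default 0.27) is the share of students in each group."""
    n = len(scores)
    k = max(1, round(n * fraction))
    if 2 * k > n:
        raise ValueError("fraction too large: upper and lower groups would overlap")
    # sorted() is stable, so tied totals keep their original order
    ranked = sorted(range(n), key=lambda i: sum(scores[i]), reverse=True)
    return ranked[:k], ranked[-k:]

def item_discrimination(scores, fraction=0.27):
    """D for every item: (correct in upper group - correct in lower group) / group size."""
    upper, lower = split_groups(scores, fraction)
    k = len(upper)
    n_items = len(scores[0])
    return [
        (sum(scores[i][j] for i in upper) - sum(scores[i][j] for i in lower)) / k
        for j in range(n_items)
    ]

# Hypothetical 4 students x 2 items, split into halves
scores = [[1, 1], [1, 0], [0, 1], [0, 0]]
print(item_discrimination(scores, fraction=0.5))  # [1.0, 0.0]
```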

Try-out Test

Item tryouts can take several different forms, from one-to-one interviews with students, through small-scale pilot tests, to large-scale field tests. As with other activities described in these guidelines, it may not be possible to implement every type of item tryout in a given testing program because of resource limitations. One-to-one interviews with students who have taken the items can provide very useful information.

Small-scale pilot tests can also provide useful information about how students respond to the items. In this data collection format, test developers administer the items to a larger group of students than is used in one-to-one interviews, and one-to-one debriefing is not typically performed. In large-scale field testing, test developers administer the items to a large, representative sample of students.

Because of the size and nature of the sample, statistics based on these responses are generally accurate indicators of how students may perform on the items in an operational administration. However, if the tryout items are administered separately from the scored items, student motivation may affect the accuracy of the results.

However, the main criticism of the multiple-choice item is that it often does not lend itself to testing language as communication. Most public tests treat five as the optimal number of alternatives, or options, for a multiple-choice item.

Previous Research

One previous study carried out an exploratory analysis of item difficulty, item discrimination, distractors and item reliability using ITEMAN version 3.0, chosen because the program is simple and easy to use. The input to the program consists of the question numbers, the answer key, the answer choices, the students' names, and the students' answers or test scores. That study found that the test had low validity, and its item difficulty analysis classified 82.5% of the items as easy.
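Distractor analysis starts from a tally of how many students chose each option of an item. The sketch below is a minimal, hypothetical version of that tally in plain Python; it is not ITEMAN's algorithm or input format, and the four-option default is an assumption (many public tests use five, which can be passed in).

```python
from collections import Counter

def option_counts(responses, options="ABCD"):
    """Count how many students chose each option of one item.

    `responses` holds one option letter per student; empty strings or None
    (omitted answers) are ignored. The "ABCD" default is an assumption."""
    counts = Counter(r for r in responses if r)
    return {opt: counts[opt] for opt in options}

def unused_distractors(responses, key, options="ABCD"):
    """Wrong options that no student chose, i.e. distractors that are not working."""
    counts = option_counts(responses, options)
    return [opt for opt in options if opt != key and counts[opt] == 0]

# Hypothetical answers of six students to one item whose key is "A"
answers = ["A", "B", "A", "C", "A", None]
print(option_counts(answers))            # {'A': 3, 'B': 1, 'C': 1, 'D': 0}
print(unused_distractors(answers, "A"))  # ['D']
```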

Another study is by Fitra Khoirul Anwar, titled “The Effectiveness of the English Item Test in the Odd Semester Final Test (Item Difficulty and Item Discrimination Level) in XI IPS English Student of SMA Bakti Ponorogo.” That study concluded that the test was a good test, neither too easy nor too difficult. The difference between that study and the present one is that the present study describes the formulas for validity, reliability, item difficulty and item discrimination. It shows how to calculate each of these values, using a computer program for validity and reliability, and calculating item difficulty and item discrimination manually without a computer program.

Theoretical Framework

For item discrimination, the top and bottom groups are sometimes referred to as "high" and "low" students.

RESEARCH METHOD

- Research Design

- Research Setting

- Population and Sample 1. Population

- Sample

- Technique of data collection

- Technique of Data Analysis

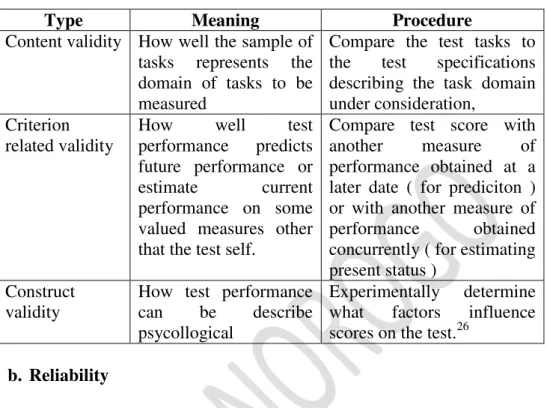

- Validity and Reliability a. Validity

From the definition above, the researcher concludes that the population is all the subjects to be studied. The population in this research is all the grade nine students at SMPN 2 Jetis Ponorogo in the 2014/2015 academic year, a total of 92 students.

Whether a sample is a good representation of the population depends very much on how closely the characteristics of the sample match those of the population. The data consist of the test questions, all the students' answers, and the answer key of the test given to the ninth-grade students. The test contains 50 objective items, and the respondents are 98 students. The steps of collecting data in this study are as follows.

Validity means the extent to which inferences from assessment results are appropriate, meaningful and useful in relation to the purpose of the assessment.42 A test is said to have validity if its results are consistent with the criterion, that is, if the test results parallel the criterion. In this research, the researcher used the Spearman-Brown (split-half) formula to measure the reliability of the test. The difficulty index (FV) is generally expressed as the fraction (or percentage) of students who answered the item correctly.

Expressed as a formula:

$$FV = \frac{R}{N}$$

where R is the number of students who answered the item correctly and N is the total number of students tested. Thus, if 20 of the 26 students tested got an item correct, that item would have a difficulty index (or facility value) of 0.77, or 77 percent.45
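Applied to a whole set of answer sheets, the facility value of each item (FV = R / N, with R the number of students answering correctly and N the number tested) is the mean of that item's column in a 0/1 score matrix. A minimal sketch with hypothetical names and data:

```python
def facility_values(scores):
    """FV = R / N for each item, where `scores` is a 0/1 matrix (students x items)."""
    n_students = len(scores)
    if n_students == 0:
        raise ValueError("no students")
    n_items = len(scores[0])
    return [sum(row[j] for row in scores) / n_students for j in range(n_items)]

# Hypothetical answers of five students to three items (1 = correct)
scores = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 1, 0],
    [1, 0, 0],
]
print(facility_values(scores))  # [0.8, 0.6, 0.2]
```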

RESEARCH RESULT

- Research Location

- Brief History of SMPN 2 Jetis

- The Vision and Mission of SMPN 2 Jetis Ponorogo

- Data Description

- Data Analysis 1. Validity

- Discussion and Interpretation

- Recommendation

The data analyzed by the researcher are the students' answer sheets. After the test was tried out with the students, their test results were obtained. An item whose difficulty index is greater than or equal to 0.3 and less than or equal to 0.7 is classified as a moderate item.

Based on the classification above, 34% of the items are too easy, 66% are of medium difficulty, and 0% are difficult. Table 4.6 lists the items that are too easy. In Table 4.7, the column for the number of students shows how many students answered each item correctly. Before classifying the items by discrimination, we need to determine the top and bottom groups.
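The classification and the percentage breakdown can be sketched as follows. The 0.3 to 0.7 "moderate" band comes from the text; treating values below 0.3 as difficult and above 0.7 as easy is an assumption consistent with it. The function names and FV list are hypothetical.

```python
from collections import Counter

def classify_fv(fv: float) -> str:
    """Moderate if 0.3 <= FV <= 0.7 (from the text); difficult/easy bands are assumed."""
    if fv < 0.3:
        return "difficult"
    if fv <= 0.7:
        return "moderate"
    return "easy"

def difficulty_breakdown(fvs):
    """Percentage of items in each difficulty category."""
    if not fvs:
        raise ValueError("no items")
    counts = Counter(classify_fv(fv) for fv in fvs)
    return {cat: 100 * counts[cat] / len(fvs) for cat in ("easy", "moderate", "difficult")}

# Hypothetical facility values for four items
print(difficulty_breakdown([0.8, 0.5, 0.45, 0.2]))
# {'easy': 25.0, 'moderate': 50.0, 'difficult': 25.0}
```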

The analysis in Table 4.10 showed that 52% of the items have fairly poor item discrimination and 48% have poor item discrimination. In addition, the test must be analyzed to determine the validity and reliability of its items. From the above explanation, it can be concluded that the test has low validity, because many items are of medium difficulty and many items are classified as having poor item discrimination.
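A similar tally can be made for the discrimination index. This section does not state the cut-off points behind "fairly poor" and "poor", so the bands below are hypothetical placeholders to be replaced with the ones actually used; the sketch only illustrates the counting logic.

```python
from collections import Counter

# HYPOTHETICAL bands (lower bound, label), highest first; not the study's cut-offs.
BANDS = ((0.40, "good"), (0.20, "fairly poor"))
LOWEST = "poor"

def classify_d(d: float, bands=BANDS, lowest=LOWEST) -> str:
    """Return the label of the first band whose lower bound D reaches."""
    for bound, label in bands:
        if d >= bound:
            return label
    return lowest

def discrimination_breakdown(ds, bands=BANDS, lowest=LOWEST):
    """Percentage of items in each (hypothetical) discrimination band."""
    if not ds:
        raise ValueError("no items")
    counts = Counter(classify_d(d, bands, lowest) for d in ds)
    labels = [label for _, label in bands] + [lowest]
    return {label: 100 * counts[label] / len(ds) for label in labels}

# Hypothetical D values for four items
print(discrimination_breakdown([0.25, 0.10, 0.30, -0.05]))
# {'good': 0.0, 'fairly poor': 50.0, 'poor': 50.0}
```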

The results showed that the test, which has low validity, also has low reliability. Finally, as teachers and test makers, we have to analyze the results of the exam and the feedback we receive from students and fellow teachers. The analysis shows that 52% of the items are classified as fairly poor items.

Based on the research results, the researcher offers some recommendations. The teacher who administered the mock test should analyze its items so that the next mock test gives better results, because the mock test is important. The teacher should also try out the test before the final test.