It is hereby confirmed that Britney Muk Yuen Kuan (ID No: 18ACB03450) has completed this final year project titled “Vandalism Video Analysis Employing Computer Vision Technique” under the supervision of Prof. I understand that the University will upload a softcopy of my final year project in PDF format to the UTAR Institutional Repository, which may be made available to the UTAR community and the public. I declare that this report entitled "VANDALISM VIDEO ANALYSIS EMPLOYING COMPUTER VISION TECHNIQUE" is my own work, except as cited in the references.

Introduction

- Problem Statement and Motivation

- Project Objectives

- Project Scope

- Impact, significance and contribution

- Background information

- Report Organisation

In addition, Figure 1.2 shows several examples of vandalism events from the dataset, such as graffiti, red paint splashes and glass breakage. The real-time automated vandalism detection surveillance system will take over the guard's duty of continuous monitoring and will also reduce the chance of missing anomalies because of guard fatigue. Image processing can be considered a form of digital signal processing and is not concerned with understanding the content of images.

Literature Review

Comprehensive Review

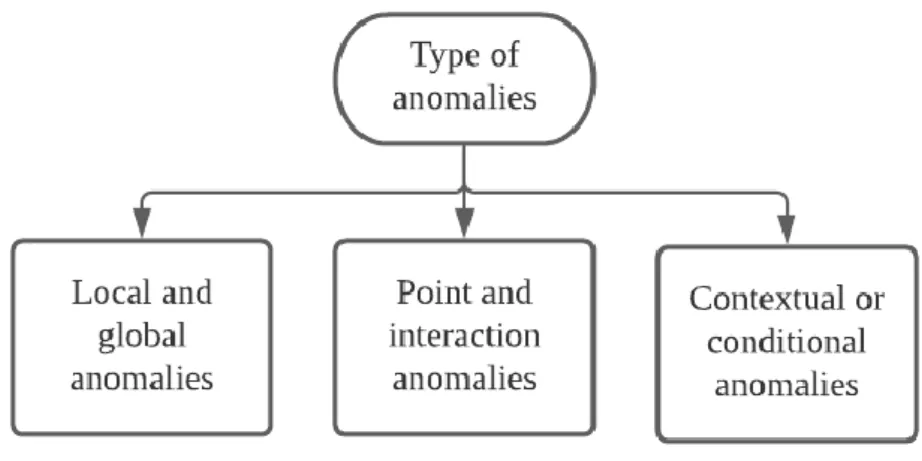

- Anomalies/Outliers

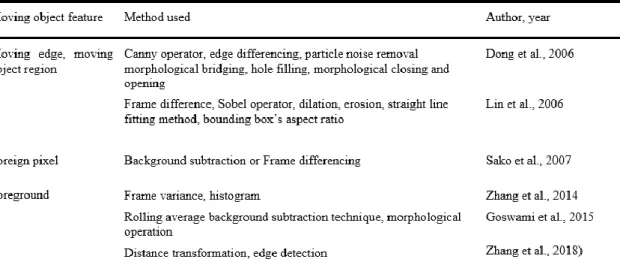

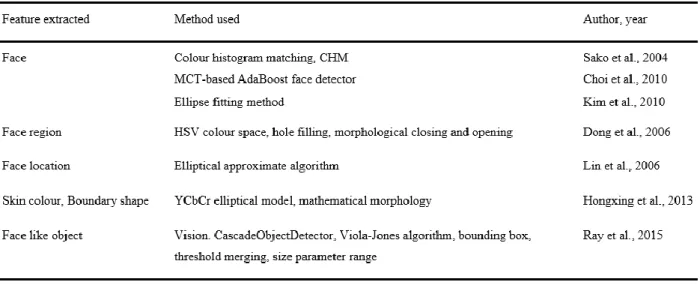

- Conventional Integrated Image Processing Methods

- Deep-Learning Based Methods for Video Anomaly Detection

- Comparative Analysis of Video Anomaly Detection Method

The state-of-the-art deep-learning-based methods for video anomaly detection can be categorized as shown in Figure 2.5. The hand-crafted, machine-learning and deep-neural-network-based video anomaly detection methods each have their own advantages and disadvantages. Which ML/DNN-based video anomaly detection technique to choose depends on the available datasets, the targeted application, time complexity and expected performance.

Automated Surveillance System

- Real-Time Detection of Suspicious Behaviours In Shopping Malls [9]

- Fast and Robust Occluded Face Detection in ATM Surveillance [13]

- A Study of Deep Convolutional Auto-Encoders for Anomaly Detection in Videos

- Summary of the Reviewed System

To detect suspicious behavior such as loitering, the human security officers would first pre-define a specific risk zone by marking a rectangular box. The system then examined the trajectories of the tracked individuals to check whether any of them had been loitering in the high-risk zone for an extended period of time.

As shown in Figure 2.10, the autoencoder consisted of two parts: the encoder and the decoder. The Canny edge detector [16] was applied to extract appearance features, while an advanced optical flow algorithm was used to extract motion between two consecutive images.
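The risk-zone check described for the shopping-mall system [9] can be sketched as follows. This is a minimal illustration and not the implementation from [9]. It assumes that a tracker (not shown) already supplies one centroid per frame for each person. The names `RiskZone` and `find_loiterers` and the `min_frames` threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) centroid of a tracked person in pixels


@dataclass
class RiskZone:
    """Axis-aligned rectangle marked by the security officer (pixel coordinates)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def contains(self, point: Point) -> bool:
        x, y = point
        return self.x1 <= x <= self.x2 and self.y1 <= y <= self.y2


def find_loiterers(tracks: Dict[int, List[Point]], zone: RiskZone, min_frames: int) -> List[int]:
    """Return IDs of tracks that stayed inside `zone` for at least `min_frames` consecutive frames."""
    loiterers = []
    for track_id, trajectory in tracks.items():
        run = longest = 0
        for point in trajectory:
            # Extend the current in-zone run, or reset it when the person leaves the zone.
            run = run + 1 if zone.contains(point) else 0
            longest = max(longest, run)
        if longest >= min_frames:
            loiterers.append(track_id)
    return loiterers
```

Take `zone = RiskZone(0, 0, 100, 100)` and `tracks = {1: [(50, 50)] * 30, 2: [(50, 50), (150, 150)] * 15}`. Then `find_loiterers(tracks, zone, min_frames=25)` returns `[1]`, because track 2 keeps leaving the zone.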

In the data preparation phase, relevant appearance and motion features were extracted from the video. These features and the frames were then combined into multiple scenarios (case studies) that characterized the input to the CAE. During the training phase, the CAE learned the signature of normal events. In summary, the proposed model successfully detected "abnormal events" that were not included in the training data.
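The idea of a CAE that "learned the signature of normal events" can be made concrete. A CAE trained only on normal footage reconstructs normal frames well and unusual frames poorly, so the per-frame reconstruction error can be turned into a score. The sketch below uses a common min-max-normalized regularity score. This scoring is an assumption for illustration, and the reviewed work may score frames differently. Frames are flattened to plain lists, and precomputed reconstructions stand in for the trained CAE.

```python
from typing import List, Sequence


def reconstruction_errors(frames: Sequence[Sequence[float]],
                          reconstructions: Sequence[Sequence[float]]) -> List[float]:
    """Squared L2 distance between each flattened frame and its CAE reconstruction."""
    return [sum((x - y) ** 2 for x, y in zip(frame, recon))
            for frame, recon in zip(frames, reconstructions)]


def regularity_scores(errors: Sequence[float]) -> List[float]:
    """Map errors to [0, 1]: 1 = most regular (normal), 0 = least regular (likely abnormal)."""
    lo, hi = min(errors), max(errors)
    if hi == lo:
        return [1.0] * len(errors)  # all frames equally well reconstructed
    return [1.0 - (e - lo) / (hi - lo) for e in errors]
```

Frames whose regularity score falls below a chosen threshold would then be reported as abnormal events.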

In multi-instance learning (MIL), the training samples were grouped into bags: a positive bag (anomalous) and a negative bag (normal). Each time segment of a video represented a single instance in the bag. After C3D features were extracted for each video segment, a fully connected neural network was trained with a new ranking loss function. This loss was computed between the highest-scoring instances in the positive and negative bags (shown in red). The highest anomaly score in the positive bag represented the segment most likely to be abnormal (a true positive). The highest anomaly score in the negative bag represented the segment that looked most abnormal but was actually normal (a potential false positive).
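The bag-level objective described above can be sketched as a hinge-style MIL ranking loss. This sketch assumes that the fully connected network has already produced one anomaly score per C3D segment. The smoothness and sparsity terms and their weights are optional extras often used with this kind of objective, not details confirmed by the reviewed paper, and the function name is hypothetical.

```python
from typing import Sequence


def mil_ranking_loss(
    pos_scores: Sequence[float],
    neg_scores: Sequence[float],
    lambda_smooth: float = 0.0,
    lambda_sparse: float = 0.0,
) -> float:
    """Hinge ranking loss between the top-scoring instances of a positive and a negative bag."""
    # The most anomalous segment of the anomalous video should outscore the
    # most anomalous-looking segment of the normal video by a margin of 1.
    hinge = max(0.0, 1.0 - max(pos_scores) + max(neg_scores))
    # Optional regularizers: neighbouring segments should have similar scores,
    # and only a few segments of an anomalous video should be anomalous.
    smoothness = sum((b - a) ** 2 for a, b in zip(pos_scores, pos_scores[1:]))
    sparsity = sum(pos_scores)
    return hinge + lambda_smooth * smoothness + lambda_sparse * sparsity
```

Only the maximum of each bag enters the hinge term, so the network never needs segment-level labels. It only needs to know which videos contain an anomaly.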

Their experimental results showed that their MIL approach significantly improved anomaly detection compared with state-of-the-art approaches.

![Figure 2.10 Architecture of the proposed CAE [15]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10248183.0/36.892.105.785.290.603/figure-architecture-proposed-cae.webp)

System Methodology/Approach

Real Case Scenario

- Scenario A

- Scenario B

- Scenario C

- Discussion

Design Specifications

- Methodologies and General Work Procedures

- Assumptions

The vandals must be captured by the camera on site so that the detector can pick up humans and suspicious behavior. The vandalism-prone objects must be within the camera's view so that significant background changes can be detected. The recorded damage or destruction of a vandalism-prone object must be large enough to indicate a vandalism event.

System Design

System Design / Overview

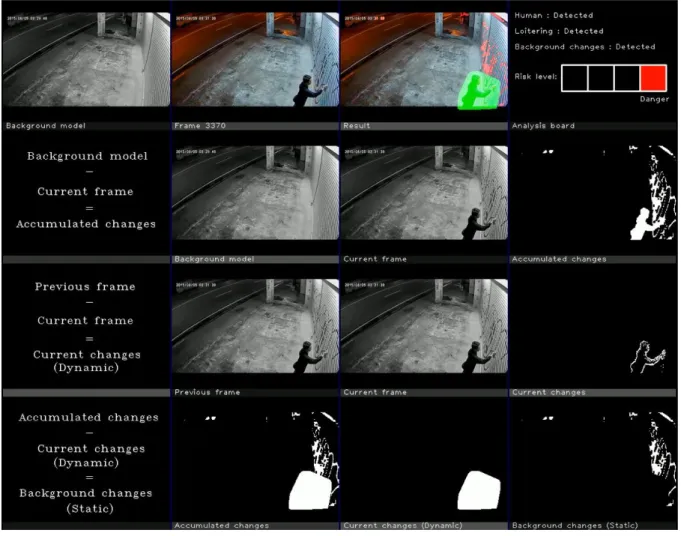

The image sequence passes through three modules:

- YOLO detection, which detects people.
- Loitering detection, which detects suspicious behavior (i.e. loitering).
- Significant (static) background changes detection, which detects changes that may represent permanent damage caused by vandals.

Finally, the risk level for detected suspicious behavior and vandalism events is displayed on the analysis board. The following subsections explain initialization and pre-processing, YOLO detection, loitering detection, significant (static) background changes detection and background estimation in detail.

System Components Specifications

- Initialization and Pre-processing

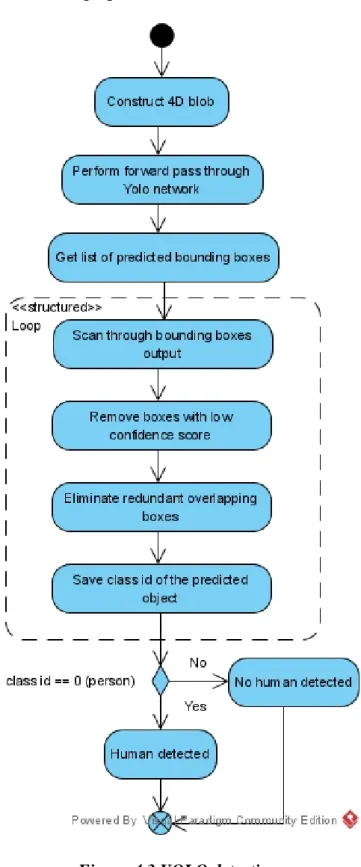

- YOLO Detection [19]

- Loitering Detection

- Significant Background Changes Detection

- Background Updating

The input frame to the neural network must be in a specific format called a blob, so the blobFromImage function will be used to convert the input frame into a blob. After the forward pass, the output boxes will be scanned in a loop to remove low-confidence and redundant overlapping boxes. Finally, the class IDs of the high-confidence detections will be extracted for later decision making. For example, the class ID of the "person" object is 0.
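The post-processing loop described above can be sketched in plain Python. The sketch starts after the forward pass and assumes the raw output has already been parsed into `(x, y, w, h)` boxes with one score per class. The 0.5 confidence and 0.4 overlap thresholds are hypothetical values, and the helper names are illustrative. OpenCV's `cv2.dnn.NMSBoxes` provides a library version of the suppression step.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height) in pixels
Detection = Tuple[float, int, Box]        # (confidence, class_id, box)

PERSON_CLASS_ID = 0  # class ID of "person", as stated in the text


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: Sequence[Box], class_scores: Sequence[Sequence[float]],
                      conf_threshold: float = 0.5, nms_threshold: float = 0.4) -> List[Detection]:
    """Drop low-confidence boxes, then suppress overlapping boxes (class-agnostic NMS)."""
    candidates: List[Detection] = []
    for box, scores in zip(boxes, class_scores):
        class_id = max(range(len(scores)), key=lambda i: scores[i])
        confidence = scores[class_id]
        if confidence >= conf_threshold:
            candidates.append((confidence, class_id, box))
    # Greedy NMS: keep the most confident box, discard boxes that overlap a kept box too much.
    candidates.sort(key=lambda d: d[0], reverse=True)
    kept: List[Detection] = []
    for det in candidates:
        if all(iou(det[2], other[2]) < nms_threshold for other in kept):
            kept.append(det)
    return kept


def person_detected(detections: Sequence[Detection]) -> bool:
    """True if any surviving detection is a person."""
    return any(class_id == PERSON_CLASS_ID for _, class_id, _ in detections)
```

Consider the boxes `(10, 10, 50, 100)` and `(12, 12, 50, 100)`. Their IoU is about 0.89, so only the more confident of the two survives.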

For each frame in which a person is detected, the counter variable will be decremented by one. When it falls below zero, the loitering flag will be raised and the risk level will change from "alert" to "warning". However, if the next frame shows no human, the variable will be reset to 100 and the loitering flag will be cleared.

A foreground mask (accumulated changes) can then be created from the difference between the background model and the current frame.
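The loitering countdown described above can be sketched as a small stateful class. The initial value of 100 comes from the text. The class and method names are hypothetical, and `person_present` is assumed to be the result of the YOLO person check for the current frame.

```python
LOITER_LIMIT = 100  # initial counter value stated in the text


class LoiteringDetector:
    """Frame-by-frame countdown that raises a loitering flag when a person stays too long."""

    def __init__(self, limit: int = LOITER_LIMIT) -> None:
        self.limit = limit
        self.counter = limit
        self.loitering = False

    def update(self, person_present: bool) -> bool:
        if person_present:
            # One more frame with a person in view.
            self.counter -= 1
            if self.counter < 0:
                self.loitering = True  # risk level moves from "alert" to "warning"
        else:
            # Nobody in view: restart the countdown and clear the flag.
            self.counter = self.limit
            self.loitering = False
        return self.loitering
```

With the default limit, the flag is raised on the 101st consecutive frame that contains a person.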

After processing, the background changes can be extracted from the processed accumulated changes and the processed current changes. The decision to raise the warning for significant static changes is based on a threshold comparison. Once the static changes (non-zero pixels) exceed the threshold defined in minWpixel, the risk level "danger" will be flagged and an alert will be raised to prevent further damage. minWpixel is the product of the video frame resolution and a percentage. In the proposed method, background estimation will be applied after the vandalism event has persisted for a period of time.
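Below is a minimal NumPy sketch of the static-change extraction and the minWpixel test, assuming grayscale uint8 frames. The difference threshold of 30 and the 1% default percentage are hypothetical values. The processing applied to the changes (e.g. morphological filtering) is omitted. Treating static changes as "accumulated but not instantaneous" is an assumption, chosen to match the dynamic/static distinction used in the result display.

```python
import numpy as np


def static_changes(background: np.ndarray, previous: np.ndarray, current: np.ndarray,
                   diff_threshold: int = 30) -> np.ndarray:
    """Boolean mask of pixels that differ from the background but are not moving."""
    # Cast to a signed type so uint8 subtraction cannot wrap around.
    bg = background.astype(np.int16)
    prev = previous.astype(np.int16)
    cur = current.astype(np.int16)
    # Accumulated changes: everything that differs from the background model.
    accumulated = np.abs(cur - bg) > diff_threshold
    # Instantaneous changes: motion between two consecutive frames.
    instantaneous = np.abs(cur - prev) > diff_threshold
    # Static changes: changed with respect to the background, but not currently moving.
    return accumulated & ~instantaneous


def is_danger(static_mask: np.ndarray, percentage: float = 0.01) -> bool:
    """Flag "danger" when static pixels exceed minWpixel = width * height * percentage."""
    height, width = static_mask.shape[:2]
    min_w_pixel = int(width * height * percentage)
    return int(np.count_nonzero(static_mask)) > min_w_pixel
```

A moving person shows up in both masks and is therefore excluded. Debris that has stopped moving shows up only in the accumulated mask and counts towards minWpixel.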

As Figure 4.6 shows, 25 samples will be randomly selected from the past 300 recorded frames, and the median BGR value of each pixel will be calculated over these 25 frames.
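The median background estimate can be sketched with NumPy as below. The sample counts (25 of the past 300 frames) follow the text. Keeping the history in a `collections.deque(maxlen=300)` and the function name `estimate_background` are assumptions. The median ignores any value that appears in fewer than half of the samples, so a person who passes briefly through the scene does not leak into the background.

```python
import random
from typing import Optional, Sequence

import numpy as np

HISTORY_LENGTH = 300  # past frames kept, as stated in the text
N_SAMPLES = 25        # randomly selected frames, as stated in the text


def estimate_background(history: Sequence[np.ndarray], n_samples: int = N_SAMPLES,
                        seed: Optional[int] = None) -> np.ndarray:
    """Per-pixel, per-channel median of randomly sampled BGR frames."""
    rng = random.Random(seed)
    frames = list(history)
    # Sample without replacement; fall back to all frames if fewer are stored.
    samples = rng.sample(frames, min(n_samples, len(frames)))
    stack = np.stack(samples, axis=0)  # shape: (n, H, W, 3)
    return np.median(stack, axis=0).astype(np.uint8)
```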

System Implementation

Hardware Setup

Software Setup

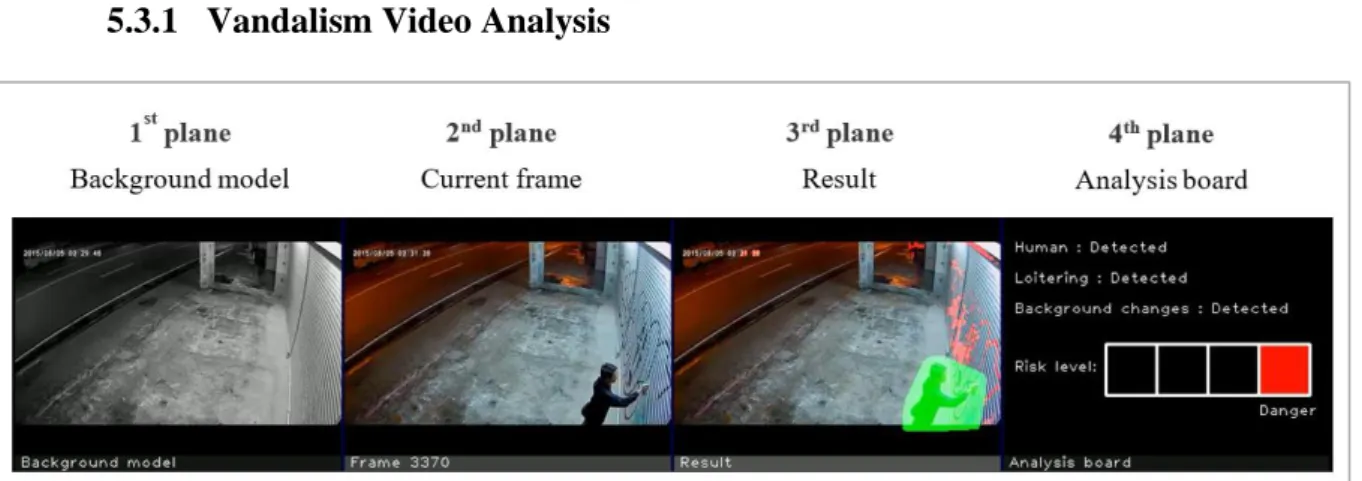

- Vandalism Video Analysis

- Detailed Window (Significant background changes detection)

The monitoring analytics interface shown in Figure 5.1 displays the output generated by the vandalism detection method. Green pixels in the result plane are instantaneous (dynamic) changes that represent motion. Red pixels are the extracted (static) background changes, which represent permanent damage from vandalism and can be easily recognized by a human.
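The color coding of the result plane can be reproduced with a simple mask overlay. This sketch assumes boolean dynamic and static masks with the same height and width as the BGR frame. Drawing static pixels last, so that damage stays visible where both masks overlap, is a design choice that the text does not specify.

```python
import numpy as np

GREEN = (0, 255, 0)  # BGR: instantaneous (dynamic) changes
RED = (0, 0, 255)    # BGR: extracted static background changes


def render_result(frame: np.ndarray, dynamic_mask: np.ndarray, static_mask: np.ndarray) -> np.ndarray:
    """Return a copy of a BGR frame with dynamic pixels in green and static pixels in red."""
    out = frame.copy()
    out[dynamic_mask] = GREEN
    out[static_mask] = RED  # drawn last, so damage stays visible where the masks overlap
    return out
```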

The analyzed risk level is classified into four phases: Safe, Alert, Warning and Danger. The risk level remains in the safe phase when no human or vandal is detected and there is no significant destruction caused by vandalism. The risk level rises to the alert phase when the YOLO algorithm detects a human in the scene.

If the person stays in the scene or lingers around a specific area for too long, the warning phase is entered, because such behavior is considered suspicious and may indicate potential vandalism. Finally, suppose the vandal starts to damage public or private property. The system enters the danger phase once the destruction is significant enough to be captured in the scene and trigger the vandalism flag. The large window shown in Figure 5.4 is dedicated to the significant background changes detection approach explained in Chapter 4.2.4. This window shows each step of extracting the (static) background changes that represent the visible damage caused by the act of vandalism.
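The four risk phases can be expressed as a priority rule, sketched below. The ordering (danger over warning over alert over safe) is inferred from the phase descriptions above. The boolean inputs stand in for the outputs of the YOLO, loitering and background-change modules, and the names are hypothetical.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    SAFE = 0
    ALERT = 1
    WARNING = 2
    DANGER = 3


def risk_level(person_detected: bool, loitering: bool, significant_change: bool) -> RiskLevel:
    """Pick the highest applicable phase; the priority order is an assumption."""
    if significant_change:   # static damage exceeded minWpixel
        return RiskLevel.DANGER
    if loitering:            # person stayed in the scene too long
        return RiskLevel.WARNING
    if person_detected:      # YOLO found a person
        return RiskLevel.ALERT
    return RiskLevel.SAFE
```

With this rule, damage keeps the level at "danger" even after the vandal leaves the camera's view.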

System Evaluation and Discussion

System Testing and Result

- Scenario A

- Scenario B

- Scenario C

- Scenario D

- Scenario E

In Figure 6.1 a), the system takes the first frame as the background model. Since no human or vandalism is present, the risk level remains "safe". As shown in Figure 6.1 c), the risk level is then raised to "warning" when the man stays at the site for too long. In Figure 6.2 b), the risk level rises to "alert" when the man runs into the scene, showing that human detection works.

As shown in Figure 6.2 c), the loitering status is flagged after the man has remained in the scene for a period of time. In Figure 6.2 d), the system detects the significant background changes, i.e. it extracts and locates the vase fragments, and immediately raises the "danger" alarm. In Figure 6.3 b), the risk level rises to "alert" for human detection when the young man walks into the scene.

After the man has been on site for a while, the loitering status is flagged and the risk level rises to "warning", as shown in Figure 6.3 c). In Figure 6.4 c), the man leaves the camera's view and can no longer be captured. This is due to the poor placement of the security camera. In Figure 6.5 b), the risk level rises to "alert" for human detection when the man enters the scene.

After the man has stayed in the scene for a while, the loitering state is flagged and the risk level increases to "warning", as shown in Figure 6.5 c).

Project Challenges

Objectives Evaluation

Conclusion and Recommendation

Conclusion

Recommendation

Liu, “A Bayesian discriminative feature method for face detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol.

Lopes, “A study of deep convolutional auto-encoders for anomaly detection in videos,” Pattern Recognition Letters, vol.

Canny, “A computational approach to edge detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol.

Shah, “Tube Convolutional Neural Network (T-CNN) for action detection in videos,” IEEE International Conference on Computer Vision, pp.

Schmidhuber, “Stacked convolutional auto-encoders for hierarchical feature extraction,” International Conference on Artificial Neural Networks, pp.

Changed the terminology used in static change detection and improved the result interface (demo) for better understanding.

Handle false alarms caused by changes in light intensity (e.g. car headlights) by using a color space transformation and limiting the V ("value") channel. Enhance and stabilize the system with histogram equalization to reduce the effect of sudden light or illumination changes.
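The histogram-equalization recommendation can be illustrated as follows. The sketch equalizes a single 8-bit channel with NumPy and applies the result to the V channel of an HSV frame. It assumes the frame has already been converted with OpenCV's `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`. In practice `cv2.equalizeHist` performs a comparable 8-bit equalization, and the function names here are hypothetical.

```python
import numpy as np


def equalize_hist(channel: np.ndarray) -> np.ndarray:
    """Histogram-equalize a single uint8 channel via its cumulative distribution."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # count of the darkest occupied intensity
    total = channel.size
    if total == cdf_min:
        return channel.copy()  # single-intensity image: nothing to stretch
    # Map the cumulative distribution onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]


def equalize_value_channel(hsv: np.ndarray) -> np.ndarray:
    """Equalize only the V (value) channel of an HSV frame, leaving hue and saturation."""
    out = hsv.copy()
    out[..., 2] = equalize_hist(hsv[..., 2])
    return out
```

Equalizing only V spreads the brightness distribution without shifting colors, which is why the color space transformation comes first.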


![Figure 2.4 Fundamental image processing steps [5]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10248183.0/24.892.99.750.114.436/figure-fundamental-image-processing-steps.webp)

![Table 2.3 Studies on facial component detection, adapted from [5]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10248183.0/26.892.130.789.179.506/table-studies-facial-component-detection-adapted.webp)

![Table 2.5 Studies on decision making, adapted from [5]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10248183.0/27.892.142.818.467.848/table-studies-decision-making-adapted.webp)

![Figure 2.5 Classification of deep learning-based methods for the detection and localization of video anomalies [7]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10248183.0/28.892.113.783.290.518/figure-classification-learning-based-methods-detection-localization-anomalies.webp)