Background

Problem Statements

Aims and Objectives

Fields such as science, medicine, business and mathematics use optimization systems to improve their solutions. A particle system is mainly used to optimize a solution within the search space of a problem: the particles move through the space to find the best solution to that problem. Several parameters must be defined to optimize a problem, such as the lower bound, the upper bound, the size of the cluster, the number of clustering iterations and the clustering speed.

Optimization algorithms

Genetic Algorithm (GA)

A crossover operator propagates the traits of good survivors from the current population to a future population, replacing points that were lost, which should result in a better optimal value. Furthermore, this step enables a global search within the design space and prevents the algorithm from getting trapped in local minima. The particular GA implemented in the related study was partly in line with empirical studies that recommend combining selection with a 50% uniform crossover probability.
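The uniform crossover mentioned above can be illustrated with a minimal sketch. It assumes real-valued or binary chromosomes stored as Python lists; the function name `uniform_crossover` and the parameter `swap_prob` are hypothetical names chosen for illustration and are not taken from the related study.

```python
import random


def uniform_crossover(parent_a, parent_b, swap_prob=0.5, rng=None):
    """Return two children built by swapping each gene with probability swap_prob.

    Illustrative sketch only: swap_prob=0.5 mirrors the 50% uniform
    crossover probability mentioned in the text.
    """
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same length")
    rng = rng or random.Random()
    child_a, child_b = list(parent_a), list(parent_b)
    for i in range(len(child_a)):
        # Each gene position is exchanged independently of the others.
        if rng.random() < swap_prob:
            child_a[i], child_b[i] = child_b[i], child_a[i]
    return child_a, child_b
```

Because every position is treated independently, each child receives on average half of its genes from each parent when `swap_prob` is 0.5.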

Simulated Annealing (SA)

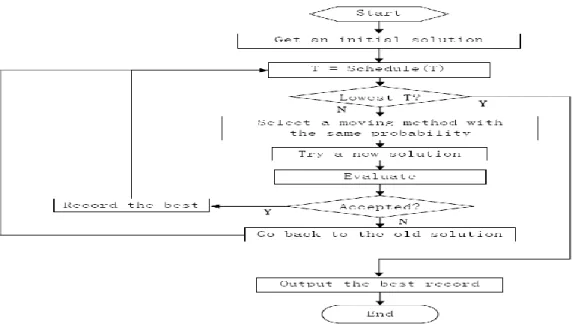

However, as the energy state naturally decreases, the probability of moving to a higher energy state is reduced. The new optimum point obtained is then compared with the initial point to see whether it is better. For minimization problems, if the optimum value decreases, the new point is accepted and the algorithm continues to search for a better optimum.

A higher value of the objective function can also be accepted, with a probability determined by the Metropolis criterion. By accepting points with higher objective values, the algorithm is able to escape local optima. As the algorithm proceeds, the step length becomes shorter and the search closes in on the final solution.

The Metropolis criterion uses two user-defined parameters, T, the temperature, and RT, the temperature reduction factor, to determine the probability of accepting a higher value of the objective function.
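The acceptance rule can be sketched as follows for a minimization problem. This is a minimal illustration that assumes the usual exponential form of the Metropolis criterion and a geometric cooling schedule in which the temperature is multiplied by RT; the function names are hypothetical.

```python
import math
import random


def acceptance_probability(f_current, f_candidate, temperature):
    """Probability of accepting the candidate (minimization).

    Assumed form: improvements are always accepted, while a worse
    candidate is accepted with probability exp(-(f_candidate - f_current) / T).
    """
    delta = f_candidate - f_current
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)


def metropolis_accept(f_current, f_candidate, temperature, rng=random):
    """Draw a uniform random number and apply the acceptance probability."""
    return rng.random() < acceptance_probability(f_current, f_candidate, temperature)


def reduce_temperature(temperature, rt):
    """Geometric cooling (assumed): T_new = RT * T, with 0 < RT < 1."""
    return rt * temperature
```

At a high temperature almost any uphill move is accepted, and as T is reduced the probability of accepting a worse point shrinks toward zero.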

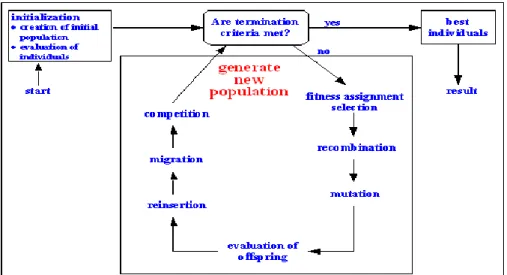

Evolutionary Algorithm (EA)

Particle Swarm Optimization (PSO)

Swarm Intelligence

Many animals interact with each other through swarm intelligence. For example, when birds flock together, they interact without colliding with or crossing each other, because each bird controls itself within the search space. Because of these characteristics, it is possible to design swarm intelligence systems that are scalable, parallel and fault tolerant.

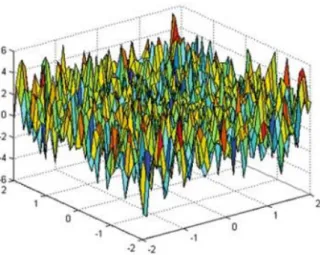

Unique PSO

Due to the uniform global optimum, there is no need for hand calculation. Optimizing a function by hand is complicated and takes a long time. Kennedy and Eberhart introduced the PSO method to find the global optimum of a function.

PSO Algorithm Flowchart

Optimization of Rosenbrock function

Optimization of Matyas function

Weight Minimization of Speed Reducer (WMSR)

Weight Minimization of Pressure Vessel (WMPV)

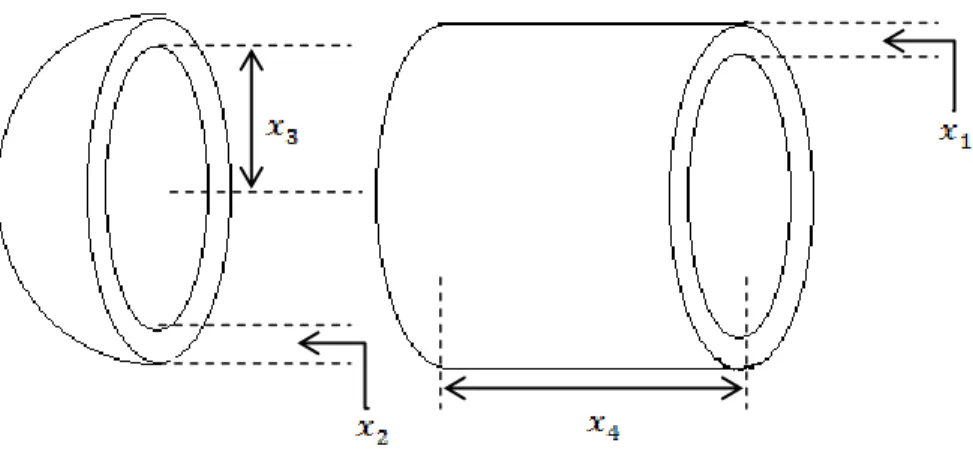

There are four design variables: the thickness of the shell, the thickness of the head, the inner radius of the cylindrical tube, and the length of the cylindrical section of the tube.
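For reference, the pressure vessel benchmark is commonly stated in the optimization literature with the objective and constraints sketched below. This is an assumption about the formulation, since the symbols and equations are not reproduced in the text; the names `x1` to `x4` (shell thickness, head thickness, inner radius, cylinder length) and the function names are chosen here for illustration.

```python
import math


def pressure_vessel_objective(x):
    """Objective of the commonly cited pressure vessel benchmark (assumed form)."""
    x1, x2, x3, x4 = x  # shell thickness, head thickness, inner radius, length
    return (0.6224 * x1 * x3 * x4
            + 1.7781 * x2 * x3 ** 2
            + 3.1661 * x1 ** 2 * x4
            + 19.84 * x1 ** 2 * x3)


def pressure_vessel_constraints(x):
    """Constraint values g_i(x); a design is feasible when every g_i <= 0."""
    x1, x2, x3, x4 = x
    return [
        -x1 + 0.0193 * x3,
        -x2 + 0.00954 * x3,
        -math.pi * x3 ** 2 * x4 - (4.0 / 3.0) * math.pi * x3 ** 3 + 1296000.0,
        x4 - 240.0,
    ]


def is_feasible(x):
    return all(g <= 0.0 for g in pressure_vessel_constraints(x))
```

A constrained PSO run would typically combine the two functions, for example by adding a penalty to the objective whenever `is_feasible` returns `False`; the penalty scheme used in the study is not specified here.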

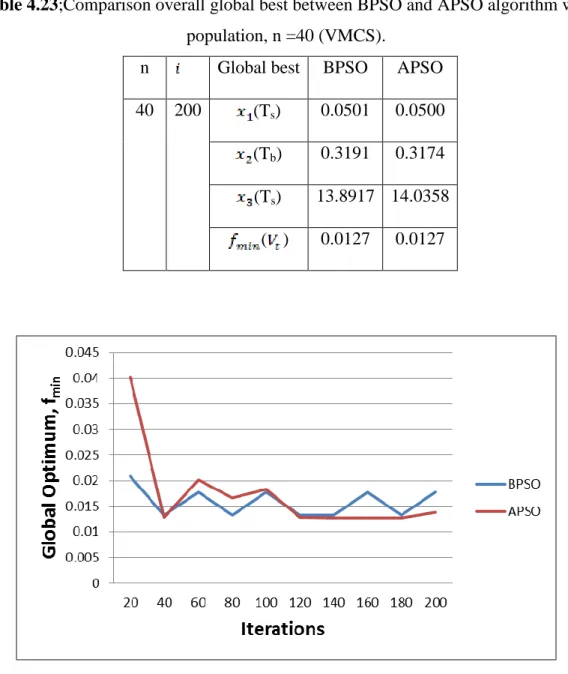

Volume Minimization of Compression Spring (VMCS)

There are three design variables: the number of active coils of the spring, the winding diameter, and the wire diameter.
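The objective of the compression spring benchmark is commonly written as the product sketched below. This form is an assumption taken from the standard statement of the benchmark, not from the text, and the argument names are hypothetical; the constraints of the problem are omitted from the sketch.

```python
def spring_objective(wire_diameter, coil_diameter, active_coils):
    """Assumed benchmark objective: (N + 2) * D * d**2.

    d = wire diameter, D = winding (mean coil) diameter,
    N = number of active coils.
    """
    return (active_coils + 2.0) * coil_diameter * wire_diameter ** 2
```

With the design often reported in the literature (d near 0.0517, D near 0.357, N near 11.3), this expression evaluates to roughly 0.0127, which is of the same order as the optimum of about 0.013 reported later in this document.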

Particle Swarm Optimization (PSO) Algorithm

Basic Particle Swarm Optimization (BPSO)

The development of the algorithm was inspired by social behavior in nature, such as the flocking of birds. The swarming behavior is determined by the velocity update equations, described as follows. In this paper, to improve convergence speed, particle randomness was reduced as the iterations progressed by applying reduction factors within the range 0 to 1.
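A minimal sketch of a basic PSO loop is given below to make the velocity update concrete. It assumes the widely used form in which each velocity combines an inertia term, a pull toward the particle's own best position and a pull toward the global best. The parameter names and default values (`inertia`, `c1`, `c2`) are illustrative assumptions and are not the settings used in this study.

```python
import random


def bpso_minimize(objective, lower, upper, n_particles=20, n_iterations=100,
                  inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic PSO sketch: returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(lower)
    positions = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in positions]              # individual best positions
    pbest_val = [objective(p) for p in positions]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # global best so far

    for _ in range(n_iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity update: inertia + individual best + global best.
                velocities[i][d] = (inertia * velocities[i][d]
                                    + c1 * r1 * (pbest[i][d] - positions[i][d])
                                    + c2 * r2 * (gbest[d] - positions[i][d]))
                # Position update, clipped to the lower and upper bounds.
                new_x = positions[i][d] + velocities[i][d]
                positions[i][d] = min(max(new_x, lower[d]), upper[d])
            value = objective(positions[i])
            if value < pbest_val[i]:
                pbest[i], pbest_val[i] = positions[i][:], value
                if value < gbest_val:
                    gbest, gbest_val = positions[i][:], value
    return gbest, gbest_val
```

For example, `bpso_minimize(lambda p: sum(v * v for v in p), [-5, -5], [5, 5])` searches a two-dimensional sphere function within the bounds given by the lower and upper limits.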

Accelerated PSO (APSO)

The recorded results include the relevant parameters, such as the number of iterations and the population size, together with the fixed values of the confidence factor and the swarm confidence factor. The BPSO and APSO results are also compared in this chapter for each of the problems.

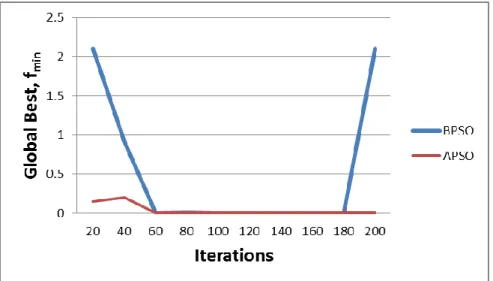

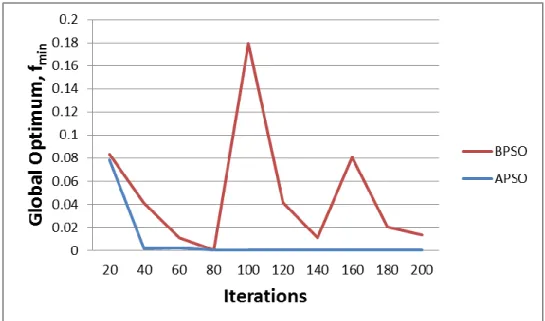

Optimization of Rosenbrock function

Overall, the results show that the global optimum, fmin, was reached more quickly within the 200 iterations as the population size increased, since the population size is the number of particles swarming across the search space of the problem toward the optimal point. As the population grew, convergence with BPSO accelerated more than convergence with APSO. This unstable behavior can be explained by the fact that BPSO uses both the current global best and the individual best, which requires more particles than APSO, which uses only the global best to find the optimal point.
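The difference between the two update rules can be seen in a sketch of an accelerated PSO loop. It assumes the commonly described APSO position update, in which each particle moves toward the global best and receives a random perturbation that shrinks over the iterations; no velocity and no individual best are stored. The parameter names and default values (`alpha0`, `beta`, `gamma`) are illustrative assumptions, not the settings used in this study.

```python
import random


def apso_minimize(objective, lower, upper, n_particles=20, n_iterations=100,
                  alpha0=0.5, beta=0.5, gamma=0.9, seed=0):
    """Accelerated PSO sketch using only the global best."""
    rng = random.Random(seed)
    dim = len(lower)
    positions = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
                 for _ in range(n_particles)]
    values = [objective(p) for p in positions]
    g = min(range(n_particles), key=values.__getitem__)
    gbest, gbest_val = positions[g][:], values[g]

    for t in range(n_iterations):
        # Randomness is reduced as iterations progress (0 < gamma < 1).
        alpha = alpha0 * gamma ** t
        for i in range(n_particles):
            for d in range(dim):
                # Noise is scaled by the width of the search range.
                noise = alpha * rng.gauss(0.0, 1.0) * (upper[d] - lower[d])
                new_x = (1.0 - beta) * positions[i][d] + beta * gbest[d] + noise
                positions[i][d] = min(max(new_x, lower[d]), upper[d])
            value = objective(positions[i])
            if value < gbest_val:
                gbest, gbest_val = positions[i][:], value
    return gbest, gbest_val
```

Compared with a basic PSO loop, this sketch keeps one shared best point instead of one best point per particle, which is the property the discussion above relies on.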

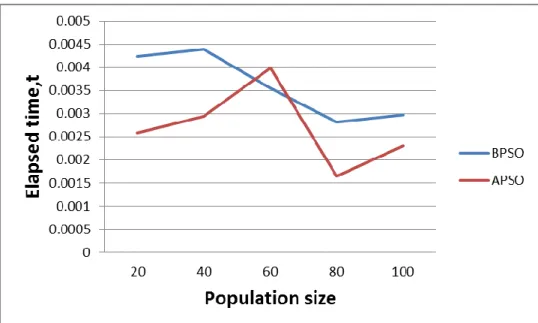

The small changes in elapsed time for both algorithms may be due to the low complexity of the fitness function.
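To illustrate how cheap the fitness function is to evaluate, the Rosenbrock function can be written in a few arithmetic operations. The sketch assumes the standard two-variable form with coefficients 1 and 100, whose global minimum is 0 at the point (1, 1); the study may have used a different dimension or parameterization.

```python
def rosenbrock(point):
    """Two-variable Rosenbrock function (assumed standard form)."""
    x, y = point
    return (1.0 - x) ** 2 + 100.0 * (y - x ** 2) ** 2
```

Each evaluation costs only a handful of multiplications and additions, so the elapsed time is dominated by the swarm bookkeeping rather than by the function itself.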

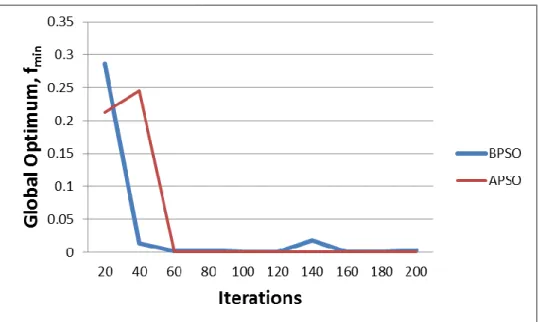

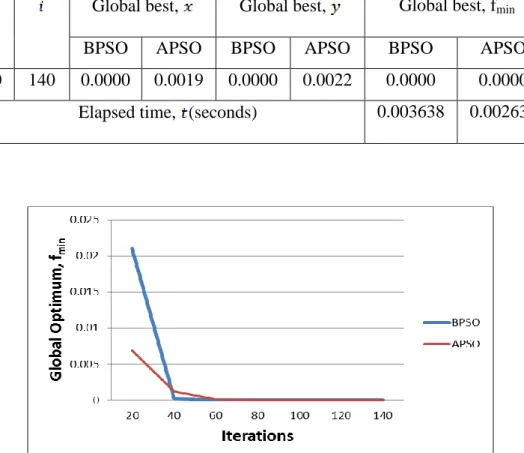

Optimization of Matyas function

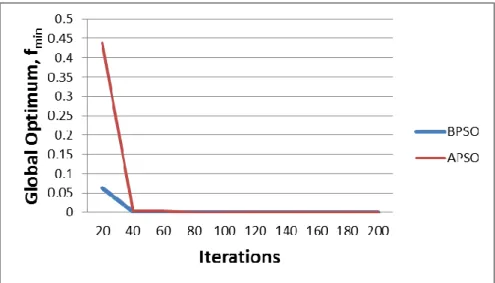

In this optimization, BPSO converges to the optimal point faster than APSO, even with population sizes of 20 and 40. In Figure 4.6, the exact value of the optimal point, 0, is reached at the 40th iteration using BPSO, which is faster than APSO, which reaches the optimal point at the 60th iteration. In Figure 4.7, BPSO converges to the optimal point at the 20th iteration and remains constant until the end of the iterations, while APSO converges at the 40th iteration.

It can be concluded that in this case the BPSO algorithm gives a better convergence speed, as it needs only a small population size, even as low as 20, to reach the optimum.
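The optimal value of 0 discussed above can be checked directly against the Matyas function. The sketch uses the standard definition of the function, whose global minimum is 0 at the origin; the function name is chosen here for illustration.

```python
def matyas(point):
    """Matyas function: 0.26 * (x^2 + y^2) - 0.48 * x * y."""
    x, y = point
    return 0.26 * (x ** 2 + y ** 2) - 0.48 * x * y
```

The function has a single minimum and no local traps, which is consistent with both algorithms reaching the exact optimum within a few tens of iterations.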

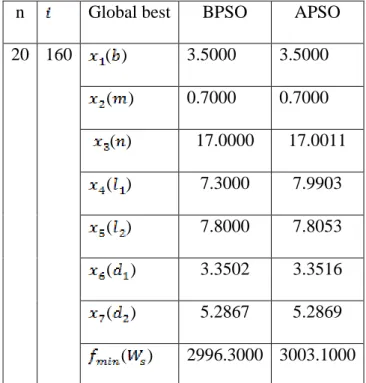

Weight Minimization of Speed Reducer (WMSR)

Both algorithms were used to perform the optimization and both give a global optimum at about 3000 kg. However, the global optimum obtained by BPSO is slightly lower than the global optimum obtained by APSO, with the best global optimum being 2996.4000 kg for BPSO and 3001.7000 kg for APSO. BPSO increased its convergence speed as the population size increased, and its convergence became stable between iterations.

From Figure 4.3.6, the elapsed time for BPSO and APSO over the population size is almost the same.

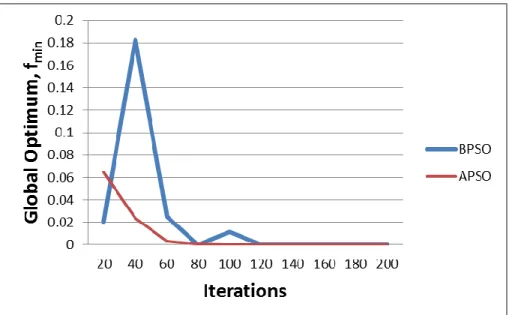

Weight Minimization of Pressure Vessel (WMPV)

Both algorithms were used to perform the optimization and both give a global optimum of around 6000 kg. However, the global optimum obtained by BPSO is slightly lower than that obtained by APSO: the best global optimum is 5884.5000 kg for BPSO and 5904.0000 kg for APSO. In this problem, convergence with APSO accelerates much more than with BPSO, especially as the population size increases.

When the population size was set to 100, convergence to the optimal point using APSO started at about the 20th iteration. On the other hand, convergence using BPSO started only around the 100th iteration. From Figure 4.4.6, the elapsed time for BPSO and APSO over population size is almost the same.

The growth in elapsed time with population size is almost the same for both algorithms.

Volume Minimization of Compression Spring (VMCS)

Both algorithms were used to perform the optimization and both yield a global optimum of about 0.013 kg. At a population size of 20, there is a larger fluctuation of the optimal point between the 160th and 200th iterations. This may be because the population size is insufficient for the particles to accumulate at the optimal point. From Figure 4.5.5, the trend of increasing time over population size is almost the same as in the previous pressure vessel optimization result.

From an overview of all these results, it can be concluded that optimization problems with multiple constraints need more time to optimize, especially when the population size is larger.

Conclusions

For mechanical optimization, due to the high complexity introduced by the constraints, BPSO requires more particle solutions (a larger population size) to converge to optimal solutions. On the other hand, the WMSR and WMPV problems optimized by the APSO algorithm were successfully accelerated compared to BPSO, since APSO uses only the global best, so the population size could be reduced. In summary, because APSO needs a smaller population size than BPSO to converge to the optimal solutions, it can save some elapsed time.

However, for the VMCS problem, both algorithms converge at the same speed. This may be because the bounds define a search space small enough for convergence to occur early in the run, so neither algorithm was limited by the population size.

Recommendations

- Rosenbrock function

- Matyas function

- Weight Minimization of Speed Reducer (WMSR)

- Weight Minimization of Pressure Vessel (WMPV)

- Volume Minimization of Compression Spring (VMCS)

APSO is recommended for this function because APSO gives more stable convergence than BPSO, even with a small population. BPSO is recommended for this optimization problem because it offers the best optimal solution compared to APSO and EA-Coello. In these problems, both algorithms give the same results and the convergence stability is almost the same.

Therefore, both algorithms are recommended for this problem. The recommended population size and number of iterations are both 100.