As CRUS points out in 'The Swiss Way to Quality in the Swiss universities'. CRUS thus proposed to continue pursuing the project's goals from 2013 to 2016 in the program 'Performances de la recherche en sciences humaines et sociales'.

Yes We Should; Research Assessment in the Humanities

None of these defense mechanisms is effective in today's world, and especially not for the future of the humanities. One of the problems facing scholars in the humanities is defining this broader group and justifying our relationships with it.

... faculties of humanities), national funding organizations for the humanities, the European Science Foundation and/or the European Research Council.

It cannot be a coincidence that this part of the humanities already works with laboratories and large data collections.

Recognizing the humanities as a distinct part of the body of academic knowledge leads to the conclusion that humanists must take the helm in developing their own adequate forms of research evaluation. If we leave it to others, the humanities will look like arms tied to the legs.

Open Access This chapter is distributed under the terms of the Creative Commons Attribution-Noncommercial 2.5 License (http://creativecommons.org/licenses/by-nc/2.5/), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

The images or other third-party material in this chapter are included in the work's Creative Commons license, unless otherwise noted in the credit line; if such material is not included in the work's Creative Commons license and the action in question is not permitted by statutory regulation, users must obtain permission from the license holder to duplicate, adapt or reproduce the material.

How Quality Is Recognized by Peer Review Panels: The Case of the Humanities

1 Introduction

Second, summarizing Lamont and Huutoniemi (2011), it compares the findings of How Professors Think with a parallel study considering peer review at the Finnish Academy of Sciences. These panels are set up somewhat differently from those considered by Lamont, for example by focusing on the sciences rather than the social sciences and humanities, or by being unidisciplinary rather than multidisciplinary. Finally, building on Guetzkow et al. (2004), we review aspects of the specificity of assessment in the humanities, and more specifically, the assessment of originality in these fields.

This paper thus contributes to a better understanding of the characteristic challenges that peer review in the humanities raises.

2 The Role of Informal Rules

It examines evaluation in a range of disciplines and compares the distinctive 'evaluative cultures' of fields such as history, philosophy and literature with those of anthropology, political science and economics. Since its publication, How Professors Think has been debated in various academic circles, as it addresses several aspects of evaluation in interdisciplinary panels in the social sciences and humanities. It is based on an analysis of twelve funding panels organized by major national funding competitions in the United States: those of the Social Science Research Council, the American Council of Learned Societies, the Woodrow Wilson Fellowship Foundation, a Society of Fellows at an Ivy League university, and a major foundation in the social sciences.

The concluding chapter discusses the implications of studying evaluative cultures in national contexts, including in Europe.

3 The Impact of Evaluation Settings on Rules

The exploratory analysis points to some important similarities and differences in the internal dynamics of evaluation practices that have gone unnoticed to date and that shed light on how evaluative institutions enable and constrain various types of evaluation conventions. Moreover, the customary rules of methodological pluralism and cognitive contextualism (Mallard et al. 2009) are more salient in the humanities and social science panels than they are in the pure and applied science panels, where disciplinary identities can be united around the idea of scientific consensus, including the definition of shared indicators of quality. Finally, a concern with the use of consistent criteria and the conversion of idiosyncratic tastes is more salient in the sciences than in the social sciences and humanities, partly because evaluators in the latter disciplines may be more aware of the role played by (inter)subjectivity in the evaluation process.

While the analogy of democratic deliberation seems to describe the work of the social science and humanities panels, the science panels can best be described as functioning as a court of law, with panel members presenting a case to a jury.

4 Defining Originality

5 What Is an Original Approach?

She is concerned with the innovativeness of the overall project, rather than with specific theories or methodological details. While discussions of theories and methods start from a problem, issue, or concept that has already been constructed, discussions of new approaches concern the construction of problems rather than the theories and methodological approaches used to study them. For example, one scholar in Women's Studies speaks of the "importance of looking at [Poe] from a feminist perspective"; a political scientist comments on a proposal that "has an outsider's perspective and is therefore able to provide a unique view of the subject".

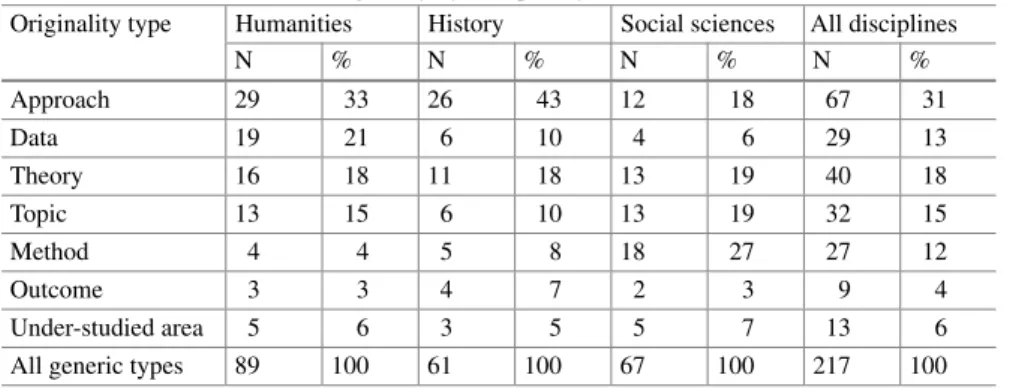

That 'original approach' is used much more often than 'original theory' to discuss originality strongly suggests a need to expand our understanding of how originality is defined, particularly when considering research in the humanities and history, because the original approach is much more central to the evaluation of research in these disciplines than in the social sciences, as we will see shortly.

6 Comparing the Humanities, History and the Social Sciences

Humanities scholars are also more likely than social scientists and historians to define originality with regard to the use of original. Twenty-one percent of them refer to this category, compared to 10% of historians and 6% of social scientists. Another important finding is that humanists and historians are less likely than social scientists to define originality in terms of method (with 4%, 8%, and 27% referring to this category, respectively).

In contrast, social scientists rarely refer to novelty in relation to something that is "canonical".

7 Conclusion

Fairness as appropriateness: Negotiating epistemological differences in peer review. Science, Technology and Human Values.

New light on old boys: Cognitive and institutional particularism in the peer review system. Science, Technology and Human Values.

Humanities Scholars’ Conceptions of Research Quality

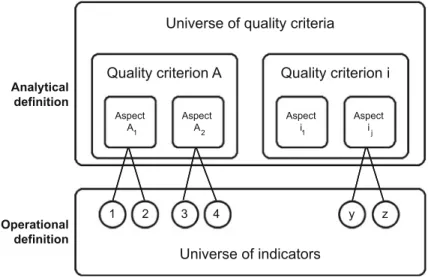

Yet the assessment of research performance in the humanities must be linked to the question of what humanities scholars consider 'good research'. This chapter presents a project in which humanities scholars' conceptions of research quality were investigated and an approach to research evaluation in the humanities was developed. The chapter is structured as follows: In section one, we outline a framework for the development of criteria and indicators for research quality in the humanities.

In the next sections, we present the results of two studies in which we implemented this framework: section two describes humanities scholars' notions of quality, derived from repertory grid interviews, and section three presents the results of a three-round Delphi survey that resulted in a catalog of quality criteria and indicators, as well as a list of consensual quality criteria and indicators.

2 Framework

The Four Main Reservations About Tools and Procedures for Research Evaluation

- Methods Originating from the Natural Sciences

- Strong Reservations About Quantification

- Fear of Negative Steering Effects of Indicators

- Lack of Consensus on Quality Criteria

While humanities scholars criticize many different aspects of research evaluation and its tools and instruments, four main reservations can be identified that summarize many of these aspects: (1) methods originating from the natural sciences, (2) strong reservations about quantification, (3) fear of negative steering effects of indicators and (4) lack of consensus on quality criteria. In particular, scholars fear that the intrinsic benefits of the arts and humanities are neglected when quantitative measures are used. ... 2000) do not reject the possibility of a quantitative measurement of research results, they emphasize that these indicators do not measure the important information: 'Some efforts float and others sink, but it is not the measurable success that matters, rather the effort'. A further negative effect often cited is the loss of diversity of research topics, or even of disciplines, due to the limitations and selection effects introduced by the use of research indicators.

If there is a lack of consensus on the research topics and the meaningful use of methods, a consensus on criteria for distinguishing between 'good' and 'bad' research is difficult to achieve (see, for example, Herbert and Kaube 2008, p. 45).

The Four Pillars of Our Framework to Develop Sustainable Quality Criteria

- Adopting an Inside-Out Approach

- Relying on a Sound Measurement Approach

- Making the Notions of Quality Explicit

- Striving for Consensus

Looking at one of the most important indicators of research performance, namely citations, Moed finds that 'it is. Therefore, it is important to rely on a sound measurement approach, as the issue is not "first". However, to explain scholars' notions of quality, it is important not to simply ask them what quality is.

Since knowledge about research quality is still mainly tacit knowledge, it is important to transform it into explicit knowledge in order to develop quality criteria for research assessment in the humanities.

The Implementation of the Framework: The Design of the Project ‘Developing and Testing Quality Criteria

However, even if it is clear what the indicators of an assessment procedure measure, researchers may still fear negative steering effects because the criteria used may not be consistent with their notions of quality. To summarize, notions of quality must be made as explicit as possible, and humanities researchers' notions of quality must be taken into account in order to reduce researchers' fear of negative steering effects, and even to reduce the likelihood of negative steering effects in general. Although it is possible to develop quality criteria from repertory grid interviews, we found it necessary to validate the criteria derived from the interviewed researchers' notions of quality because we were able to conduct only a few repertory grid interviews due to the time-consuming nature of the technique.

Because both the repertory grid technique and the Delphi method are time-consuming, we could not investigate notions of quality across a wide range of disciplines.

3 Notions of Quality: The Repertory Grid Interviews

We also strived for consensus on the quality criteria, in accordance with the fourth pillar of the framework.

Table 1 Semantic categorization of the constructs from the repertory grid interviews

Interdisciplinarity, collaboration and public orientation are therefore not indicators of quality, but of the 'modern' conception of research.

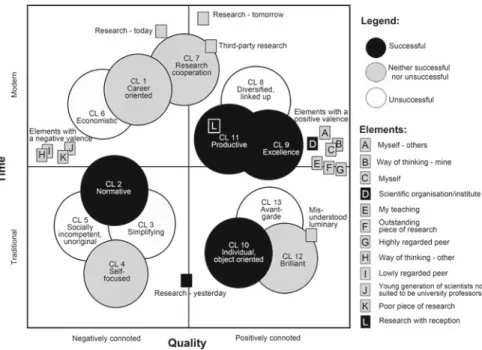

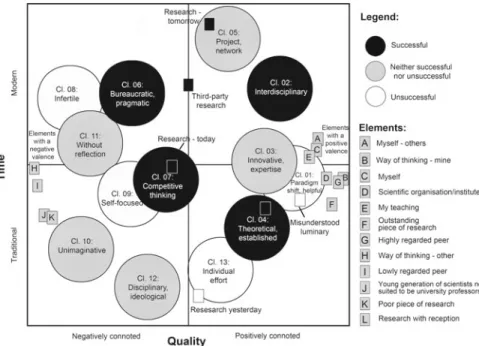

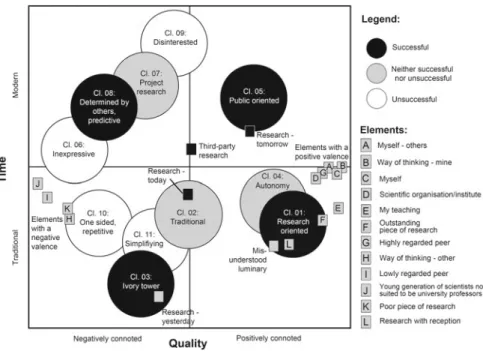

Figures 3, 4 and 5 show a visualization of the elements and groups of constructs for the three disciplines.