Facial Emotional Expressions Recognition Based on Active Shape Model and Radial Basis Function Network

Endang Setyati 1, 2

1 Informatics Engineering Department

Sekolah Tinggi Teknik Surabaya (STTS) Surabaya, Indonesia

[email protected], [email protected]

Yoyon K. Suprapto 2, Mauridhi Hery Purnomo 2

2 Electrical Engineering Department

Institut Teknologi Sepuluh Nopember (ITS) Surabaya, Indonesia

[yoyonsuprapto, hery]@ee.its.ac.id

Abstract: Facial emotional expressions recognition (FEER) is an important research field in affective computing that studies how human beings react to their environment. With the rapid development of multimedia technology, especially image processing, researchers in facial emotional expressions recognition have achieved many useful results. To recognize human emotion from a facial image, we need to extract features of that image. The Active Shape Model (ASM) is one of the most popular methods for facial feature extraction. The accuracy of ASM depends on several factors, such as brightness, image sharpness, and noise. To get better results, the ASM is combined with a Gaussian pyramid. In this paper we propose a facial emotional expressions recognition method based on ASM and a Radial Basis Function Network (RBFN). First, facial features are extracted to obtain emotional information from the face region; this paper uses the ASM method with the reconstructed facial shape. The second stage classifies the facial emotional expression from the emotional information. After several iterations we obtain the model that matches the facial feature outline and use it to recognize the facial emotional expression with the RBFN. The experimental results from the RBFN classifier show a recognition accuracy of 90.73% for facial emotional expressions using the proposed method.

Keywords: Facial emotional expression recognition, Facial feature extraction, Active Shape Model, Gaussian Pyramid, Radial Basis Function Network

I. INTRODUCTION

Computer-based emotion recognition from facial expressions has long been an active area of research. Many applications in teleconferencing, human-computer interfaces, and computer animation require realistic reproduction of facial expressions. Many researchers have worked on the coexistence of humans and computers. Among these efforts, research on emotional communication between human and machine has received considerable attention as part of human-computer interaction technology [1].

In 1978, Ekman and Friesen [2] postulated six primary emotions, each of which possesses a distinctive content together with a unique facial expression. These prototypic emotional displays are also referred to as basic emotions: happiness, sadness, fear, disgust, surprise, and anger. Several scientists define emotion as follows: (1) Emotion is not a phenomenon but a construct, which is systematically produced by cognitive processes, subjective feeling, physiological arousal, motivational tendencies, and behavioral reactions [3]; (2) An emotion is usually experienced as a distinctive type of mental state, sometimes accompanied or followed by bodily changes, expressions, and actions [4].

The term expression implies the existence of something that is expressed. Regardless of approach, certain facial expressions are associated with particular human emotions. Research shows that people categorize emotion faces in a similar way across cultures, that similar facial expressions tend to occur in response to particular emotion eliciting events, and that people produce simulations of emotion faces that are characteristic of each specific emotion [5].

Because the traditional ASM depends on the setting of the initial parameters of the model, the authors of [1] proposed a facial emotion recognition method based on ASM and a Bayesian network. First, they obtain the reconstruction parameters of a new gray-scale image by sample-based learning, use them to reconstruct the shape of the new image, and calculate the initial parameters of the ASM from the reconstructed facial shape. They then reduce the distance error between the model and the target contour by adjusting the parameters of the model. Finally, after several iterations, they obtain the model that matches the facial features and use it to recognize the facial emotion with a Bayesian network.

The authors of [6] proposed a hierarchical RBFN model to classify and recognize facial expressions. Their approach uses Principal Component Analysis to extract features from static images. The aim of that research was to develop a more efficient system to discriminate 7 facial expressions. They achieved a correct classification rate above 98.4%, which is clearly higher than that of other approaches.

In [7], a hierarchical RBF (HRBF) online learning algorithm is applied to 3-D scanning, where an updated real-time display of the manifold to the operator is fundamental to drive the acquisition procedure itself. Quantitative results are reported, which show that the accuracy achieved is comparable to that of two batch approaches: the batch version of the HRBF and support vector machines (SVMs).

The authors of [8] developed a facial expression recognition system based on facial features extracted from FCPs in frontal image sequences. Selected facial feature points were automatically tracked using a cross-correlation based optical flow, and the extracted feature vectors were used to classify expressions with an RBFN and a fuzzy inference system (FIS). Success rates were about 91.6% using the RBFN and 89.1% using the FIS classifier.

In this paper, we propose an advanced facial emotional expressions recognition method that robustly recognizes human emotion by using ASM and RBFN. The remainder of this paper is organized as follows: facial emotional feature extraction based on ASM is presented in Section II. Our facial emotional expressions recognition system based on RBFN is presented in Section III. In Section IV, we present the experimental results. Finally, the conclusion and future work are presented in Section V.

II. FACIAL EMOTIONAL FEATURE EXTRACTION METHOD

A. Emotions and Facial Expressions

Psychologists have tried to explain human emotions for decades. The authors of [9], [10] believed that there exists a relationship between facial expression and emotional state. The proponents of the basic emotions view [11], [12], according to [13], assume that there is a small set of basic emotions that can be expressed distinctively from one another by facial expressions. For instance, when people are angry they frown and when they are happy they smile [14]. To match a facial expression with an emotion implies knowledge of the categories of human emotions to which expressions can be assigned. The most robust categories are discussed in the following paragraphs.

Table I gives textual descriptions of the facial expressions that represent the basic emotions.

TABLE I. FACIAL EXPRESSIONS OF BASIC EMOTIONS [14]

| No | Basic Emotion | Textual Description of Facial Expression |
|---|---|---|
| 1 | Happy | The eyebrows are relaxed. The mouth is open and the mouth corners pulled back toward the ears. |
| 2 | Sad | The inner eyebrows are bent upward. The eyes are slightly closed. The mouth is relaxed. |
| 3 | Fear | The eyebrows are raised and pulled together. The inner eyebrows are bent upward. The eyes are tense and alert. |
| 4 | Angry | The inner eyebrows are pulled downward and together. The eyes are wide open. The lips are pressed against each other or opened to expose the teeth. |
| 5 | Surprise | The eyebrows are raised. The upper eyelids are wide open, the lower relaxed. The jaw is opened. |
| 6 | Disgust | The eyebrows and eyelids are relaxed. The upper lip is raised and curled, often asymmetrically. |

Happy expressions are universally and easily recognized, and are interpreted as conveying messages related to enjoyment, pleasure, a positive disposition, and friendliness. Sad expressions are often conceived as the opposite of happy ones, but this view is too simple, although the action of the mouth corners is opposite. Sad expressions convey messages related to loss, bereavement, discomfort, pain, and helplessness. Anger expressions are seen increasingly often in modern society, as the daily stresses and frustrations underlying anger seem to increase, while the expectation of reprisals decreases with a higher sense of personal security. Fear expressions are not often seen in societies where good personal security is typical, because the imminent possibility of personal destruction, from interpersonal violence or impersonal dangers, is the primary elicitor of fear. Disgust expressions are often part of the body's responses to objects that are revolting and nauseating, such as rotting flesh, fecal matter and insects in food, or other offensive materials that are rejected as unsuitable to eat. Surprise expressions are fleeting and difficult to detect in real time [5].

Surprise expressions almost always occur in response to events that are unanticipated, and they convey messages about something being unexpected, sudden, novel, or amazing [5], [9]. The six basic emotions defined by [11] can be associated with a set of facial expressions. Precise localization of the facial features plays an important role in feature extraction and expression recognition [15]. In actual applications, however, differences in facial shape and image quality make it difficult to locate the facial features precisely [16]. In the face, we use the eyebrow and mouth corner shapes as the main 'anchor' points. Many methods are available for facial feature extraction, such as eye blinking detection, eye location detection, and segmentation of the face area and feature detection [17]. Nevertheless, facial feature extraction for recognition is still a challenging problem. In this paper, we use the ASM as the feature extraction method.

B. Statistical Shape Model

Cootes and Taylor [18] stated that the shape of an object can be represented by a set of n points, regardless of the object's dimension (2D or 3D). The shape of an object does not change when it is translated, rotated, or scaled.

A Statistical Shape Model is a model for analysing new shapes and generating shapes similar to those in a training set. The training set usually comes from a number of training images that are marked manually. By analysing the variation in shape over the training set, a model that mimics this variation is constructed. This type of model is usually called a Point Distribution Model [19].

In 2D images [19], the n landmark points {(xi, yi)} of a single shape form a shape vector x. It is crucial that the shapes of the training set are represented in the same coordinate frame before we start the statistical analysis of these vectors. The training set is therefore processed by aligning every shape so that the total distance between shapes is minimized [19].

For example, suppose two shapes, x1 and x2, have been acquired with their points already aligned in the same coordinate frame. These vectors form a distribution in the 2n-dimensional space of shape vectors. If this distribution is modelled, new shapes can be generated that match the existing data in the training set, and the model can in turn be used to check whether a shape is similar to the existing shapes in the training set.
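Modelling the distribution of aligned shape vectors can be sketched with a principal component analysis, in the spirit of the Point Distribution Model of [19]. The following is a minimal sketch, assuming the training shapes are already aligned and stored one per row. The function names (`build_pdm`, `shape_params`, `generate_shape`, `is_plausible`), the 98% variance threshold, and the limit m = 3 are illustrative assumptions and are not taken from this paper.

```python
import numpy as np

def build_pdm(shapes, variance_kept=0.98):
    """Build a Point Distribution Model from aligned shape vectors.

    shapes: array (num_shapes, 2n), one aligned shape vector per row.
    Returns the mean shape, the retained modes P (2n x t), and their eigenvalues.
    """
    shapes = np.asarray(shapes, dtype=float)
    mean = shapes.mean(axis=0)
    cov = np.cov(shapes - mean, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]             # largest variance first
    eigvals = np.clip(eigvals[order], 0.0, None)  # drop tiny negative round-off
    eigvecs = eigvecs[:, order]
    cumulative = np.cumsum(eigvals) / eigvals.sum()
    t = int(np.searchsorted(cumulative, variance_kept) + 1)
    return mean, eigvecs[:, :t], eigvals[:t]

def shape_params(x, mean, P):
    """Project a shape vector onto the model: b = P^T (x - mean)."""
    return P.T @ (np.asarray(x, dtype=float) - mean)

def generate_shape(b, mean, P):
    """Generate a new shape from parameters b: x = mean + P b."""
    return mean + P @ b

def is_plausible(x, mean, P, eigvals, m=3.0):
    """Check whether a shape resembles the training set (|b_i| <= m sqrt(lambda_i))."""
    b = shape_params(x, mean, P)
    return bool(np.all(np.abs(b) <= m * np.sqrt(eigvals)))
```

Keeping only the leading modes is a design choice: it lets the model generate new shapes by varying a few parameters while rejecting shapes that fall far outside the training distribution.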

C. Active Shape Model

Interpreting images containing objects whose appearance can vary is difficult [18]. A powerful approach has been to use deformable models, which can represent the variations in shape and/or texture (intensity) of the target objects. The ASM represents shape using a set of landmarks, learning the valid ranges of shape variation from a training set of labelled images [19].

The ASM matches the model points to a new image using an iterative technique which is a variant on the Expectation Maximisation algorithm. A search is made around the current position of each point to find a point nearby which best matches a model of the texture expected at the landmark. The parameters of the shape model controlling the point positions are then updated to move the model points closer to the points found in the image.

ASM [19] is a method in which the model is iteratively deformed to match an instance of the object in the image. The method uses a flexible model acquired from a number of training data samples [20], [21].

Given an initial guess of its position in an image, the ASM is iteratively matched to the image. By choosing a set of shape parameters b for the Point Distribution Model, the shape of the model can be defined in a coordinate frame centered on the object.

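An instance X of the model in the image frame can be constructed by defining its position, orientation, and scale [19]:

$$X = M(s, \theta)[x] + X_c \qquad (6)$$

where $M(s, \theta)[\cdot]$ performs a rotation by $\theta$ and a scaling by $s$, and $X_c$ is the position of the centre of the model in the image frame.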
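Placing a model instance in the image frame from its position, orientation, and scale, in the usual ASM form X = M(s, θ)[x] + X_c of [19], can be sketched as below. This is a minimal sketch, assuming the model points are stored as an (n, 2) array; the function name `place_model` is hypothetical.

```python
import numpy as np

def place_model(x, s, theta, center):
    """Apply X = M(s, theta)[x] + X_c to model points.

    x: (n, 2) model points in the model frame.
    s: scale, theta: rotation angle in radians, center: (X_c, Y_c).
    """
    c, si = np.cos(theta), np.sin(theta)
    M = s * np.array([[c, -si], [si, c]])   # rotation by theta, scaling by s
    return np.asarray(x, dtype=float) @ M.T + np.asarray(center, dtype=float)
```

For instance, the point (1, 0) scaled by 2, rotated by 90 degrees, and centred at (10, 20) lands at (10, 22).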

Basically, the Active Shape Model works in the following steps [21]:

(1) Locate a better position for each model point in the image region around that point;

(2) Update the shape and pose parameters (Xb, Yb, s, θ, b) according to the new positions found in step 1;

(3) Apply constraints on the parameters b to ensure a plausible shape (for example, |bi| < m√λi, where m usually has a value between two and three and the eigenvalues λi are chosen so as to explain a certain proportion of the variance in the training shapes);

(4) Repeat the steps until convergence is achieved (convergence means that there is no significant difference between an iteration and the previous one).
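Steps (1) to (4) can be sketched as a simple loop. This is a simplified illustration, not the authors' implementation: the local image search of step 1 is abstracted into a caller-supplied function `suggest_points` (hypothetical), the pose is fitted by a least-squares similarity transform, and the names `fit_pose` and `asm_search`, the tolerance, and the iteration limit are assumptions. Shape vectors are stored interleaved as (x1, y1, ..., xn, yn).

```python
import numpy as np

def fit_pose(model_pts, target_pts):
    """Least-squares similarity transform (matrix M, translation t) from model to target."""
    mc, tc = model_pts.mean(axis=0), target_pts.mean(axis=0)
    A, B = model_pts - mc, target_pts - tc
    denom = (A ** 2).sum()
    a = (A * B).sum() / denom                                   # s * cos(theta)
    b = (A[:, 0] * B[:, 1] - A[:, 1] * B[:, 0]).sum() / denom   # s * sin(theta)
    M = np.array([[a, -b], [b, a]])
    return M, tc - M @ mc

def asm_search(suggest_points, mean, P, eigvals, m=3.0, max_iter=50, tol=1e-3):
    """mean: (n, 2) mean shape, P: (2n, t) shape modes, eigvals: (t,) variances."""
    n = mean.shape[0]
    limit = m * np.sqrt(eigvals)
    b = np.zeros(P.shape[1])
    current = mean.copy()
    for iteration in range(1, max_iter + 1):
        # Step 1: look around each current point for a better position.
        target = suggest_points(current)
        # Step 2: update the pose, then the shape parameters b.
        model = mean + (P @ b).reshape(n, 2)
        M, t = fit_pose(model, target)
        in_model_frame = (target - t) @ np.linalg.inv(M).T
        b = P.T @ (in_model_frame - mean).reshape(-1)
        # Step 3: constrain b so that the shape stays plausible.
        b = np.clip(b, -limit, limit)
        updated = (mean + (P @ b).reshape(n, 2)) @ M.T + t
        # Step 4: stop when the points no longer move significantly.
        change = np.abs(updated - current).max()
        current = updated
        if change < tol:
            break
    return current, b, iteration
```

In a real search, `suggest_points` would inspect the image around each point; passing a function that returns fixed target points is enough to exercise the pose and shape updates.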

In practice, each iteration looks in the image around each point for a better position and updates the model parameters to get the best match to these newly found positions. The simplest way to find a better position is to locate the strongest edge (with orientation if known) along the profile. The location of this edge becomes the new location of the model's point. However, the best location should lie on a strong edge that also agrees with the references from the statistical model point [22].
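The simplest search, locating the strongest edge along a profile, can be sketched as follows. This is a minimal sketch, assuming the grey levels have already been sampled along the normal through a model point; the function name `strongest_edge_offset` is hypothetical.

```python
import numpy as np

def strongest_edge_offset(profile):
    """Offset of the strongest edge relative to the centre of the profile.

    profile: 1-D grey levels sampled along the normal through a model point.
    A positive offset means the edge lies toward the end of the profile.
    """
    profile = np.asarray(profile, dtype=float)
    gradient = np.abs(np.diff(profile))     # edge strength between neighbours
    k = int(np.argmax(gradient))            # edge lies between samples k and k + 1
    centre = (len(profile) - 1) / 2.0
    return (k + 0.5) - centre
```

The returned offset tells the search how far to move the model point along its normal before the shape constraints are applied.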

To get better results, the Active Shape Model is combined with a Gaussian pyramid. With subsampling, the image is resized and stored as temporary data. The next step is to calculate the ASM result on each image of different size; the image with the best location gives the final result. It should be noted that a point in the model is not always located on the highest-intensity edge in the local structure. Such points can represent a lower-intensity edge or another image structure. The best approach is to analyse what is to be found at each point, as shown in Figure 1.

Figure 1. Point in The Model to ASM

The number of iterations the Active Shape Model needs to find the best point locations does not depend on the image size. Several tests show that the size of the input image has no significant impact on the number of iterations. This is due to the subsampling used in the face tracking process.
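The subsampling behind a Gaussian pyramid can be sketched as below. This is a minimal sketch, assuming a grayscale image stored as a 2-D array; the 5-tap binomial kernel, the edge padding, and the function name `gaussian_pyramid` are illustrative assumptions, since the paper does not specify them.

```python
import numpy as np

def gaussian_pyramid(image, levels=3):
    """Return a list of images, each blurred and subsampled by 2 from the previous one."""
    kernel = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0   # binomial approximation of a Gaussian
    pyramid = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        img = pyramid[-1]
        h, w = img.shape
        padded = np.pad(img, 2, mode="edge")
        # Separable blur: first along the columns axis, then along the rows axis.
        blurred = sum(kernel[i] * padded[:, i:i + w] for i in range(5))
        blurred = sum(kernel[i] * blurred[i:i + h, :] for i in range(5))
        pyramid.append(blurred[::2, ::2])                   # keep every second pixel
    return pyramid
```

For a 256 x 256 input and three levels, the pyramid holds images of 256 x 256, 128 x 128, and 64 x 64 pixels; a search can start on the coarsest level and refine the result on the finer ones.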

The accuracy of the Active Shape Model depends on several factors, such as brightness, image sharpness, and noise. In particular, the brightness of the image affects the accuracy of detection.

III. FACIAL EMOTIONAL EXPRESSIONS RECOGNITION BASED ON RBFN

Face recognition has been studied by many researchers due to its importance in biometric authentication systems. In our recognition system, we present the necessary information (the movements of the landmarks) to the classifier so that it assigns a particular facial expression (an emotional label), in the order happy, sad, angry, fear, surprised, and disgusted.

The JAFFE Database has 213 face images of 7 facial expressions (6 basic facial expressions and 1 neutral facial expression) taken from 10 Japanese female models. Figure 2 shows examples of facial emotional expressions from the JAFFE Database.

Figure 2. Example of Facial Emotional Expressions of JAFFE Database

A. Radial Basis Function Network

An RBFN is a class of single-hidden-layer feedforward networks in which the activation functions of the hidden units are defined as radially symmetric basis functions φ, such as the Gaussian function. The fraction of overlap between each hidden unit and its neighbors is decided by the width σ, such that a smooth interpolation over the input space is allowed. The whole architecture is therefore fixed by determining the hidden layer and the weights between the hidden and the output layers.

The RBFN is well suited to interpolation since it uses a radial basis function, for example the Gaussian function, to smooth out and predict missing and inaccurate inputs [8]. We consider interpolating functions of the form:

where the inputs in $\mathbb{R}^m$ and the desired outputs $d \in \mathbb{R}^n$, indexed by $k = 1, \ldots, n'$, are given as the known data points. Often, $g(\cdot)$ is the normalized Gaussian activation function defined as

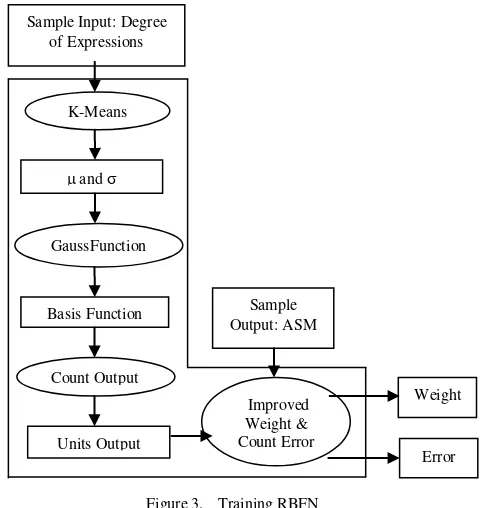
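A common way to write a normalized Gaussian RBF interpolant sums the weighted Gaussian responses of the hidden units after dividing each response by the total response. The sketch below assumes this common form, which is not necessarily identical to formulas (7) and (8) of this paper; the function name `rbfn_output` and the array layout are hypothetical.

```python
import numpy as np

def rbfn_output(x, centers, widths, weights):
    """Output of an RBF network with normalized Gaussian hidden units.

    x: (m,) input vector, centers: (h, m) hidden unit centers,
    widths: (h,) hidden unit widths sigma, weights: (h, n_out) output weights.
    """
    x = np.asarray(x, dtype=float)
    d2 = ((centers - x) ** 2).sum(axis=1)           # squared distance to each center
    g = np.exp(-d2 / (2.0 * widths ** 2))           # Gaussian response per hidden unit
    g = g / (g.sum() + 1e-12)                       # normalization (guarded against 0)
    return g @ weights                              # weighted sum at the output layer
```

With this normalization, an input lying on one center and far from the others returns almost exactly the weight row of that hidden unit.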

Figure 3 below shows the process of training the RBFN. Each input vector consists of 6 degrees of expression. The samples are grouped by the k-means clustering algorithm into a number of clusters (depending on the number of hidden units), each with a cluster center. In addition to its center, each cluster has a width, which is the average distance of the samples in the cluster from the cluster center.

Figure 3. Training RBFN

For each hidden unit, the Gauss value is calculated using formula (8). The Gauss value is passed to the output units with the interpolation formula (7). The result is compared with the sample output from the ASM. The weights continue to be corrected as long as the error is not below the tolerance value and the number of correction loops has not reached the maximum. That is, the processes in the box are repeated until the error is smaller than the tolerance or the iteration count has reached the maximum loop constant.
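The training process described above can be sketched as k-means clustering for the hidden layer followed by iterative weight correction. This is a minimal sketch under stated assumptions, not the authors' implementation: the normalized Gaussian form, the batch gradient update, the tolerance, the loop limit, and the names `kmeans`, `hidden_activations`, and `train_rbfn` are all illustrative. Only the 10 hidden units and the learning rate 0.01 follow values reported in this paper.

```python
import numpy as np

def kmeans(samples, k, iterations=20, seed=0):
    """Cluster samples; return the centers and the widths (mean distance to center)."""
    rng = np.random.default_rng(seed)
    centers = samples[rng.choice(len(samples), size=k, replace=False)].copy()
    for _ in range(iterations):
        dist = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = samples[labels == j].mean(axis=0)
    dist = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=2)
    labels = dist.argmin(axis=1)
    widths = np.array([dist[labels == j, j].mean() if np.any(labels == j) else 1.0
                       for j in range(k)])
    return centers, np.maximum(widths, 1e-3)    # avoid zero widths

def hidden_activations(X, centers, widths):
    """Normalized Gaussian value of every hidden unit for every sample."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    g = np.exp(-d2 / (2.0 * widths ** 2))
    return g / (g.sum(axis=1, keepdims=True) + 1e-12)

def train_rbfn(X, D, hidden=10, lr=0.01, tolerance=1e-3, max_loops=10000):
    """Correct the output weights until the error is below tolerance or loops run out."""
    centers, widths = kmeans(X, hidden)
    G = hidden_activations(X, centers, widths)
    W = np.zeros((hidden, D.shape[1]))
    mse = float((D ** 2).mean())
    for _ in range(max_loops):
        error = D - G @ W                    # compare the output with the samples
        mse = float((error ** 2).mean())
        if mse < tolerance:
            break
        W += lr * G.T @ error                # gradient step on the squared error
    return centers, widths, W, mse
```

Fixing the hidden layer with k-means first means that only the linear output weights are trained iteratively, which keeps the correction loop simple.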

IV. EXPERIMENTAL RESULT

Figure 4. Face Detection in Several Types of Brightness

For example, the number of iterations for an image with 640x480 resolution does not differ much from that for an image with 480x360 resolution. Table II compares the number of iterations by resolution.

TABLE II. NUMBER OF ITERATION COMPARISON

| Resolution | Number of Iterations |
|---|---|
| 320 x 240 | 10-16 |
| 480 x 360 | 12-15 |
| 640 x 480 | 11-17 |

In the experimental results of [7], a typical set of sampled data consists of 33000 points sampled over the surface of the artifact, a panda mask. The reconstruction improves as the number of sampled points grows. Acquisition was stopped when the visual quality of the reconstructed surface was judged sufficient and no significant improvement could be observed when new points were added.

Figure 5 shows the comparison of a number of images based on the number of iterations and the example of Facial Model by using ASM method.

Figure 5. ASM from left to right: 10, 20, and 30 iterations

A face can be recognized even when the details of the individual features (such as eyebrow, eye, nose, and mouth) are no longer resolved. In Figure 5, the emotional features are displayed by white lines. The recognition procedure proposed in this paper consists of two stages. First, facial features are extracted to get emotional information from the face region. Since we use the ASM method, we did not need separate face region extraction methods. The second stage classifies the human emotion from the emotional information. For this problem, this paper uses the RBFN, which is widely used in machine inference research. For the classification of the emotion from the shape model of the facial image, 30 feature points are input to the RBFN.

A set of 256 x 256 grayscale images is used in our experiment. In the RBFN classifier for FEER, we used 6 input units, 10 hidden units, and 60 outputs for 60 samples. We used only a small number of input samples and hidden units so that the network could be studied more easily. With more input samples and more hidden units, the results would be better. The learning rate is 0.01. The process is divided into two parts: calculating the hidden layer using k-means clustering, and training on the input. The total time for facial feature extraction, pre-processing, and neural network calculation is less than 15 seconds.

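TABLE III. RBF CLASSIFIER TEST RESULT

(Columns Happy to Disgust give the Degree of Expression.)

| Basic Emotion | Happy | Sad | Fear | Angry | Surprise | Disgust | Result (%) |
|---|---|---|---|---|---|---|---|
| Happy | 4.66 | 1.32 | 1.25 | 1.27 | 1.28 | 1.26 | 93.2 |
| Sad | 1.30 | 4.56 | 1.93 | 1.90 | 1.00 | 2.52 | 91.2 |
| Fear | 1.21 | 2.85 | 4.37 | 2.17 | 3.49 | 3.17 | 87.4 |
| Angry | 1.37 | 2.13 | 1.78 | 4.49 | 1.59 | 2.91 | 89.8 |
| Surprise | 1.81 | 1.49 | 2.54 | 1.62 | 4.54 | 1.00 | 90.8 |
| Disgust | 1.18 | 2.43 | 2.41 | 2.66 | 2.01 | 4.60 | 92.0 |
| Average | | | | | | | 90.73 |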

Table III shows that, for each Basic Emotion, the highest Degree of Expression corresponds to that emotion. The percentage result is obtained from the maximum value of the expression levels.
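The relation between the degrees of expression and the Result column can be checked with a short calculation. The sketch below assumes that the degrees lie on a scale with a maximum of 5; this scale is an inference (dividing each row maximum by 5 reproduces the Result column) and is not stated in the paper. The variable names are illustrative.

```python
import numpy as np

emotions = ["Happy", "Sad", "Fear", "Angry", "Surprise", "Disgust"]

# Degrees of expression from Table III (rows: true emotion, columns: output).
degrees = np.array([
    [4.66, 1.32, 1.25, 1.27, 1.28, 1.26],
    [1.30, 4.56, 1.93, 1.90, 1.00, 2.52],
    [1.21, 2.85, 4.37, 2.17, 3.49, 3.17],
    [1.37, 2.13, 1.78, 4.49, 1.59, 2.91],
    [1.81, 1.49, 2.54, 1.62, 4.54, 1.00],
    [1.18, 2.43, 2.41, 2.66, 2.01, 4.60],
])

MAX_DEGREE = 5.0  # assumption: inferred scale, not stated in the paper

# The recognized emotion is the column with the maximum degree in each row.
predicted = [emotions[i] for i in degrees.argmax(axis=1)]

# The percentage result follows from the maximum degree of each row.
results = degrees.max(axis=1) / MAX_DEGREE * 100.0
average = results.mean()
```

Under this assumption every row is assigned to its own emotion, and the row percentages average to the reported 90.73%.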

V. CONCLUSION

For facial emotional expressions recognition, this paper proposed a method based on ASM feature extraction and Radial Basis Function Network emotion inference. We expect that ASM extracts various emotional features and that it is robust.

In this research we presented two systems for classifying facial expressions from the JAFFE Database. In the RBFN classifier, 7 features extracted from 30 feature points were used as training and test sequences. The trained RBFN was tested with features that were not used in training, and we obtained a high recognition rate of 90.73%.

The proposed facial emotional expressions recognition method is expected to perform robust classification of emotion, because it uses various emotional information such as Ekman's action units and feature shape models. Future work is to apply feature extraction methods based on ASM and HRBF.

REFERENCES

[1] Kwang-Eun Ko, Kwee-Bo Sim, “Development of the Facial Feature Extraction and Emotion Recognition Method based on ASM and Bayesian Network,” FUZZ-IEEE 2009, Korea, pp. 2063-2066, August 20-24, 2009.

[2] Paul Ekman and W.V. Friesen, “Facial Action Coding System (FACS),” Consulting Psychologists Press, Inc., 1978.

[3] G. Jonghwa Kim, “Emotion Recognition Based on Physiological Changes in Music Listening,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 30, No. 12, December 2008.

[4] K. Oatley, J.M. Jenkins, “Understanding Emotions,” Blackwell Publishers, Cambridge, USA, 1996.

[5] DataFace Site: Facial Expressions, Emotion Expressions, Nonverbal Communication, Physiognomy, “http://www.face-and-emotion.com”.

[6] Daw-Tung Lin and Jam Chen, “Facial Expressions Classification with Hierarchical Radial Basis Function Networks,” IEEE 6th International Conference on Neural Information Processing, Proceeding ICONIP '99, pp. 1202-1207, 1999.

[7] Stefano Ferrari, Francesco Bellocchio, Vincenzo Piuri, and N. Alberto Borghese, “A Hierarchical RBF Online Learning Algorithm for Real-Time 3-D Scanner,” IEEE Transactions on Neural Networks, Vol. 21, No. 12, February 2010, pp. 275-285.

[8] Hadi Seyedarabi, Ali Aghagolzadeh, and Sohrab Khanmohammadi, “Recognition of Basic Facial Expressions by Feature-Points Tracking using RBF Neural Network and Fuzzy Inference System,” IEEE International Conference on Multimedia and Expo (ICME), pp. 1219-1222, 2004.

[9] Paul Ekman and W.V. Friesen, Joseph C. Hager, “The New Facial Action Coding System (FACS),” Consulting Psychologists Press, 2002.

[10] Paul Ekman, “Facial Expressions and Emotion,” American Psychologist, Vol. 48, pp. 384-392, 1993.

[11] Paul Ekman, “Emotion in The Human Face,” Cambridge University Press, 1982.

[12] C. E. Izard, “Emotions and facial expressions: A perspective from differential emotions theory,” in The Psychology of Facial Expression, J.A. Russell and J. M. F. Dols, Eds. Maison des Sciences de l'Homme and Cambridge University Press, 1997.

[13] A. Kappas, “What facial activity can and cannot tell us about emotions,” in The human face: Measurement and meaning, M. Katsikitis, Ed. Kluwer Academic Publisher, 2003, pp. 215-234.

[14] Surya Sumpeno, Mochamad Hariadi, and Mauridhi Hery Purnomo, “Facial Emotional Expressions of Life-like Character Based on Text Classifier and Fuzzy Logic,” in IAENG International Journal of Computer Science, 38:2, IJCS_38_2_04 [online], May 2011.

[15] Kwang-Eun Ko, Kwee-Bo Sim, “Development of the Facial Emotion Recognition Method based on combining Active Appearance Models with Dynamic Bayesian Network,” IEEE International Conference on Cyberworlds, pp. 87-91, 2010. IEEE Computer Society.

[16] Shi Yi-Bin, Zhang Jian-Ming, Tian Jian-Hua, Zhou Geng-Tao, “An improved facial feature localization method based on ASM,” Computer-Aided Industrial design and Conceptual design, 2006, 7th International Conference on CAIDCD '06.

[17] Seiji Kobayasho and Shuji Hashimoto, “Automated feature extraction of face image and its applications,” in International Workshop on Robot and Human Communication, pp. 164-169.

[18] T. F. Cootes and C.J. Taylor. Statistical Models of Appearance for Computer Vision. University of Manchester, 2004.

[19] T.F Cootes, D. Cooper, C.J. Taylor and J. Graham, “Active Shape Models - Their Training and Application,” Computer Vision and Image Understanding, Vol. 61, No. 1, January, pp. 38-59, 1995.

[20] Michael Kass, Andrew Witkin, Demetri Terzopoulos, “Snakes: Active Contour Models,” in Proceedings First International Conference on Computer Vision, pp. 259-268, IEEE Computer Society Press, 1987.

[21] T. F. Cootes, Active Shape Models - Smart Snakes, British Machine Vision Conference, 1992.

[22] Bram van Ginneken, Alejandro F. Frangi, Joes J. Staal, Bart M. ter Haar Romeny, and Max A. Viergever, “Active Shape Model Segmentation With Optimal Features,” IEEE Transactions on Medical Imaging, Vol. 21, No.
