
2014 Electrical Power, Electronics, Communications, Controls and Informatics Seminar (EECCIS)

Copyright ©2014 by the Institute of Electrical and Electronics Engineers, Inc. All rights reserved.

Copyright and Reprint Permission

Abstracting is permitted with credit to the source. Libraries are permitted to photocopy beyond the limit of U.S. copyright law, for private use of patrons, those articles in this volume that carry a code at the bottom of the first page, provided that the per-copy fee indicated in the code is paid through the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923.

Other copying, reprint, or reproduction requests should be addressed to IEEE Copyrights Manager, IEEE Service Center, 445 Hoes Lane, P.O. Box 1331, Piscataway, NJ 08855-1331.

IEEE Catalog Number Part Number CFP 1432Z-ART

ISBN 978-1-4799-6947-0

Additional copies of this publication are available from

Curran Associates, Inc.

57 Morehouse Lane

Red Hook, NY 12571 USA

+1 845 758 0400

+1 845 758 2633 (FAX)

email: [email protected]

For information about producing a conference proceedings and receiving an estimate,

contact

[email protected]

http://www.ieee.org/conferencepublishing

Table of Contents

A. Electrical Power

Characteristics and Suppression of Secondary Arc on 500 kV Transmission Lines for Single Pole Reclosure Purposes A1_01-07

Kevin Marojahan B.N., Nanang Hariyanto, Muhammad Nurdin

Design of Robust-Fuzzy Controller for SMIB Based on Power-Load Cluster Model with Time Series Analysis A2_08-15

Ismit Mado, Adi Soeprijanto, Suhartono

Impacts of Exhaust Airflow Velocity to Electrical Power Generation of Small Wind Energy Conversion System (WECS) A3_16-22

Teguh Utomo, Unggul Wibawa

Neural Network SVPWM-DTC of Induction Motor for EV Load Model A4_23-28

Sy Yi Sim, Zainal Alam Haron, Nooradzianie Muhammad Zin, Wahyu Mulyo Utomo, Azuwien Aida Bohari, Roslina Mat Ariff

Security Constrained Optimal Power Flow Incorporating Preventive and Corrective Control A5_29-34

Rony Seto Wibowo, Tri Prasetya Fathurrodli, Ontoseno Penangsang, Adi Soeprijanto

B. Electronics

A Microcontroller-based Yarn Twist Tester B1_35-39

Daniel Santoso, Deddy Susilo, Yahya Y. Prasetyanto

Development of Two Axis Solar Tracking Using Five Photodiodes B2_40-44

Munnik Haryanti, Abdul Halim, Arbai Yusuf

Performance of LED Lights Installed on DKI Jakarta Streets B3_45-50

Endah Setyaningsih, Joni Fat, Lydwina Wardhani, Ida Zureidar

The Development of Computerized Measurement System for Electronic Device Characterization B4_51-55

Yana Sudarsa, Suheri Bakar, Trisno Y. P., P.S. Rudati

C. Communications

Frequency domain Technique For Reducing Chromatic Dispersion C1_56-61

M.F.L Abdullah, Bhagwan Das, Mohd Shah Nor Shahida

Investigations on Fractal Square Loop FSS at Oblique Incidence for GSM Applications C2_62-66

F. C. Seman, N. K. Khalid

The Influence of Hole-Truncated to Characteristic Performance of The Equilateral Triangular Antenna for Mobile Satellite Communications C3_67-69

Muhammad Fauzan Edy Purnomo, Joshapat Tetuko Sri Sumantyo, Vita Kusumasari

The Intelligent Energy Harvesting Management Policies in Wireless Sensor Networks with Directional Water-Filling Algorithm C4_70-77

D. Controls

An LMI Approach to Output Feedback Controller of Neutral Systems D1_78-81

Erwin Susanto, Junartho Halomoan, Mitsuaki Ishitobi

Design of Geometric-based Inverse Kinematics for a Low Cost Robotic Arm D2_82-86

Muhammad Aziz Muslim, Seif Nura Urfin

Forward Kinematics Analysis of a 5-Axis RV-2AJ Robot Manipulator D3_87-92

Mohammad Afif Ayob, Wan Nurshazwani Wan Zakaria, Wan Nurshazwani Wan Zakaria

Inverse Kinematics of a Two-Wheeled Differential Drive an Autonomous Mobile Robot D4_93-98

Eka Maulana, Muhammad Aziz Muslim, Akhmad Zainuri

Temperature Control of Liquid Egg Pasteurization System Using PLC (Programmable Logic Controller) Siemens Simatic S7-200 and HMI (Human Machine Interface) Simatic HMI Panel D5_99-104

Tri Oktaviana Putri, Rahmadwati, Bambang Siswojo

E. Informatics

Assessing Readiness of IP Networks to Support H.323 Desktop Videoconferencing Services over Various Scheduling Techniques Using OPNET E1_105-110

Eko Fajar Cahyadi

Comparison Binary Search and Linear Algorithm for German-Indonesian Sign Language Using Markov Model E2_111-115

Fridy Mandita, Toni Anwar, Harnan Malik Abdullah

Content Based Image Retrieval System Based on Watershed Transform for Trademark Images E3_116-120

Cahyo Crysdian

Design of Small Smart Home System Based on Arduino E4_121-125

Andi Adriansyah, Akhmad Wahyu Dani

Enhance Quality Control Management for Sensitive Industrial Products Using 2D/3D Image Processing Algorithms E5_126-131

Murthad Al-Yoonus, Mohammed Saeed Jawad, M.F.L Abdullah, Fares Al-Shargie

Implementation of Modified Vernam Algorithm for Radioactive Transportation Monitoring System E6_132-136

Adi Abimanyu, Dwi Yuliansari N, I Wayan Mustika, Litasari

Real Time Hand Gesture Movements Tracking and Recognizing System E7_137-141

Rudy Hartanto, Adhi Susanto, P. Insap Santosa

Voice Control of Home Appliances using Android E8_142-146

978-1-4799-6947-0/14/$31.00 ©2014 IEEE

Real Time Hand Gesture Movements Tracking and Recognizing System

Rudy Hartanto

Department of Electrical Engineering and Information

Technology Gadjah Mada University Jalan Grafika No. 2, Yogyakarta,

55281 Indonesia

Email: [email protected]

Adhi Susanto

Department of Electrical Engineering and Information

Technology Gadjah Mada University Jalan Grafika No. 2, Yogyakarta,

55281 Indonesia

P. Insap Santosa

Department of Electrical Engineering and Information

Technology Gadjah Mada University Jalan Grafika No. 2, Yogyakarta,

55281 Indonesia

Email: [email protected]

Abstract— Human-computer interaction has long been evolving toward more intuitive forms. For human beings, gestures of different kinds are among the most intuitive and common means of communication. However, vision-based hand gesture recognition is a challenging problem that involves complex computation, owing to the high number of degrees of freedom of the human hand.

In this paper, we use hand gestures captured by a webcam instead of a mouse for natural and intuitive human-computer interaction. A skin detection method is used to create a segmented hand image and to separate it from the background. A contour and convex hull algorithm is used to recognize the hand area as well as the number of fingertips in the hand gesture image, which are mapped to mouse buttons. Moreover, to detect hand gesture motion, we use the pyramidal Lucas-Kanade algorithm.

The results show that the system operates well, so that we can interact with the computer using hand gestures instead of a mouse.

Keywords - hand gesture, human computer interaction, contours, convex hull, Lucas-Kanade algorithm.

I. INTRODUCTION

The development of user interfaces has accelerated rapidly, especially in consumer devices. Touch interfaces (e.g. touch screens) are now common in devices such as tablet computers, smartphones, and other control interfaces on electronic devices. User interface development is tending toward the use of human body gestures, especially the human hand, as the main interface, which is more natural and intuitive. However, so far the user interface is still limited to movement on a two-dimensional surface, as with the mouse and the touch screen.

Many studies have explored mechanisms for interacting with the computer in a more practical, efficient, and comfortable way. These range from the use of devices such as ergonomic keyboards and interaction based on hand gestures [1], to efforts to find interaction mechanisms that do not require direct contact with computer input devices.

The use of a pointing device with tactile feedback as a mechanism of interaction with the computer was examined in [2] in 2008. In the same year, Juan P. Wachs developed a vision-based system capable of interpreting the user's hand gestures to manipulate objects in a medical data visualization environment [3]. Tao Ni [4] developed a technique called rapMenu (roll-and-pinch menu) for selecting a specific menu item remotely using hand gesture input.

The authors of [5] developed an interface framework for the CAVE immersive projection system in the field of virtual reality using hand gestures. Hand gesture recognition is performed using blob detection, and this recognition model allows an effective interface to be implemented. Other research on the recognition and tracking of hand gesture motion was carried out in [6], where hand gesture motion captured by a web camera is tracked and recognized to control the computer cursor as a replacement for the mouse. The authors of [7] developed a vision-based interface using a webcam and computer vision technology that allows users to interact with the computer using their hands; the user interacts through hand movements without the use of a marker. The authors of [8] use hand segmentation and a color model to track the movement of the hand. Segmentation and tracking are performed using a color model to extract and identify the hand gestures, with the aim of real-time applications that are better, faster, and more accurate.

II. THE PROPOSED METHOD

A. Research Objectives

RGB color space to eliminate the variation of room illumination. The convex hull algorithm is applied to recognize the hand gesture contour area as well as the number of fingertips, which is mapped to the mouse buttons.

The second step is tracking and recognizing the movement of the user's hand, recorded by the webcam, using the pyramidal Lucas-Kanade algorithm. The tracked hand motion is used to move the cursor according to the hand movement. Fig. 1 shows the proposed system, which starts by capturing the image of the user's hand, then removes the background and segments the image. The hand is detected by finding the hand contour and determining the hand center point. The fingertips are detected with the convexity defect and convex hull algorithms and are used as the replacement for mouse button clicks. The motion detection tracks the hand motion to move the cursor, replacing the mouse movement function.

Fig. 1. The Proposed System.

B. Segmentation

The segmentation process is used to obtain the hand image for further processing. We use a skin detection algorithm to detect the skin color of the hand and separate it from the background, because it is simple and fast. To improve robustness against variation in environment illumination, the RGB image is converted to the YCrCb color space. This conversion separates the intensity component Y from the chromaticity components Cr and Cb. The conversion is given by (1).

$$
\begin{aligned}
Y &= 0.299\,R + 0.587\,G + 0.114\,B \\
Cr &= 0.713\,(R - Y) + 128 \\
Cb &= 0.564\,(B - Y) + 128
\end{aligned}
\tag{1}
$$
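As a minimal sketch of the conversion in (1), the following Python function applies the three formulas to a single 8-bit RGB pixel. The function name `rgb_to_ycrcb` is only illustrative and is not taken from the original implementation; clamping of the results to the 8-bit range is left out because (1) does not specify it.

```python
def rgb_to_ycrcb(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cr, Cb) following Eq. (1)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # intensity component
    cr = (r - y) * 0.713 + 128              # red-difference chroma
    cb = (b - y) * 0.564 + 128              # blue-difference chroma
    return y, cr, cb


# A gray pixel carries no chroma, so Cr and Cb stay at the 128 midpoint.
gray = rgb_to_ycrcb(128, 128, 128)
# A pure red pixel pushes Cr above the midpoint and Cb below it.
red = rgb_to_ycrcb(255, 0, 0)
```

Because illumination changes mostly affect Y, a detector that thresholds only Cr and Cb is less sensitive to brightness than one that thresholds R, G, and B directly.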

Skin color is detected by determining the ranges of the Y, Cr, and Cb values that correspond to the user's skin color; these ranges are used as thresholds to decide whether a pixel becomes white (hand image) or black (background). Equation (2) shows the skin color detection and thresholding that convert the image to a binary segmented image.

(2)

where Cr1 and Cr2, as well as Cb1 and Cb2, are the bounds of the range of hand skin color values. Human skin color varies across races and even between individuals of the same race. Additional variability may be introduced by changing illumination conditions and/or camera characteristics. Therefore, the range of hand skin color values should be adjusted according to the user's skin color as well as the illumination conditions and/or camera characteristics.
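The thresholding step described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the paper's exact rule: the bounds are assumed to be inclusive, only Cr and Cb are tested, and the numeric values in `CR_RANGE` and `CB_RANGE` are hypothetical placeholders that would have to be tuned per user, lighting, and camera.

```python
def skin_mask(ycrcb_rows, cr_range, cb_range):
    """Return a binary mask: 255 (white) for skin-like pixels, 0 (black) otherwise."""
    cr1, cr2 = cr_range
    cb1, cb2 = cb_range
    return [
        [255 if cr1 <= cr <= cr2 and cb1 <= cb <= cb2 else 0
         for (_y, cr, cb) in row]
        for row in ycrcb_rows
    ]


# Hypothetical bounds (assumption); adjust to the user's skin color and lighting.
CR_RANGE = (133, 173)
CB_RANGE = (77, 127)

# One-row test image of (Y, Cr, Cb) pixels: the first falls inside both ranges,
# the second has neutral chroma and is rejected.
image = [[(120, 150, 100), (200, 128, 128)]]
mask = skin_mask(image, CR_RANGE, CB_RANGE)
```

Keeping the bounds as parameters reflects the point made in the text that the range must be adjustable rather than fixed.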

C. Contour Detection and

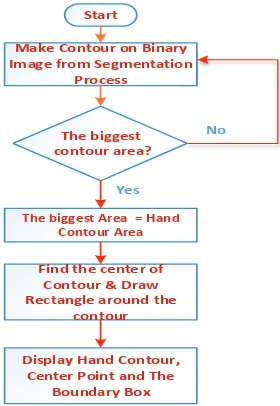

A contour is a sequence of pixels with the same value. A contour is found where a pixel differs from its neighbors. The contour can be obtained from the binary image resulting from skin detection, between white pixels (objects whose color resembles the hand) and black pixels (objects other than the hand, or the background). This method, however, is prone to error when another object in the image has almost the same color as the hand. Therefore, it must be ensured that only one object is processed in the frame, namely the biggest contour (which in this case is the user's hand). Fig. 2 shows the flow chart of contour detection.


Fig. 2. Contour detection.
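The idea of keeping only the biggest object can be illustrated with a short Python sketch. It is a simplification under stated assumptions: instead of tracing contours and comparing contour areas, it labels 8-connected white regions of the binary mask and keeps the one with the most pixels. The function name `largest_region` is hypothetical.

```python
from collections import deque


def largest_region(mask):
    """Keep only the largest 8-connected white region of a binary mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] == 0 or seen[sy][sx]:
                continue
            # Breadth-first flood fill from this unvisited white pixel.
            region, queue = [], deque([(sx, sy)])
            seen[sy][sx] = True
            while queue:
                x, y = queue.popleft()
                region.append((x, y))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = x + dx, y + dy
                        if (0 <= nx < w and 0 <= ny < h
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            if len(region) > len(best):
                best = region
    # Redraw only the winning region on a black background.
    out = [[0] * w for _ in range(h)]
    for x, y in best:
        out[y][x] = 255
    return out


noisy = [
    [255, 255, 0, 0, 0],
    [255, 255, 0, 0, 255],   # the isolated pixel on the right is noise
    [0, 0, 0, 0, 0],
]
cleaned = largest_region(noisy)
```

Small skin-colored blobs are discarded this way, at the cost of failing when a larger skin-colored object than the hand is in view, which is the limitation noted in the text.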

D. Convexity defect


Fig. 3. Sklansky Square [10].

The second step is determining the defects of the convex hull that has been formed. A defect can be defined as the smallest area within the convex hull surrounding the hand. Searching for defects is useful for finding the hand's features, so that the number of fingers can eventually be detected. The convexity defect method applied to the hand can be seen in Fig. 4.

Fig. 4. Convexity defect of hand image [10].

The convex hull of a set of points Q, denoted CH(Q), is obtained by finding the smallest convex set that contains all of the points in Q. Equivalently, the convex hull of Q in n dimensions is the intersection of all convex sets containing Q. Thus, for a set of N points Q = {p1, p2, ..., pN}, the convex hull is the set of convex combinations, which can be expressed as:

$$
CH(Q) = \left\{ \sum_{j=1}^{N} \lambda_j p_j \;:\; \lambda_j \ge 0 \ \text{for all } j,\ \sum_{j=1}^{N} \lambda_j = 1 \right\}
\tag{3}
$$
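To make the definition concrete, the following Python sketch computes the convex hull of a 2D point set. Note that it uses Andrew's monotone chain algorithm, chosen here only because it is short and self-contained; it is not the Sklansky method referred to in Fig. 3, and the function names are illustrative.

```python
def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Return the hull vertices in counter-clockwise order (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:                       # build the lower chain left to right
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):             # build the upper chain right to left
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


# Four corners of a square plus one interior point, which must be dropped.
hull = convex_hull([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)])
```

The interior point (1, 1) is a convex combination of the corners, so it belongs to CH(Q) as a set but is not one of its vertices.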

E. The Number of Fingers Detection

The number of fingers can be detected in five steps:

1. Determine the center point coordinate (x, y) of the minimum-area rectangle of the hand contour (hereafter called the box).

2. Determine the start point and depth point coordinates of the defects found.

3. Set the number of fingers = 0.

4. For i = 0 to i < number of defects do

If ((startpoint.y < box.center.y) or (depthpoint.y < box.center.y)) and (startpoint.y < depthpoint.y) and (the distance between startpoint and depthpoint > box height / 6.5)

Then number of fingers = number of fingers + 1.

5. Display the detected fingers.

The value of the variable “number of fingers” increases by one for each defect found that satisfies the following three requirements.

a. startpoint.y < box.center.y or depthpoint.y < box.center.y: the start point or the depth point is above the center point of the contour, or in other words the palm is upward.

b. startpoint.y < depthpoint.y: the start point is above the depth point, or in other words the finger is pointed upward.

c. The distance between startpoint and depthpoint > box height / 6.5: this accounts for a detected hand contour that is too long because it includes the user's arm, so that detection is restricted to the palm only.

Fig. 5 shows the flow chart for detecting and counting the number of fingers.

Fig. 5. Flow chart for detecting and counting the number of fingers.

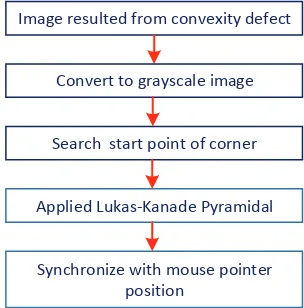
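The counting rule in the steps above can be sketched in Python as follows. The `Defect` class and `count_fingers` function are hypothetical names introduced for illustration; the defects themselves are assumed to come from a convexity defect search that is not shown here. Image coordinates are assumed, so a smaller y value means a higher position in the frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Defect:
    start: tuple   # (x, y) start point of the defect on the hull
    depth: tuple   # (x, y) deepest point of the defect


def count_fingers(defects, box_center, box_height):
    """Count defects that satisfy requirements (a), (b), and (c)."""
    fingers = 0
    for d in defects:
        # (a) start point or depth point lies above the box center
        above_center = d.start[1] < box_center[1] or d.depth[1] < box_center[1]
        # (b) start point lies above the depth point (finger points upward)
        pointing_up = d.start[1] < d.depth[1]
        # (c) start-to-depth distance exceeds box height / 6.5
        long_enough = math.dist(d.start, d.depth) > box_height / 6.5
        if above_center and pointing_up and long_enough:
            fingers += 1
    return fingers


defects = [
    Defect(start=(80, 20), depth=(90, 70)),      # passes all three tests
    Defect(start=(120, 150), depth=(125, 160)),  # below the center: fails (a)
    Defect(start=(60, 90), depth=(62, 95)),      # too shallow: fails (c)
]
n = count_fingers(defects, box_center=(100, 100), box_height=130)
```

With a box height of 130 the distance threshold is 20 pixels, so only the first defect is counted.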

F. Motion Detection

Motion detection is accomplished by tracking the motion of the user's hand and is implemented using the pyramidal Lucas-Kanade method, shown in Fig. 6.

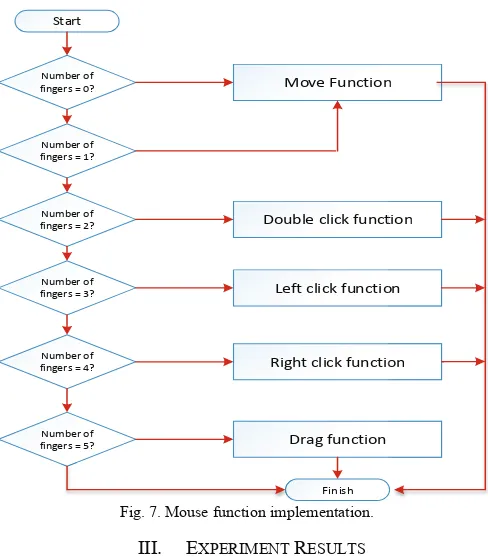
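The core of the Lucas-Kanade method can be illustrated with a minimal single-level, single-window sketch in Python. This is not the pyramidal implementation used in the system: the pyramidal variant applies the same least-squares step at each level of an image pyramid, from coarse to fine. The function name and the synthetic frames are assumptions made for the example.

```python
def lucas_kanade_window(prev, curr, x0, y0, half=2):
    """Estimate one flow vector (u, v) for the window centered at (x0, y0)."""
    a = b = c = 0.0   # entries of the 2x2 matrix [[a, b], [b, c]]
    p = q = 0.0       # right-hand side of the normal equations
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0   # spatial gradient in x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0   # spatial gradient in y
            it = curr[y][x] - prev[y][x]                   # temporal difference
            a += ix * ix
            b += ix * iy
            c += iy * iy
            p -= ix * it
            q -= iy * it
    det = a * c - b * b
    if abs(det) < 1e-9:
        return None   # untextured window: the flow is not determined
    # Solve [[a, b], [b, c]] (u, v)^T = (p, q)^T by Cramer's rule.
    return (c * p - b * q) / det, (a * q - b * p) / det


# Synthetic frames: intensity x*y, then the same pattern moved one pixel to the right.
prev_frame = [[x * y for x in range(10)] for y in range(10)]
curr_frame = [[(x - 1) * y for x in range(10)] for y in range(10)]
u, v = lucas_kanade_window(prev_frame, curr_frame, x0=5, y0=5)
```

For this synthetic pattern the estimate recovers a shift of one pixel in x and none in y. On real images the linearization only holds for small motions, which is the reason a pyramid is used.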

G. Mouse Function Implementation

In this research, hand gesture motion detection and recognition are used to replace the mouse functions. The mouse functions can be implemented using the mouse event functions of the Windows API, found in user32.dll, to synthesize mouse motion and button clicks.

To implement the move, left click, right click, double click, and drag functions, we use the following mouse event flags.

- Move: implemented using MOUSEEVENTF_MOVE.

- Left click: implemented using MOUSEEVENTF_LEFTDOWN followed by MOUSEEVENTF_LEFTUP.

- Right click: implemented using MOUSEEVENTF_RIGHTDOWN followed by MOUSEEVENTF_RIGHTUP.

- Drag: implemented by holding MOUSEEVENTF_LEFTDOWN continuously while moving in the intended direction.

The mouse function applied depends on the number of fingers detected, as shown in Fig. 7.

Fig. 7. Mouse function implementation.
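As a hedged illustration of how these flags can be used, the sketch below calls the `mouse_event` function of user32.dll through Python's `ctypes`. The flag values are the standard Windows constants; the `ACTIONS` table, the action names, and `send_mouse` are hypothetical helpers, and the mapping from finger count to action (Fig. 7) is deliberately not reproduced. On platforms other than Windows the function only returns the flag sequence without sending anything.

```python
import sys

# Standard Windows flag values for mouse_event.
MOUSEEVENTF_MOVE = 0x0001
MOUSEEVENTF_LEFTDOWN = 0x0002
MOUSEEVENTF_LEFTUP = 0x0004
MOUSEEVENTF_RIGHTDOWN = 0x0008
MOUSEEVENTF_RIGHTUP = 0x0010

# Hypothetical action names mapped to the flag sequence that synthesizes them.
ACTIONS = {
    "move": [MOUSEEVENTF_MOVE],
    "left_click": [MOUSEEVENTF_LEFTDOWN, MOUSEEVENTF_LEFTUP],
    "right_click": [MOUSEEVENTF_RIGHTDOWN, MOUSEEVENTF_RIGHTUP],
    "drag_start": [MOUSEEVENTF_LEFTDOWN],   # hold the button, then send moves
    "drag_end": [MOUSEEVENTF_LEFTUP],
}


def send_mouse(action, dx=0, dy=0):
    """Send the events for one action; dx, dy are relative and used for moves only."""
    flags = ACTIONS[action]
    if sys.platform == "win32":
        import ctypes
        for flag in flags:
            is_move = flag == MOUSEEVENTF_MOVE
            ctypes.windll.user32.mouse_event(
                flag, dx if is_move else 0, dy if is_move else 0, 0, 0)
    return flags
```

A drag would then be `send_mouse("drag_start")`, a series of `send_mouse("move", dx, dy)` calls, and finally `send_mouse("drag_end")`.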

III. EXPERIMENT RESULTS

To verify the effectiveness of the proposed approach, we ran tests on a 1.7 GHz Intel Core i7 machine, with image sequences captured at about 10 frames per second.

A. Segmentation using Skin Detection

The segmentation process is intended to obtain the hand image and separate it from the image background. The RGB image captured from the camera is first converted to the YCrCb color space to separate the intensity component (Y) from the color components (Cr and Cb). This conversion reduces the pixel variation caused by variation in room illumination. Fig. 8 shows the original image in RGB space, the image in YCrCb space, and the segmented image produced by skin detection.


Fig. 8. (a) Original Image, (b) YCrCb image, (c) Segmented image.

B. Contours Detection

The binary image from the segmentation result is then used for contour searching. Contour searching will produce more than one contour because the segmentation is imperfect, for example when there are other, smaller objects that resemble the hand. To ensure that only one contour, the hand contour, is processed, the contour with the largest area must be identified. Only the contour with the greatest area is kept, while the others are ignored. The contour search results are shown in Fig. 9.

Fig. 9. The resulted contours search.

C. Convexity Defect and Number of Fingers Detection

The process begins with the formation of the convex hull, followed by the search for defect areas, which can be used to detect fingers. The convex hull points found are marked with small blue circles, as shown in Fig. 10.

Fig. 10. Convexity defect of hand fingerprints.

The system is capable of detecting the number of fingers shown by the user, from one finger to five fingers.

Fig. 11. Detection and recognition of the number of fingers.

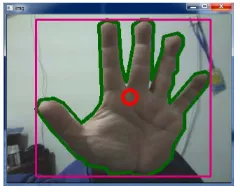

D. Motion Detection

Detection of hand movement is required so that the mouse pointer can follow the user's hand gestures. In this process, a bounding box is formed that encloses the contour of the hand. The upper left corner of the box is marked pt1 and the bottom right corner is marked pt2; these are used as parameters of the cvRectangle function to display the box enclosing the hand contour, as shown in Fig. 12.

Fig. 12. The center point on hand contours.

The center point of the hand contour is found by taking the center point of the box enclosing the hand contour, as shown in Fig. 12. Then, using box.center.x and box.center.y as the coordinates of the contour center point, the mouse cursor can be made to follow the movement of the hand contour. The center point of the hand contour is represented as a small red circle.
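The bounding box and center point computation can be sketched in Python as follows. The helper names and the `gain` parameter, which scales camera pixels to cursor pixels, are assumptions introduced for illustration; the paper does not state how hand displacement is scaled to cursor displacement.

```python
def bounding_box(contour):
    """Return (pt1, pt2): upper left and bottom right corners of the contour."""
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    return (min(xs), min(ys)), (max(xs), max(ys))


def box_center(pt1, pt2):
    """Center of the axis-aligned box spanned by pt1 and pt2."""
    return (pt1[0] + pt2[0]) / 2.0, (pt1[1] + pt2[1]) / 2.0


def cursor_delta(prev_center, curr_center, gain=1.0):
    """Relative cursor movement between two frames (gain is an assumed scale)."""
    return (round((curr_center[0] - prev_center[0]) * gain),
            round((curr_center[1] - prev_center[1]) * gain))


contour = [(10, 20), (30, 20), (30, 60), (10, 60)]
pt1, pt2 = bounding_box(contour)
center = box_center(pt1, pt2)
# The hand center moved from (20, 40) to (25, 38) between two frames.
delta = cursor_delta((20, 40), (25, 38), gain=2.0)
```

The resulting displacement can be passed as the relative dx and dy of a mouse move event.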

E. Discussion

To evaluate the performance of the proposed recognition, we used the system to operate a Windows application, MS Paint, with hand gestures replacing the mouse as the application's user interface. Testing was done under normal illumination, where the system can recognize and track the movement of the user's hand. The center of the hand gesture, represented by a small circle, successfully followed the user's hand movement.

The segmentation process is capable of detecting the user's hand gestures and finding the contours. The system can also count the number of the user's fingers, which is associated with a command button on a mouse. The hand motion is also followed properly by the system, and the mouse click functions represented by the number of fingers detected work.

However, the program has some limitations: changes in environment lighting still influence the segmentation process, in particular the removal of the background.

IV. CONCLUSION

Based on the evaluation and discussion above, it can be concluded that the system can recognize and track hand movement and can replace the mouse functions of moving the cursor and clicking. The segmentation algorithm using skin color detection is good enough to separate the hand image from its background. The convexity defect algorithm works well in detecting the number of the user's fingers, which replaces the mouse click functions. In general, the system can detect and follow hand movement, so that it can be used as a user interface in real time.

Future work is to improve the segmentation process to eliminate the effect of illumination changes. One possibility is to use another color space, such as HSV, to minimize the effect of illumination changes.

REFERENCES

[1] V. Adamo, "A New Method of Hand Gesture Configuration and Animation," Information, pp. 367-386, 2004.

[2] S. Foehrenbach, W. A. König, J. Gerken and H. Reiterer, "Natural Interaction with Hand Gestures and Tactile Feedback for large, high-res Displays," in Workshop on Multimodal Interaction Through Haptic Feedback, 2008.

[3] J. Wachs, H. Stern, Y. Edan, M. Gillam, C. Feied, M. Smith and J. Handler, "A Real-Time Hand Gesture Interface for Medical Visualization Applications," Applications of Soft Computing, Advances in Intelligent and Soft Computing, vol. 36, pp. 153-162, 2006.

[4] T. Ni, R. McMahan and D. Bowman, "Tech-note: rapMenu: Remote Menu Selection Using Freehand Gestural Input," in IEEE Symposium on 3D User Interfaces (3DUI 2008), 2008.

[5] H. Lee, Y. Tateyama and T. Ogi, "Hand Gesture Recognition using Blob Detection for Immersive Projection Display System," World Academy of Science, Engineering and Technology, vol. 6, 27 02 2012.

[6] D. C. Gope, "Hand Tracking and Hand Gesture Recognition for Human Computer Interaction," American Academic & Scholarly Research Journal, vol. 4, no. 6, pp. 36-42, 11 2012.

[7] R. Bhatt, N. Fernandes and A. Dhage, "Vision Based Hand Gesture Recognition for Human Computer Interaction," International Journal of Engineering Science and Innovative Technology (IJESIT), vol. 2, no. 3, pp. 110-115, 2013.

[8] S. Patidar and D. C. S. Satsangi, "Hand Segmentation and Tracking Technique using Color Models," International Journal of Software & Hardware Research in Engineering, vol. 1, no. 2, pp. 18-22, 10 2013.

[9] M. M. Youssef, "Hull Convexity Defect Features For Human Action Recognition," The School of Engineering of The University of Dayton, 2011.