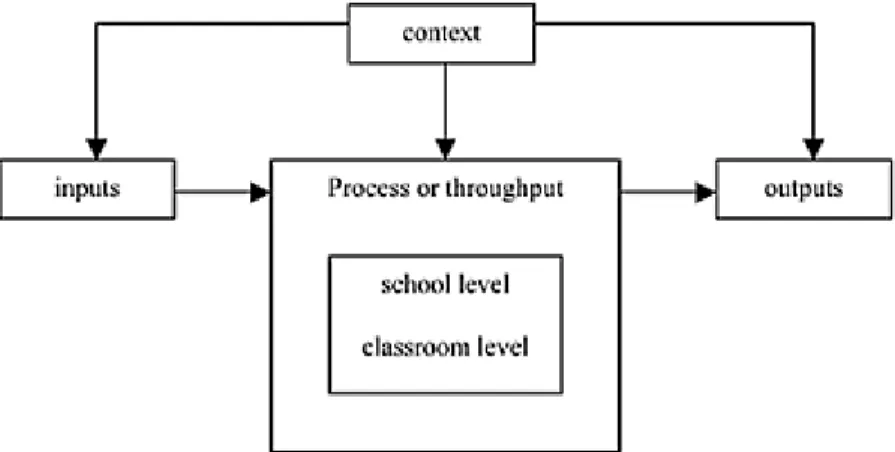

The book concentrates on the application of educational evaluation, assessment and monitoring activities embedded in organizational, management and teaching processes. Its structure is built around a three-dimensional model, on the basis of which different types of educational monitoring and evaluation ("M&E", as it is sometimes abbreviated) are distinguished.

Basic Concepts

Monitoring and Evaluation (M&E) in Education: Concepts, Functions and Context

- Introduction

- Why do we Need Monitoring and Evaluation in Education?

- A Conceptual Framework to Distinguish Technical Options in Educational M&E

- Pre-Conditions in Educational M&E

- Conclusion: Why Speak of “Systemic Educational Evaluation”?

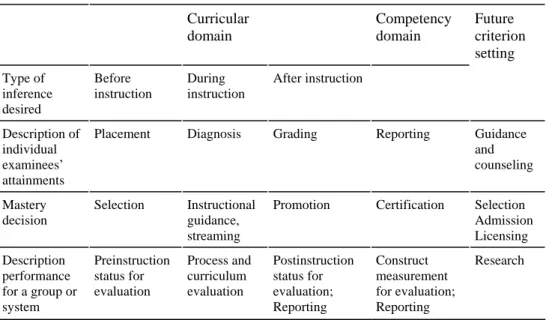

By crossing these three dimensions (see Table 1.1), the main forms of educational M&E can be characterized. As the example illustrates, when it comes to taking concrete steps to establish or improve educational M&E, one cannot take "the political will" to do so for granted.

Basics of Educational Evaluation

Introduction

Basics of Evaluation Methodology

- Evaluation objects, criteria and standards

- Measurement of criteria and antecedent conditions: measuring outcomes

- Controlling for background variables (value added)

- Design: answering the attribution question

Indicators of the context, for example of the school, can be judged according to whether they are favorable or unfavorable for the proper functioning of the school. External validity is threatened by selection biases (uncontrolled initial differences between treatment groups that interact with the treatment) and by artificial aspects of the experimental situation.
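The value-added idea (controlling for background variables) can be illustrated with a minimal sketch. It assumes a single intake variable (prior achievement) and one ordinary least squares regression pooled over all students, and treats a school's mean residual as its value added. The function name and data are hypothetical; operational value-added models are usually multilevel and adjust for more background variables.

```python
import numpy as np

def value_added(prior, outcome, school):
    """Hypothetical sketch: each school's value added is the mean residual of its
    students after an OLS regression of the outcome on prior achievement."""
    prior = np.asarray(prior, dtype=float)
    outcome = np.asarray(outcome, dtype=float)
    school = np.asarray(school)
    # Design matrix: intercept plus one background (intake) variable.
    X = np.column_stack([np.ones_like(prior), prior])
    coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    residuals = outcome - X @ coef
    # Average the residuals within each school.
    return {s.item(): float(residuals[school == s].mean()) for s in np.unique(school)}

# Made-up data: two schools with the same intake but different outcomes.
prior = [1, 2, 3, 1, 2, 3]
outcome = [2, 3, 4, 0, 1, 2]
school = ["A", "A", "A", "B", "B", "B"]
va = value_added(prior, outcome, school)
```

With these made-up data, school A scores one point above and school B one point below what their intake predicts, even though raw mean scores alone would not separate intake from school effect.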

Important Distinctions in Evaluation Theory

- Ideal-type stages in evaluation

- Formative and summative roles

- Accountability and improvement perspectives reconsidered

In this situation, evaluability assessment is described as an analytical activity that focuses on the structure and feasibility of the program to be evaluated. The key variables on which to collect data should be selected on the basis of the evaluation criteria and standards (ends) and the structure of the program (means); see points a) and b) above.

Introduction

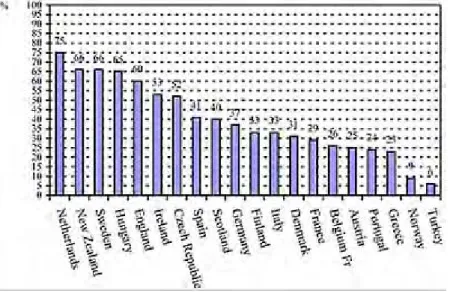

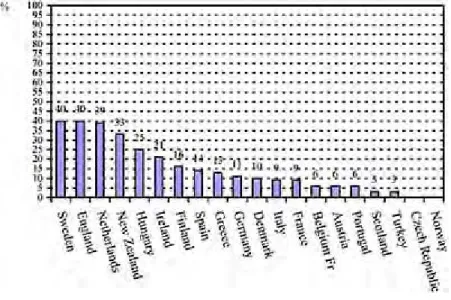

Forms That are Based on Student Achievement Measurement

- National assessment programs

- International assessment programs

- School performance reporting

- Student monitoring systems

- Assessment-based school self-evaluation

- Examinations

Main target groups and types of use of the information: these are more or less the same as in national assessment programs. By allowing the objective scoring of open-ended test items, the testing of more general cognitive skills and "authentic testing," the achievement testing method appears to be "moving up" in addressing these more complex aspects.

Forms That are Based on Education Statistics and Administrative Data

- System-level management information systems

- School management information systems

A management information system (MIS) requires an Office of Education Statistics with a specialized unit for developing indicators in areas where traditional statistics do not fully cover all categories of the theoretical model. The information can be used for all kinds of corrective actions in school management.

Forms That are Based on Systematic Review, Observations and (Self)Perceptions

- International review panels

- School inspection/supervision

- School self-evaluations, including teacher appraisal

- School audits

- Monitoring and evaluation as part of teaching

Finally, some of these forms of school self-evaluation can be combined and integrated with each other. School management and staff teams are the primary audience for school self-evaluation results.

Program Evaluation and Teacher Evaluation

- Program evaluation

- Teacher evaluation

Results of program evaluations can lead to political disputes when the results are critical, the stakes in the program are high and the credibility of the applied research methodology is less than optimal. This type of "input" control has long been one of the most important measures for quality assurance in education, especially when combined with another type of input control, namely centrally standardized curricula.

Theoretical Foundations of Systemic M&E

The Political and Organizational Context of Educational Evaluation

Introduction

Rationality Assumptions Concerning the Policy-Context of Evaluations

According to the third characteristic of the rationality model, planned programs are assumed to be actually implemented. In many cases, a clear exploration of the goals of the evaluation will help overcome resistance.

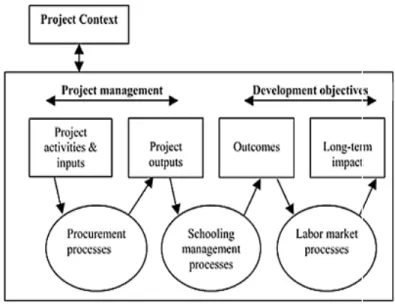

Gearing Evaluation Approach to Contextual Conditions; the Case of Educational Reform Programs

- Phase models

- Articulation of the decision-making context

- Monitoring and evaluation in functionally decentralized education systems

The next question in the sequence is whether the intended direct results of the project have been achieved. The distinction between areas of decision-making in educational systems has some similarities with Bray's use of the term "functional decentralization," as cited by Rondinelli. Examining the location of decision-making in relation to domains and subdomains is one of the most interesting possibilities.

Creating Pre-Conditions for M&E

- Political will and resistance

- Institutional capability for M&E

- Organizational and technical capacity for M&E

But the rules of the game can also be less formal and depend on convention and implicit norms. Institutional capacity for M&E is most realistically addressed as an assessment activity to gain an idea of the general climate in which M&E activities will “land” in a country. In the case of gaps, several options should be considered: narrowing the M&E objectives or changing and improving current practices, e.g.

Conclusion: Matching Evaluation Approach to Characteristics of the Reform Program, Creating Pre-Conditions and Choosing an

The above conjectures all express a contingent approach: the appropriateness or efficiency of the chosen monitoring and evaluation strategy depends on the characteristics of the reform context. The fields of educational evaluation in the sense of measuring student performance, on the one hand, and educational evaluation in the sense of program evaluation, on the other, have developed as two relatively separate fields. In all reform programs where some kind of curriculum revision is at stake, it would also be possible to use assessments of student achievement in the particular curricular area as effect criteria.

Evaluation as a Tool for Planning and Management at School Level

- Introduction

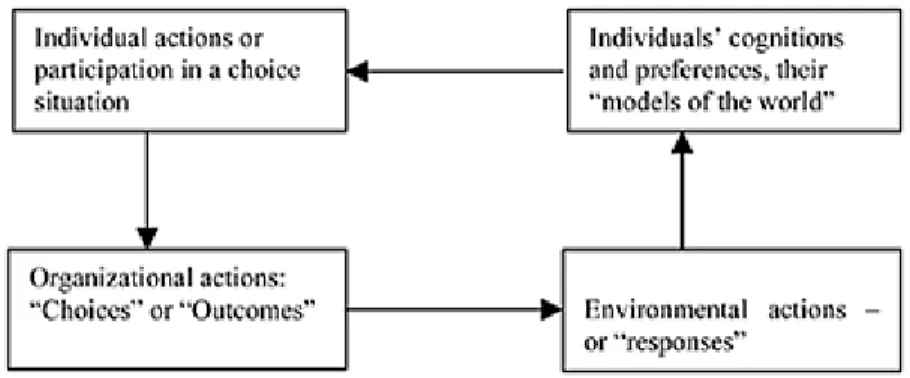

- The Rationality Paradigm Reconsidered

- Synoptic planning and bureaucratic structuring

- Creating market mechanisms: alignment of individual and organizational rationality

- The cybernetic principle: retroactive planning and the learning organization

- The importance of the cybernetic principle

- Retroactive planning

- The Organizational Structural Dimension

- Organizational learning in “learning organizations”

- Management in the school as a “professional bureaucracy”

- Educational leadership as a characteristic of “effective schools”

- Schools as learning organizations?

- Conclusion: The Centrality of External and Internal School Self-Evaluation in Learning and Adapting School Organizations

In the remaining sections, the focus will shift from procedural variations of the rationality model to organizational structures. Operational management is firmly in the hands of the professionals (teachers) in the operational core (classroom) of the organization. Second, "pedagogical management" is not entirely at odds with certain demands of the professional bureaucracy.

Assessment of Student Achievement

Basic Elements of Educational Measurement

Introduction

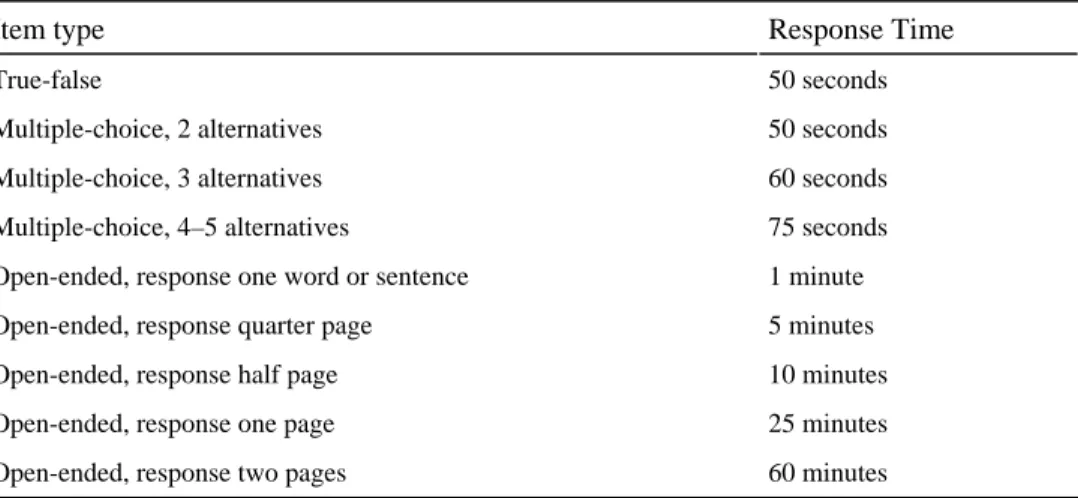

Determining the purpose of the test includes the objective of the test (e.g., curriculum-based skills, cognitive or psychomotor abilities) and the type of decisions to be made (e.g., mastery decisions, pass/fail decisions, selection, prediction). Depending on the purpose of the test, the content area of the test and the level at which the content should be tested can be determined. A later step is test scoring and the analysis of tests and items, including the conversion of scores into grades and the evaluation of test quality as a measurement instrument.

Test Purposes

Another step is the construction of test materials, such as multiple-choice items and open-ended items, or the construction of performance assessments. This chapter concludes with several related topics: assessment systems, item banking, optimal test construction and computerized adaptive testing. Implications for future criterion setting concern knowledge, skills and affective goals that must continue in the period after the teaching has ended.

Quality Criteria for Assessments

A judgment of the relevance and representativeness of the content is based on a specification of the boundaries and structure of the domain to be tested. The purpose of a reliability analysis is to quantify the consistency and inconsistency of student performance on the test. Sources of inconsistency can lie in the properties of the test (for example, its length) or in the evaluation procedure (for example, rater effects).
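One widely used way to quantify the internal consistency of student performance is coefficient (Cronbach's) alpha. The sketch below assumes a complete students-by-items score matrix; the text does not commit to this particular coefficient, and alpha only reflects inconsistency across items, not rater effects. The function name is illustrative.

```python
import numpy as np

def cronbach_alpha(scores):
    """Coefficient alpha for a students-by-items matrix of item scores."""
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]                                # number of items
    item_var = x.var(axis=0, ddof=1).sum()        # sum of the item variances
    total_var = x.sum(axis=1).var(ddof=1)         # variance of the total scores
    return k / (k - 1) * (1 - item_var / total_var)

# Items that order the students identically versus uncorrelated items.
alpha_parallel = cronbach_alpha([[0, 0, 0], [1, 1, 1], [2, 2, 2]])
alpha_uncorrelated = cronbach_alpha([[1, 0], [0, 1], [1, 1], [0, 0]])
```

When all items order the students identically the coefficient reaches 1; when the items are uncorrelated it drops to 0.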

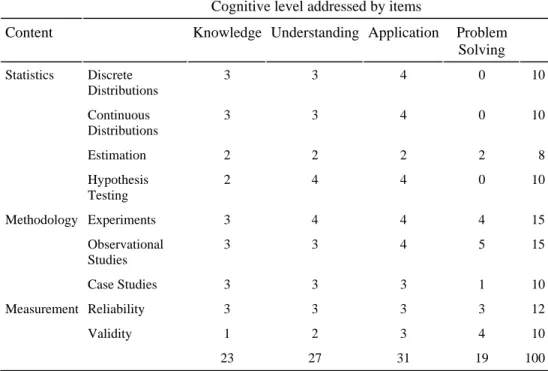

Test Specifications

- Specification of test content

- Specification of cognitive behavior level

The choice of test content involves a trade-off between the breadth of content coverage and the reliability of subscores. The main objective of the specification table is to ensure that the test is a valid reflection of the test domain and purpose. Table cell entries give the relative importance of a specific combination of content and cognitive level of behavior on the test.
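Turning the relative weights in a specification table into item counts can be sketched as follows. The cells, weights and test length are hypothetical, and the largest-remainder rounding rule is an assumption chosen here so that the counts add up exactly to the test length.

```python
from math import floor

def allocate_items(weights, n_items):
    """Spread n_items over specification-table cells in proportion to their
    weights, rounding with the largest-remainder method."""
    total = sum(weights.values())
    quotas = {cell: n_items * w / total for cell, w in weights.items()}
    counts = {cell: floor(q) for cell, q in quotas.items()}
    shortfall = n_items - sum(counts.values())
    # Hand out the remaining items to the cells with the largest fractional parts
    # (ties keep the order in which the cells were listed).
    ranked = sorted(quotas, key=lambda cell: quotas[cell] - counts[cell], reverse=True)
    for cell in ranked[:shortfall]:
        counts[cell] += 1
    return counts

# Hypothetical blueprint: (content area, cognitive level) -> relative weight.
blueprint = {
    ("fractions", "knowledge"): 20,
    ("fractions", "application"): 30,
    ("geometry", "knowledge"): 25,
    ("geometry", "application"): 25,
}
counts = allocate_items(blueprint, 30)
```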

Test Formats

- Selected response formats

- Constructed response formats

- Performance assessments

- Choosing a format

One of the main mistakes made with this format is wording the statement so that it closely matches the wording used in the instructional materials. The relationship between the number of items and the probability of guessing correctly will also be discussed in the next chapter. Fill-in items resemble multiple-choice items in that they can (in principle) be scored objectively and can provide good substantive coverage because of the number that can be administered in a given time.
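Under the simplifying assumption that a student guesses blindly and independently on every item, with success probability 1/(number of options), the number of correct guesses is binomially distributed. The sketch computes the chance of reaching a cut score by guessing alone; the function name and parameters are hypothetical.

```python
from math import comb

def p_pass_by_guessing(n_items, n_options, cut_score):
    """Probability of at least cut_score correct answers when every item is
    answered by a blind guess (binomial model with p = 1 / n_options)."""
    p = 1 / n_options
    return sum(comb(n_items, x) * p**x * (1 - p) ** (n_items - x)
               for x in range(cut_score, n_items + 1))

# True/false items with a 60% cut score on a short and a longer test.
short_test = p_pass_by_guessing(10, 2, 6)
long_test = p_pass_by_guessing(40, 2, 24)
```

For true/false items, reaching 60% correct by guessing is far less likely on the 40-item test than on the 10-item test, which is one reason why longer tests reduce the influence of guessing.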

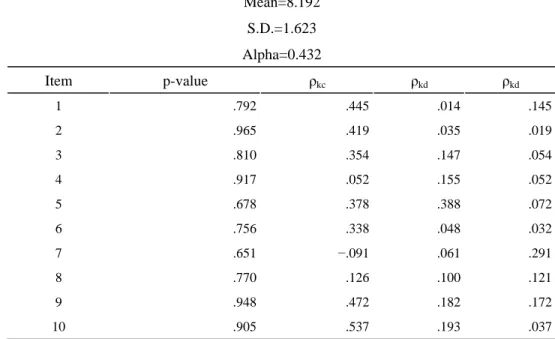

Test and Item Analysis

The expected value of the observed test score equals the true score, i.e., $\mathbb{E}(X) = T$. One consequence of measurement error is that the unreliability of the scores attenuates the correlation between observed scores compared with the correlation between the corresponding true scores. The dependence of reliability on the variance of the true scores can be abused.
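Two classical test theory relations behind this paragraph fit in a few lines: reliability as the share of observed-score variance that is true-score variance, and Spearman's correction for attenuation, which estimates the correlation between true scores from the observed correlation and the two reliabilities. The function names and numbers are illustrative.

```python
from math import sqrt

def reliability(true_var, error_var):
    """Classical reliability: true-score variance over observed-score variance."""
    return true_var / (true_var + error_var)

def disattenuate(r_xy, rel_x, rel_y):
    """Spearman's correction for attenuation."""
    return r_xy / sqrt(rel_x * rel_y)

# An observed correlation of 0.4 between two tests of reliability 0.8
# corresponds to an estimated true-score correlation of 0.5.
r_true = disattenuate(0.4, 0.8, 0.8)

# Same error variance, more heterogeneous population: reliability rises
# from 0.8 to 0.9 although measurement precision is unchanged.
rel_homogeneous = reliability(8, 2)
rel_heterogeneous = reliability(18, 2)
```

The last two lines show how the dependence on true-score variance can mislead: reporting reliability for a more heterogeneous population inflates the coefficient without making individual scores any more precise.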

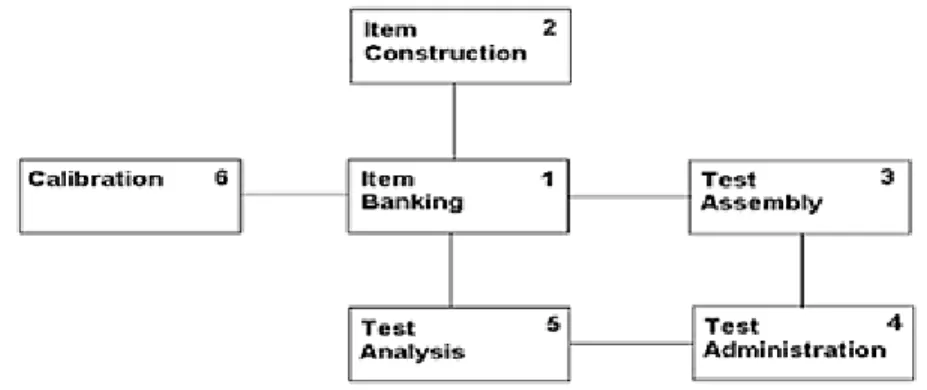

Assessment Systems

- Item banking

- Item construction

- Item bank calibration

- Optimal test assembly

- Computer based testing

- Adaptive testing

What the test constructor has done is change the definition of the population of interest. An overview of the use of computerized testing in psychological assessment can be found in Butcher (1987). Although an appropriate IRT model can support construct validity, it does not imply that the test is reliable.
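Adaptive testing is commonly implemented by selecting, at each step, the unused item with maximum Fisher information at the current ability estimate. This sketch assumes a calibrated item bank under the two-parameter logistic (2PL) model; the ability update between items, stopping rules and exposure control are left out, and all names and values are hypothetical.

```python
import numpy as np

def p_2pl(theta, a, b):
    """2PL probability of a correct response."""
    return 1 / (1 + np.exp(-a * (theta - b)))

def item_information(theta, a, b):
    """Fisher information of 2PL items at ability theta."""
    p = p_2pl(theta, a, b)
    return a**2 * p * (1 - p)

def next_item(theta_hat, a, b, administered):
    """Index of the not-yet-administered item with maximum information."""
    info = item_information(theta_hat, a, b)   # a new array; inputs untouched
    for i in administered:
        info[i] = -np.inf                      # exclude items already used
    return int(np.argmax(info))

# Hypothetical three-item bank.
a = np.array([1.0, 1.0, 1.0])
b = np.array([-2.0, 0.0, 2.0])
first = next_item(0.0, a, b, set())
```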

Measurement Models in Assessment and Evaluation

Introduction

Unidimensional Models for Dichotomous Items

- Parameter separation

- The Rasch model

- Two- and three-parameter models

- Estimation procedures

- Local and global reliability

- Model fit

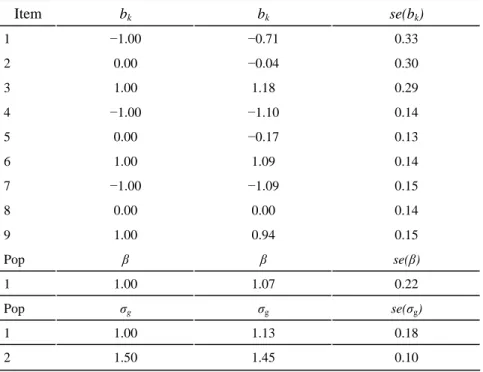

The item parameters are estimated simultaneously with the mean and standard deviation of the ability parameters. In the section on model fit, a test of the appropriateness of the ability distribution will be described. Note that the model violation did not result in significantly biased estimates of the item parameters.
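The response functions of the dichotomous models listed above, and a simple ability estimate, can be sketched as follows. The estimation shown is plain maximum likelihood for one student with known Rasch item difficulties, a simpler stand-in for the marginal procedure described in the text, which estimates item parameters together with the ability distribution. Function names are illustrative.

```python
import numpy as np

def p_3pl(theta, a, b, c):
    """Three-parameter logistic response function.
    With c = 0 it reduces to the 2PL; with c = 0 and a = 1, to the Rasch model."""
    return c + (1 - c) / (1 + np.exp(-a * (theta - b)))

def rasch_theta_ml(responses, b, max_iter=50, tol=1e-10):
    """Newton-Raphson ML estimate of theta given known Rasch difficulties b.
    Only the raw score r enters, since it is sufficient for theta under Rasch."""
    x = np.asarray(responses, dtype=float)
    b = np.asarray(b, dtype=float)
    r = x.sum()
    # The likelihood has no finite maximum for zero or perfect scores.
    if r == 0 or r == len(x):
        raise ValueError("no finite ML estimate for a zero or perfect score")
    theta = 0.0
    for _ in range(max_iter):
        p = 1 / (1 + np.exp(-(theta - b)))
        gradient = r - p.sum()              # derivative of the log-likelihood
        information = np.sum(p * (1 - p))   # minus its second derivative
        step = gradient / information
        theta += step
        if abs(step) < tol:
            break
    return float(theta)

# Two of three equally difficult items correct: the estimate solves 3p = 2.
theta_hat = rasch_theta_ml([1, 1, 0], [0.0, 0.0, 0.0])
```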

Models for Polytomous Items

- Introduction

- Adjacent-category models

- Continuation-ratio models

- Cumulative probability models

- Estimation and testing procedures

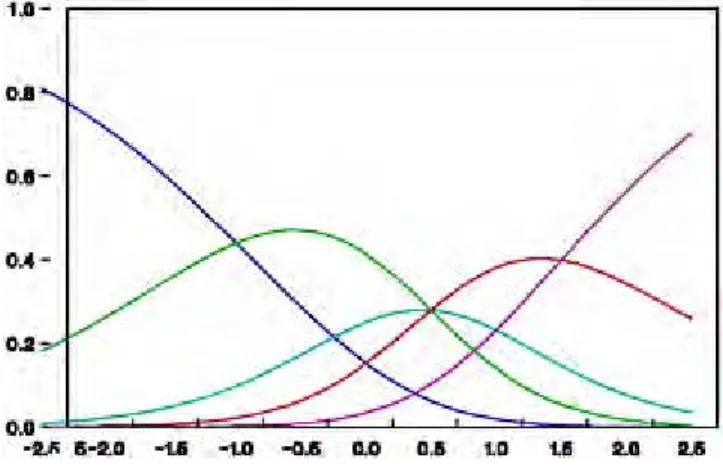

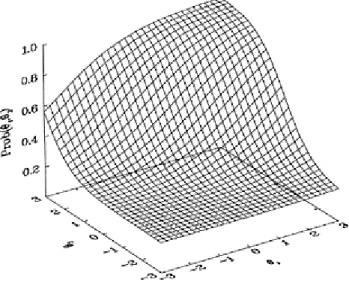

In the following, the response to item $k$ can fall in one of the categories $m = 0, \dots, M_k$. For dichotomous items, the response function was defined as the probability of a correct response as a function of the ability parameter $\theta$. In this formulation, we define the item category function as the probability of scoring in a given item category as a function of the ability parameter $\theta$.
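As one example of an adjacent-category model, Masters' partial credit model gives item category functions for categories $m = 0, \dots, M_k$ of a single item. The sketch assumes that parametrization with step parameters $\delta_{k1}, \dots, \delta_{kM_k}$; the chapter's own parametrization may differ, and the function name is illustrative.

```python
import numpy as np

def pcm_category_probs(theta, deltas):
    """Partial credit model: probabilities of categories 0..M for one item,
    where deltas holds the step parameters delta_1..delta_M."""
    steps = theta - np.asarray(deltas, dtype=float)
    # Category m has logit sum_{h<=m} (theta - delta_h); category 0 has 0.
    logits = np.concatenate([[0.0], np.cumsum(steps)])
    logits -= logits.max()          # guard against overflow in exp
    expo = np.exp(logits)
    return expo / expo.sum()

p = pcm_category_probs(0.7, [-1.0, 0.5, 2.0])
```

The defining adjacent-category property is that the log-odds of category $m$ against category $m-1$ equal $\theta - \delta_{km}$; with a single step the model reduces to the Rasch model.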

Multidimensional Models

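In general, however, these identification constraints will do little to provide an interpretation of the dimensions of ability. This approach is a generalization of the marginal maximum likelihood (MML) estimation procedure for unidimensional IRT models (see Bock & Aitkin, 1981) and has been implemented in TESTFACT (Wilson, Wood & Gibbons, 1991). In the framework of adjacent-category models, the logistic version of the probability of a response in category $m$ can be written as

$$P(X_k = m \mid \boldsymbol{\theta}) = \frac{\exp\left(m \sum_{q=1}^{Q} \alpha_{kq}\theta_q - \beta_{km}\right)}{1 + \sum_{h=1}^{M_k} \exp\left(h \sum_{q=1}^{Q} \alpha_{kq}\theta_q - \beta_{kh}\right)},$$

where $\theta_q$ is the ability on dimension $q = 1, \dots, Q$, $\alpha_{kq}$ is the discrimination of item $k$ on that dimension and $\beta_{km}$ is a category parameter.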
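Why identification constraints do little to interpret the dimensions can be shown with a compensatory two-dimensional 2PL, $P = \operatorname{logistic}(\mathbf{a}^\top\boldsymbol{\theta} + d)$, a parametrization assumed here rather than taken from the text. If abilities are standard normal and uncorrelated, an orthogonal rotation of the ability space, compensated by rotating the discrimination vector, leaves both the ability distribution and every response probability unchanged, so the data cannot distinguish the two solutions. All values are hypothetical.

```python
import numpy as np

def p_m2pl(theta, a, d):
    """Compensatory multidimensional 2PL: logistic(theta . a + d)."""
    return 1 / (1 + np.exp(-(theta @ a + d)))

rng = np.random.default_rng(0)
theta = rng.normal(size=(5, 2))   # 5 hypothetical students, 2 dimensions
a = np.array([1.2, 0.4])          # hypothetical discrimination vector
d = -0.3                          # hypothetical intercept

angle = 0.6
rotation = np.array([[np.cos(angle), -np.sin(angle)],
                     [np.sin(angle),  np.cos(angle)]])

# (theta R)(R^T a) = theta a because R R^T = I, so every probability is unchanged.
p_original = p_m2pl(theta, a, d)
p_rotated = p_m2pl(theta @ rotation, rotation.T @ a, d)
```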

Multilevel IRT Model

- Models for item parameters

- Testlet models

- Models for ratings

In the level 2 model, the values of the item parameters are considered realizations of a random vector. It is assumed, for example, that the item parameters have a 3-variate normal distribution with mean $\mu_p$ and covariance matrix $\Sigma_p$. This approach discards part of the information in the item responses, which leads to some loss of measurement precision.
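The level 2 assumption can be illustrated by drawing item parameters as realizations of a 3-variate normal vector with mean $\mu_p$ and covariance matrix $\Sigma_p$. Beyond the text, the sketch assumes that the normal distribution applies to $(\log a, b, \operatorname{logit} c)$ of the 3PL, so that back-transformed values stay in their admissible ranges; the numbers in `mu_p` and `sigma_p` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical level-2 mean and covariance for (log a, b, logit c).
mu_p = np.array([0.0, 0.5, -1.5])
sigma_p = np.array([[0.04, 0.00, 0.00],
                    [0.00, 0.25, 0.02],
                    [0.00, 0.02, 0.10]])

def sample_item_parameters(n_items):
    """Draw item parameter vectors from N(mu_p, sigma_p) and map them back
    to the 3PL scale (a > 0, 0 < c < 1)."""
    draws = rng.multivariate_normal(mu_p, sigma_p, size=n_items)
    a = np.exp(draws[:, 0])
    b = draws[:, 1]
    c = 1 / (1 + np.exp(-draws[:, 2]))
    return a, b, c

a, b, c = sample_item_parameters(100)
```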

Applications of Measurement Models

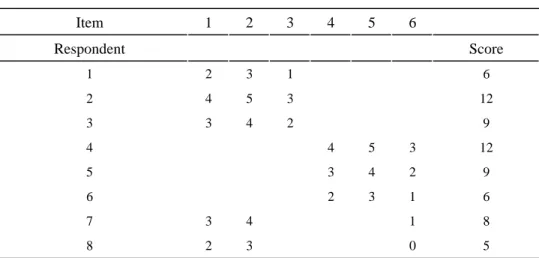

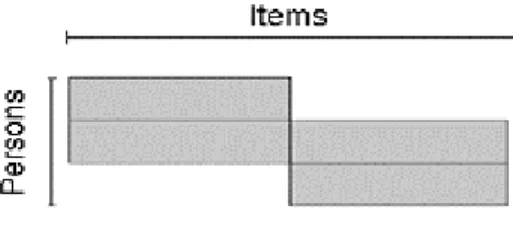

Test Equating and Linking of Assessments

- Data collection designs

- Multi-stage testing

- Test equating

Note that this expectation depends only on the parameters of the items of the reference exam. In the example of Table 8.3, the cut-off point of the reference exam was 27; with this cut-off, 28.0% failed the exam. The bold row marked "English H" gives information about the results of the reference population on the reference examination.
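The expected score on an exam at a given ability is the sum of its item response probabilities, so the expected reference score indeed depends only on the reference items' parameters. A minimal sketch of IRT true-score equating, assuming the Rasch model and hypothetical item difficulties for two 40-item exams (only the cut score of 27 comes from the text): find the ability at which the expected reference score equals the cut, then evaluate the expected score on the new exam at that ability.

```python
import numpy as np

def expected_score(theta, b):
    """Expected number-correct score on an exam with Rasch difficulties b."""
    return float(np.sum(1 / (1 + np.exp(-(theta - np.asarray(b, dtype=float))))))

def theta_for_score(score, b, lo=-10.0, hi=10.0, tol=1e-10):
    """Invert the increasing test characteristic curve by bisection."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if expected_score(mid, b) < score:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def equivalent_cut(cut_reference, b_reference, b_new):
    """Score on the new exam equivalent to the cut score on the reference exam."""
    theta_cut = theta_for_score(cut_reference, b_reference)
    return expected_score(theta_cut, b_new)

# Hypothetical difficulties: the new exam is slightly harder than the reference.
b_reference = np.linspace(-2, 2, 40)
b_new = b_reference + 0.3
cut_new = equivalent_cut(27, b_reference, b_new)
```

Because the new exam is harder in this made-up example, its equivalent cut score comes out below 27.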

Multiple Populations in IRT

- Differences between populations

- Multilevel regression models on ability

The Bayesian approach deals with the posterior distribution of the parameters, for example $p(\theta, \delta, \beta, \mu, \sigma \mid y)$. At the student level, the variables were gender (0 = male, 1 = female), SES (with two indicators, father's and mother's education, with scores ranging from 0 to 8) and IQ (ranging from 0 to 80). The magnitudes of the fixed effects in the MLIRT model were larger than the analogous estimates in the ML model.