Nor can other forms of analysis be expected to provide a quick outline of syntactic analysis. Words, in the sense of the proposed framework, are not mere words; they are themselves schemas, and not only in the sense of cognitive linguistics (Lakoff). The view that words are mere lexical items, however, is incompatible with connectionist results and theorizing on the one hand, and is not at all factually necessary on the other.

Indeed, pattern matching analysis (PMA) is one of the frameworks in which this kind of prima facie simplification is judged to be an "artifact." To put it another way, PMA treats composition as a cooperative computation in the sense of Arbib et al.

Details of pattern decomposition

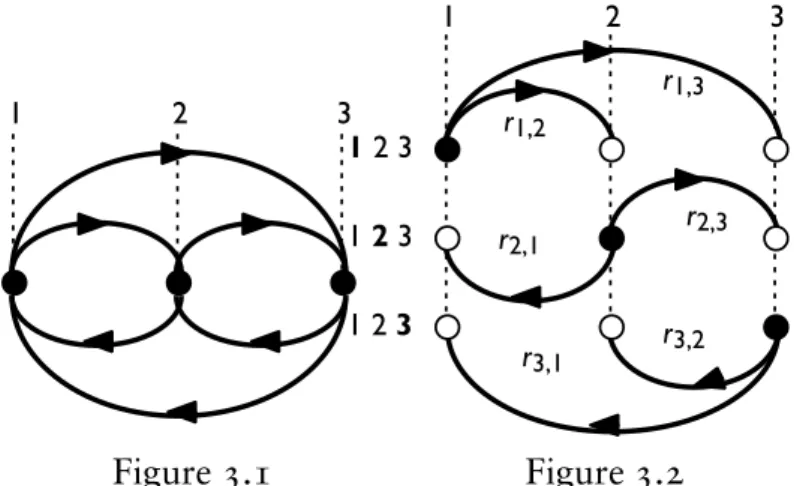

It is essential to note that the i-th subpattern always corresponds to the i-th unit of the base pattern, so that an arrangement of subpatterns 1, 2, …, n always forms an n × n matrix. A procedure, loosely called categorization, is clearly needed, whereby a type-based encoding is derived from the token-based encoding in (11).
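To make the n × n arrangement concrete, here is a minimal Python sketch. It assumes, as the Bill example in the next paragraph suggests, that the i-th subpattern keeps the i-th word in its own position and fills every other position with a role label such as S, V, or O. The sentence Bill loves Mary, the function `decompose`, and the role list are hypothetical illustrations, not the author's notation; categorization (deriving a type-based encoding) is not modeled.

```python
from typing import List

def decompose(words: List[str], roles: List[str]) -> List[List[str]]:
    """Build an n x n matrix whose i-th row is the i-th subpattern.

    Row i keeps the i-th word at position i (the diagonal) and fills every
    other position j with the role label roles[j] (e.g. S, V, O).
    """
    if len(words) != len(roles):
        raise ValueError("each word needs exactly one role label")
    n = len(words)
    return [[words[j] if i == j else roles[j] for j in range(n)] for i in range(n)]

# Hypothetical three-word clause; the role labels are assumed, not given in the text.
matrix = decompose(["Bill", "loves", "Mary"], ["S", "V", "O"])
for row in matrix:
    print(" ".join(row))
```

Each row is one subpattern, and reading down column 0 gives Bill, S, S, which is exactly the set of units that the following paragraph superposes.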

Syntax is a correlation of paradigmatics and syntagmatics

Pattern composition

Base pattern 0 is nothing more than the vertical, column-by-column union of the corresponding subpattern units. For example, Bill, the first unit of the base pattern, is the superposition of Bill in subpattern 1, S in subpattern 2, and S in subpattern 3. It would be inadequate, however, to think that abstract elements such as S, V, and O, called syntactic affixes, simply specify syntactic slots into which lexical units are inserted.
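The column-by-column union can be sketched in Python as follows. This only illustrates the set-theoretic mechanics of superposition, assuming the hypothetical three-word clause Bill loves Mary; treating S, V, and O as labels to subtract when reading off the surface string is a simplification and does not capture the meaning-sensitive role the text assigns them. The names `superpose` and `lexical_items` are illustrative.

```python
from typing import List, Set

def superpose(subpatterns: List[List[str]]) -> List[Set[str]]:
    """Base pattern: the union of the units found in each column."""
    n = len(subpatterns[0])
    if any(len(row) != n for row in subpatterns):
        raise ValueError("all subpatterns must have the same length")
    return [{row[j] for row in subpatterns} for j in range(n)]

def lexical_items(base: List[Set[str]], labels: Set[str]) -> List[str]:
    """Read off the surface string: each column must keep exactly one non-label unit."""
    surface = []
    for column in base:
        words = column - labels
        if len(words) != 1:
            raise ValueError(f"column {sorted(column)} does not resolve to one word")
        surface.append(words.pop())
    return surface

subpatterns = [
    ["Bill", "V", "O"],
    ["S", "loves", "O"],
    ["S", "V", "Mary"],
]
base = superpose(subpatterns)
print(base[0] == {"Bill", "S"})              # True: Bill superposed with S and S
print(lexical_items(base, {"S", "V", "O"}))  # ['Bill', 'loves', 'Mary']
```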

The role of overlaps in pattern composition

To summarize, symbols like S, V, and O are not mere slots; rather, they are meaning-sensitive glues that allow subpatterns to combine into a pattern without reference to syntactic "templates" or "skeletons" such as [S NP [VP V NP]] (whether particular category labels are appropriate is irrelevant here). The key to the emergence of surface forms is, on the one hand, the overlap among subpatterns, which are equated with words, and, on the other hand, the over-specification in subpatterns, which is equated with those overlaps. I claim that PMA is connectionist, or at least compatible with connectionism, in that subpatterns are an extension of the idea of Wickelphones, which Rumelhart and McClelland (1986) used to encode part of the phonology of English.
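Wickelphones encode each segment together with its left and right neighbours, with # marking a boundary. A minimal Python sketch, assuming plain strings as segments, shows the property relevant here: an unordered set of such overlapping triples is often enough to recover the linear order, because adjacent triples share two of their three members. Applying the same encoding to words is an analogy to PMA subpatterns, not the author's formalism; `wickel` and `reassemble` are hypothetical names.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
BOUNDARY = "#"

def wickel(units: List[str]) -> List[Triple]:
    """Encode each unit as (left neighbour, unit, right neighbour)."""
    padded = [BOUNDARY, *units, BOUNDARY]
    return [(padded[i - 1], padded[i], padded[i + 1]) for i in range(1, len(padded) - 1)]

def reassemble(triples: List[Triple]) -> Optional[List[str]]:
    """Recover the unit sequence from an unordered collection of triples.

    Each step looks for the triple whose first two members equal the last two
    members of the current one. Returns None if the chain breaks or never
    reaches the right boundary.
    """
    by_context: Dict[Tuple[str, str], Triple] = {(t[0], t[1]): t for t in triples}
    starts = [t for t in triples if t[0] == BOUNDARY]
    if len(starts) != 1:
        return None
    current = starts[0]
    sequence = [current[1]]
    for _ in range(len(triples)):
        if current[2] == BOUNDARY:
            return sequence
        nxt = by_context.get((current[1], current[2]))
        if nxt is None:
            return None
        current = nxt
        sequence.append(current[1])
    return None

print(wickel(list("cat")))
print(reassemble([("a", "t", "#"), ("#", "c", "a"), ("c", "a", "t")]))
print(reassemble(wickel(["Bill", "loves", "Mary"])[::-1]))
```

This sketch can fail when the same pair of adjacent units occurs more than once in a sequence, since the lookup table then keeps only one continuation for that pair.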

Note that it is patterns, not trees, that match surface forms. This shows that phrase markers are in practice an "intermediate format," needed only to generate strings of preterminals C1 … Cn (e.g., NP V NP P NP) that serve as "patterns," or rather as patterns in the sense of PMA. The arrows ⇑ are interpreted here as the operation of inserting, or attaching, the terminal lexical items into P.

Standard generative accounts assume that the base component of a generative grammar generates strings of preterminals such as P = C1 C2 C3 C4 C5 (e.g., NP V NP P NP) and then matches them against strings of lexical items. In this case, and in similar cases discussed below, base patterns do not need to be defined independently (for example, by base rules), as long as the subpatterns are already defined. The same is true of the following case, in which two overlapping patterns of length 4 are given.
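The generative view described here, in which a preterminal string P is matched against a string of lexical items, can be sketched in Python as a position-by-position category check. The lexicon and the words in it are hypothetical; only the preterminal string NP V NP P NP comes from the text. Note that this models the view PMA argues against, not PMA itself.

```python
from typing import Dict, List

def matches(preterminals: List[str], words: List[str], lexicon: Dict[str, str]) -> bool:
    """True if the words can be inserted into the preterminal string, position by position."""
    if len(preterminals) != len(words):
        return False
    return all(lexicon.get(word) == category for word, category in zip(words, preterminals))

P = ["NP", "V", "NP", "P", "NP"]  # C1 C2 C3 C4 C5 from the text
# Hypothetical lexicon: each word is listed with a single category.
lexicon = {"Ann": "NP", "put": "V", "books": "NP", "on": "P", "shelves": "NP"}

print(matches(P, ["Ann", "put", "books", "on", "shelves"], lexicon))
print(matches(P, ["Ann", "on", "books", "put", "shelves"], lexicon))
```

Here the preterminal string is fixed in advance and lexical items are merely checked against it, which is the division of labor that PMA replaces with overlapping subpatterns.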

In (19), there is only one (empty) subpattern that has the same size as the whole.

What Structure Is Syntactic Structure, if It Isn’t A Tree?

- Classical account of syntactic structure

- A new technical metaphor for syntactic structure

- Pattern matching perspective on composition

- Pattern composition by superposition

- Syntactic structure is more complex than a tree

- Pattern matching analysis of V-gapping

For one thing, the claim that the syntactic structure of (21) is a tree, as in Figure 3.3, is a theory, not an empirical fact. In fact, sentences like Ann bothers us should not be generated by a generative grammar as weak as one consisting only of the context-free rules in (22). In Figure 3.4, the difficulty of specifying BC → DEF graphically is addressed by spelling out exhaustively the contributions of B and C to D, E, and F.

A context-free grammar, which by definition contains no rules like NSP V → NSP V NOP and therefore assigns derivation trees like the one in Figure 3.3 rather than derivation graphs like the one in Figure 3.4, will certainly be the weaker device for describing a set of strings like (21). In what follows, I will argue against the view that the lexicon is a list of context-free entries such as Ann, bothers, and us. Composition is conceived here as the process and/or operation of "building" larger units, e.g., Ann bothers us, from a given set of smaller units.
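The contrast between rules like NSP V → NSP V NOP (or BC → DEF) and context-free rules is a matter of rule shape. A minimal Python sketch, assuming rules are given as lists of symbols: a rule is context-free when its left-hand side is a single nonterminal, and a rule whose left-hand side is no longer than its right-hand side is noncontracting. Noncontracting grammars generate the same class of languages as context-sensitive grammars (setting aside the empty string). The function name `classify` and the rule S → NSP V NOP are hypothetical.

```python
from typing import List, Set

def classify(lhs: List[str], rhs: List[str], nonterminals: Set[str]) -> str:
    """Classify a rewrite rule lhs -> rhs by its shape."""
    if not any(symbol in nonterminals for symbol in lhs):
        raise ValueError("the left-hand side must contain a nonterminal")
    if len(lhs) == 1:
        return "context-free"
    if len(lhs) <= len(rhs):
        return "noncontracting"  # same generative power as context-sensitive rules
    return "unrestricted"

N = {"S", "NSP", "NOP", "V", "B", "C", "D", "E", "F"}
print(classify(["S"], ["NSP", "V", "NOP"], N))
print(classify(["NSP", "V"], ["NSP", "V", "NOP"], N))
print(classify(["B", "C"], ["D", "E", "F"], N))
```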

The diagram in Figure 3.5 illustrates the lattice of precedence relations compatible with Ann p bothers p us, where p denotes the precedence operator. To return to the original question: how is Ann bothers us = (21) composed of, and parsed into, the words Ann, bothers, and us in (25)? From a PMA perspective, words like Ann, bothers, and us in Ann bothers us are not merely lexical items.
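One way to read the precedence relations in Ann p bothers p us, assuming the three words are distinct, is as the set of all ordered pairs in which one word precedes another, together with all order-preserving subsequences; ordered by inclusion, the subsequences form a Boolean lattice. Since Figure 3.5 is not reproduced here, this Python sketch is an interpretation, not a transcription of the figure; `precedence_pairs` and `subsequences` are hypothetical names.

```python
from itertools import combinations
from typing import List, Set, Tuple

def precedence_pairs(words: List[str]) -> Set[Tuple[str, str]]:
    """Every (x, y) with x before y: the transitive closure of adjacency.

    Assumes the words are distinct, so each word names one position.
    """
    return set(combinations(words, 2))

def subsequences(words: List[str]) -> List[Tuple[str, ...]]:
    """Every nonempty order-preserving subsequence, shortest first."""
    return [sub for k in range(1, len(words) + 1) for sub in combinations(words, k)]

sentence = ["Ann", "bothers", "us"]
print(sorted(precedence_pairs(sentence)))
for sub in subsequences(sentence):
    print(" ".join(sub))
```

`itertools.combinations` preserves the input order, so every generated pair and subsequence respects the precedence of the original sentence.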

For a better understanding, it is useful to diagram the relevant points of the pattern composition in (31), as in Figure 3.8. Comparing this diagram with the one in Figure 3.7 above, it should be clear that the standard, tree-based view of composition and decomposition has a few undesirable features. The diagram in Figure 3.9 contains certain unusual entities such as John V fast, and thanks to such exotic entities some kinds of deletion phenomena can be handled without appealing to the additional devices of deletion or movement.

Some Appeals of Pattern Matching Analysis

- Multiple parallel parsing as a communication among autonomous agents

- Where do glues come from?

- Mechanism of automatic checking

- Comparison with Optimality Theory

- Further remarks on pattern composition

The problem is to account for substrings such as ran fast in (33)a, in contrast to (33)b and c, on the one hand, and John slowly in (33)d, in contrast to (33)c, on the other. One of the most crucial implications of this analysis is that such subpatterns dispense with phrase markers such as [NP [V NP]], with or without S and VP labels, and even with specifications such as "John V(P)" and "NP ran," insofar as NP and V(P) are meaning-free, purely syntactic constructs. Although it is admittedly possible to give (39)a an analysis like the following, it is …

In passing, I would like to comment on the relationship between PMA and Optimality Theory (Prince and Smolensky 1993). It is not clear whether the ranking and reranking of constraints in Optimality Theory is truly a result of self-organization, since proponents of the theory do not seem to emphasize the learning process, the very issue that connectionists debated with "classical" theorists such as Fodor and Pylyshyn (1988) and Pinker and Prince (1988) (but see Tesar 1995 and Tesar and Smolensky 1995 for an alternative view). Let me conclude this digression by noting that, while there is no denying that Optimality Theory is a promising linguistic theory, it is somewhat unclear whether it can provide deep insights into human language, since it is, at least officially, a theory of competence and, at worst, yet another form of inquiry into Universal Grammar, which could turn out to be a theory of something unreal.

Since unification plays a key role in pattern matching analysis, John in John ran fast receives two (subtly different) semantic roles, imposed by subpatterns 2 and 3. John is understood as an agent on the condition that it matches S in S ran, where S denotes the agent. Similarly, John is understood as another kind of agent on the condition that it matches S in S V fast, where S again denotes the agent.
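Unification of the kind invoked here can be sketched over nested Python dictionaries standing in for feature structures: compatible information is merged, and conflicting atomic values make unification fail. The feature names `cat` and `roles` and their values are hypothetical encodings of the two agent roles contributed by S ran and S V fast; they are not the author's representation.

```python
from typing import Any, Dict, Optional

FeatureStructure = Dict[str, Any]

def unify(a: FeatureStructure, b: FeatureStructure) -> Optional[FeatureStructure]:
    """Merge two feature structures; return None if they clash.

    Nested dictionaries are unified recursively; any other values must be equal.
    Neither input is modified.
    """
    result = dict(a)
    for key, value in b.items():
        if key not in result:
            result[key] = value
        elif isinstance(result[key], dict) and isinstance(value, dict):
            merged = unify(result[key], value)
            if merged is None:
                return None
            result[key] = merged
        elif result[key] != value:
            return None
    return result

john = {"form": "John"}
s_in_s_ran = {"cat": "S", "roles": {"ran": "agent"}}      # hypothetical, from subpattern 2
s_in_s_v_fast = {"cat": "S", "roles": {"fast": "agent"}}  # hypothetical, from subpattern 3

step = unify(john, s_in_s_ran)
john_unified = unify(step, s_in_s_v_fast) if step is not None else None
print(john_unified)
print(unify({"cat": "S"}, {"cat": "O"}))  # None: the categories clash
```

Keeping the two roles under separate keys lets both survive unification; putting them under one key with different values would make it fail.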

Clearly, the effect can be accounted for if we simply assume that S4 in O4 serves as a kind of abstract verb without recourse to syntactic movement or its conceptual analogue.

On the Descriptive Power of Pattern Matching Analysis

- Basic properties of cross-serial dependency in Dutch

- Pattern matching analysis of the cross-serial dependency

- Comparison with Hudson’s Word Grammar Analysis

- Diagramming co-occurrence matrices

- Is pattern matching a too powerful method?

If (46) or (48) is correct, the pattern matching analysis of the cross-serial dependency construction is straightforward. The adequacy of the pattern matching analysis is perhaps comparable to that of Hudson's analysis of the same construction in his Word Grammar framework. Hudson gives a Word Grammar analysis of the Dutch cross-serial construction in terms of dependency structure, as illustrated below, quoted with some modifications.21
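The defining property of the construction can be checked mechanically. This Python sketch assumes the commonly cited example dat Jan Piet Marie zag helpen zwemmen ('that Jan saw Piet help Marie swim'), which is not necessarily the author's (46) or (48), and links only the i-th noun phrase to the i-th verb, a simplification of Hudson's fuller dependency analysis. `cross_serial_arcs` and `crossing_pairs` are hypothetical names.

```python
from itertools import combinations
from typing import List, Tuple

Arc = Tuple[int, int]  # (left position, right position)

def cross_serial_arcs(n: int) -> List[Arc]:
    """Link NP_i to V_i in the order NP_1 ... NP_n V_1 ... V_n."""
    return [(i, n + i) for i in range(n)]

def crossing_pairs(arcs: List[Arc]) -> int:
    """Count pairs of arcs (a, b), (c, d) with a < c < b < d, i.e. arcs that cross."""
    return sum(1 for (a, b), (c, d) in combinations(sorted(arcs), 2) if a < c < b < d)

words = ["Jan", "Piet", "Marie", "zag", "helpen", "zwemmen"]
arcs = cross_serial_arcs(3)
print([(words[left], words[right]) for left, right in arcs])
print(crossing_pairs(arcs))                      # 3: every pair of arcs crosses
print(crossing_pairs([(0, 5), (1, 4), (2, 3)]))  # 0: nested arcs never cross
```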

The Word Grammar analysis of the cross-serial dependency structure presented in Figure 3.10 is informative, and I claim that PMA endorses the syntactic analyses that Word Grammar provides in terms of dependency structure. In a certain sense, then, a crucial property of the pattern matching representation is that it makes explicit what dependency structures such as the one in Figure 3.10 encode implicitly. These rules are simple enough to ensure the upward compatibility of pattern matching analysis with Hudson's Word Grammar analysis.
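Converting a dependency structure into a co-occurrence matrix, and back, can be sketched in Python. The round trip loses nothing, which is one concrete sense in which a matrix makes explicit what a dependency diagram encodes. The arc set below is a hypothetical simplification for the six words Jan Piet Marie zag helpen zwemmen, not a transcription of Figure 3.10; `to_matrix` and `to_arcs` are illustrative names.

```python
from typing import List, Tuple

Arc = Tuple[int, int]  # (head index, dependent index)

def to_matrix(n: int, arcs: List[Arc]) -> List[List[int]]:
    """Cell [h][d] is 1 when word h is the head of word d, else 0."""
    matrix = [[0] * n for _ in range(n)]
    for head, dependent in arcs:
        matrix[head][dependent] = 1
    return matrix

def to_arcs(matrix: List[List[int]]) -> List[Arc]:
    """Read the arcs back off the matrix in row-major order."""
    return [(h, d) for h, row in enumerate(matrix) for d, cell in enumerate(row) if cell]

words = ["Jan", "Piet", "Marie", "zag", "helpen", "zwemmen"]
# Hypothetical arcs: each verb heads its NP, and zag -> helpen -> zwemmen.
arcs = [(3, 0), (4, 1), (5, 2), (3, 4), (4, 5)]
matrix = to_matrix(len(words), arcs)
for word, row in zip(words, matrix):
    print(f"{word:>8} {row}")
print(sorted(to_arcs(matrix)) == sorted(arcs))  # True: the round trip is lossless
```

The matrix can also hold cells that no single-headed dependency diagram would draw, which is why the mapping runs only one way in terms of expressive power.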

This last point supports an essential argument: dependency diagrams such as Hudson's in Figure 3.10 can never be more powerful than co-occurrence matrices such as this one. But this fact does not mean that it is reasonable to require that all rules of the base component be context-free, thereby confining context sensitivity to the transformational component and keeping the base component within the class of context-free grammars. Again, there is no empirical evidence that all base-component rules are context-free.

Specifying the 'weakest' generative system that provides adequate descriptions of all (and only) the expressions of a natural language, which is one of the goals of generative linguistics, remains feasible even if the base component contains context-sensitive rules and the transformational component is eliminated entirely.

Concluding Remarks

Natural scientists can only hope that differential equations describe classes of natural phenomena very well, and nothing more. If some linguist says that Universal Grammar "explains" this, I will say that such an explanation is just a joke: it is not scientific at all. It is as bad a joke as saying that the theory of differential equations "explains" Newton's equation F = ma.

It stands to reason that a proper subset of a natural language can be a context-free language, whose strings are generated by a context-free grammar, even if the language as a whole is context-sensitive. The Subjacency Condition roughly states that a movement (Move α) must not carry material across more than one bounding node, NP or S, at a time (or CP, especially in the later recasting of the effect in terms of "barriers" in Chomsky 1986a). If one takes the words of the godfather of generative grammar seriously, one soon realizes that the Subjacency Condition is more likely a performance constraint and therefore has nothing to do with UG, the theory of competence.

Note that nothing would be better than being able to dispense with such a specter as competence. It is therefore reasonable, and quite realistic, to think that competence is unreal, an abstraction that has nothing to do with the linguistics of natural language. The multiplicity of deep structure was suggested by Lakoff (1974) in his treatment of the phenomenon called syntactic amalgams.

In this respect, the spirit of PMA closely resembles the framework of underspecification in Archangeli's sense, under a charitable misreading of the notion.