Evaluating the Usability of PowerMeeting

Human-Computer Interaction (HCI) usability testing is now widely adopted in the design, implementation and evaluation of software interfaces to improve their performance and enhance user satisfaction. PowerMeeting is a real-time, web-based collaborative system. It provides a range of collaborative tools, including a microblog, a brainstorming tool, a text editor and a voting tool. To investigate the interface and functionality of the PowerMeeting platform, an HCI usability test was conducted using a series of instruments, such as scenario design, open-ended interviews and heuristic questionnaires. The main purpose of this report is to describe the test, analyse its results and provide suggestions for future design.

KEYWORDS

PowerMeeting, scenario, usability testing, heuristics, collaborative work

INTRODUCTION (GANG YANG & XUEBIN XU)

Background Research (Gang Yang)

In modern society, teamwork is becoming increasingly common in universities, corporations and research institutions. It tends to be more effective than individual work when the team cooperates well. To achieve a common goal, teamwork often involves a series of activities, such as discussing problems, distributing assignments, making decisions, voting and brainstorming. In the past, efficient teamwork could only be conducted face to face in a meeting. Holding such a meeting sometimes required team members to spend a large amount of money and time on travel.

Along with the development of computer and telecommunication technology, web-based collaborative software tools (also called groupware) are emerging to help people work together over the Internet [4]. Common features of these tools include wikis, online chat, blogs, teleconferencing, videoconferencing and document sharing. Since a significant number of multinational corporations, such as Google, Microsoft and Apple, actively promote their online groupware each year, such products are being used more and more in people’s daily work and life.

(Xuebin Xu)

Groupware includes functions that enable people to chat, share resources and collaborate with each other, and even to hold videoconferences or play multi-user games. This kind of interaction requires a design that supports efficiency. As Hiltz and Turoff [6] mentioned, groupware has existed since the 1970s in the form of inter-organizational computer-mediated communication systems, such as within-company electronic mail systems. Since then, both the quality and quantity of groupware have improved considerably to meet the various needs of collaboration all over the world. However, information and communication technologies account for most of a company’s capital expenditure, especially for international companies and information technology companies, which rely strongly on groupware to perform a set of activities.

On the other hand, groupware demonstrates its value not only through the convenience it provides but also through the profit it can bring to companies. Brézillon et al. [2] demonstrate this in their case study of a news organization in one of Ireland’s major cities, where the investment in groupware plays a core role among all IT investments. As Bhatt et al. [1] specified, groupware allows its users to express and share their feelings and knowledge, and to construct an efficient working environment and a peaceful virtual community, without the constraints of time and location. Furthermore, as Wang [10] mentioned, “Synchronous groupware is software that enables real-time collaboration among collocated or geographically distributed group members”.

on the Mac OS. One way around this issue is to develop web-based groupware applications. The advantages and disadvantages of this method are clear: web-based applications overcome the OS limitations, and user licenses can be significantly less costly than those of desktop applications. In addition, web-based applications are easier to maintain and update. However, the web introduces its own limitations, which stem from the technology available on the web.

PowerMeeting is a web-based synchronous groupware framework developed using features provided by Web 2.0. PowerMeeting is built on the Google Web Toolkit (GWT) [5], which offers a rich user experience by providing a rich graphical user interface and concurrent client-server connections, as mentioned by Dewsbury [5, also cited by 10]. Furthermore, the aim of PowerMeeting is to provide a real-time client-to-client collaborative application framework. Thanks to continually improving technologies such as Web 2.0 and AJAX, PowerMeeting is able to supply functions such as online document sharing and co-editing, online chatting (both text and voice), online conferencing, and group activities such as brainstorming and voting. Compared with current groupware applications, PowerMeeting demonstrates some benefits. conferencing, the common feature, PowerMeeting beats the other best-known groupware in the areas of cost, operating system dependency and group activities, such as brainstorming and voting.

Report Organisation (Gang Yang)

The rest of this report is organised as follows. The next section describes the methods used in the evaluation. The results obtained from the evaluation are then presented, along with tables and diagrams. A subsequent section discusses and analyses the results and includes suggestions for improvement. Finally, the conclusion summarises the findings of the evaluation.

METHODS (DENISSE ZAVALA & VASITPOL VORAVITVET)

Usability evaluation (Denisse Zavala)

By providing a set of functions that a group of distributed users can find useful and appealing, PowerMeeting tries to “make synchronous collaboration an integral part of collaboration support on the Web” [11]. Any system that tries to achieve this purpose should not only satisfy the users’ needs but also be designed so that it is easy to understand and easy to use. There are different definitions of usability, but all of them involve a user, a task and a product [9]. Furthermore, usability is concerned with how well a user can perform a task with a product. For these reasons, a usability evaluation is needed to identify ways in which PowerMeeting can be enhanced to adequately support users in performing collaborative tasks, and thus make it an integral part of collaboration support on the Web.

Sample Size (Vasitpol Voravitvet)

Given the size of the project and the time available to complete the evaluation study, a convenience sample was used to perform the evaluation. Although the group decided to test the software with two groups of people, the number of participants was kept small in both groups.

The experienced users were randomly assigned; they were 7 students from the HCI class. The group expected more objective feedback from them, which would help in identifying the issues of the system. In addition, 9 non-experienced users volunteered to take the test. The group expected them to reveal issues that the experienced users might overlook, and also expected suggestions based on these users’ first-hand experience.

During the group usability testing, one of the students was selected to be a session chair; he was responsible for controlling the meeting.

Demographics (Vasitpol Voravitvet)

Participants came from different academic backgrounds, but the majority came from computer-oriented and business-oriented backgrounds.

inexperienced. 7 out of the 16 participants were students from the “Human Computer Interaction and Web User Interface” module at Manchester Business School and already had experience using PowerMeeting; they represent the “experienced” group. The rest of the participants were students from other courses who had never used PowerMeeting before; they form the “inexperienced” group.

The inexperienced group was included in the evaluation in order to gain a clear understanding of how experience affects the interaction with PowerMeeting; in other words, we wanted to know how different the interaction with the

All the experienced participants reported that they had used groupware systems before and that they often use this kind of software.

Procedure (Vasitpol Voravitvet)

The test was divided into two parts: individual testing and group testing.

The test with experienced users was conducted in the lab, where they were given a set of predefined individual and group tasks. The experienced users performed the test on PCs connected to a wired network. The non-experienced users were likewise given a set of predefined individual and group tasks, but their test was conducted at the weekend using laptops connected to the wireless network.

Individual Testing

1. The participant was provided with a set of individual tasks to complete.

2. After the participant finished each module of the test, they were asked to fill in a questionnaire rating the difficulty of each task.

Group Testing

1. The group of participants was provided with a scenario, which explained the situation and the aim of the meeting. They were also provided with a set of tasks to complete.

2. One of the participants was selected to be a session chair; he was responsible for controlling the meeting. The session chair was provided with some additional tasks to perform before and during the meeting.

3. After the participants finished the whole test, they were asked to fill in a questionnaire containing questions on ease of use and satisfaction with the tasks.

After the participants had completed all the tasks, they were asked to complete the system questionnaire, which asked about satisfaction with the system. Moreover, the session chair and 4 other participants were asked to complete the heuristic evaluation.

Tasks (Denisse Zavala)

The tasks designed for the evaluation aimed at testing the following features of PowerMeeting:

Brainstorming, including categorisation, voting and reporting sub-features.

Richtext

Microblog (or blog)

Chat

Although not a particular feature of PowerMeeting, the evaluation also comprised the general function of logging in.

Individual tasks

The tasks in this category were designed to evaluate each feature on its own; they are independent and the order of execution is not important.

1. Log in: Participants were asked to create a new session and then log in. They were provided with a session name and username. They were also asked to log out of the system and log in again.

2. Brainstorming: This task required participants to create a brainstorming agenda item and then to add the different ideas provided. They were also asked to create three categories and to organise the ideas into these categories. Deleting and editing ideas were also included in this task. After the ideas were categorised, participants were asked to vote on them and analyse the report.

3. Richtext: For this task, participants needed to create a new richtext agenda item, then type a given text and try the formatting capabilities, such as making the text bold and changing the font size, colour and style. Users were asked to add an image to the text and then save their changes.

4. Microblog: For this task, users were asked to create a new microblog agenda item. After that, they were asked to add certain text in a certain order, and then to delete and edit some of the text. They were asked to report the time of their first blog post.

5. Agenda items: Users were asked to reorder the agenda items, moving the last item in the list to the top of the list.

Group tasks

In order to evaluate the collaborative nature of the system, a set of group tasks was designed. A meeting chair guided these tasks; they required a single item to be used by all the participants at once. The tasks are the following:

1. Log in to the system. Users were provided with a username and a shared session name to access the system.

3. Voting. Participants were asked to submit their votes on four categories that had previously been created in a different agenda item. They needed to reach a decision and were encouraged to use the chat to contact their teammates. The session chair was responsible for leading the conversation and trying to reach the decision in a timely manner.

4. Richtext. The meeting chair unlocked meeting mode and then stepped away from the meeting. Participants were asked to create a new richtext item and add some text to it. Without prior notice, the meeting chair returned and locked meeting mode again.

Testing Environment (Vasitpol Voravitvet)

All the tests with the experienced users were conducted on a Windows XP-based PC. The computer was connected to the Internet via LAN. Internet Explorer 7 was used to perform the test.

All the tests with the non-experienced users were conducted on a laptop running the Windows Vista or Windows 7 operating system. The laptop was connected to the Internet via the University of Manchester wireless network. Internet Explorer 7, Mozilla Firefox or Google Chrome was used to perform the test.

Data collection (Denisse Zavala)

In order to collect data during the evaluation each participant was observed by a designated monitor. Monitors used a stopwatch to measure how much time participants took to complete each task.

Preference data were collected during the evaluation through questionnaires that were answered by participants at the end of each task and one general questionnaire answered at the end of the evaluation.

We used a closed-question questionnaire to gather general information about participants, such as gender, age group, academic background, familiarity with groupware software. At the end of the session we used a similar questionnaire to find out the likelihood of the participant to use PowerMeeting again. Finally, after the end of the usability test, we asked the users in an open-ended interview about their opinion concerning PowerMeeting.

Usability metrics (Denisse Zavala)

The usability metrics used in the evaluation were performance metrics and self-reported metrics.

Performance metrics

Performance metrics were used to measure how well participants were interacting with the system. We used the following performance metrics to measure individual and group tasks:

Task success: To measure how effectively users were able to complete the tasks we used binary success. Each task designed for the evaluation had a clearly stated goal, so participants were able to determine whether or not they had completed it. Success was determined by the participant’s statement of having completed the task and confirmed by the monitor assigned to that participant.

Time-on-task: We measured efficiency through this metric. For individual tasks, monitors started timing after the participant had read the instructions: the timer was started when the participant clicked the first item (or hit the first key on the keyboard) involved in the task, and stopped when the monitor confirmed the task was completed. For collaborative tasks only one timer was used, measuring the total time elapsed to reach the conclusions required by the task.
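The two performance metrics above can be illustrated with a minimal sketch. The `TaskRecord` structure, the function names and the sample values are hypothetical and are not taken from the study's data; averaging time only over completed attempts is an assumption that mirrors the report's later remark that only users who completed a task were included in the time measurement.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """One participant's attempt at one task (hypothetical structure)."""
    participant: str
    task: str
    completed: bool   # binary success, as confirmed by the monitor
    seconds: float    # stopwatch time from first click/keypress to confirmation


def success_rate(records):
    """Fraction of attempts that ended in success (binary success metric)."""
    return sum(r.completed for r in records) / len(records)


def mean_time_on_task(records):
    """Mean time over completed attempts only; None if nobody completed."""
    times = [r.seconds for r in records if r.completed]
    return mean(times) if times else None


# Illustrative values only, not measurements from the evaluation.
records = [
    TaskRecord("P1", "login", True, 40.0),
    TaskRecord("P2", "login", True, 60.0),
    TaskRecord("P3", "login", False, 120.0),
    TaskRecord("P4", "login", True, 50.0),
]

print(success_rate(records))
print(mean_time_on_task(records))
```

Keeping success and time in the same record makes it straightforward to exclude failed attempts from the efficiency figures without losing them from the effectiveness figures.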

Self-reported metrics

In order to obtain information about the participants’ perception of PowerMeeting and their interaction with it, we used the following methods:

Likert scales: We used 5-point scales of ease-of-use and of satisfaction, where the highest rate (5) represented very easy to use and very satisfying, respectively.

Semantic differential scales: 5-point scales were used in the evaluation such as the following: useless to useful, inefficient to efficient and confusing to easy-to-understand.

Heuristic evaluation (Denisse Zavala)

The usability evaluation of PowerMeeting included an expert evaluation (heuristic evaluation). We asked 5 participants to rate PowerMeeting using each of Nielsen’s heuristics [8].

Data analysis (Denisse Zavala)

Descriptive statistics, such as mean and standard deviation, were used to analyse the data collected.

A t-test was used in the analysis to find out whether there was a significant difference in task completion time between experienced and inexperienced users.
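As an illustration of such a comparison, the sketch below computes the statistic and degrees of freedom of Welch's unequal-variance two-sample t-test using only the Python standard library. The report does not state which variant of the t-test was applied, so the choice of Welch's form is an assumption, and the two samples are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's two-sample t-test on samples a and b."""
    na, nb = len(a), len(b)
    # Squared standard errors of each sample mean (sample variance / n).
    va, vb = variance(a) / na, variance(b) / nb
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Hypothetical completion times in seconds, not data from the study.
experienced = [100, 110, 120, 130, 140]
inexperienced = [150, 170, 190, 210, 230]

t, df = welch_t(experienced, inexperienced)
print(f"t = {t:.3f}, df = {df:.3f}")
```

The absolute value of t is then compared with the critical value of the t-distribution for the computed degrees of freedom; a larger value indicates a significant difference at the chosen level.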

RESULTS (KYRIAKI TSIAPARA)

Performance: Effectiveness

The first aspect of usability tested in our report is the effectiveness, measuring whether the experienced and inexperienced users were able to complete the tasks.

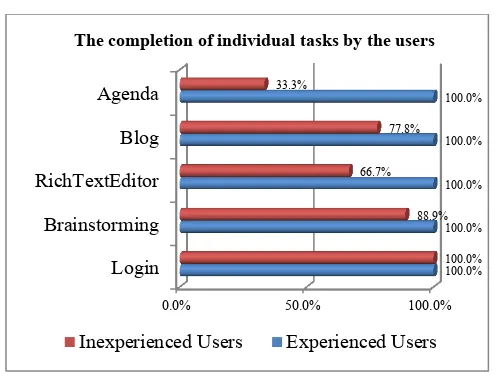

Figure 1. Task success.

Initially the users were asked to complete the tasks individually: all experienced users completed all the tasks, while the inexperienced users faced several problems. However, malfunctions of PowerMeeting during our testing were, to some extent, responsible for the lower percentages.

Collaborative/group task

The experienced users managed to complete the collaborative task, but the inexperienced users were not able to use PowerMeeting collaboratively. As far as the inexperienced users were concerned, only those who managed to complete the tasks are included in this measurement.

Figure 2. Time needed for completing each task (in seconds)

Overall, the inexperienced users needed 82% more time than the experienced users (813.32 seconds for experienced users, as opposed to 1480.41 seconds for the inexperienced users), partly because of technical faults in the system, which forced the inexperienced users to repeat parts of some tasks (for example, inexperienced user 4 had to log in again and re-type the text in RichTextEditor because the system had stopped responding).
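The 82% figure follows directly from the two totals quoted above. A minimal sketch of the arithmetic, where the function name `percent_more` is chosen here for illustration:

```python
def percent_more(baseline, other):
    """Additional amount of `other` relative to `baseline`, in percent."""
    return (other - baseline) / baseline * 100


experienced_total = 813.32     # seconds, as reported above
inexperienced_total = 1480.41  # seconds, as reported above

extra = percent_more(experienced_total, inexperienced_total)
print(round(extra))  # 82
```

The same calculation, applied per task, presumably yields the per-task percentages listed next.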

For each task, the additional time needed by the inexperienced users, as a percentage (for those users whom the system allowed to complete each task), was:

188% to log in again (some users needed to change three browsers to be able to log in again and restart the system)

69% for the brainstorming (the system did not respond in many cases)

76% for the RichTextEditor (mostly spent on typing the text)

64% for posting and editing the Blog

47% for the agenda

For the above tasks, we use the Student t-distribution, since the sample size is too small to use the z values of the normal distribution.

For a 95% confidence interval, the critical value of the two-tailed t with n-1=7-1=6 degrees of freedom is 2.447. As a result the constructed confidence interval is:

$$\text{Mean} \pm t_{\text{critical}} \cdot \frac{\text{Standard Deviation}}{\sqrt{n}}$$
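The interval formula above can be sketched in a few lines of Python. The critical value 2.447 is the one quoted in the text for 6 degrees of freedom; the seven sample times are hypothetical, and the use of the sample (n-1) standard deviation is an assumption, since the report does not say which estimator was used.

```python
from math import sqrt
from statistics import mean, stdev

T_CRITICAL_DF6 = 2.447  # two-tailed 95% critical value for 6 degrees of freedom


def confidence_interval(times, t_critical):
    """Return (lower, upper) bounds of mean ± t * s / sqrt(n)."""
    centre = mean(times)
    # stdev() is the sample standard deviation (n - 1 in the denominator).
    half_width = t_critical * stdev(times) / sqrt(len(times))
    return centre - half_width, centre + half_width


# Hypothetical task times in seconds for n = 7 users, not data from the study.
times = [10, 12, 14, 16, 18, 20, 22]
low, high = confidence_interval(times, T_CRITICAL_DF6)
print(f"95% CI: ({low:.2f}, {high:.2f})")
```

With so few participants the interval is wide, which is worth keeping in mind when comparing the two groups.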

Based on the above formula, we performed the calculations, and the results are interpreted as follows:

“We are 95% confident that the true mean time for the


To measure the users’ satisfaction with and beliefs about PowerMeeting, we administered a three-part questionnaire. Both experienced and inexperienced users answered the same questions. Since one of the experienced users and some inexperienced users did not reply to all questions, we base our results only on those who responded, so that the data are more suitable for analysis and comparison.

Individual tasks

Login

Figure 3. Ease of login

The two groups of users rated how easy they considered login and re-login to be. The experienced users believe that it is an easy procedure, while the inexperienced ones find it more difficult or have a moderate opinion (‘nor difficult, nor easy’).

Brainstorming

The users rated how easy or difficult they believed each brainstorming activity was, where 1 was very difficult and 5 was very easy. The figure below provides the average of each item for both clusters. Averages below 3 are considered difficult, averages around 3 moderate, and averages above 3 easy.

Figure 4. Brainstorming easiness/difficulty

Overall, the experienced users perceive the brainstorming tasks as easy. The most difficult item is the modification of ideas, which is the only item that receives a grade lower than 3.

The second-lowest average is for deleting ideas (average 3/5), and all the other items are ranked as easy, with the item ‘creating a brainstorming idea’ on top (average 4.67/5).

On the other hand, the inexperienced users found this task more difficult than the other cluster did. Deleting (2.13/5) and modifying (2.63/5) ideas are the two most difficult tasks in this group too, while creating and adding ideas are the only tasks that obtain an average over 3.

Figure 5. Overall brainstorming experience rating

As shown in Figure 5, the inexperienced users are generally dissatisfied with the system, while one out of two experienced users is satisfied.

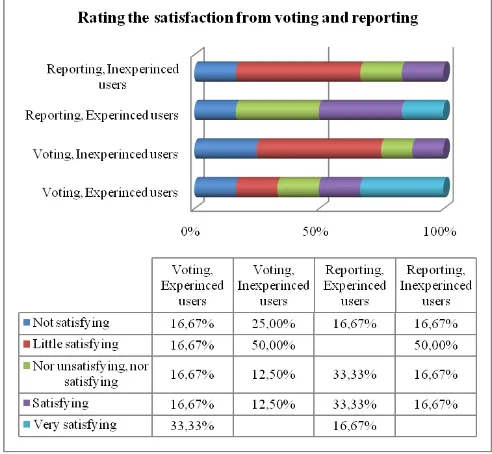

Figure 6. Voting and reporting satisfaction rating

better overall result for reporting), while three out of four inexperienced users are dissatisfied by both options.

Additionally, these functions are perceived as difficult by half of the inexperienced users, while the experienced ones tend to find them moderate or easy (Figure 7).

Figure 7. Voting and reporting ease of use rating.

RichTextEditor

Figure 8

First of all, the ease of use of each function of RichTextEditor was tested. Although we expected that the experienced users would find the system easier, saving changes was considered less difficult by the inexperienced users. Overall, it seems that users do not face many difficulties in editing the text. As a consequence, they are satisfied with this function (Figure 9).

Figure 9

Blog

Figure 10

The easiness of each function in blogging was also measured (Figure 10). Here, the inexperienced users faced more problems with deleting (2.67/5) and editing (2.83/5) the posts.

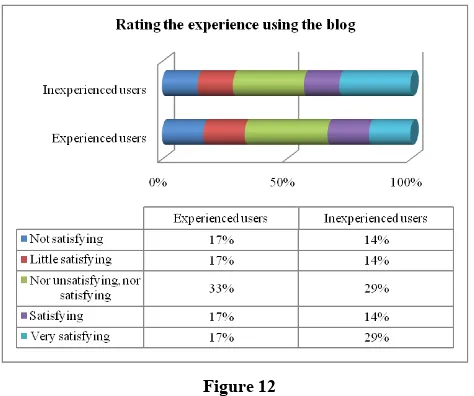

Figure 11

Figure 12

Overall, according to figure 12, the users are satisfied or have a moderate attitude towards blogging, with inexperienced users scoring slightly better.

Agenda

Only two experienced and three inexperienced users rated the use of the agenda. Neither experienced user is satisfied with this item (they rated it 1 and 2 out of 5). Two out of three inexperienced users are not satisfied (rating it 1 and 2), while the third user is satisfied (giving 4 out of 5).

Collaborative/group task

Only the experienced users have responded to this part of the questionnaire.

Chat

Figure 13

As shown in Figure 13, the users have a moderate or positive attitude toward chatting in PowerMeeting. They also believe that it is easy to use and fast, but that it looks poor (Table 2).

The chat is…

1 2 3 4 5

Difficult to use 16.66% 66.66% 16.66% Easy to use

Rich 16.66% 33.33% 50% Poor

Slow 16.66% 16.66% 33.33% 33.33% Fast

Table 1

The researchers asked the users to grade two potential improvements (Figure 14). While the ability to change the size of the chat on the screen received better grades, overall the users did not strongly support these improvements.

Figure 14

Finally, the chat loses in comparison with a face-to-face meeting (Figure 15).

Figure 16

The brainstorming is…

1 2 3 4 5

Difficult to use 33.33% 66.66% Easy to use

Useless 16.66% 50% 33.33% Useful

Inefficient 16.66% 50% 33.33% Efficient

Table 2

Overall the users have a moderate opinion towards brainstorming (Figure 16) and they believe that it is generally easy to use, useful and efficient (Table 3).

Additionally, the users’ strongest preference for voting is to rank the options from the most suitable to the least suitable (66.66%), followed by the current approach of percentages and the choice of the best option (16.66% each).

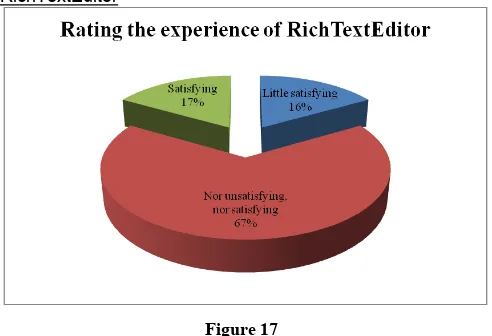

RichTextEditor

Figure 17

A moderate opinion for RichTextEditor is expressed as well (Figure 17).

Also, the participants were asked ‘While you were working on your text, the session chair selected ‘In meeting’. This resulted to losing all your work. How would you comment that?’. They had to choose only one option.

Their responses are as follows:

1. ‘It is a serious problem/pitfall of the system, making me feel really unsatisfied’ (50%)

2. ‘I did not like it, but not a very important problem and thus there is no need to change it’ (33.33%)

3. ‘It is accepted because this way we can work all together, viewing the same screen’ (16.66%)

4. None chose the ‘other option’

General Questions about groupware software

At the end the users completed three questions relating to their experience with groupware software and PowerMeeting. Their responses are:

All the experienced users and almost none of the inexperienced users had used groupware software before (Figures 18, 19).

The experienced users often use groupware software, while the inexperienced users never do (Figures 20, 21).

More than half of the experienced users will not use PowerMeeting in the future, while inexperienced users are not sure about that (Figures 22, 23).

Figure 18 Figure 19

Figure 20 Figure 21

SUS - the System Usability Scale

The System Usability Scale was administered right after the PowerMeeting test and before the discussion took place [3].
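For reference, the conventional SUS scoring procedure can be sketched as follows: each of the ten items is answered on a 1 to 5 scale, odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score between 0 and 100. The report does not describe how its scores were computed, so this is the standard procedure rather than a confirmed description of the authors' calculation, and the function name is chosen here for illustration.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


# A participant who answers 3 (neutral) to every item.
print(sus_score([3] * 10))  # 50.0
```

A neutral response pattern therefore yields 50, which gives a rough reference point when reading the scores reported for the two groups.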

Four experienced and two inexperienced users answered this questionnaire. The results were:

User SUS Score

Also this questionnaire was answered by four experienced and two inexperienced users. The results were:

Experienced number input (the other answer was ‘NA’).

Interviews

After the end of the test, we asked the inexperienced users for their opinions concerning the system. The main points were:

1. In general, the system cannot arrange anything in a nice way

2. Renaming ideas in Brainstorming is very difficult

3. They did not like the arrangement for brainstorming. Brainstorming ideas appear in a random order, and they mentioned that sometimes they cannot see all brainstorming items.

4. Foreground / background in RichTextEditor are confusing for changing text colour.

5. They can have the same username in the system

6. Once in meeting mode, all unsaved document their username was still appearing in the system).

9. The system cannot display everything in full screen and has low resolution.

10. There is no signal for incoming messages from chat.

11. The brainstorming needs a scroll bar and the ideas start overlapping due to space.

12. It is very difficult to use the edit function for brainstorming. The mouse pointer needs to point at the tiny bar and double click it.

13. They were unable to move topics

14. Deleting blog did not work

15. They were unable to delete agenda items

16. It was difficult to find how to edit text in RichTextEditor

17. The system did not respond when writing text in RichTextEditor (it stopped typing)

18. When the users put bullets, they lost the previous format in colours

19. They disliked that they had to type the URL. ‘The system should allow us to put our own pictures’

20. The blog looks like Twitter, but there is no point in that because blogs are supposed to be seen by everyone (not only the limited number of participants in the session). ‘The way it works now is like chatting’

21. ‘Things happen too fast (when the system works), I do not know what it is doing and why’

22. They liked the most that they did not have to install it, since it is available online

23. Their suggestions for the future: brainstorming tree, private chat sessions

Correlations

DISCUSSION (QI ZHANG AND ALEX TSE)

(QI ZHANG)

While the users were performing each task, we monitored their time and actions and recorded the difficulties they came across. In order to gain a deeper understanding of the results and to increase the credibility of the data interpretation, open-ended interviews were conducted to collect user reviews. Whereas the previous parts of this study establish “what” was found, the aim of these interviews is to disclose “why”. The advantage of collecting reviews from both experienced and inexperienced users with different knowledge backgrounds is that improvements can be developed not only from a technical perspective but also from the end-user’s point of view. Finally, we identified the core issues below.

Individual Tasks

Login

Figure 3 shows that 83.33% of users found login easy or very easy. However, they had to refresh the page to log in again, which is perceived to be a serious pitfall. More specifically, if the user entered a wrong session name on the log-in page, an error message was displayed and the log-in button was disabled, preventing the user from logging into the session again. Currently, in order to log in to the same session again, users have to click the refresh button in the web browser to reload the PowerMeeting log-in page. Also, some users viewed the automatic session name example (room 2) as unnecessary, whilst one user found it annoying to change it every time, since the system does not remember the previous session name.

Brainstorming

Overall, the experienced users perceived brainstorming tasks as easy whilst the inexperienced users confronted more difficulties. Among these tasks, deleting and modifying ideas are the most difficult tasks perceived by both groups. The two tasks aim to test whether users know that they can edit ideas by clicking the top dark bar of each idea.

Nonetheless, most experienced users took a little time to find the dark bar to edit ideas, while two out of seven experienced users did not know how to edit. In particular, one experienced user claimed that it was very difficult to use the double-click function on the ideas to gain access to the edit and delete functions. On the other hand, three out of nine inexperienced users who watched the tutorial video needed additional time to find the instruction and complete the task, while the remaining six inexperienced users did not know how to access this function. One possible reason for this difficulty is that the instruction icon ‘click the top bar for editing’ is not obvious and the dark bar is too thin. Meanwhile, an interesting issue that occurred during the usability test was that many inexperienced users tried to edit by right-clicking with the mouse. This suggests that it would be more convenient and easier for users if right-click were supported. These two typical examples show the importance of improving accessibility in the user interface.

In the meantime, two inexperienced users mentioned that they do not like the arrangement of brainstorming items, since ideas appear randomly and sometimes overlap. As a result, the users are not able to see all brainstorming ideas. Thus, it is suggested that a scroll bar could be useful.

Rich Text Editor

The overall satisfaction with Rich Text Editor is above 60%, and experienced users tend to be more satisfied. Surprisingly, one out of seven experienced users did not know how to insert a picture, and most users found it really annoying that they had to type a URL. Also, the system does not allow users to upload pictures from their own computers, which is seen as a drawback that needs to be addressed. Furthermore, one experienced user and four inexperienced ones mentioned that it was difficult for them to edit text in Rich Text Editor. Notably, all users needed time to work out how to change the font colour, as well as the text highlight colour. This happened because there are no clear icons for changing colour, like those that appear in the Microsoft Office suite. Instead, these functions are named ‘foreground/background’ in Rich Text Editor, which users found quite confusing. Additionally, all participants assumed that ‘background’ means the background colour of the page rather than of the selected text. During this test, one inexperienced user encountered the following problem: when he was adding bullets, he lost the previous colour format.

Blog

In general, over 60% of users reported a satisfactory or moderate experience using the blog, and they all managed to complete this task successfully. Those who were not satisfied gave as reasons the lack of an option for deleting a blog post and the confusing order of the posts. One inexperienced user mentioned in the interview that the blog looked like Twitter (but for limited viewers) and that the way it works now is like chatting.

Agenda by dragging it to the bottom (where the recycling bin was) and then two experienced users realized that they should go to the „Edit‟ pull-down menu to move items. This could illustrate why users are not satisfied.

private chatting room. Also, they are not happy with the fact that they cannot change the size of the group chat window. Additionally, 50% of users suggested incorporating a sound alert or another kind of notification when a new message is received. In comparison with a face-to-face meeting, PowerMeeting is losing ground, according to the users. However, this has various reasons, and some of them are not related to the functionality of PowerMeeting. For instance, some users believe that a physical meeting is more effective than any type of virtual meeting.

Rich Text Editor

From the results shown above, 50% of users claimed that losing all their work on the text when the session chair selected ‘In meeting’ is a serious pitfall of the system. Thus, an automatic save function should be built into the system to avoid this problem. Meanwhile, many inexperienced users suggested that the system should provide a small window that allows them to do their individual work without the control of the session chair, even in meeting mode.

System Usability Scale (SUS)

As far as the System Usability Scale is concerned, the scores (on the 0 to 100 SUS scale) vary from 35 to 47.5 for experienced users and from 30 to 50 for inexperienced users. However, this score depends on the effort and resources used to achieve the user’s goals and on the degree of satisfaction.

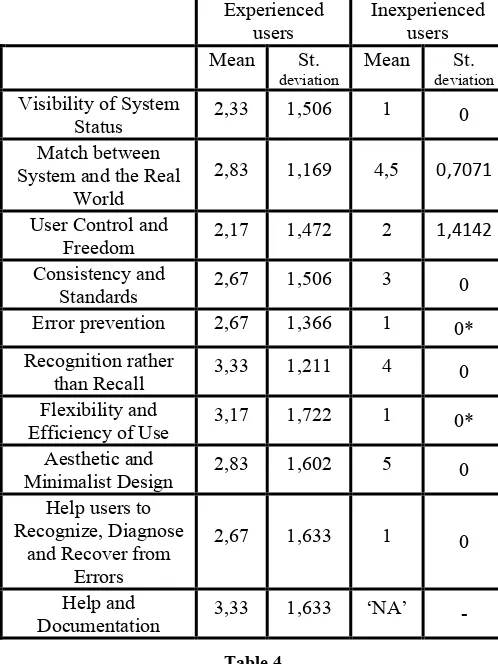
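For readers unfamiliar with how a SUS score is derived, the following minimal Python sketch applies the standard scoring rule described by Brooke [3] to the ten-item System Questionnaire in the appendix: odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5. The function name `sus_score` and the sample ratings are illustrative assumptions, not data from this study.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one participant's ten 1-5 ratings."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5


# Hypothetical participant (not taken from the study data)
example = [2, 4, 3, 4, 2, 3, 3, 4, 2, 4]
print(sus_score(example))  # 32.5
```

Because each item contributes between 0 and 4 points, a participant who answers 3 to every item scores exactly 50, which gives a sense of where the reported ranges sit on the scale.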

Heuristic Evaluation

The experienced users’ average ratings for each issue in the Nielsen’s heuristics questionnaire fall within a narrow range, from 2.17 to 3.33, indicating a bad or moderate opinion of each aspect of PowerMeeting. Nevertheless, we noted a significant standard deviation, considering the limited size of our sample and the limited variety of responses. The trend is different for the inexperienced users, whose scores vary from the absolutely bad to the perfectly good. Unfortunately, because of the rather low number of responses, we cannot draw safe conclusions. Nonetheless, the fact that 86% of the inexperienced users had never used any groupware software before can be an interesting point for further examination.
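As an illustration of how a per-heuristic average and standard deviation of this kind can be obtained, the sketch below summarises one heuristic's ratings while skipping "NA" answers, which the questionnaire allows. It is a minimal example under stated assumptions: the function name `summarise_ratings` and the sample ratings are hypothetical, and the sample (n - 1) standard deviation is assumed because the report does not state which form was used.

```python
from statistics import mean, stdev


def summarise_ratings(ratings):
    """Mean and sample standard deviation of 1-5 ratings, skipping 'NA'."""
    scored = [r for r in ratings if r != "NA"]
    if len(scored) < 2:
        return None  # a standard deviation needs at least two ratings
    return round(mean(scored), 2), round(stdev(scored), 2)


# Hypothetical ratings for one heuristic (not the study's raw data)
print(summarise_ratings([2, 3, "NA", 2, 3, 2, 1]))  # (2.17, 0.75)
```

With so few responses per group, a single extreme rating shifts both figures noticeably, which is consistent with the caution expressed above about drawing conclusions.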

Furthermore, the heuristics questionnaires reveal the strengths and weaknesses of this system as perceived by the two groups of users. For instance, when examining whether the system matches the real world, experienced users reject this claim whilst inexperienced users perceive it as a good match. More specifically, all inexperienced users agree that the system scores poorly on visibility of system status, error prevention, flexibility and efficiency of use, and helping users to recognize, diagnose and recover from errors. In the interview we asked users to justify their answers to the heuristics questions. The users responded that their poor ratings were due to the large number of abnormal behaviours that occurred while testing the system. For example, “ideas” could not be moved to a certain place, text could not be replaced properly, and users kept being logged off automatically by the system. These problems negatively affected their opinions.

Nevertheless, both groups of users praise the fact that they can use the system without memorizing everything. Furthermore, the inexperienced users perceive the design of PowerMeeting as modest and aesthetic.

Implications (Alex Tse)

The survey findings indicated that most of the participants have either used or heard about virtual meeting tools in the past. This implies that PowerMeeting is not a pioneer product in the market. Users often compare the functionality of PowerMeeting with that of other groupware with similar uses. Hence, the focus should be on differentiating the product from competitor products, i.e. why users should choose PowerMeeting and what advantages the system has over the others. In order to gain user confidence and satisfaction, offering a higher level of system compatibility, reliability, efficiency and ease of use is vital.

Furthermore, the study also discovered that familiarity with the system is a main factor in user satisfaction. Survey results indicated that experienced users generally report higher system satisfaction than inexperienced users. This implies that the provision of system training material is a must to improve user satisfaction and effectiveness.

Last but not least, this study found that access through a web browser is both a leading edge and a bleeding edge. PowerMeeting benefits from requiring no software installation, but its performance depends entirely on the stability of the network connection. This indicates that a stable server is the foundation of using this system. Consequently, any system improvement can only be achieved within a constantly stable network connection environment.

Recommendation of Improvements

This section identifies potential areas for improvement. Based on the test results and interviews, two main areas are suggested: improvement of usability and improvement of the user interface.

Improvement of usability

Effectiveness and Efficiency are the main features of usability. They can be improved by four means.

1. Enrich training materials. Familiarity with the system is a main factor in user satisfaction, so high-quality, comprehensive, step-by-step animated training material, such as a video tutorial, is highly recommended.

2. Activate log-in. The log-in button should always be active for the user under all circumstances. If an incorrect session name or user name is entered, a message should pop up asking the user to re-enter it.

3. Introduce Auto Save. To minimise the risk of losing agree to switch the mode. Before the meeting mode is switched, a warning message should pop up asking all users to save works they are currently working on.

In line with Google Docs and Microsoft Word, an auto-save function that runs at short intervals should also be adopted in the system, in case of connection failures or system crashes.

During meeting mode, the display area of the output screen could be split into two segments, either side by side or in an upper/lower format. One segment displays the page that the user is currently working on; the other segment could share the same view as the session chair. With a split screen, users can carry on their own work and participate in meeting mode simultaneously.

4. Network connection. The findings suggest that the majority of the usability problems are caused by connection failures between the server and the clients’ PCs. Therefore, it is recommended that:

The system should migrate to a more stable server. This is particularly important when the system is handling a high number of active connections between clients and the server.

If a connection failure occurs, a warning message should alert users to the network stability status. This gives users the choice either to terminate the meeting or to continue with an unstable network, if preferred.

If the user decides to terminate the meeting due to a poor connection, the system should provide a save option so that users can always resume their work at the next log-in.
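The auto-save recommendation in item 3 can be made more concrete with a short sketch. The Python class below is only an illustration under stated assumptions and is not PowerMeeting's implementation: the names `AutoSaver`, `tick` and `flush`, the 30-second interval and the `save` callback are all hypothetical. It saves a draft when it has changed and the interval has elapsed, and it can be forced to save immediately, for example just before the session chair switches to meeting mode or when the user chooses to leave after a connection warning.

```python
import time


class AutoSaver:
    """Save a draft when it has changed and `interval` seconds have elapsed."""

    def __init__(self, save, interval=30.0, clock=time.monotonic):
        self._save = save              # hypothetical callback that persists the text
        self._interval = interval      # seconds between automatic saves (assumed value)
        self._clock = clock            # injectable clock, which simplifies testing
        self._last_saved_text = None
        self._last_saved_at = clock()

    def tick(self, current_text):
        """Call periodically; returns True if a save happened."""
        now = self._clock()
        due = now - self._last_saved_at >= self._interval
        if due and current_text != self._last_saved_text:
            self._save(current_text)
            self._last_saved_text = current_text
            self._last_saved_at = now
            return True
        return False

    def flush(self, current_text):
        """Force a save, e.g. right before meeting mode is switched on."""
        if current_text != self._last_saved_text:
            self._save(current_text)
            self._last_saved_text = current_text
        self._last_saved_at = self._clock()


# Usage with an in-memory list standing in for server-side storage
drafts = []
saver = AutoSaver(drafts.append, interval=30.0)
saver.flush("What I will wear to the party")
print(drafts)
```

Skipping the save when the text is unchanged keeps traffic to the server low, which matters given the connection problems reported in item 4.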

Improvement of user interface

From an end user’s perspective, the importance of a user-friendly interface design is second to none. Below are two main areas that deserve attention.

1. Promote simplification

Simplify the user menu. Irrelevant options and icons should be made invisible where applicable. For example, the delete button on the file menu bar, which only the session chair can use to delete Brainstorming, RichText and Blog items from the content, should be hidden from the normal user menu.

Review the menu wording. Common wording should be used in the user menu. For example, the menu item for changing font colour could be changed from “Foreground” to “Font Colour”.

Provide a brief explanation for menus and icons. A balloon could appear when a user points at any menu or icon for more than a certain length of time (e.g. 2 or 3 seconds) to briefly explain what the menu or icon is for.

2. Enrich user friendly functions

Provide additional icons. It is worth having small Edit and Delete icons on each “idea”. When users need to modify or remove their “ideas” from brainstorming, they can simply click on the icon.

Enlarge the idea boxes. The adding, deleting and modifying functions in brainstorming could be main contributors to the effectiveness and efficiency of the system. A bigger clickable area could allow users to edit or delete simply by double-clicking.

Enhance the insert function. The system should allow files, pictures, sound tracks and video clips to be uploaded from computers as well as from the World Wide Web.

Enhance the drawing function. A drawing tool could be embedded into the system with ready-made shapes and chart types. This could enable users to draw their own pictures, diagrams or charts in the system.

Research Limitations and Recommendations for Further Research

This section lists the three limitations of this research, which are due to constraints in time and resources. Based on these limitations, areas of further research are also recommended.

Sample size

This research is based on the testing results and user reviews from 16 participants. It is considered as a relatively small sample size and therefore, findings and user reviews initial use of the system. No ongoing testing was arranged to collect results from users who carry on learning and using the system. In other words, the ease of learning the system and the user’s learning curve are not fully examined. Therefore, further research can be conducted to uncover the relationships between proficiency of system use, user satisfaction, and level of user experience. This would help in understanding the user’s learning curve and therefore benefit the further development of a user-friendly interface.

In addition, this research focuses on the analysis of the existing system only. Hence, further research is suggested to compare user satisfaction and efficiency between the current system and an advanced version with the potential improvements listed in the section Recommendation of Improvements. The benefit of this exercise is that it offers empirical results on function usability, which would direct continuous product research and development.

CONCLUSION (GANG YANG)

With the development of Web 2.0, web browsers are widely used as user interfaces. Compared to traditional interfaces, web-based interfaces have a series of advantages: for example, they are multi-user and real-time and require no installation or plug-in. Therefore, they are widely used for team work. Although well-designed user interfaces can simplify user operation and enhance the efficiency of team work, poorly designed interfaces are likely to make work complicated and confuse team members. In order to improve user satisfaction, usability should be taken into account during the process of user interface design, implementation and evaluation.

In summary, by means of usability tests, interviews and questionnaires, we found that, to some extent, PowerMeeting can help users carry out simple group activities online. Some functions, such as brainstorming and voting, are very useful. However, most users are not satisfied with the system. The reasons are diverse. Firstly, it is not trivial to understand all the functions of PowerMeeting, even for users who have used other groupware before. Moreover, some system bugs seriously affect the efficiency and continuity of team work, and can leave users confused and frustrated.

Through the test, we also found features that can improve the usability of PowerMeeting. For example, a video tutorial can help inexperienced users master the basic operations in a few minutes. Stable servers and network connection

REFERENCES

1. Bhatt, G., Gupta, J.N.D. and Kitchens, F. (2005). An exploratory study of groupware use in knowledge management process. The Journal of Enterprise Information Management, Vol. 18 No. 1, pp. 28-46.

2. Brézillon, P., Adam, F. and Pomerol, J.-Ch. (2003). Supporting complex decision making processes in organizations with collaborative applications - A case study. In: Favela, J. & Decouchant, D. (Eds.) Groupware: Design, Implementation, and Use. Lecture Notes in Computer Science 2806, Springer Verlag, pp. 261-276.

3. Brooke, J. (no date available). SUS - A quick and dirty usability scale. [Accessed on 1 March 2011] www.usabilitynet.org/trump/documents/Suschapt.doc

4. Carstensen, P.H. and Schmidt, K. (1999). Computer supported cooperative work: new challenges to systems design. http://citeseer.ist.psu.edu/carstensen99computer.html

5. Dewsbury, R. (2007). Google Web Toolkit Applications. Prentice Hall. ISBN 978-0321501967.

6. Hiltz, S.R. and Turoff, M. (1978). The Network Nation: Human Communication via Computer. Reading, MA: Addison-Wesley Pub. Co.

7. IBM (2009). Lotus Notes – Business email solution. [ONLINE] [Accessed on 14 March 2011] http://www-01.ibm.com/software/lotus/products/notes/

8. Nielsen, J. (1994). Usability Engineering. San Diego: Academic Press.

9. Tullis, T. and Albert, W. (2008). Measuring the User Experience: Collecting, Analyzing, and Presenting. Morgan Kaufmann Publishers.

10. Wang, W. (2008). PowerMeeting on common ground: web based synchronous groupware with rich user experience. Proceedings of the Hypertext 2008 Workshop on Collaboration and Collective Intelligence, June 19-21, 2008, Pittsburgh, PA, USA.

11. Wang, W. (no date available). PowerMeeting. [ONLINE] [Accessed on 10 March 2011] http://sites.google.com/site/meetinginbrowsers

APPENDIXES

Tasks

Individual tasks

Brainstorming

1. Create a new session named ‘hci_2011_test_group8_userX’

o Log in using your first name

o Select the option “Session Chair”

2. Using brainstorming

o Create a brainstorming item named “Films”

o Add 4 romantic films: The Notebook, Titanic, Grease, Pretty Woman

o Add 3 comedy films: There’s Something

o Add 3 horror films: The Silence of the Lambs, Jaws, Psycho

o Change the name of one comedy film

3. Create three categories for these items (Romantic films, Comedy films and Horror films)

4. Categorize these items

5. Delete one item in the romantic film category

6. Vote for categories

o Select voting option

o Vote and submit

7. Analyse report (Monitor will record the % for the romantic category)

o Select reporting option

o Write the % for the romantic category

RichTextEditor

1. Create a rich text editor

2. Add this text:

Sir Alex Ferguson refused to speak to media rights holders on Sunday following the game at Liverpool, which Manchester United lost 3-1. The United manager did not talk to host broadcaster Sky Sports, radio rights holders TalkSport and the club's television channel MUTV.

His assistant Mike Phelan did not carry out his usual post-match engagements with the BBC. The decision not to speak to the media was made before the game.

Should any of the media organisations complain to the Premier League, the governing body would be forced to act.

3. Make this phrase text bold: The decision not to speak to the media was made before the game.

4. Delete the last phrase.

5. Add a picture after the word MUTV. Use this URL:

http://news.bbcimg.co.uk/media/images/51549000/jpg/_51549756_-19.jpg

6. Make the font-size bigger

7. Change the font to Times New Roman

8. Change the text color to green

9. Change the background color of all the text to red

10. Format the last two phrases into bullet points

11. Save your text

Blog

1. Create a blog in the agenda

2. Post the name of your favorite singer

3. Post the name of a singer you don’t like

4. Post your favorite song from your favorite singer

5. Delete the post that contains the name of your favorite singer

6. Edit the post containing the name of the artist you don’t like to replace that name with the name of your favorite artist

7. Write on your answer sheet the time of the oldest post

Agenda items

1. Move the item “Films” to the bottom of the list

Log in again

1. Close the current window

2. Open PowerMeeting

http://pc-364598-007.admbs.mbs.ac.uk/org.commonground.PowerMeeting/PowerMeeting.html

3. Log in to the session ‘hci_2011_test_group8_userX’ using your first name

Group tasks

Scenario

Roger Thompson has been working as a senior analyst for Persona for 15 years and he will be promoted to regional manager next Wednesday. Roger is extremely active. He golfs twice a week in the summer and swims laps three times a week in the winter. He is proud of his garden and spends an hour or two each day maintaining his lawn and flower beds. Roger is also an avid fisherman. Your task is to organise a surprise promotion party and select a gift for him.

Since all of you live apart from each other, you need to use the PowerMeeting groupware to discuss with your team members who is going to organise the place, food, drinks and entertainment. In the discussion, you also need to decide what time the party starts and finishes. Finally, you have to vote on what kind of present you are going to buy.

One of you will be the session chair. Meeting mode must be on at all times. The session chair needs to provide guidance to other team members on the tasks and ensure all members agree with the decision before moving on to the next task.

Task 1: Login to the session

Task 1.2: Login with your First name

Give ideas for these items

1. Foods

2. Drinks

3. Places

4. Decorations

Task 3: Voting for present

Note: The gift categories are pre-created in the session which are Golf Club, Polo Shirt, Fishing Pole, and Grass Trimmer.

Task 3.1: Voting

Vote for the gift that you think you would like to give to Roger

Task 3.2: Reporting

Session chair views the reporting and concludes the decision of the gift.

Task 4: RichTextEditor

Task 4.1: Session chair unlocks meeting mode since he/she receives an important phone call.

Task 4.2: Every participant creates a rich text and writes what they are going to wear to the party in the rich text editor (without clicking the save button).

Task 4.3: Session chair finishes his/her phone call, locks meeting mode again, navigates to the blog and announces what is being discussed today.

Satisfaction Questionnaire

Individual tasks

Log in task

1. How easy do you find to log in a meeting room or to create a session?

Difficult 1 2 3 4 5 Easy

Brainstorming task

1. For each item below, please select how easy do you find it, where 1 is difficult and 5 is easy.

Very difficult Very Easy

Creating the brainstorming agenda item:

1 2 3 4 5

Adding ideas to the brainstorm:

1 2 3 4 5

Modifying ideas in the brainstorm:

1 2 3 4 5

Deleting ideas in the brainstorm:

1 2 3 4 5

Creating categories:

1 2 3 4 5

Adding ideas to categories:

1 2 3 4 5

Voting for categories:

1 2 3 4 5

Analysing the votes for categories:

1 2 3 4 5

2. How would you rate your experience using brainstorm activities (ideas and categories)?

Not satisfying 1 2 3 4 5 Very satisfying

3. How would you rate your experience using the voting functionality?

Not satisfying 1 2 3 4 5 Very satisfying

Confusing 1 2 3 4 5 Easy to understand

4. How would you rate your experience using the reporting functionality?

Not satisfying 1 2 3 4 5 Very satisfying

Confusing 1 2 3 4 5 Easy to understand

Rich text editor task

1.For each item below, please select how easy do you find it, where 1 is difficult and 5 is easy.

Very difficult Very Easy

Adding text to the editor:

1 2 3 4 5

Formatting the text in the editor:

1 2 3 4 5

Adding images to the editor:

1 2 3 4 5

Saving changes in the editor:

1 2 3 4 5

2. How would you rate your experience using the rich text editor?

Not satisfying 1 2 3 4 5 Very satisfying

Blog task

1. For each item below, please select how easy do you find it, where 1 is difficult and 5 is easy.

Very difficult Very Easy

Adding posts to the blog:

1 2 3 4 5

Deleting posts:

1 2 3 4 5

Editing posts:

1 2 3 4 5

2. How would you rate the order in which posts are displayed?

Confusing 1 2 3 4 5 Easy to understand

3. How would you rate your experience using the blog?

Not satisfying 1 2 3 4 5 Very satisfying

Agenda task

1. How would you rate your experience using the agenda?

Not satisfying 1 2 3 4 5 Very satisfying

Group tasks

Chat

1. Overall how would you rate your experience using the chat.

Not satisfying 1 2 3 4 5 Very satisfying

2. For each line, please select the number that corresponds most to your opinion

The chat is

Difficult to use 1 2 3 4 5 Easy to use

Rich 1 2 3 4 5 Poor

Slow 1 2 3 4 5 Fast

3. For each improvement proposed below, please select how important it is for you, where 1 is not important and 5 is very important.

Sound when receiving a new message

1 2 3 4 5

The ability to change the size of the chat in my screen

1 2 3 4 5

4. Is using the PowerMeeting better than face-to-face meeting?

Worse Much better

1 2 3 4 5

Brainstorming

1. Overall how would you rate your experience using the Brainstorming?

Not satisfying 1 2 3 4 5 Very satisfying

2. How would you rate the Voting procedure?

Difficult to use 1 2 3 4 5 Easy to use

Useless 1 2 3 4 5 Useful

Inefficient 1 2 3 4 5 Efficient

3. How would you prefer to vote?

In percentages (as provided now) ____

Choose the best option ____

Rate the options from the most suitable option to the least suitable ____

Rich Text Editor

1. Overall how would you rate your experience using the Rich Text Editor?

Not satisfying 1 2 3 4 5 Very satisfying

2. While you were working on your text, the session chair selected ‘In meeting’. This resulted in losing all your work. How would you comment on that? (Please select the option you agree with the most)

___ It is accepted because this way we can work all together, viewing the same screen

___ It is a serious problem/pitfall of the system, making me feel really unsatisfied

___ I did not like it, but not a very important problem and thus there is no need to change it

___ Other option (please state): _____________________

General Questions

1. What is your gender?

Male ____

Female ____

2. What is your age group?

Less than 20 ____

20-25 ____

25-30 ____

30-35 ____

35-40 ____

40 or above ____

3. What is your academic background?

Computer-oriented ____

Business-oriented ____

Engineering-oriented ____

4. Have you used similar groupware software (e.g: Google docs, Wiki) before?

5. How often do you use groupware software (e.g: Google docs, Wiki, PowerMeeting)?

Never Quite often

1 2 3 4 5

6. Will you use PowerMeeting again?

Yes ____ No ____ I do not know ____

Nielsen’s usability heuristics

Please evaluate PowerMeeting according to Nielsen’s usability heuristics.

Try to answer all the items

For items that are not applicable use NA

1. Visibility of system status

The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

Bad 1 2 3 4 5 Good NA

2. Match between system and the real world

The system should speak the users' language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

Bad 1 2 3 4 5 Good NA

3. User control and freedom

Users often choose system functions by mistake and will need a clearly marked "emergency exit" to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

Bad 1 2 3 4 5 Good NA

4. Consistency and standards

Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

Bad 1 2 3 4 5 Good NA

5. Error prevention

Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action.

Bad 1 2 3 4 5 Good NA

6. Recognition rather than recall

Minimize the user's memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.

Bad 1 2 3 4 5 Good NA

7. Flexibility and efficiency of use

Accelerators -- unseen by the novice user -- may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.

Bad 1 2 3 4 5 Good NA

8. Aesthetic and minimalist design

Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

Bad 1 2 3 4 5 Good NA

9. Help users recognize, diagnose, and recover from errors

Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.

Bad 1 2 3 4 5 Good NA

10. Help and documentation

Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large.

Bad 1 2 3 4 5 Good NA

System Questionnaire

Please select the appropriate answer for each question, where 1 corresponds to strongly disagree and 5 corresponds to strongly agree.

1. I think that I would like to use this system frequently

Strongly disagree 1 2 3 4 5 Strongly agree

2. I found the system unnecessarily complex

1 2 3 4 5

3. I thought the system was easy to use

1 2 3 4 5

4. I think that I would need the support of a technical person to be able to use this system

1 2 3 4 5

5. I found the various functions in this system were well integrated

1 2 3 4 5

6. I thought there was too much inconsistency in this system

1 2 3 4 5

7. I would imagine that most people would learn to use this system very quickly

1 2 3 4 5

8. I found the system very cumbersome to use

1 2 3 4 5

9. I felt very confident using the system

1 2 3 4 5

10. I needed to learn a lot of things before I could get going with this system