Geointelligence, Nov-Dec 2013

BIG DATA

THE DATA

Traditional data handling methods lack the

ability to manage Big Data. The traditional

security mechanisms too are inadequate.

But in an age where 100 per cent foolproof

IT security is a mirage, how then should

defence organisations cope with the Big

Data security challenges?

I

ndian mathematician and astronomer, Aryabhatta, is said to be the founder of Zero. He gave the world Zero thousands of years ago, around 9th century C.E. Since then so much has evolved in every domain of human life but surprisingly mankind did not move beyond ‘zero’ and ‘one’ per se in respect of the IT world. It is simply these Zeroes and Ones that have been carrying the load of almost all dimensions in software and hardware; and now with data explosion taking place all around us,Big Data has started buzzing a lot more with more Zeroes and Ones.

What Is Big Data?

Big Data is the buzz phrase in the IT world right now and there are dizzying arrays of opinions on just what these two simple words actually mean. Before, we get on to building the concept of Big Data, for those who believe that Big Data is a pretty new evolving concept, I would like to share a pie of history from the ancient Egypt era.

River Nile is 6,695 km long. Back then, the engineers predicted crop yields based on certain data that they collected from the river levels. The engineers used to dig cylindrical holes of diameter up to 4 meters and depth up to 10 meters on both sides of the river at regular intervals. They would then record the water levels in these holes, for over a year, and depending on the levels recorded, they would shift and manage resources.

Now, isn’t this amazing! Scale the same thing phenomenally to date

SECURING

G

e

o

i

n

t

e

l

l

i

G

e

n

c

e

N

O

V

D

E

C

2

0

13

15 the operating system, application

and network level is necessitated beyond doubt in the current environment. The major security challenges in the Big Data adoption have been bought out below:

Access Control

Defining access controls for smaller organisations is relatively easier than the bigger organisations. Obviously ‘Size does matter’ as complexities in relationship attributes increases in the latter case. In the context of Big Data, the analysis in a cloud computing based environment, is increasingly focussed on handling diverse data sets, both in terms of variety of schemas and security requirements. Thus, legal and policy restrictions on data come from numerous sources, where we have yobibytes of data

generating from billions of devices across the globe from mobiles, stock exchanges, aeroplanes, etc, which have no criteria and known format, but surely hold hidden perceptives and intelligence that can be exploited by governments to programme strategies and policies. So there is a need to find actionable information in these massive volumes of both structured and unstructured data which is so large and complex that it is difficult to process with conventional database and software techniques.

De

fi

ning Big Data?

Big Data is defined as large pools of data that can be captured, communicated, aggregated, stored and analysed. It is now part of every sector and function of the global economy.

Put simply, Big Data is not just another new technology, but is a gradual phenomenon resulting from the vast amount of raw information data generated across, and collected by commercial and government organisations. Compared to the structured data in various applications, Big Data consists of six major attributes. Though people familiar with the Big Data concept would remember basically the three Vs, but with the evolving nature and complexities involved with the technology, the Vs have been expanding too:

» Variety — Extends beyond structured data and includes semi-structure or unstructured data of all varieties, such as text, audio, video, click streams, log files and more.

» Volume — Organisations are awash with data, easily amassing hundreds of terabytes and petabytes of information.

» Velocity — Sometimes must be analysed in real-time as it is streamed to an organisation to

maximise the data’s business value.

» Visibility — Access from disparate geographic locations.

» Veracity — Managing the reliability and predictability of inherently imprecise data types.

» Value — Importance of analysis which was previously limited by technology.

The Security Aspect

Traditional data handling methods lack the methodology required to manage huge data sets. Similarly, the way security of databases is being handled needs a relook. Traditional security mechanisms, which are sewed to securing perimeter bound static data, are inadequate. Streaming data demands ultra-fast response time. Like in most of the new generation technologies viz, Cloud Computing and Virtualisation, the technology stands ready to be adapted but it still poses several security challenges.

The need for multi-level protection of data processing nodes, that is, implementing security controls at

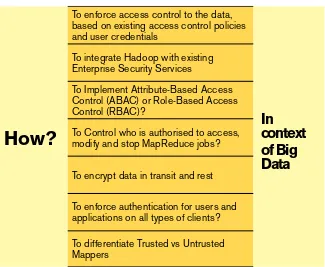

To enforce access control to the data,

based on existing access control policies

and user credentials

To integrate Hadoop with existing

Enterprise Security Services

To Implement Attribute-Based Access

Control (ABAC) or Role-Based Access

Control (RBAC)?

How?

To Control who is authorised to access,

modify and stop MapReduce jobs?

To encrypt data in transit and rest

To enforce authentication for users and

applications on all types of clients?

To differentiate Trusted vs Untrusted

Mappers

[image:2.595.217.542.500.767.2]In

context

of Big

Data

Figure 1: Security Challenges for Organisations Securing Hadoop

<<

Big Data is not just

another new technology,

but is a gradual

G

e

o

i

n

t

e

l

l

i

G

e

n

c

e

N

O

V

D

E

C

2

0

13

16

BIG DATA

making the overall scenario of design more daedal.

Hadoop

Hadoop, like many open source technologies, was not created with security in mind. Its ascension amongst corporate users has invited more scrutiny, and as security professionals have continued to point out potential vulnerabilities and Big Data security risks in Hadoop, the platform has undergone continued security modifications. There has been explosive growth in the ‘Hadoop security’ market, where vendors are releasing ‘security-enhanced’ distributions of Hadoop and solutions that promise increased Hadoop security. However, organisations securing Hadoop still face a number of security challenges, which are shown in Figure 1.
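One of the challenges listed in Figure 1 is controlling who is authorised to access, modify and stop MapReduce jobs. The idea behind Role-Based Access Control can be illustrated with a minimal Python sketch. The role names, action names and the `is_authorised` helper are all hypothetical and chosen only for illustration; this is not Hadoop's own configuration or API.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission table; names are illustrative only.
ROLE_PERMISSIONS = {
    "analyst": {"view_job"},
    "developer": {"view_job", "submit_job", "modify_job"},
    "cluster_admin": {"view_job", "submit_job", "modify_job", "stop_job"},
}


@dataclass
class User:
    name: str
    roles: set = field(default_factory=set)


def is_authorised(user: User, action: str) -> bool:
    """Return True if any of the user's roles grants the requested action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


alice = User("alice", {"analyst"})
bob = User("bob", {"developer", "cluster_admin"})

print(is_authorised(alice, "view_job"))  # analyst may view jobs
print(is_authorised(alice, "stop_job"))  # but may not stop them
print(is_authorised(bob, "stop_job"))    # cluster_admin may stop jobs
```

Permissions attach to roles rather than to individuals, so adding a user means assigning roles, not rewriting rules. An attribute-based scheme would replace the role lookup with a policy evaluated over user, resource and context attributes.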

Privacy Matters

Big Data has the potential for invasive marketing, but can also result in invasion of privacy, decreased civil liberties, and increased state and corporate control. It is a known fact that companies are leveraging data analytics for marketing purposes, and this has been very effective in identifying and winning new customers: a boon to companies, but at the expense of consumers who are largely unaware of all this.

Spying on anyone through their internet searches is usually viewed as an invasion of privacy, that is, a cyber crime. We keep disclosing more and more of our secrets by availing of internet services such as navigation for a journey, or by seeking answers to questions like how to make cocktails, or to imprudent questions of the kind we would never ask our closest confidants. Thus, invading privacy has two facets: one has to do with the betterment of society, and the other is a complete business model. There are no restrictions that can dissuade companies from obtaining this private user information. They are also free to sell this information to data brokers, who in turn spur companies to create applications.

Distributed Programming Frameworks

Distributed programming frameworks utilise parallelism in computation and storage to process massive amounts of data. MapReduce is one of the popular frameworks that allow developers to write programmes that process massive amounts of unstructured data in parallel across a distributed cluster of processors or stand-alone computers. It basically splits an input file into multiple chunks, each of which is processed by a mapper. Here, untrusted mappers could return wrong results, which will in turn produce incorrect aggregated results. With large data sets, it is nearly impossible to identify the faulty mapper, and the result can be significant damage, especially for scientific and financial computations.
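The effect of a single untrusted mapper on the aggregated result can be seen in a small, single-process Python sketch of a word-count job. All function names here are hypothetical. The replicate-and-compare check at the end is one possible mitigation, added as an assumption for illustration; it is not described in this article and not a feature of any particular framework.

```python
from collections import Counter


def honest_mapper(chunk):
    """Count the words in one chunk of the input."""
    return Counter(chunk.split())


def untrusted_mapper(chunk):
    """A compromised mapper that silently inflates every count (illustrative)."""
    return Counter({word: n * 10 for word, n in Counter(chunk.split()).items()})


def reduce_counts(partials):
    """Aggregate the per-chunk counts into a single result."""
    total = Counter()
    for partial in partials:
        total += partial
    return total


def run_job(chunks, mappers):
    """Apply mappers[i] to chunks[i], then reduce the partial results."""
    return reduce_counts(m(c) for m, c in zip(mappers, chunks))


def suspicious_chunks(chunks, primary, replica):
    """Re-run each chunk on a second mapper and list the chunks that disagree."""
    return [i for i, (c, p, r) in enumerate(zip(chunks, primary, replica))
            if p(c) != r(c)]


chunks = ["big data big", "data security", "big security"]
assigned = [honest_mapper, untrusted_mapper, honest_mapper]

clean = run_job(chunks, [honest_mapper] * 3)
tainted = run_job(chunks, assigned)
flagged = suspicious_chunks(chunks, assigned, [honest_mapper] * 3)

print(dict(clean))    # correct totals
print(dict(tainted))  # totals skewed by the one bad mapper
print(flagged)        # indices of chunks whose two runs disagree
```

The tainted totals look like any other plausible output, which is why the error is hard to spot after aggregation. Redundant execution catches it here only because the replica mappers are assumed to be honest, and it doubles the computation.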

Secure Data Storage

Conventional data storage solutions such as NAS and SAN fail to deliver the agility needed to process Big Data. Since data storage is set to grow indefinitely, just buying incremental storage won’t be enough, as it can handle only a limited amount of such colossal data. It is here that the scalable and agile nature of cloud technology makes an ideal match for Big Data management. With cloud-based storage systems, data sets can be replicated, relocated and housed anywhere in the world. This simplifies the task of scaling infrastructure up or down by placing it on the cloud vendor. But clouds are still grey, that is, storage issues in the cloud, viz. encryption, reliability, authorisation, authentication, integrity and, above all, availability, are still evolving and yet to be resolved. Organisations like the CSA (Cloud Security Alliance) are already working to resolve such issues, but it all takes time to mature.
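Of the open issues listed above, integrity is the simplest to illustrate. The Python sketch below shows one way a data owner might check that a replica returned by a cloud store has not been altered, using a keyed hash (HMAC-SHA256) from the standard library. The key, the sample data and the helper names are hypothetical. This addresses integrity only; it does not encrypt the data.

```python
import hashlib
import hmac

# Hypothetical key held by the data owner, never by the storage provider.
SECRET_KEY = b"demo-key-not-for-production"


def make_tag(chunk: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a chunk before it is uploaded."""
    return hmac.new(SECRET_KEY, chunk, hashlib.sha256).hexdigest()


def verify(chunk: bytes, expected_tag: str) -> bool:
    """Check a downloaded chunk against the tag recorded at upload time."""
    return hmac.compare_digest(make_tag(chunk), expected_tag)


original = b"tile-0042: elevation data"
stored_tag = make_tag(original)

intact_replica = b"tile-0042: elevation data"
altered_replica = b"tile-0042: elevation d4ta"

print(verify(intact_replica, stored_tag))   # replica matches the original
print(verify(altered_replica, stored_tag))  # replica was modified
```

Because the tag depends on a key the provider does not hold, a replica cannot be modified and re-tagged without detection, wherever in the world it is housed.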

End-Point Input Validation/Filtering

Big Data is collected from an increasing number of sources and this presents organisations with two challenges:

» Input Validation: How to trust a source from where data is being generated? How do we distinguish between trusted and untrusted data?

» Data Filtering: How can an organisation separate out spam/malicious data?
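The two challenges above can be made concrete with a minimal Python sketch, assuming each incoming record carries a source identifier and a numeric reading. The allowlist, the field names and the plausible value range are all hypothetical. Real deployments would also need to authenticate the source rather than trust a self-declared identifier.

```python
# Hypothetical allowlist of registered data sources.
TRUSTED_SOURCES = {"sensor-001", "sensor-002"}

# Hypothetical plausible range for a reading, for example a temperature.
MIN_VALUE, MAX_VALUE = -50.0, 60.0


def is_valid(record: dict) -> bool:
    """Input validation: known source and a well-formed, plausible value."""
    if record.get("source") not in TRUSTED_SOURCES:
        return False
    value = record.get("value")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    return MIN_VALUE <= value <= MAX_VALUE


def filter_records(records):
    """Data filtering: separate accepted records from rejected ones."""
    accepted, rejected = [], []
    for record in records:
        (accepted if is_valid(record) else rejected).append(record)
    return accepted, rejected


incoming = [
    {"source": "sensor-001", "value": 21.5},
    {"source": "unknown-77", "value": 19.0},           # untrusted source
    {"source": "sensor-002", "value": 9999},           # implausible value
    {"source": "sensor-002", "value": "DROP TABLE"},   # malformed value
]

accepted, rejected = filter_records(incoming)
print(len(accepted), len(rejected))
```

Rejected records are kept rather than discarded, so they can be examined later for signs of a misbehaving or malicious source.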

Data Provenance

Though cloud storage offers the flexibility of accessing data from anywhere at any time while providing economic benefits and scalability, it is still considered an evolving technology, and hence lacks the power and ability to manage data provenance. Data provenance, that is, metadata describing the derivation history of data, will be critical to Big Data that is largely based in a cloud computing environment, to enhance the reliability, credibility, accountability, transparency and confidentiality of data. Data provenance is only set to increase in complexity in Big Data applications. Analysis of such large provenance graphs to detect metadata dependencies for security/confidentiality applications is computationally intensive and complex.
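A provenance graph and the dependency analysis mentioned above can be sketched in Python as a dictionary mapping each data item to the items it was derived from. The item names and both helper functions are hypothetical, and the graph is assumed to be small enough to hold in memory, which real Big Data provenance graphs are not.

```python
# Hypothetical provenance records: each item maps to the items it was derived from.
PROVENANCE = {
    "report": ["aggregates"],
    "aggregates": ["cleaned_logs", "sensor_feed"],
    "cleaned_logs": ["raw_logs"],
    "raw_logs": [],
    "sensor_feed": [],
}


def lineage(item, graph):
    """Return every upstream item that `item` depends on, directly or indirectly."""
    seen = set()
    stack = list(graph.get(item, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def affected_by(source, graph):
    """Return every item whose lineage includes `source`."""
    return {item for item in graph if source in lineage(item, graph)}


print(sorted(lineage("report", PROVENANCE)))
print(sorted(affected_by("raw_logs", PROVENANCE)))
```

If `raw_logs` turned out to be compromised or confidential, `affected_by` identifies every derived item that inherits the problem. This naive version recomputes the lineage of every item, which hints at why the analysis becomes expensive as provenance graphs grow.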

Non-Relational Databases

Each NoSQL database was built to tackle a different challenge put forward by the analytics world, and hence security was never part of the model at any point in the design stage. As already brought out, traditional technologies struggle to accommodate the Vs of Big Data: relational databases were designed for data volumes that were small and primarily static, with queries defined upfront and the database residing on a single server in one data centre. It is here that NoSQL databases like MongoDB and Redis come to the rescue and handle the Big Data Vs. But these are still evolving with respect to security infrastructure. As explained in Figure 2, the database companies recommend that these be run in a preconfigured trusted environment, since they themselves are not built to handle security issues.

Conclusion

Big Data is distinguished by its different deployment model, that is, it is highly distributed, redundant and deals with elastic data repositories. A distributed file system caters to several essential features and enables massively parallel computation. But specific issues of how each layer of the stack integrates, including how data nodes communicate with clients and resource management facilities, raise several concerns. In addition to these distributed file system concerns, architectural issues like distributed nodes, shared data, inter-node communication, forensics, protecting data at rest, configuring patch management, etc., remain to be solved. The bolt-on approach to security may not be a recommended solution in the case of Big Data.

At the same time, people are often paranoid about their data. There will, inevitably, be data spills. We should try to avoid them, but we should also not encourage paranoia. Though all these security issues exist today, it certainly does not mean that we wait till they all get resolved. Hardening system architecture from a security point of view is a continuous process, and there will not be a day soon when IT is 100 per cent secure. The need of the hour for user organisations is to take calculated risks and move ahead. Security vendors, for their part, must come up with controls that do not compromise the basic functionality of the cluster, that scale in the same manner as the cluster, that do not compromise essential Big Data characteristics, and that address security threats to data stored within the cluster (although that is not easy).

The paper was presented at the GeoIntelligence India 2013 conference

Anupam Tiwari

Joint Director, Government of India

anupam.tiwari@nic.in
