Real-time Weather Forecasting using Multidimensional Hierarchical Graph Neuron (mHGN)

BENNY BENYAMIN NASUTION

RAHMAT WIDIA SEMBIRING

AFRITHA AMELIA

BAKTI VIYATA SUNDAWA

GUNAWAN

ISMAEL

HANDRI SUNJAYA

MORLAN PARDEDE

JUNAIDI

SUHAILI ALIFUDDIN

MUHAMMAD SYAHRUDDIN

ZULKIFLI LUBIS

Department of Computer Engineering and Informatics

Department of Telecommunication Engineering

Department of Electrical Engineering

Politeknik Negeri Medan

Jalan Almamater No. 1, Kampus USU, Medan 20155

INDONESIA


Abstract: - Weather forecasting has been established for quite some time, but its quality is not yet satisfactory. Building a sophisticated weather forecast remains highly challenging. Because of the difficulty of working with complex and big data, research on such weather forecasting is still ongoing, and developing a self-sufficient machine that can forecast weather thoroughly is still a big challenge. The new concept of the Multidimensional Hierarchical Graph Neuron (mHGN) opens up a new opportunity to forecast weather in real time. Its 91% accuracy in recognizing patterns with almost 11% distortion or incompleteness is a strong indication that the accuracy of mHGN in forecasting weather will be high as well.

Key-Words: -Graph Neuron, Hierarchical Graph Neuron, Pattern Recognition, Weather Forecast

1 Introduction

Weather forecasting has been established for quite some time. People require weather forecasts to help plan their activities. By utilizing weather forecasts, plans are expected to be optimal, so that their outcomes are maximized. The challenge of building a sophisticated weather forecast is still high [1] [2] [3] [4] [5] [6] [7]. Because of the difficulty of working with complex and big data, research on such weather forecasting is still ongoing [3] [5] [6] [8]. Developing a self-sufficient machine that can forecast weather thoroughly without human intervention is still a big challenge.

Clear evidence that one or two weather experts are involved in preparing a weather forecast is the forecaster's name on a weather report (see Figure 1, prepared by Hamrick). Humans are involved because the number of variables that must be formulated as mathematical functions to calculate weather parameters is huge, and where such functions exist, they are strongly interrelated. Some weather experts even state that a leaf falling in Japan may cause rain in the US. The following is an example of a weather forecast taken from the NOAA website.

Since it is still difficult to produce a weather forecast based on mathematical functions, there is a great opportunity to discover other methods, such as artificial intelligence techniques. Although the mathematical functions that determine weather conditions have not yet been discovered, air-temperature, wind-speed, wind-direction, air-pressure, and air-humidity are all caused by physical states [9]. This means that weather conditions are generally caused by particular physical patterns, so a time-series of several physical values will determine a particular weather condition.

The Multidimensional Hierarchical Graph Neuron (mHGN) has been proven to be capable of working as a pattern recognizer. The latest architecture used to demonstrate this capability is a five-dimensional 5X5X5X15X15 arrangement of neurons, which has been tested on recognizing 26 patterns of alphabetical figures. Despite 10% distortion in all the figures, the architecture was able to recognize more than 90% of those patterns on average. This experimental result is a positive indication that mHGN has the potential to be developed into a weather forecaster.

2 Weather Forecast

Weather forecasting normally relies on meteorology [10] [9], the science of weather. The following are some fundamental concepts of weather forecasting that should be discussed before working on how to forecast the weather.

2.1 The Regularity of Weather

The characteristics of weather on the earth are strongly influenced by the characteristics of the earth itself and its surroundings. Around the earth, weather experts have classified several layers of air (see Figure 2): Troposphere, Stratosphere, Mesosphere, Thermosphere, and Exosphere. Each of these layers has its own characteristics. For instance, in the Troposphere and Mesosphere, the higher the altitude, the lower the air-temperature. In the Stratosphere and Thermosphere, the situation is reversed: the higher the altitude, the higher the temperature.

Figure 2: The Classification of the Earth’s Air and its corresponding temperature [9]

A similar situation applies at the surface of the earth, where wind-direction has its own characteristics. At the equator, observed from the earth's surface, the wind blows from the surface up to the sky (see Figure 3). However, observed from the sky, the wind at the equator blows to the left (see Figure 4).

Figure 3: Wind direction observed from the Earth’s surface [11]

Figure 5: Wind directions caused by High (upper) and Low (lower) air pressures [11]

Figure 6: Wind, Highs, and Lows pattern in January [11]

Figure 7: Wind, Highs, and Lows pattern in July [11]

The conditions shown in the figures above indicate that weather in general has several regularities. This means that a weather forecast can be classified based on: 1) the area (longitude, latitude, altitude) on the earth, 2) the season (from January to December) of the area, and 3) the time-slot (morning, noon, afternoon, evening) within the season of the area. Weather becomes irregular when something unusual occurs, for instance a volcanic eruption, air-pollution from factories, or a comet hitting the earth.

2.2 Particular and Important Timeframes

Another thing that needs to be considered is choosing the best time frames for the learning data. As already mentioned, most of the time the weather condition is more or less similar to the one at the same time frame in the same season. Additionally, weather conditions do not change abruptly very often. Therefore, suitable and important time frames that contain unusual or extreme weather conditions need to be recorded. The collected data can then be used as training data, so that such unusual or extreme weather conditions can be forecast. In other words, the training data are the data that constitute unique patterns.

2.3 Methods of Forecasting

Plan recognizers have led researchers to construct a number of scenarios and to transform each scenario into a hierarchical task decomposition or a plan library. Based on such structures and libraries, they have built algorithms to infer the plan behind an activity, for example an attack, or even a natural catastrophe. The probability of each step is determined and calculated from previous observations. In a similar way, the associative memory mechanism of the mHGN allows stored patterns to be recalled and recognized from incomplete input patterns. Hence, the mHGN can be utilized as a weather forecaster, under the assumption that the patterns of the weather conditions are already stored in the mHGN.

Although weather conditions show regularities, the patterns stored in the mHGN must be updated dynamically to anticipate the dynamic characteristics of weather. An approach that takes a long time for preparation and recognition is therefore not suitable. In addition, the recognition quality of a weather forecast must be consistently accurate, because the dynamic condition of weather can increase false positive errors in weather forecasting, especially if the recognition quality depends on the number or the size of the stored patterns. Harmer et al. have warned of a similar issue for computer and network environments: constantly changing states, such as adding new applications or modifying file systems, can increase false positive and false negative errors. The same risk applies to a weather forecast system.

neuron has the same architecture and functionality. Moreover, the once-only training cycle makes the mHGN suitable for such a dynamic update process.

3 Multidimensional Hierarchical Graph Neuron (mHGN)

The need of handling multidimensional patterns for various purposes has been started long time ago. Although according to the previous publication, the

HGN—the previous version of the mHGN—can very

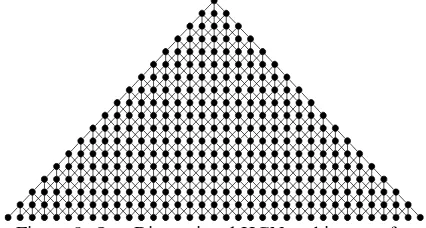

well recognize distorted 2D-patterns with up to 10% of distortion, the architecture that has been used is the one-dimensional HGN. So, for recognizing 2D-patterns (7X5 pixels), 324 neurons were deployed. The architecture looks like the following.

Figure 8: One-Dimensional HGN architecture for recognizing 7X5 2D Patterns [12]

The accuracy of the recognition results is quite high, but the one-dimensional HGN architecture represents 2D-patterns correctly neither visually nor physically. The following figure shows the transformation from the 2D arrangement of the patterns to the 1D architecture of the HGN at the base level of the hierarchy.

Figure 9: Transformation of 2D Architecture to 1D Architecture on the base level

It can be observed from Figure 9 that in the 2D architecture (left), cells nr. 15 and nr. 16 are located far from each other, but in the 1D architecture (right) they are next to each other. Conversely, cells nr. 21 and nr. 26 are close to each other in the 2D architecture, but far apart in the 1D architecture. This is evidence that the recognition results may not be 100% correct.
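The adjacency argument can be checked with a small sketch. It assumes, as the cell numbers in Figure 9 suggest, that the 35 cells of the 7X5 pattern are numbered row by row with five cells per row, and that the 1D architecture simply places them in that order; the function names are illustrative only.

```python
from __future__ import annotations

ROWS, COLS = 7, 5  # 7X5 pattern; assumed row-by-row numbering, five cells per row


def cell_to_2d(cell: int) -> tuple[int, int]:
    """Return the (row, column) of a 1-based cell number in the 2D pattern."""
    if not 1 <= cell <= ROWS * COLS:
        raise ValueError("cell number out of range")
    return divmod(cell - 1, COLS)


def distance_2d(a: int, b: int) -> int:
    """Manhattan distance between two cells in the 2D arrangement."""
    (ra, ca), (rb, cb) = cell_to_2d(a), cell_to_2d(b)
    return abs(ra - rb) + abs(ca - cb)


def distance_1d(a: int, b: int) -> int:
    """Distance between two cells after flattening the pattern into one row."""
    return abs(a - b)


for a, b in [(15, 16), (21, 26)]:
    print(f"cells {a} and {b}: 2D distance {distance_2d(a, b)}, "
          f"1D distance {distance_1d(a, b)}")
```

Under this numbering, cells 15 and 16 are 5 steps apart in 2D but neighbours in 1D, while cells 21 and 26 are neighbours in 2D but 5 steps apart in 1D.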

Therefore, an improved architecture that is suitable for handling true multidimensional patterns has been developed. For example, to recognize 7X5 2D-patterns, there are 35 neurons on the base level, 25 neurons (5X5) on the first level, 15 neurons (3X5) on the second level, 5 neurons (1X5) on the third level, 3 neurons (1X3) on the fourth level, and only one neuron (1X1) on the top level. So, the complete composition of the mHGN from the base level to the top level is: 7X5, 5X5, 3X5, 1X5, 1X3, 1X1. Thus, the total number of neurons is:

35 + 25 + 15 + 5 + 3 + 1 = 84.

Please note that the complete composition can also be: 7X5, 7X3, 7X1, 5X1, 3X1, 1X1.

The second composition is less suitable than the first, because it contains fewer neurons: the fewer the neurons, the less accurate the recognition results will be. For the second composition, the total number of neurons is 35 + 21 + 7 + 5 + 3 + 1 = 72, which is 12 neurons fewer than in the first composition. Note also that the one-dimensional composition comprises 18 layers, whereas the two-dimensional composition comprises only 6 layers for the same pattern size of 35 on the base level.
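The neuron counts above can be reproduced by summing the layer sizes of each composition. The sketch below treats each layer shape as a tuple and, as an assumption consistent with the 18 layers and 324 neurons quoted for the 7X5 case, models the one-dimensional HGN as layers of 35, 33, ..., 3, 1 neurons.

```python
from math import prod

# Layer shapes of the two 7X5 compositions described in the text.
option_1 = [(7, 5), (5, 5), (3, 5), (1, 5), (1, 3), (1, 1)]
option_2 = [(7, 5), (7, 3), (7, 1), (5, 1), (3, 1), (1, 1)]


def neurons_per_value(layers):
    """Total GNs for one data value: the sum of the sizes of all layers."""
    return sum(prod(shape) for shape in layers)


n1, n2 = neurons_per_value(option_1), neurons_per_value(option_2)
print(n1, n2, n1 - n2)  # 84 72 12

# Assumed one-dimensional HGN for the same 35 cells: 35, 33, ..., 3, 1.
layers_1d = list(range(35, 0, -2))
print(len(layers_1d), sum(layers_1d), len(option_1))  # 18 324 6
```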

3.1 Multidimensional Problems

The need to solve multidimensional problems has long been discussed. People are aware that handling complex problems requires considering and calculating values taken from numerous dimensions. Otherwise, results obtained by analyzing just a few parameters cannot be considered correct; in most cases, such an analysis has produced very high false positive and false negative error rates. Another issue related to solving multidimensional problems is the solving method used. In a complex system, not only is the number of dimensions large, but how all the dimensions are interrelated and interdependent is often not clear.

The weather system is a good example of a multidimensional system, so forecasting weather conditions is also a kind of multidimensional problem. Not only do air-temperature, air-pressure, air-humidity, wind-direction, and wind-speed determine the condition of weather; season, time-frame, and location (longitude, latitude, and altitude) also play a big role in affecting it. Moreover, flora and fauna populations, factory development, and people's movement also influence the condition of weather. However, these factors are hard to measure, and it is not clear what to measure or how to measure it.


Nevertheless, their influence can still be measured. For instance, whenever several factories have recently been established in one area and they produce a lot of heat, the temperature near the factories will be higher than the temperature away from them. This causes a weather condition that differs from the regular weather condition before the factories were built. It means that tangible measurements (such as air-temperature, air-pressure, air-humidity, wind-direction, and wind-speed) can still be used to measure intangible conditions (such as flora and fauna populations, industrial development, and people's movement). A remaining problem is the interdependency among those tangible and intangible values, since it is difficult to find a formula that captures it. This is a strong indication that such multidimensional problems may be solved using artificial intelligence approaches such as mHGN.

3.2 Multidimensional Pattern Recognition using mHGN

As is the case with a one-dimensional HGN, a pattern is received by the GNs in the base layer. However, in a multidimensional HGN (mHGN), the pattern size is the product of the sizes of all dimensions of the base layer. For example, the pattern size of a two-dimensional (2D) 7X5 mHGN array is 35, and that of a three-dimensional (3D) 7X5X3 mHGN array is 105. The following figure shows two examples of the visualized architecture.

Figure 10: mHGN configuration of 2D (7x5) (left) and 3D (7x5x3) (right) for pattern sizes 35 and 105 respectively

As already mentioned, for recognizing 7X5 2D-patterns the complete composition of the mHGN layers will be: 7X5, 5X5, 3X5, 1X5, 1X3, 1X1. Similarly, for recognizing 5X7X3 3D-patterns the complete composition will be: 5X7X3, 5X5X3, 5X3X3, 5X1X3, 3X1X3, 1X1X3, 1X1X1. These examples show that the size of each dimension plays a big role in the mHGN architecture and determines its best configuration. In order to gain the best recognition results, the configuration is concentrated on the greatest dimension of the base level, which is reduced first.
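The two compositions above follow a simple pattern that can be written as a short generator. The rule in this sketch is inferred from the 7X5 and 5X7X3 examples rather than taken from an mHGN specification: dimensions are processed from the largest base size to the smallest (ties keep their original order), and each shrinks by 2 per layer until it reaches 1. The function names are hypothetical, and only odd sizes are handled.

```python
from math import prod


def mhgn_composition(base):
    """Layer shapes of an mHGN from the base layer to the top (sketch).

    Assumed rule: dimensions are reduced in order of decreasing base size
    (sorted() is stable, so ties keep their left-to-right order); each one
    shrinks by 2 per layer until it reaches 1. Only odd sizes are supported.
    """
    if any(size < 1 or size % 2 == 0 for size in base):
        raise ValueError("this sketch assumes odd dimension sizes")
    shape = list(base)
    layers = [tuple(shape)]
    for dim in sorted(range(len(base)), key=lambda d: -base[d]):
        while shape[dim] > 1:
            shape[dim] -= 2
            layers.append(tuple(shape))
    return layers


def as_text(shape):
    """Format a shape tuple in the paper's AXB notation."""
    return "X".join(map(str, shape))


for base in [(7, 5), (5, 7, 3)]:
    layers = mhgn_composition(base)
    print(f"pattern size {prod(base)}:", ", ".join(as_text(s) for s in layers))
```

The pattern size printed for each base layer is the product of its dimension sizes (35 and 105), and the generated layer lists match the two compositions quoted above.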

Although increasing the dimensions of the composition reduces the number of GNs, there are, however, adverse consequences. The first and most important consequence is that the accuracy in recognising noisy patterns is diminished. Because fewer neurons are used, the higher-layer GNs must contend with a larger part of a pattern for analysis. For instance, using a one-dimensional HGN composition, a noisy pattern can possibly be recognised as a pattern of either S, Q, or H, and the percentage of the match would subsequently decide that pattern Q is the most likely match. On the other hand, a two-dimensional HGN composition would recognise the same noisy pattern as a pattern of Q, but without considering other possible matches, i.e., S and H. The reason for this is that the GNs that recognise sub-patterns of S and H in the one-dimensional case may no longer exist in higher dimensional compositions. The second consequence is that the number of parameters each GN must hold in higher dimensional compositions would be higher. Although this will not affect the recognition accuracy and timing performance, the configuration of the distributed architecture will become more complex.

3.2.1 Experiment Results

For the experiment, each GN is operated by a thread. We have examined 2D-, 3D-, 4D- and 5D-pattern recognition, using the compositions 15X15, 5X15X15, 5X5X15X15, and 5X5X5X15X15 mHGN respectively. For instance, the 15X15 pattern recognition requires 225 + 195 + 165 + 135 + 105 + 75 + 45 + 15 + 13 + 11 + 9 + 7 + 5 + 3 + 1 = 1009 neurons per data value. Because binary data are used to create the patterns, two values (i.e., 0 and 1) are required. Therefore, 2018 neurons are deployed in the 15X15 composition, and 2018 threads ran in parallel during this 2D pattern recognition. By using threads, the activity of neurons is simulated so that their behaviour is close to that of real neurons.
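The 1009-neuron sum for a 15X15 composition also has a closed form. Assuming the layers run a X b, (a-2) X b, ..., 1 X b and then 1 X (b-2), ..., 1 X 1 (as in the 15X15 list above, with odd a and b), the first phase contributes b times the sum of the odd numbers up to a, which is b((a+1)/2)^2, and the second phase contributes ((b-1)/2)^2. The sketch below checks this against the explicit sum; `gns_2d` is a hypothetical helper name.

```python
def gns_2d(a: int, b: int, values: int = 2) -> int:
    """GNs for an a X b mHGN whose first dimension is reduced first (sketch).

    Assumes odd a and b. Per data value:
        b * ((a + 1) / 2) ** 2  +  ((b - 1) / 2) ** 2
    which is multiplied by the number of data values (2 for binary patterns).
    """
    if a % 2 == 0 or b % 2 == 0:
        raise ValueError("this sketch assumes odd dimension sizes")
    per_value = b * ((a + 1) // 2) ** 2 + ((b - 1) // 2) ** 2
    return per_value * values


explicit = sum([225, 195, 165, 135, 105, 75, 45, 15, 13, 11, 9, 7, 5, 3, 1])
print(explicit, gns_2d(15, 15, values=1), gns_2d(15, 15))  # 1009 1009 2018
print(gns_2d(7, 5, values=1))  # 84, the 7X5 composition discussed earlier
```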

recognition results, the mHGN is fed with many randomly distorted alphabet patterns. The recognition accuracy is obtained by averaging the results.

Unlike our previous research, in which there was only one order of patterns and only one distorted pattern for each alphabet, in this experiment 20 distorted patterns were prepared for each alphabet. After acquiring the results, the experiment was repeated 10 times with the same steps, each time with the mHGN trained on the 26 alphabet patterns in a different random order. So, for each alphabet at a particular percentage of distortion, 200 distorted patterns were prepared in total as testing patterns.

Seven levels of distortion have been tested: 1.3%, 2.7%, 4.4%, 6.7%, 8.0%, 8.9%, and 10.7%. These levels were chosen based on the number of distorted pixels, which includes both factors and non-factors of the pattern dimension, so that all possibilities of distortion can be observed. In total, there are 5200 (26 x 20 x 10) randomly distorted testing patterns. Figure 11 shows 5 samples of different orders of the patterns:
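The paper does not spell out how a pattern is distorted. The sketch below assumes that distortion means inverting randomly chosen pixels of a 15X15 binary alphabet image (225 pixels). Under that assumption, the seven levels correspond to 3, 6, 10, 15, 18, 20, and 24 inverted pixels (for example, 24/225 ≈ 10.7%). The helper names and the blank placeholder pattern are illustrative.

```python
from __future__ import annotations

import random

SIDE = 15                     # assumed 15X15 alphabet images
PIXELS = SIDE * SIDE          # 225 pixels
LEVELS = [1.3, 2.7, 4.4, 6.7, 8.0, 8.9, 10.7]  # distortion levels (%)


def pixels_for_level(percent: float) -> int:
    """Number of pixels to invert for a given distortion percentage."""
    return round(percent / 100 * PIXELS)


def distort(pattern: list[int], n_flips: int, rng: random.Random) -> list[int]:
    """Return a copy of a binary pattern with n_flips distinct pixels inverted."""
    noisy = pattern[:]
    for i in rng.sample(range(len(pattern)), n_flips):
        noisy[i] = 1 - noisy[i]
    return noisy


print([pixels_for_level(p) for p in LEVELS])  # [3, 6, 10, 15, 18, 20, 24]

rng = random.Random(0)       # fixed seed so the run is repeatable
letter = [0] * PIXELS        # placeholder; a real letter bitmap would go here
noisy = distort(letter, pixels_for_level(10.7), rng)
print(sum(a != b for a, b in zip(letter, noisy)))  # 24
```

Repeating such a draw 20 times per letter over 10 training orders would give the 26 x 20 x 10 = 5200 test patterns per level described above.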

Figure 11: Five different randomly ordered alphabets.

The following shows some results of testing 4.4% randomly distorted patterns after the mHGN had stored the alphabet patterns in the order IEFXMQYJHPDKTORZCUALBGVWNS. The value to the right of each alphabet shows the portion (percentage) of the pattern that is recognized as the corresponding alphabet.

Figure 12: Results for all 26 alphabets, each randomly distorted by 4.4% twenty times.

The following shows 10 samples of distorted patterns of the alphabet “A” taken from the experiment of recognizing 5.8% randomly distorted patterns.

Figure 13: Ten different 5.8% randomly distorted patterns of the alphabet “A”

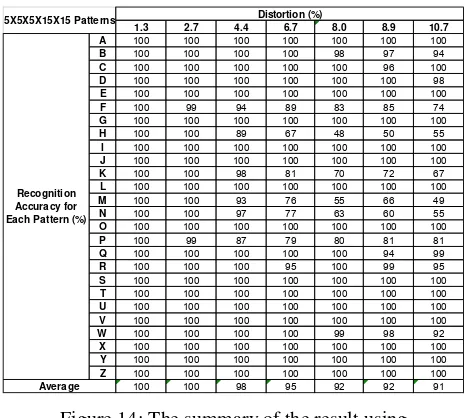

After collecting the results of testing 5200 patterns, we can summarize how accurately the mHGN recognizes the 26 alphabets at different levels of distortion. The summary is based on the average accuracy values from all the steps. The following shows the summarized result of testing distorted patterns using the five-dimensional 5X5X5X15X15 mHGN.

Figure 14: The summary of the result using 5X5X5X15X15 mHGN

Recognition accuracy for each pattern (%) by distortion level (%):

| Pattern | 1.3 | 2.7 | 4.4 | 6.7 | 8.0 | 8.9 | 10.7 |
|---|---|---|---|---|---|---|---|
| A | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| B | 100 | 100 | 100 | 100 | 98 | 97 | 94 |
| C | 100 | 100 | 100 | 100 | 100 | 96 | 100 |
| D | 100 | 100 | 100 | 100 | 100 | 100 | 98 |
| E | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| F | 100 | 99 | 94 | 89 | 83 | 85 | 74 |
| G | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| H | 100 | 100 | 89 | 67 | 48 | 50 | 55 |
| I | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| J | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| K | 100 | 100 | 98 | 81 | 70 | 72 | 67 |
| L | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| M | 100 | 100 | 93 | 76 | 55 | 66 | 49 |
| N | 100 | 100 | 97 | 77 | 63 | 60 | 55 |
| O | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| P | 100 | 99 | 87 | 79 | 80 | 81 | 81 |
| Q | 100 | 100 | 100 | 100 | 100 | 94 | 99 |
| R | 100 | 100 | 100 | 95 | 100 | 99 | 95 |
| S | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| T | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| U | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| V | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| W | 100 | 100 | 100 | 100 | 99 | 98 | 92 |
| X | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Y | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Z | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Average | 100 | 100 | 98 | 95 | 92 | 92 | 91 |

It can be seen from the last column of Figure 14 that the mHGN is able to recognize 91% of the 10.7% distorted patterns of the 26 alphabets. Some alphabets (A, C, E, G, I, J, L, O, S, T, U, V, X, Y, Z) are even recognized with 100% accuracy. Other alphabets, such as H, K, M, and N, are not recognized as well, because they are visually and physically very similar to one another. In fact, if this architecture is used to recognize different states of the same alphabet, such as regular-A, bold-A, and italic-A, as the same letter, then the mHGN will be able to achieve better accuracy.
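The Average row of the summary table is the plain mean over the 26 letters. The short check below recomputes it from the table values and lists the letters recognized at 100% at the 10.7% level; the variable names are illustrative.

```python
LEVELS = [1.3, 2.7, 4.4, 6.7, 8.0, 8.9, 10.7]  # distortion (%)

# Rows of the 5X5X5X15X15 summary table that are below 100 at some level.
# All other letters score 100 at every level.
PARTIAL = {
    "B": [100, 100, 100, 100, 98, 97, 94],
    "C": [100, 100, 100, 100, 100, 96, 100],
    "D": [100, 100, 100, 100, 100, 100, 98],
    "F": [100, 99, 94, 89, 83, 85, 74],
    "H": [100, 100, 89, 67, 48, 50, 55],
    "K": [100, 100, 98, 81, 70, 72, 67],
    "M": [100, 100, 93, 76, 55, 66, 49],
    "N": [100, 100, 97, 77, 63, 60, 55],
    "P": [100, 99, 87, 79, 80, 81, 81],
    "Q": [100, 100, 100, 100, 100, 94, 99],
    "R": [100, 100, 100, 95, 100, 99, 95],
    "W": [100, 100, 100, 100, 99, 98, 92],
}
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
table = {c: PARTIAL.get(c, [100] * len(LEVELS)) for c in ALPHABET}

averages = [sum(row[j] for row in table.values()) / len(table)
            for j in range(len(LEVELS))]
print([round(a) for a in averages])  # [100, 100, 98, 95, 92, 92, 91]

perfect = "".join(c for c, row in table.items() if row[-1] == 100)
print(perfect)  # ACEGIJLOSTUVXYZ
```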

Regarding real-time capability, the 2D mHGN architecture processes patterns much faster than its previous 1D version, because it uses fewer neurons. As already mentioned, 2018 GNs are used in this 2D architecture, whereas for the same 15X15 pattern size the 1D architecture would require 25538 GNs.
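The 25538 figure matches a one-dimensional HGN whose layers shrink by two per level. Assuming layers of P, P-2, ..., 3, 1 GNs for an odd pattern size P (consistent with the 324 GNs per value quoted for the 35-cell case), the sum of these odd numbers is ((P+1)/2)^2 per data value. The sketch below, with the hypothetical helper `gns_1d`, compares this with the 2018 GNs of the 15X15 mHGN; under this assumption the 1D architecture needs roughly 12.7 times as many GNs.

```python
def gns_1d(pattern_size: int, values: int = 2) -> int:
    """GNs for a one-dimensional HGN with an odd pattern size (sketch).

    Assumed layer sizes: P, P-2, ..., 3, 1, whose sum is ((P + 1) / 2) ** 2
    per data value; the result is multiplied by the number of data values.
    """
    if pattern_size % 2 == 0:
        raise ValueError("this sketch assumes an odd pattern size")
    return ((pattern_size + 1) // 2) ** 2 * values


one_d = gns_1d(15 * 15)   # 113 ** 2 * 2
two_d = 2018              # 15X15 mHGN, as quoted in the text
print(gns_1d(35, values=1), one_d, round(one_d / two_d, 1))  # 324 25538 12.7
```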

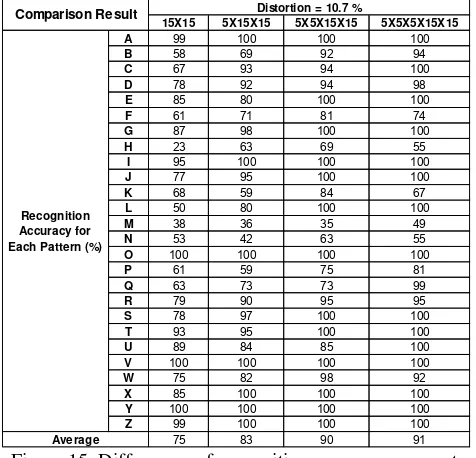

The following figure shows the differences in recognition accuracy among the 15X15, 5X15X15, 5X5X15X15, and 5X5X5X15X15 mHGN architectures when recognizing 10.7% distorted patterns of alphabets.

Figure 15: Differences of recognition accuracy amongst four different architectures

3.3 Time-Series in Pattern Recognition

As already mentioned in 2.3, recognizing patterns in a time-series problem utilizes data that has previously been recorded at regular intervals. For instance, if a single parameter is recorded every six hours, 4 values are recorded every day. In order to construct a pattern from the recorded values, a data representation must be developed so that they fit into a pattern recognition architecture. The following figure shows two ways of representing recorded data for 8 levels of measurement.

Figure 16: Two examples of data representation for 8-level values

It can be seen from Figure 16 that the data is represented using binary values, and that the number of bits that differ between any two levels grows linearly with the difference between their values. This representation makes the pattern recognizer work more accurately. The following figure shows an example of recorded data taken from a single-value measurement, where each value has 8 levels.

Figure 17: Data of 8-level values building a 2D-pattern

It can be seen from Figure 17 that the recorded values of an 8-level parameter construct a two-dimensional 30X8 pattern. Using these recorded data, the pattern recognizer can predict whether the same thing will occur again in 6 hours' time. That is, if values have been recorded and the recognizer recognizes the same pattern, the same thing is predicted to happen again in 6 hours' time.
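The codes in Figure 16 appear to be cumulative (thermometer-style): a level sets a run of consecutive bits, so two levels differ in exactly as many bits as their values differ. The sketch below assumes that reading of the figure, fills the bits from one end, and lays the 30 readings shown for Figure 17 out as rows of a 30X8 pattern; the row orientation and the function names are assumptions.

```python
from __future__ import annotations

N_BITS = 8  # 8 levels -> 8 bits per reading


def encode(level: int, n_bits: int = N_BITS) -> list[int]:
    """Cumulative code: the first `level` bits are 1, the rest are 0."""
    if not 0 <= level <= n_bits:
        raise ValueError("level out of range")
    return [1] * level + [0] * (n_bits - level)


def hamming(a: list[int], b: list[int]) -> int:
    """Number of bit positions in which two codes differ."""
    return sum(x != y for x, y in zip(a, b))


# The bit difference between two levels equals their value difference.
assert all(hamming(encode(i), encode(j)) == abs(i - j)
           for i in range(N_BITS + 1) for j in range(N_BITS + 1))

# Thirty readings taken every six hours, as shown for Figure 17.
readings = [2, 1, 1, 1, 3, 3, 2, 1, 1, 2, 2, 3, 4, 5, 5,
            6, 7, 8, 7, 6, 5, 5, 4, 3, 3, 3, 4, 5, 5, 4]
pattern = [encode(r) for r in readings]  # one row per reading -> 30X8
print(len(pattern), len(pattern[0]))     # 30 8
```

A code that fills the bits from the opposite end has the same distance property, so either of the two representations would serve.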

So, to predict what will occur in 6 hours' time using the 30X8 mHGN architecture, the recognizer needs to be fed with measurements recorded from 7 days and 6 hours ago until now. The 30X8 mHGN architecture can also be used to predict what will occur in 12 hours' time; for this purpose, the recognizer is fed with measurements recorded over the last 7 days only. In this case, the pattern is fed not with 30X8 but with only 29X8 binary data. This is equivalent to feeding the pattern recognizer with incomplete data (only 97% of the data), which the recognizer is still able to recognize. Similarly, to predict what will occur in 18 hours' time, the recognizer is fed with measurements recorded from 6 days and 18 hours ago (only 93% of the data). Such cases are covered by the results in section 3.2.1: after storing 26 patterns, the 5X5X5X15X15 mHGN is able to recognize incomplete/distorted (89%) patterns with 91% accuracy.
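The window arithmetic above can be tabulated with a few lines. The sketch assumes, as the text implies, that readings are taken every six hours, that a stored pattern spans 30 readings, and that each extra six hours of forecast horizon drops the newest reading from the input; `input_window` is a hypothetical name.

```python
STEP_HOURS = 6   # one reading every six hours
WINDOW = 30      # readings per stored pattern (the 30 in 30X8)


def input_window(horizon_hours: int) -> tuple:
    """Return (readings fed, % of pattern, days, hours) for a forecast horizon."""
    if horizon_hours < STEP_HOURS or horizon_hours % STEP_HOURS:
        raise ValueError("horizon must be a positive multiple of six hours")
    missing = horizon_hours // STEP_HOURS - 1   # newest readings not yet known
    fed = WINDOW - missing
    span_hours = (fed - 1) * STEP_HOURS         # from the oldest reading to now
    days, hours = divmod(span_hours, 24)
    return fed, round(100 * fed / WINDOW), days, hours


for h in (6, 12, 18):
    print(h, input_window(h))
# 6 (30, 100, 7, 6)
# 12 (29, 97, 7, 0)
# 18 (28, 93, 6, 18)
```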

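Comparison result, distortion = 10.7%: recognition accuracy for each pattern (%)

| Pattern | 15X15 | 5X15X15 | 5X5X15X15 | 5X5X5X15X15 |
|---|---|---|---|---|
| A | 99 | 100 | 100 | 100 |
| B | 58 | 69 | 92 | 94 |
| C | 67 | 93 | 94 | 100 |
| D | 78 | 92 | 94 | 98 |
| E | 85 | 80 | 100 | 100 |
| F | 61 | 71 | 81 | 74 |
| G | 87 | 98 | 100 | 100 |
| H | 23 | 63 | 69 | 55 |
| I | 95 | 100 | 100 | 100 |
| J | 77 | 95 | 100 | 100 |
| K | 68 | 59 | 84 | 67 |
| L | 50 | 80 | 100 | 100 |
| M | 38 | 36 | 35 | 49 |
| N | 53 | 42 | 63 | 55 |
| O | 100 | 100 | 100 | 100 |
| P | 61 | 59 | 75 | 81 |
| Q | 63 | 73 | 73 | 99 |
| R | 79 | 90 | 95 | 95 |
| S | 78 | 97 | 100 | 100 |
| T | 93 | 95 | 100 | 100 |
| U | 89 | 84 | 85 | 100 |
| V | 100 | 100 | 100 | 100 |
| W | 75 | 82 | 98 | 92 |
| X | 85 | 100 | 100 | 100 |
| Y | 100 | 100 | 100 | 100 |
| Z | 99 | 100 | 100 | 100 |
| Average | 75 | 83 | 90 | 91 |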
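The Average row of the comparison table can be recomputed in the same way. The check below also lists which letters stay below 80% with the 5X5X5X15X15 architecture; the 80% threshold is an arbitrary choice for illustration.

```python
ARCHS = ["15X15", "5X15X15", "5X5X15X15", "5X5X5X15X15"]

# Recognition accuracy (%) at 10.7% distortion, one tuple per letter,
# in the order of ARCHS (values from the comparison table).
ACCURACY = {
    "A": (99, 100, 100, 100), "B": (58, 69, 92, 94),
    "C": (67, 93, 94, 100), "D": (78, 92, 94, 98),
    "E": (85, 80, 100, 100), "F": (61, 71, 81, 74),
    "G": (87, 98, 100, 100), "H": (23, 63, 69, 55),
    "I": (95, 100, 100, 100), "J": (77, 95, 100, 100),
    "K": (68, 59, 84, 67), "L": (50, 80, 100, 100),
    "M": (38, 36, 35, 49), "N": (53, 42, 63, 55),
    "O": (100, 100, 100, 100), "P": (61, 59, 75, 81),
    "Q": (63, 73, 73, 99), "R": (79, 90, 95, 95),
    "S": (78, 97, 100, 100), "T": (93, 95, 100, 100),
    "U": (89, 84, 85, 100), "V": (100, 100, 100, 100),
    "W": (75, 82, 98, 92), "X": (85, 100, 100, 100),
    "Y": (100, 100, 100, 100), "Z": (99, 100, 100, 100),
}

for k, arch in enumerate(ARCHS):
    mean = sum(row[k] for row in ACCURACY.values()) / len(ACCURACY)
    print(f"{arch}: {round(mean)}")   # 75, 83, 90, 91 in turn

weak = [c for c, row in ACCURACY.items() if row[-1] < 80]
print(weak)  # ['F', 'H', 'K', 'M', 'N']
```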

[Figures 16 and 17 content: two 8-bit binary codes for levels 0 to 8; and the 30 readings of Figure 17, taken every six hours from 18.00 to 00.00: 2 1 1 1 3 3 2 1 1 2 2 3 4 5 5 6 7 8 7 6 5 5 4 3 3 3 4 5 5 4]

4 Multidimensional Graph Neuron for Real Time Weather Forecast

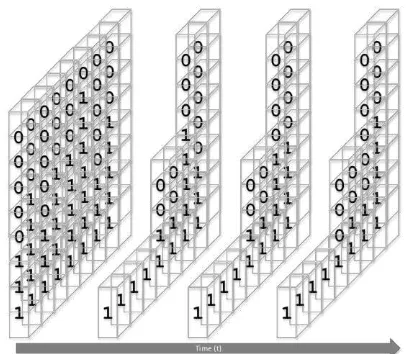

In the previous section, time-series values are described and represented so that they can be predicted using a pattern recognizer such as mHGN. In weather forecasting, a single parameter at a location, such as humidity, is not the only value that determines the temperature at that location within 6 hours' time. Several other parameters, such as air-temperature itself, wind-speed, wind-direction, and air-pressure, need to be measured as well. This means that the number of levels of a measured value increases with the number of parameters. If 5 parameters need to be measured and each value contains 8 levels, the required pattern structure would be 30X40.
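One way to obtain the 30X40 structure is to encode each of the five parameters with its own 8-bit code and concatenate the codes for every time step. The sketch below assumes that layout, the parameter order, and the same cumulative code used for a single parameter; the readings are randomly generated placeholders, not measurements.

```python
from __future__ import annotations

import random

N_BITS = 8
STEPS = 30
PARAMETERS = ["air-temperature", "air-humidity", "air-pressure",
              "wind-speed", "wind-direction"]


def encode(level: int, n_bits: int = N_BITS) -> list[int]:
    """Cumulative code: the first `level` bits are 1, the rest are 0."""
    return [1] * level + [0] * (n_bits - level)


def build_pattern(series: dict[str, list[int]]) -> list[list[int]]:
    """One row per time step; the parameters' codes are concatenated.

    With 5 parameters of 8 bits each, every row has 5 X 8 = 40 columns.
    """
    steps = len(series[PARAMETERS[0]])
    return [[bit for p in PARAMETERS for bit in encode(series[p][t])]
            for t in range(steps)]


rng = random.Random(1)  # synthetic levels 1..8, for illustration only
series = {p: [rng.randint(1, N_BITS) for _ in range(STEPS)] for p in PARAMETERS}
pattern = build_pattern(series)
print(len(pattern), len(pattern[0]))  # 30 40
```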

As also described in the previous section, measuring a parameter at a particular location over several periods of time generates a two-dimensional pattern. If a series of locations needs to be measured over several periods of time, the measured values become a three-dimensional pattern. The following figure depicts part of such a pattern.

Figure 18: A row of 8-level data values builds a 3D pattern

Extending this reasoning, if the locations to be measured cover a 2D area rather than a line, the measured values generate a 4D pattern. Furthermore, if the locations cover a 3D volume, the measured values generate a 5D pattern.
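The growth in dimensionality can be made concrete by listing the pattern shape for each spatial layout. This is a hypothetical sketch: `pattern_shape` is an illustrative helper, the sizes (a line of 5 points, 40 level rows, 30 time steps) are made up, and the axis order (space first, then level rows, then time) is assumed to follow the 3X3X3X41X21 ordering used later in Section 4.

```python
from math import prod

def pattern_shape(spatial_dims, n_rows, n_steps):
    """Shape of the binary pattern for a set of measurement points.

    spatial_dims is () for a single point, (n,) for a line of points,
    (nx, ny) for an area and (nx, ny, nz) for a volume.
    """
    return tuple(spatial_dims) + (n_rows, n_steps)

layouts = {"point": (), "line": (5,), "area": (3, 3), "volume": (3, 3, 3)}
for name, dims in layouts.items():
    shape = pattern_shape(dims, n_rows=40, n_steps=30)
    print(f"{name}: {len(shape)}D pattern, shape {shape}, {prod(shape)} cells")
```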

4.1 Global and Local Weather Forecast

As many people have experienced, weather conditions within a city may vary. At a particular time it may be pouring rain in one area, while two kilometers away the sun shines brightly. Similarly, at a particular longitude and latitude the temperature at the earth's surface may be 30 degrees, whereas at the same coordinates but a different altitude the temperature is -10 degrees. This is why the result of a global weather forecast can differ from that of a local weather forecast. Local weather forecasts are important to many people, as they describe weather conditions in more detail. However, few local weather forecasts are operated in a country. The reason is that a weather forecast generally requires sophisticated computational resources: large volumes of complex data need to be calculated and analyzed. If such forecasts had to be operated locally for many small areas, a country would need to provide very expensive infrastructure, including hardware and software. Generally, countries therefore provide only country-wide (global) weather forecasts rather than local ones.

4.2 The Architecture of mHGN for Time-Series Weather Data

The utilization of mHGN introduces a new approach in which a local weather forecast can be operated using small and cheap equipment. The values of air-temperature, air-humidity, air-pressure, wind-speed, and wind-direction can be obtained from ordinary sensors. The area covered by those sensors can be a 3D area, because such small sensors can easily be mounted in valleys, on hills, or even on vehicles. The sensors can be connected to a tiny computer, such as a Raspberry Pi, which is responsible for running several GNs. The values taken from the sensors are then processed within the GNs. The connectivity of neurons is developed within each tiny computer and through the interconnection of the tiny computers.

As already discussed, the parameters that need to be known in a weather forecast are temperature, wind-speed, wind-direction, air-pressure, and air-humidity. Therefore, one mHGN architecture needs to be developed for every parameter to be forecast. The values taken from the sensors, however, are shared by all the mHGN architectures, and the output of each prediction depends on the precision level required for its parameter.

If the mHGN architecture forecasts the temperature in this city with a precision of 1 degree, the output of mHGN must have 11 different values to cover the city's temperature range. If the temperature should instead have a precision of 0.5 degree, the output of mHGN must have 22 different values. Other scaling follows in the same way: halving the precision step doubles the number of output values.
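The relation between precision and output size can be sketched as cutting a temperature range into bins of equal width. The 22 to 33 degree range below is purely hypothetical, chosen only because an 11-degree span reproduces the 11 and 22 output values quoted above; the helper names are illustrative and not part of mHGN.

```python
import math

def num_output_values(t_min: float, t_max: float, precision: float) -> int:
    """Number of distinct outputs when [t_min, t_max) is cut into bins of width `precision`."""
    return math.ceil((t_max - t_min) / precision)

def to_output_index(temp: float, t_min: float, t_max: float, precision: float) -> int:
    """Bin index for a temperature; readings outside the range are clamped to the end bins."""
    n = num_output_values(t_min, t_max, precision)
    idx = int((temp - t_min) // precision)
    return min(max(idx, 0), n - 1)

def from_output_index(idx: int, t_min: float, precision: float) -> float:
    """Temperature reported for a bin: its midpoint."""
    return t_min + (idx + 0.5) * precision

# Hypothetical range of 22-33 degrees, i.e. an 11-degree span.
for precision in (1.0, 0.5):
    print(precision, num_output_values(22.0, 33.0, precision))
```

Using bin midpoints when converting an output back to a temperature keeps the reporting error within half a precision step.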

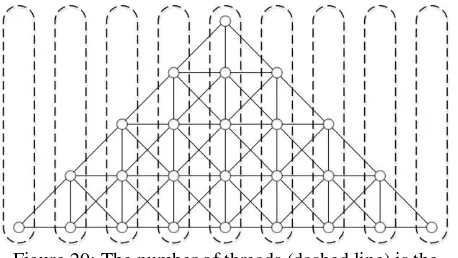

As discussed in Section 3.2, the number of neurons on the base layer of an mHGN architecture is the product of the dimension sizes. Each layer above it contains fewer neurons than the layer below, and this arrangement forms the hierarchy of mHGN. Figure 19 shows an example of a one-dimensional mHGN architecture in which the base layer contains 9 neurons and the whole mHGN contains 25 neurons.

Figure 19: The hierarchy of a one-dimensional mHGN of size 9
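The numbers in Figure 19 are consistent with a hierarchy in which each layer has two neurons fewer than the layer below it, down to a single top neuron. Assuming that rule, the layer sizes are 9, 7, 5, 3 and 1, and for an odd base size s the total is ((s + 1)/2)², the sum of the first (s + 1)/2 odd numbers. The helper name `hierarchy_layers` is hypothetical.

```python
def hierarchy_layers(base_size: int) -> list[int]:
    """Neurons per layer of a 1-D hierarchy, bottom to top.

    Assumes each layer has two neurons fewer than the one below it,
    ending in a single top neuron (so base_size must be odd).
    """
    if base_size < 1 or base_size % 2 == 0:
        raise ValueError("base_size must be a positive odd number")
    return list(range(base_size, 0, -2))

layers = hierarchy_layers(9)
print(layers, sum(layers))  # [9, 7, 5, 3, 1] 25
assert sum(layers) == ((9 + 1) // 2) ** 2  # sum of the first five odd numbers
```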

In the HGN and mHGN experiments, each neuron and its functions were run by a separate thread. However, the number of threads becomes enormous, especially when mHGN is used to work on multidimensional patterns. For example, a 15X15 mHGN architecture requires 2018 neurons, which means that 2018 threads need to be run. Such a number of threads would be difficult to run on a Raspberry Pi. The new approach runs the neurons using only as many threads as there are neurons on the base level. As Figure 20 shows, instead of 25 threads, the new approach needs only 9 threads to implement the mHGN architecture of Figure 19.

Figure 20: The number of threads (dashed lines) equals the number of neurons on the base level
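One way to realize the dashed-line threads of Figure 20 is to give each base-level position its own thread and let that thread handle every neuron stacked above it, with a barrier so that a layer starts only after the layer below has finished. This is a hypothetical sketch, not the authors' implementation: `process_neuron` is a placeholder for a neuron's memorization/recall work, and the two-fewer-neurons-per-layer layout is assumed.

```python
import threading

def process_neuron(layer: int, pos: int) -> tuple[int, int]:
    """Hypothetical placeholder for one neuron's memorization/recall work."""
    return (layer, pos)

def run_hierarchy(base_size: int) -> list[tuple[int, int]]:
    """Run a 1-D hierarchy with one thread per base-level column.

    Assumes layer k spans positions k .. base_size-1-k (two fewer neurons per layer),
    so the thread for column `pos` handles every neuron stacked above that position.
    """
    n_layers = (base_size + 1) // 2
    barrier = threading.Barrier(base_size)  # all column threads meet after each layer
    lock = threading.Lock()
    processed: list[tuple[int, int]] = []

    def column_worker(pos: int) -> None:
        for layer in range(n_layers):
            if layer <= pos <= base_size - 1 - layer:  # this column has a neuron here
                result = process_neuron(layer, pos)
                with lock:
                    processed.append(result)
            barrier.wait()  # the next layer starts only when this one is finished

    threads = [threading.Thread(target=column_worker, args=(p,)) for p in range(base_size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed

done = run_hierarchy(9)
print(len(done))  # 25 neurons processed by 9 threads
```

Because the middle column reaches the top neuron, its thread does the most work (5 neurons), while the edge threads each handle only one base neuron and then just wait at the barrier.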

In short, to build a weather forecast for a particular location, five parameters need to be measured: air-temperature, air-humidity, wind-speed, wind-direction, and air-pressure. If each parameter is represented by 8-bit binary data, 41-bit data is needed for the measurement of all 5 parameters. For the time series, 21 successive measurements will be carried out, and to cover the location, 3X3X3 measurement points will be deployed. The mHGN dimension will therefore be 3X3X3X41X21.
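Taking the stated dimensions as given, the size of the base layer follows directly as their product. The dictionary keys below are illustrative labels only.

```python
from math import prod

# Dimensions stated in the text: 3x3x3 measurement points, 41 bits, 21 time steps.
dims = {"x": 3, "y": 3, "z": 3, "bits": 41, "time_steps": 21}

base_neurons = prod(dims.values())
print(base_neurons)  # 23247
```

Under the one-thread-per-base-neuron approach described above, this would correspond to 23247 threads, presumably spread across the interconnected tiny computers.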

5 Discussion

In alphabet pattern recognition, patterns differ more or less clearly from one another. In time-series measurement data, however, the patterns constructed from the sensors' measured values can be very similar to one another. Therefore, the way measured values are represented before the data is fed to the mHGN architecture plays a major role in obtaining accurate results. False positive and true negative rates will also serve as indicators of the quality of mHGN in forecasting weather.
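A small illustration of why representation matters, assuming each reading is a level from 1 to 8: with a one-hot code every change of level yields the same Hamming distance, whereas a cumulative (thermometer) code makes the distance grow with the size of the change. Both encodings and the helper names are assumptions for illustration, not the representation the paper prescribes.

```python
def encode_reading(level: int, levels: int = 8, cumulative: bool = False) -> list[int]:
    """Bit vector for one reading: one-hot, or every bit up to `level` when cumulative."""
    if cumulative:
        return [1 if lv <= level else 0 for lv in range(1, levels + 1)]
    return [1 if lv == level else 0 for lv in range(1, levels + 1)]

def hamming(a: list[int], b: list[int]) -> int:
    """Number of positions where two equal-length bit vectors differ."""
    return sum(x != y for x, y in zip(a, b))

for cumulative in (False, True):
    near = hamming(encode_reading(3, cumulative=cumulative), encode_reading(4, cumulative=cumulative))
    far = hamming(encode_reading(3, cumulative=cumulative), encode_reading(8, cumulative=cumulative))
    print("cumulative" if cumulative else "one-hot", near, far)
# one-hot 2 2
# cumulative 1 5
```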

The data used to validate this work will be weather data taken from different cities and countries. Because mHGN is trained in a single cycle only, choosing the right data for training is a challenge. Once appropriate training data has been applied, mHGN will be able to forecast the air-temperature, air-humidity, air-pressure, wind-speed, and wind-direction at a particular longitude, latitude, and altitude.

6 Conclusion

will be improved to the extent that multi-oriented multidimensional patterns will also be recognizable. At this stage it is also observed that mHGN still uses single-cycle memorization and recall operations. The scheme retains a small response time that is insensitive to increases in the number of stored patterns.

7 References

[1] K. Anderson, Predicting the Weather: Victorians and the Science of Meteorology, Chicago and London: The University of Chicago Press, 2005.

[2] C. Duchon and R. Hale, Time Series Analysis in Meteorology and Climatology: An Introduction, Oxford, UK: John Wiley & Sons, Ltd, 2012.

[3] A. Gluhovsky, "Subsampling Methodology for the Analysis of Nonlinear Atmospheric Time Series," Lecture Notes in Earth Sciences, vol. 1, no. 1, pp. 3-16, 2000.

[4] P. Inness and S. Dorling, Operational Weather Forecasting, West Sussex, UK: John Wiley & Sons, Ltd, 2013.

[5] P. Lynch, "The origins of computer weather prediction and climate modeling," Journal of Computational Physics, vol. 227, no. 2008, pp. 3431-3444, 2007.

[6] J. Miksovsky, P. Pisoft and A. Raidl, "Global Patterns of Nonlinearity in Real and GCM-Simulated Atmospheric Data," Lecture Notes in Earth Sciences, vol. 1, no. 1, pp. 17-34, 2000.

[7] T. Vasquez, Weather Forecasting Handbook, Garland, USA: Weather Graphics Technologies, 2002.

[8] D. B. Percival, "Analysis of Geophysical Time Series Using Discrete Wavelet Transforms: An Overview," Lecture Notes in Earth Sciences, vol. 1, no. 1, pp. 61-80, 2000.

[9] F. K. Lutgens and E. J. Tarbuck, The Atmosphere: An Introduction to Meteorology, Glenview, USA: Pearson, 2013.

[10] F. Nebeker, Calculating the Weather: Meteorology in the 20th Century, San Diego, USA: Academic Press, 1995.

[11] S. Yorke, Weather Forecasting Made Simple, Newbury, Berkshire, UK: Andrews UK Limited, 2011.

[12] B. B. Nasution and A. I. Khan, "A Hierarchical Graph Neuron Scheme for Real-time Pattern Recognition," IEEE Transactions on Neural Networks, pp. 212-229, 2008.

[13] B. B. Nasution, "Towards Real Time Multidimensional Hierarchical Graph Neuron (mHGN)," in The 2nd International Conference on Computer

![Figure 2 : The Classification of the Earth’s Air and its corresponding temperature [9]](https://thumb-ap.123doks.com/thumbv2/123dok/1608352.1552693/2.595.327.526.86.291/figure-classification-earth-s-air-corresponding-temperature.webp)

![Figure 7: Wind, Highs, and Lows pattern in July [11]](https://thumb-ap.123doks.com/thumbv2/123dok/1608352.1552693/3.595.94.240.69.220/figure-wind-highs-lows-pattern-july.webp)