Chapter 5, “Adaptive Coding Techniques for Reliable Medical Network Channel” by Talha and Kohno, is motivated by the need to economically meet the requirements of a reliable medical network infrastructure and to establish reliable medical transmission over cellular networks. The technical parameters of the additional channel codes are fixed for the “Medical Network Channel (MNC).” Capabilities are determined for the AWGN channel and for Rayleigh fading with a parameter distribution function equal to 0.55.

Introduction

Link capacity is one of the main constraints affecting the performance of on-chip interconnects. By combining crosstalk avoidance with error-checking codes, reliable communication is achieved in a network-on-chip (NoC)-based system on chip (SoC). Due to its parallelism, the NoC offers high performance in terms of scalability and flexibility, even with millions of on-chip devices.

The effect of crosstalk capacitance is more horizontal than vertical; therefore, crosstalk errors often occur in on-chip connecting wires [2]. Crosstalk avoidance codes (CACs) are widely used to control errors in on-chip interconnects, thereby providing reliable communication.

Related work

This condition reduced the worst-case capacitance from $(1 + 4\lambda) C_L$ to $(1 + 2\lambda) C_L$; therefore, the energy dissipation decreases from $(1 + 4\lambda) C_L \alpha V_{dd}^2$ to $(1 + 2\lambda) C_L \alpha V_{dd}^2$, where $\lambda$ is the ratio between the coupling capacitance and the total capacitance, $C_L$ is the self-capacitance of the connecting wire, $\alpha$ is the transition factor, and $V_{dd}$ is the supply voltage of the system. Power dissipation is reduced by reducing the transition activity of the connecting wires for the data packet. Errors occur mainly in the interconnecting wires due to coupling capacitance; therefore, a robust error correcting code (ECC) is required for error detection and correction [4].
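As a worked illustration of the two energy expressions, the following minimal sketch evaluates both and the relative saving. The numeric values of λ, C_L, α, and Vdd are hypothetical and chosen only for the arithmetic; they are not taken from the chapter.

```python
def worst_case_energy(lam, c_l, alpha, vdd, coupling_factor):
    """Energy per transition: (1 + k * lambda) * C_L * alpha * Vdd^2.

    coupling_factor k is 4 for the uncoded worst case and 2 once the
    crosstalk avoidance condition is enforced.
    """
    return (1 + coupling_factor * lam) * c_l * alpha * vdd ** 2


# Hypothetical example values (assumptions, not from the source).
lam = 2.0      # coupling ratio lambda
c_l = 1e-13    # wire self-capacitance in farads
alpha = 0.5    # transition factor
vdd = 1.0      # supply voltage in volts

e_uncoded = worst_case_energy(lam, c_l, alpha, vdd, coupling_factor=4)
e_coded = worst_case_energy(lam, c_l, alpha, vdd, coupling_factor=2)

# Relative saving simplifies to 2*lambda / (1 + 4*lambda).
saving = 1 - e_coded / e_uncoded
```

The saving depends only on λ, because C_L, α, and Vdd cancel in the ratio.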

Errors can be single or multiple, and multiple errors also occur in the connecting wires; therefore, ECCs alone are not sufficient to detect and correct them. Parity check, dual rail (DR), modified dual rail (MDR), boundary shift code (BSC), and CAC are among the most widely used techniques for controlling multiple wire faults.

Joint CAC-ECC

To find out the error-free group, the parity of group 1 (p1) is compared with the sent parity (p0). If p0 is equal to p1, group 1 is considered error-free, otherwise group 3 is considered error-free.

If p0 is equal to p1, group 1 is considered to be fault-free, otherwise group 2 is fault-free. If group 2 is equal to group 3, the group is considered error-free, otherwise group 1 is considered error-free.
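The parity comparison described above can be sketched as follows. This is a minimal illustration, not the authors' decoder: the function names, the even-parity convention, and the choice of which copy serves as the fallback group are assumptions (the text mentions both group 2 and group 3 as fallbacks, so the fallback is passed in as a parameter).

```python
def parity(bits):
    """Even parity bit of a list of 0/1 values (XOR of all bits)."""
    p = 0
    for b in bits:
        p ^= b
    return p


def select_error_free_group(group1, fallback, p0):
    """Return group1 if its parity p1 matches the sent parity p0,
    otherwise return the fallback copy of the data."""
    p1 = parity(group1)
    return group1 if p1 == p0 else fallback


# Hypothetical example: the sender transmits copies of `data` plus p0.
data = [1, 0, 1, 1]
p0 = parity(data)

received_group1 = [1, 1, 1, 1]   # one bit flipped by crosstalk
received_fallback = [1, 0, 1, 1]  # copy assumed to arrive intact

decoded = select_error_free_group(received_group1, received_fallback, p0)
```

A single parity bit only reveals an odd number of bit errors in group 1, which is one reason the scheme keeps more than one copy of the data.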

Advanced NoC router

Combined LPC-CA-ECC scheme

Although the CAC-ECC scheme detects and corrects crosstalk errors effectively, power consumption and data packet latency increase substantially because the advanced error control scheme uses more interconnect wires. Because the original data is tripled, the combined CAC-ECC scheme uses more wires, and the power consumption of the advanced method therefore increases.

HARQ

Implementation

The bus-invert (BI) technique reduces the number of transitions by using the Hamming distance of the original data packet to decide whether to invert the original data before encoding. The data transfer of the encoder is higher than that of the decoder because of the number of cycles the decoder requires for detection and correction. The number of required cycles grows with the number of error bits and with the data width.
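A minimal sketch of bus-invert coding may help here. It follows the classic formulation, in which the new word is compared with the value currently on the bus and inverted when more than half of the wires would toggle; whether the chapter's BI block uses exactly this reference value is an assumption, and all function names are hypothetical.

```python
def hamming_distance(a, b):
    """Number of bit positions in which integers a and b differ."""
    return bin(a ^ b).count("1")


def bus_invert_encode(prev_bus, data, width):
    """Return (bus_value, invert_bit) for a bus of `width` wires.

    The data is inverted when more than half of the wires would switch
    relative to the previous bus value.
    """
    mask = (1 << width) - 1
    data &= mask
    if 2 * hamming_distance(prev_bus & mask, data) > width:
        return (~data) & mask, 1
    return data, 0


def bus_invert_decode(bus_value, invert_bit, width):
    """Undo the inversion signalled by invert_bit."""
    mask = (1 << width) - 1
    return (~bus_value) & mask if invert_bit else bus_value & mask


# Hypothetical 8-bit example: 7 of 8 wires would toggle, so BI inverts.
encoded, invert = bus_invert_encode(0b00000000, 0b11110111, 8)
recovered = bus_invert_decode(encoded, invert, 8)
```

In this example only one data wire toggles after inversion, at the cost of one extra invert line.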

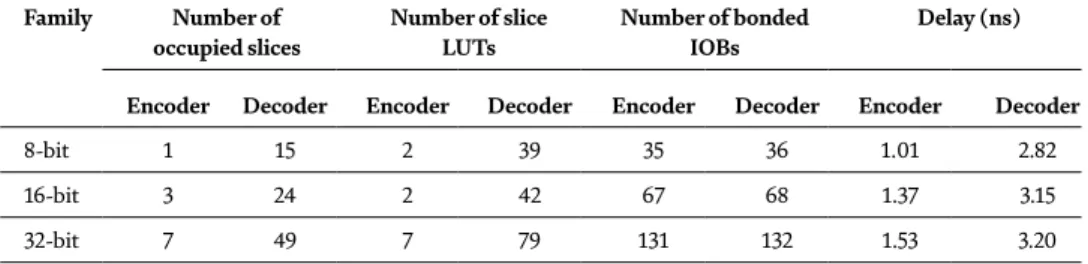

It can be seen from Table 3 that the data transmission rate of the NoC in the presence of soft errors decreased with the increase of the data width due to the higher number of interconnections. Nevertheless, the area utilization is increased in the joint LPC-CAC-ECC scheme due to the many combinational circuits required in the BI method to reduce power consumption.

Conclusion

The image pair is then used for disparity estimation (Topic 2.3), the most non-trivial part of the procedure. The optical center is the position on the image that coincides with the principal axis of the camera setup. This difference in the image quality of the two cameras is reflected in the later stage of disparity estimation.

The window must completely cover the smaller of the two lines (detected lines in the two input images). The image features are color coded according to their disparity values.

Advancement of biomedical applications

- Signal processing in networked cyber-physical systems

- Cyber-physical systems in signal processing

- Multimodal multimedia signal processing

- Statistical signal processing

- Signal processing techniques for data hiding and audio watermarking

- Optical signal processing

- Virtual physiological human initiative

- Brain-computer interfaces

Biosignal processing and machine learning [12] based medical image analysis help doctors diagnose diseases accurately. Multimodal speaker recognition identifies the active speaker in an audio-video clip containing several speakers, based on the relationship between the sound and the motion in the video [10]. Statistical signal processing is an approach to signal processing that treats signals as stochastic processes, using their statistical properties to perform signal processing tasks.

Optical signal processing brings together different areas of optics and signal processing, namely nonlinear devices and processes, analog and digital signals, and advanced data modulation formats, to achieve high-speed signal processing capabilities that can potentially operate at the line rate of fiber-optic communications [17, 18]. The Virtual Physiological Human (VPH) is a composite collection of computational frameworks and ICT-based tools for multilevel modeling and simulation of human anatomy and physiology.

Neural networks and computing

Big data in bioinformatics

Image reconstruction and analysis

- Biomedical imaging

- Intelligent imaging

- PDE based image analysis

- Visualization of 3D MRI brain tumor image

- Hyperspectral imaging

- Artificial neural networks in image processing

In biomedical computing, the persistent challenges are the management, analysis, and storage of biomedical data. One of the services used for the storage and transmission of image data is the picture archiving and communication system (PACS). In this way, it is difficult to portray and evaluate the 2-D case, the use of an elective use in the algorithmic sense of the supposed.

In addition, in the 1-D case, in contrast to the original algorithmic definition of EMD, the efficiency of the PDE-based technique was demonstrated in a recent paper. The results obtained confirm the value of the new PDE-based filtering process for decomposing different types of data.

Discussion

Conclusion

Other steps of the proposed decomposition method are discussed in detail in the next section. By using the steerable angle, the directional edge response can be controlled in the output stage of the transformed image.

Phase-stretch gradient field extractor (PAGE) directional filter banks. (A)–(D) Directional filter banks of PAGE computed using the definition in Eq.

With PAGE, the features are sharper because the bandwidth of the response is determined by the input image dimension. As the edges of the fingerprint rotate, the response value (shown here with different color values) changes.

Many-core technology

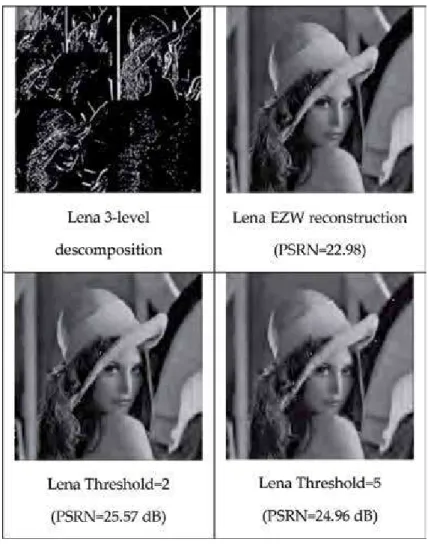

With this iterative process, multiple levels of transformation are generated in which the energy is fully compacted and represented by a few low-frequency coefficients [10].

Embedded Zerotree coding

At the end of the process, the image was decomposed by applying the 2-D DWT algorithm to the LL subband [9]. Wavelet coefficients are two-dimensional; given this, an image can be represented using trees because of the sub-sampling performed in the transformation. Fourier analysis splits a signal into sine waves of different frequencies; similarly, wavelet analysis breaks a signal down into shifted and scaled versions of the original (mother) wavelet.

In the dominant pass, the magnitude of the wavelet coefficients is compared to an arbitrary threshold value, and the significant coefficients are determined. The inclusion of the ZTR symbol increases coding efficiency because the encoder exploits the correlation between image scales.
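The repeated application of the 2-D DWT to the LL subband can be sketched with a Haar wavelet. This is a minimal illustration under stated assumptions: the chapter does not say which wavelet is used, the averaging normalization (divide by 2) is chosen for readability rather than orthonormality, and the subband names and function names are hypothetical. The image is a list of rows whose height and width are divisible by 2 at every level.

```python
def haar_dwt2(x):
    """One level of a 2-D Haar DWT on a list-of-rows image.

    Returns (ll, (lh, hl, hh)): the approximation subband and three
    detail subbands, each half the size of the input in both directions.
    """
    rows, cols = len(x), len(x[0])
    # Filter along each row: pairwise average (low) and difference (high).
    lo = [[(r[j] + r[j + 1]) / 2 for j in range(0, cols, 2)] for r in x]
    hi = [[(r[j] - r[j + 1]) / 2 for j in range(0, cols, 2)] for r in x]

    def split_columns(m):
        width = len(m[0])
        avg = [[(m[i][j] + m[i + 1][j]) / 2 for j in range(width)]
               for i in range(0, rows, 2)]
        dif = [[(m[i][j] - m[i + 1][j]) / 2 for j in range(width)]
               for i in range(0, rows, 2)]
        return avg, dif

    ll, lh = split_columns(lo)
    hl, hh = split_columns(hi)
    return ll, (lh, hl, hh)


def multilevel_dwt2(image, levels):
    """Apply haar_dwt2 repeatedly to the LL subband."""
    ll = image
    details = []
    for _ in range(levels):
        ll, d = haar_dwt2(ll)
        details.append(d)
    return ll, details


# Hypothetical 4x4 constant image: all energy ends up in the LL subband.
flat = [[5.0] * 4 for _ in range(4)]
ll, details = multilevel_dwt2(flat, levels=2)
```

Because each level halves both dimensions, a coefficient at a coarse level corresponds to four coefficients at the next finer level, which is the parent-child relation that tree-based coders rely on.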

Proposed algorithm

The positive significant and negative significant coefficients are those above an arbitrary threshold, usually starting with the highest power of two below the maximum wavelet coefficient value; an isolated zero is a wavelet coefficient that is insignificant but has a significant descendant. The subordinate pass follows, in which the significant coefficients detected are refined under the successive approximation quantization (SAQ) approach [21].
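The thresholding step can be sketched on a flat list of coefficients. This minimal example assumes the usual EZW convention that the initial threshold is the largest power of two not exceeding the maximum coefficient magnitude and that the threshold is halved on each pass; it deliberately ignores the tree structure, so zerotree roots and isolated zeros are both reported as a generic "Z". All names are hypothetical.

```python
import math


def initial_threshold(coeffs):
    """Largest power of two not exceeding the maximum magnitude.

    Assumes at least one coefficient has magnitude >= 1.
    """
    c_max = max(abs(c) for c in coeffs)
    return 2 ** int(math.floor(math.log2(c_max)))


def saq_thresholds(t0, passes):
    """Threshold schedule for successive passes: t0, t0/2, t0/4, ..."""
    return [t0 // (2 ** k) for k in range(passes)]


def significance_symbols(coeffs, threshold):
    """Label each coefficient as POS, NEG, or Z (insignificant).

    A full coder would split Z into zerotree root and isolated zero by
    inspecting descendants, and would skip coefficients that were
    already found significant in an earlier pass.
    """
    symbols = []
    for c in coeffs:
        if abs(c) >= threshold:
            symbols.append("POS" if c > 0 else "NEG")
        else:
            symbols.append("Z")
    return symbols


# Hypothetical coefficient values for illustration only.
coeffs = [57, -37, 39, -20, 3]
t0 = initial_threshold(coeffs)
schedule = saq_thresholds(t0, passes=3)
first_pass = significance_symbols(coeffs, schedule[0])
```

Halving the threshold on each pass is what makes the bitstream embedded: decoding can stop after any pass and still yield a coarser approximation.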

Parallel implementation

In this paper, the recursive transform method is used for multilevel decomposition, and the resulting data is then processed before zerotree compression. The block diagram of a wavelet-based image coding algorithm is shown in Figure 3. Our algorithm is applied as a preprocessing stage; this allows the elimination of data in the transformed image that are not relevant or meaningful for image reconstruction. The use of three subbands contributes to a smaller scale within the image reconstruction process because the coefficients are mostly zero, and the few large values correspond to boundary information and textures in the image [22].

In this article, an algorithm was proposed to reduce the less essential data in order to achieve higher compression, preserving the high-value coefficients while eliminating the lower values of the minimum-value subband. In this epoch, most of the values are close to zero and the coefficients have the smallest data in each sub-band, where it is used as a threshold to eliminate the low-value data in that sub-band.

Distributions of data and tasks

Task Decomposition: This refers to the division of labor among the cores, each of which is responsible for part of the processing tasks. A task is a section of the program that can be executed in parallel, simultaneously with other tasks. The algorithm is implemented on the Epiphany III system with 16 cores operating at 1 GHz, which solves a 128 × 128 matrix multiplication in 2 ms.

The breakdown of tasks should be followed by a synchronization of the various parties involved to ensure data consistency. The matrices A and B are processed on each of the cores in the system, and each core produces the result for its elements of the matrix C.
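The idea of task decomposition followed by synchronization can be sketched with a row-wise split of a matrix product. This is only an illustration of the partitioning, written with Python threads; it does not use the Epiphany SDK, and the row-block partitioning, the worker count, and the function names are assumptions rather than the chapter's implementation.

```python
from concurrent.futures import ThreadPoolExecutor


def multiply_rows(a, b, row_range):
    """Compute the rows of C = A * B whose indices are in row_range."""
    inner = len(b)
    cols = len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(inner))
             for j in range(cols)]
            for i in row_range]


def parallel_matmul(a, b, workers=4):
    """Split the rows of A into contiguous blocks, one task per block.

    Assumes a is non-empty. Leaving the `with` block waits for every
    task, which plays the role of the synchronization step.
    """
    n = len(a)
    chunk = (n + workers - 1) // workers
    ranges = [range(s, min(s + chunk, n)) for s in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in task order, so row order is preserved.
        parts = list(pool.map(lambda r: multiply_rows(a, b, r), ranges))
    return [row for part in parts for row in part]


# Small hypothetical example.
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = parallel_matmul(A, B)
```

Each task reads A and B but writes only its own rows of C, so no two tasks touch the same output element and a single barrier at the end is enough for consistency.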

Experiment results

The input matrices are A and B, and C is the resulting matrix. Each element of C at coordinate (i, j), that is (row, column), is computed from row i of A and column j of B: $C_{ij} = \sum_k A_{ik} B_{kj}$.

Conclusion

The driving force behind this model is parsimony, i.e. the rapid decay of the representation coefficients over the dictionary. The question is how to efficiently represent an image over a trained dictionary so as to improve the performance of image compression. The JPEG [25] and JPEG2000 [26] standards result from the use of the analysis sparse representation of the image through the design of analytical dictionaries, e.g.

By assigning different sparsity levels to each block, we achieve a more efficient sparse representation of the image. The encoder generates a residual image and encodes it with a sparse representation of the residual image via a trained dictionary.
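To make the notion of a per-block sparsity level concrete, here is a minimal sketch using plain matching pursuit. The chapter does not state which pursuit algorithm or dictionary is used, so matching pursuit, the unit-norm toy dictionary, the block values, and all names below are assumptions for illustration only.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def matching_pursuit(signal, dictionary, sparsity):
    """Greedy sparse coding with at most `sparsity` selection steps.

    `dictionary` is a list of unit-norm atoms. Returns a dict mapping
    atom index to coefficient, plus the final residual.
    """
    residual = list(signal)
    coeffs = {}
    for _ in range(sparsity):
        scores = [dot(residual, atom) for atom in dictionary]
        best = max(range(len(dictionary)), key=lambda k: abs(scores[k]))
        coeffs[best] = coeffs.get(best, 0.0) + scores[best]
        residual = [r - scores[best] * a
                    for r, a in zip(residual, dictionary[best])]
    return coeffs, residual


# Toy dictionary: the standard basis of R^3 (hypothetical).
atoms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Two hypothetical blocks, each coded with its own sparsity level.
blocks = [([3.0, 0.0, -1.0], 1), ([3.0, 0.0, -1.0], 2)]
coded = [matching_pursuit(block, atoms, k) for block, k in blocks]
```

With a sparsity of 1 the second component of the block is left in the residual, while a sparsity of 2 represents the block exactly; a residual coder would then only need to handle what the first stage leaves behind.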

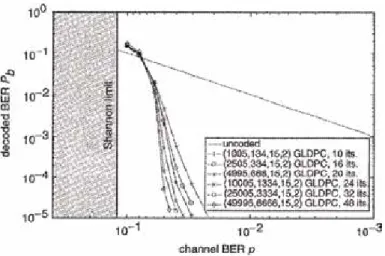

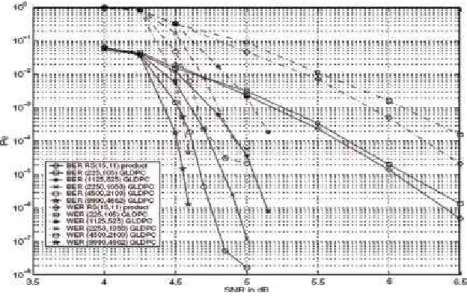

![Figure 3 shows that a chase rather than optimal SISO decoder can be successfully employed in the decoding of high-rate extended Hamming-based GLDPC codes and the BERs are close to the capacity with the efficient fast chase decoding in [21].](https://thumb-ap.123doks.com/thumbv2/1libvncom/9202154.0/26.765.191.577.80.333/successfully-employed-decoding-extended-hamming-capacity-efficient-decoding.webp)

![Figure 3 shows that a chase rather than optimal SISO decoder can be successfully employed in the decoding of high-rate extended Hamming-based GLDPC codes and the BERs are close to the capacity with the efficient fast chase decoding in [21].](https://thumb-ap.123doks.com/thumbv2/1libvncom/9202154.0/27.765.241.520.464.722/successfully-employed-decoding-extended-hamming-capacity-efficient-decoding.webp)

![Figure 12 is showing the BER performance of the ext-Hamming-based GLDPC code (with overall rate R ¼ 0:625) by the TSSA-BF algorithm compared to HD decoder BF algorithms in [7, 22] and SD chase sub-decoder (number of LRPs p ¼ 2)](https://thumb-ap.123doks.com/thumbv2/1libvncom/9202154.0/34.765.192.575.79.370/figure-showing-performance-hamming-overall-algorithm-compared-algorithms.webp)

![Figure 17 shows the GLDPC BER performance using the (32,26,4) extended Hamming subcode by this decoding with respect to the bit-flipping algorithms in [7, 22] and [23]](https://thumb-ap.123doks.com/thumbv2/1libvncom/9202154.0/38.765.173.597.832.989/figure-performance-extended-hamming-subcode-decoding-flipping-algorithms.webp)

![Figure 17 shows the GLDPC BER performance using the (32,26,4) extended Hamming subcode by this decoding with respect to the bit-flipping algorithms in [7, 22] and [23]](https://thumb-ap.123doks.com/thumbv2/1libvncom/9202154.0/39.765.144.615.252.393/figure-performance-extended-hamming-subcode-decoding-flipping-algorithms.webp)