GUIDe: Gaze-enhanced UI Design

Abstract

The GUIDe (Gaze-enhanced User Interface Design) project in the HCI Group at Stanford University explores how gaze information can be effectively used as an augmented input in addition to keyboard and mouse. We present three practical applications of gaze as an augmented input for pointing and selection, application switching, and scrolling. Our gaze-based interaction techniques do not overload the visual channel and present a natural, universally-accessible and general purpose use of gaze information to facilitate interaction with everyday computing devices.

Keywords

Pointing and Selection, Eye Pointing, Application Switching, Automatic Scrolling, Scrolling, Eye Tracking.

ACM Classification Keywords

H5.2. User Interfaces: Input devices and strategies, H5.2. User Interfaces: Windowing Systems, H5.m. Information interfaces and presentation (e.g., HCI): Miscellaneous.

Manu Kumar

Stanford University, HCI Group, Gates Building, Room 382, 353 Serra Mall, Stanford, CA 94305-9035

sneaker@cs.stanford.edu

Terry Winograd

Stanford University, HCI Group, Gates Building, Room 388, 353 Serra Mall

Copyright is held by the author/owner(s).

CHI 2007, April 28–May 3, 2007, San Jose, California, USA. ACM 978-1-59593-642-4/07/0004.

Introduction

The keyboard and mouse have long been the dominant forms of input on computer systems. Eye gaze tracking as a form of input was primarily developed for disabled users who are unable to make normal use of a keyboard and pointing device. However, with the increasing accuracy and decreasing cost of eye gaze tracking systems [1, 2, 6] it will soon be practical for able-bodied users to use gaze as a form of input in addition to keyboard and mouse, provided the resulting interaction is an improvement over current techniques. The GUIDe (Gaze-enhanced User Interface Design) project [9] in the HCI Group at Stanford University explores how gaze information can be effectively used as an augmented input in addition to keyboard and mouse. In this paper, we present three practical applications of gaze as an augmented input for pointing and selection, application switching, and scrolling.

Motivation

Human beings look with their eyes. When they want to point (either on the computer or in real life) they look before they point [8]. Therefore, using eye gaze as a way of interacting with a computer seems like a natural extension of our human abilities. However, to date, research has suggested that using eye gaze for any active control task is not a good idea. In his paper on MAGIC pointing [18] Zhai states that “to load the visual perception channel with a motor control task seems fundamentally at odds with users’ natural mental model in which the eye searches for and takes in information and the hand produces output that manipulates external objects. Other than for disabled users, who have no alternative, using eye gaze for practical pointing does not appear to be very promising.”

In his paper in 1990, Jacob [7] states that: “what is needed is appropriate interaction techniques that incorporate eye movements into the user-computer dialogue in a convenient and natural way.” In a later paper in 2000, Sibert and Jacob [15] conclude that: “Eye gaze interaction is a useful source of additional input and should be considered when designing interfaces in the future.”

For our research we chose to investigate how gaze-based interaction techniques can be made simple, accurate and fast enough to not only allow disabled users to use them for standard computing applications, but also make the threshold of use low enough that able-bodied users will actually prefer to use gaze-based interaction.

Related Work

Researchers have tried numerous approaches to gaze based pointing [3, 7, 10-13, 16-18]. However, its use outside of research circles has been limited to disabled users who are otherwise unable to use a keyboard and mouse. Disabled users are willing to tolerate customized interfaces, which provide large targets for gaze based pointing. The lack of accuracy in eye-based pointing and the performance issues of using dwell-based activation have created a high enough threshold that able-bodied users have preferred to use the keyboard and mouse over gaze based pointing [8].

Gaze-based scrolling is fraught with Midas Touch¹ [7] issues and therefore there hasn’t been much in the literature regarding gaze-based scrolling to date.

EyePoint: Pointing and Selection

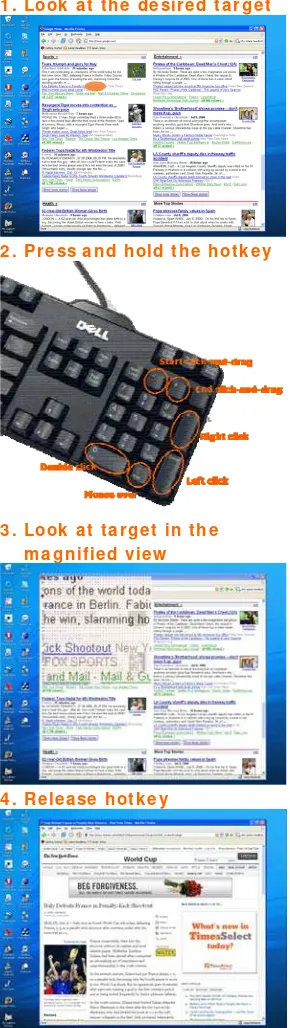

EyePoint provides a practical gaze-based solution for everyday pointing and selection using a combination of gaze and keyboard. EyePoint works by using a two-step, progressive refinement process that is fluidly stitched together in a look-press-look-release cycle.

To use EyePoint, the user simply looks at the target on the screen and presses a hotkey for the desired action: single click, double click, right click, mouse over, or start click-and-drag². EyePoint displays a magnified view of the region the user was looking at. The user looks at the target again in the magnified view and releases the hotkey. This results in the appropriate action being performed on the target (sidebar).

To abort an action the user can look away or anywhere outside of the zoomed region and release the hotkey, or press the Esc key on the keyboard.

The region around the user’s initial gaze point is presented in the magnified view with a grid of orange dots overlaid. These orange dots are called focus points and may aid in focusing the user’s gaze at a point within the target. Focusing at a point reduces the jitter and improves the accuracy of the system.

Single click, double click and right click actions are performed as soon as the user releases the key. Click and drag is a two-step interaction. The user first selects the starting point for the click and drag with one hotkey and then the destination with another hotkey.

¹ Unintentional activation of a command when a user is looking around.

² We provide hotkeys for all standard mouse actions in our research prototype; a real world implementation may use alternative activation techniques such as dedicated buttons located below the spacebar.

Technical Details

The eye tracker constantly tracks the user’s eye-movements³. A modified version of Salvucci’s Dispersion Threshold Identification fixation detection algorithm [14] is used along with our own smoothing algorithm to help filter the gaze data. When the user presses and holds one of the action specific hotkeys on the keyboard, the system uses the key press as a trigger to perform a screen capture in a confidence interval around the user’s current eye-gaze. The default settings use a confidence interval of 120 pixels square (60 pixels in all four directions from the estimated gaze point). The system then applies a magnification factor (default 4x) to the captured region of the screen. The resulting image is shown to the user at a location centered at the previously estimated gaze point but offset to remain within screen boundaries.
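The capture and placement geometry described above can be sketched as follows. This is a minimal illustration, not the prototype's code: the names `Rect`, `capture_region` and `magnified_view` are hypothetical, the defaults (60-pixel half-size, 4x zoom) come from the text, and shifting the capture square inward near a screen edge is an assumption (the text only states that the magnified view is offset to stay on screen).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    left: int
    top: int
    width: int
    height: int


def clamp(value: int, low: int, high: int) -> int:
    """Restrict value to the inclusive range [low, high]."""
    return max(low, min(value, high))


def capture_region(gaze_x: int, gaze_y: int, screen_w: int, screen_h: int,
                   half_size: int = 60) -> Rect:
    """Square of side 2 * half_size around the gaze point.

    Assumption: near a screen edge the square is shifted inward
    so that it stays fully on screen.
    """
    side = 2 * half_size
    left = clamp(gaze_x - half_size, 0, screen_w - side)
    top = clamp(gaze_y - half_size, 0, screen_h - side)
    return Rect(left, top, side, side)


def magnified_view(gaze_x: int, gaze_y: int, screen_w: int, screen_h: int,
                   half_size: int = 60, zoom: int = 4) -> Rect:
    """Where to draw the zoomed image: centered on the gaze point,
    but offset so that it remains within the screen boundaries."""
    side = 2 * half_size * zoom
    left = clamp(gaze_x - side // 2, 0, screen_w - side)
    top = clamp(gaze_y - side // 2, 0, screen_h - side)
    return Rect(left, top, side, side)
```

With the default settings the 120-pixel capture square becomes a 480-pixel magnified view; on a hypothetical 1280 x 1024 screen, a gaze point in the bottom-right corner yields a view whose top-left corner is pushed back to (800, 544).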

³ If the eye tracker were fast enough, it would be possible to begin tracking when the hotkey is pressed, alleviating long-term use concerns for exposure to infra-red illumination.

[Sidebar figure: steps for using EyePoint. 2. Press and hold the hotkey. 3. Look at target in the magnified view. 4. Release hotkey.]

The user then looks at the desired target in the magnified view and releases the hotkey. The user’s eye gaze is recorded when the hotkey is released. Since the view has been magnified, the resulting eye-gaze is more accurate by a factor equal to the magnification. A transform is applied to determine the location of the desired target in screen coordinates. The cursor is then moved to this location and the action corresponding to the hotkey (single click, double click, right click etc.) is executed. EyePoint therefore uses a secondary gaze point in the magnified view to refine the location of the target.
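One way to picture the transform is as a linear mapping from the magnified view back to the captured region. The sketch below assumes exactly that; the function name `view_to_screen` and its parameters are hypothetical, and returning `None` models the abort case in which the user releases the hotkey while looking outside the zoomed region.

```python
from typing import Optional, Tuple


def view_to_screen(gaze: Tuple[float, float],
                   view_origin: Tuple[float, float],
                   view_size: float,
                   region_origin: Tuple[float, float],
                   zoom: float = 4.0) -> Optional[Tuple[float, float]]:
    """Map the second gaze point (inside the magnified view) to screen coordinates.

    gaze          -- gaze point recorded when the hotkey is released
    view_origin   -- top-left corner of the magnified view on screen
    view_size     -- side length of the (square) magnified view in pixels
    region_origin -- top-left corner of the originally captured region
    zoom          -- magnification factor (default 4x, as in the text)
    """
    gx, gy = gaze
    vx, vy = view_origin
    inside = vx <= gx < vx + view_size and vy <= gy < vy + view_size
    if not inside:
        return None  # user looked away from the zoomed region: abort the action
    rx, ry = region_origin
    # Offsets inside the view shrink by the zoom factor, so gaze error shrinks too.
    return (rx + (gx - vx) / zoom, ry + (gy - vy) / zoom)
```

Under this mapping an 8-pixel gaze error in the magnified view corresponds to a 2-pixel error on screen at 4x zoom, which is the sense in which the refined gaze point is more accurate by a factor equal to the magnification.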

Evaluation

A quantitative evaluation of EyePoint shows that the performance of EyePoint is similar to the performance of a mouse, though with slightly higher error rates. Users strongly preferred the experience of using gaze-based pointing over the mouse even though they had years of experience with the mouse.

EyeExposé: Application Switching

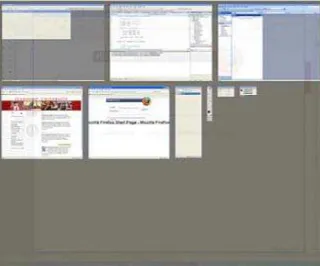

EyeExposé combines a full-screen two-dimensional thumbnail view of the open applications with gaze-based selection for application switching.

Figure 2 and Figure 3 show how EyeExposé works: to switch to a different application, the user presses and holds down a hotkey. EyeExposé responds by showing a scaled down view of all the applications that are currently open on the desktop. The user simply looks at the desired target application and releases the hotkey.

The use of eye gaze instead of the mouse for pointing is a natural choice. The size of the tiled windows in Exposé is usually large enough for eye-tracking accuracy to not be an issue. Whether the user relies on eye gaze or the mouse for selecting the target, the visual search task to find the desired application in the tiled view is a prerequisite step. By using eye gaze with an explicit action (the release of the hotkey) we can leverage the user’s natural visual search to point to the desired selection.

Evaluation

A quantitative evaluation showed that EyeExposé was significantly faster than using Alt-Tab when switching between twelve open applications. Error rates in application switching were minimal, with one error occurring in every twenty or more trials. In a qualitative evaluation, where subjects ranked four different application switching techniques (Alt-Tab, Task bar, Exposé w/ mouse and EyeExposé), EyeExposé was the subjects' choice for speed, ease of use, and the technique they said they would prefer to use if they had all four approaches available. Subjects felt that EyeExposé was more natural and faster than other approaches.

EyeScroll: Reading mode and Scrolling

EyeScroll allows computer users to automatically and adaptively scroll through content on their screen. Both the moments at which scrolling starts or stops and the speed of the scrolling are controlled by the user's eye gaze and the speed at which the user is reading. EyeScroll provides multiple modes for scrolling, specifically a reading mode and off-screen dwell-based targets.

Reading Mode

The EyeScroll reading mode allows users to read a web page or a long document, without having to constantly scroll the page manually. The reading mode is toggled by using the Scroll Lock key, a key which has otherwise been relegated to having no function on the keyboard. Once the reading mode is enabled by pressing the Scroll Lock key, the system tracks the user’s gaze. When the user’s gaze location falls below a system-defined threshold (i.e. the user looks at the bottom part of the screen) EyeScroll starts to slowly scroll the page. The rate of the scrolling is determined by the speed at which the user is reading and is usually slow enough to allow the user to continue reading even as the text scrolls up. As the user’s gaze slowly drifts up on the screen and passes an upper threshold, the scrolling is paused. This allows the reader to continue reading naturally, without being afraid that the text will run off the screen.

Figure 2. Pressing and holding the EyeExposé hotkey tiles all open applications on the screen. The user simply looks at the desired target application and releases the hotkey.

The reading speed is estimated by measuring the amount of time t it takes the user to complete one horizontal sweep from left to right and back. The delta in the number of vertical pixels the user’s gaze moved (accounting for any existing scrolling rate) defines the distance d in number of vertical pixels. The reading speed can then be estimated as d/t.
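The d/t estimate can be written out as a small function. This is only a sketch: the name `reading_speed` and its parameters are hypothetical, and "accounting for any existing scrolling rate" is interpreted here as adding the distance the page scrolled during the sweep to the on-screen gaze displacement, which is an assumption about the authors' method.

```python
def reading_speed(y_start: float, y_end: float, t_seconds: float,
                  scroll_rate: float = 0.0) -> float:
    """Estimate reading speed in vertical pixels per second as d / t.

    y_start, y_end -- on-screen gaze y (pixels, increasing downward) at the
                      start and end of one left-to-right-and-back sweep
    t_seconds      -- duration t of that sweep
    scroll_rate    -- current scroll rate in pixels per second (text moving up)
    """
    if t_seconds <= 0:
        raise ValueError("sweep duration must be positive")
    # Assumption: while the page scrolls, the reader advances through the
    # document by the on-screen displacement plus the scrolled distance.
    d = (y_end - y_start) + scroll_rate * t_seconds
    return d / t_seconds
```

For example, a reader whose gaze stays at the same screen height for a 4-second sweep while the page scrolls at 5 pixels per second is progressing through the document at 5 pixels per second, the same as a reader who moves 20 pixels down a stationary page in that time.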

In order to facilitate scanning, the scrolling speed can also take into account the location of the user’s gaze on the screen. If the user’s gaze is closer to the lower edge of the screen, the system can speed up scrolling and if the gaze is in the center or the upper region of the screen the system can slow down scrolling accordingly. Since the scrolling speed and when the scrolling starts and stops are both functions of the user’s gaze, the system adapts to the reading style and patterns of each individual user.
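The start, pause and speed-adjustment behaviour of the reading mode could be combined in a small controller such as the one below. Everything numeric here is an assumption made for illustration: the paper does not give the threshold positions or the speed adjustment, so the class name `ReadingModeScroller`, the 70% and 30% thresholds, and the linear gain between 0.5 and 1.5 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReadingModeScroller:
    screen_height: int
    start_fraction: float = 0.7  # assumed lower threshold: start scrolling below it
    pause_fraction: float = 0.3  # assumed upper threshold: pause scrolling above it
    min_gain: float = 0.5        # assumed speed multiplier at the top of the screen
    max_gain: float = 1.5        # assumed speed multiplier at the bottom of the screen
    scrolling: bool = False

    def update(self, gaze_y: float, reading_speed: float) -> float:
        """Return the scroll rate (pixels per second) for the latest gaze sample."""
        # Normalised vertical gaze position: 0.0 = top edge, 1.0 = bottom edge.
        pos = min(max(gaze_y / self.screen_height, 0.0), 1.0)
        if pos >= self.start_fraction:
            self.scrolling = True
        elif pos <= self.pause_fraction:
            self.scrolling = False
        # Between the two thresholds the previous state is kept (hysteresis).
        if not self.scrolling:
            return 0.0
        # Scroll faster when the gaze is near the lower edge, slower higher up.
        gain = self.min_gain + (self.max_gain - self.min_gain) * pos
        return reading_speed * gain
```

Keeping separate start and pause thresholds means that scrolling, once started, continues while the gaze drifts through the middle of the screen and only pauses after it passes the upper threshold, matching the behaviour described for the reading mode.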

By design the page is always scrolled only in one direction. This is because while reading top to bottom is a fairly natural activity that can be detected based on gaze patterns, attempting to reverse scrolling direction triggers too many false positives. Therefore, we chose to combine the reading mode with explicit dwell-based activation for scrolling up in the document (see below).

The user can disengage the reading mode by pressing the Scroll Lock key on the keyboard.

Off-screen dwell-based targets

We placed multiple off-screen targets as seen in Figure 4 on the bezel of the screen. The eye-tracker’s field of view is sufficient to detect when the user looks at these off-screen targets. A dwell duration of 400-450 ms is used to trigger activation of the targets which are mapped to Page Up, Page Down, Home and End keys on the keyboard.
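Dwell-based activation of such a target can be sketched as a timer that starts when the gaze enters the target and fires once the dwell duration has elapsed. The class `DwellTarget` and its coordinate convention (negative y for a target on the bezel above the screen) are hypothetical; the 400 ms default is the lower end of the range given in the text.

```python
from typing import Optional


class DwellTarget:
    """A rectangular gaze target that fires once after a continuous dwell."""

    def __init__(self, key_name: str, left: float, top: float,
                 width: float, height: float, dwell_ms: float = 400.0):
        self.key_name = key_name  # e.g. "Page Up", "Page Down", "Home", "End"
        self.left, self.top = left, top
        self.width, self.height = width, height
        self.dwell_ms = dwell_ms
        self._entered_at: Optional[float] = None
        self._fired = False

    def contains(self, x: float, y: float) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)

    def update(self, x: float, y: float, now_ms: float) -> bool:
        """Feed one gaze sample; return True exactly once per completed dwell."""
        if not self.contains(x, y):
            # Looking away resets the timer and re-arms the target.
            self._entered_at = None
            self._fired = False
            return False
        if self._entered_at is None:
            self._entered_at = now_ms
        if not self._fired and now_ms - self._entered_at >= self.dwell_ms:
            self._fired = True
            return True
        return False
```

Firing only once per dwell avoids repeated Page Up or Page Down events while the user keeps looking at the target; the user has to look away and back to trigger it again.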

The off-screen dwell-based targets for document navigation complement the automated reading mode described above. Since the reading mode only provides scrolling in one direction, if the user wants to scroll up or navigate to the top of the document he/she can do that by using the off-screen targets.

Evaluation

Pilot studies showed that subjects found the EyeScroll reading mode to be natural and easy to use. Subjects particularly liked that the scrolling speed adapted to their reading speed. Additionally, they felt in control of the scrolling and did not feel that the text was running away at any point. Formal evaluation of EyeScroll is currently underway and results will be presented at a future conference.

Conclusion

We will demonstrate several practical examples of using gaze as an augmented input for interaction with computers. Our approach uses gaze as an input without overloading the visual channel and consequently enables interactions which feel natural and easy to use.

The true efficacy and ease of use of these techniques can only be experienced; a description in words does not do them justice. We look forward to having CHI attendees try the techniques for themselves!

Figure 4. Eye-tracker (Tobii 1750) with

Acknowledgements

We would also like to acknowledge Stanford Media X and the Stanford School of Engineering matching funds grant for providing the funding for this work.

References

1. IPRIZE: a $1,000,000 Grand Challenge designed to spark advances in eye-tracking technology through competition, 2006. http://hcvl.hci.iastate.edu/IPRIZE/

2. Amir, A., L. Zimet, A. Sangiovanni-Vincentelli, and S. Kao. An Embedded System for an Eye-Detection Sensor. Computer Vision and Image Understanding, CVIU Special Issue on Eye Detection and Tracking 98(1). pp. 104-23, 2005.

3. Ashmore, M., A. T. Duchowski, and G. Shoemaker. Efficient Eye Pointing with a FishEye Lens. In Proceedings of Graphics Interface. pp. 203-10, 2005.

4. Bolt, R. A. Gaze-Orchestrated Dynamic Windows. Computer Graphics 15(3). pp. 109-19, 1981.

5. Fono, D. and R. Vertegaal. EyeWindows: Evaluation of Eye-Controlled Zooming Windows for Focus Selection. In Proceedings of CHI. Portland, Oregon, USA: ACM Press. pp. 151-60, 2005.

6. Hansen, D. W., D. MacKay, and J. P. Hansen. Eye Tracking off the Shelf. In Proceedings of ETRA: Eye Tracking Research & Applications Symposium. San Antonio, Texas, USA: ACM Press. pp. 58, 2004.

7. Jacob, R. J. K. The Use of Eye Movements in Human-Computer Interaction Techniques: What You Look At is What You Get. In Proceedings of ACM Transactions in Information Systems. pp. 152-69, 1991.

8. Jacob, R. J. K. and K. S. Karn, Eye Tracking in Human-Computer Interaction and Usability Research: Ready to Deliver the Promises, in The Mind's eye: Cognitive and Applied Aspects of Eye Movement Research, J. Hyona, R. Radach, and H. Deubel, Editors. Elsevier Science: Amsterdam. pp. 573-605, 2003.

9. Kumar, M., GUIDe: Gaze-enhanced User Interface Design, 2006. Stanford. http://hci.stanford.edu/research/GUIDe

10. Lankford, C. Effective Eye-Gaze Input into Windows. In Proceedings of ETRA: Eye Tracking Research & Applications Symposium. Palm Beach Gardens, Florida, USA: ACM Press. pp. 23-27, 2000.

11. Majaranta, P. and K.-J. Räihä. Twenty Years of Eye Typing: Systems and Design Issues. In Proceedings of ETRA: Eye Tracking Research & Applications Symposium. New Orleans, Louisiana, USA: ACM Press. pp. 15-22, 2002.

12. Miniotas, D., O. Špakov, I. Tugoy, and I. S. MacKenzie. Speech-Augmented Eye Gaze Interaction with Small Closely Spaced Targets. In Proceedings of ETRA: Eye Tracking Research & Applications Symposium. San Diego, California, USA: ACM Press. pp. 67-72, 2006.

13. Salvucci, D. D. Intelligent Gaze-Added Interfaces. In Proceedings of CHI. The Hague, The Netherlands: ACM Press. pp. 273-80, 2000.

14. Salvucci, D. D. and J. H. Goldberg. Identifying Fixations and Saccades in Eye-Tracking Protocols. In Proceedings of ETRA: Eye Tracking Research & Applications Symposium. Palm Beach Gardens, Florida, USA: ACM Press. pp. 71-78, 2000.

Proceedings of CHI. Seattle, Washington, USA: ACM Press. pp. 349-56, 2001.

17. Yamato, M., A. Monden, K.-i. Matsumoto, K. Inoue, and K. Torii. Button Selection for General GUIs Using Eye and Hand Together. In Proceedings of AVI. Palermo, Italy: ACM Press. pp. 270-73, 2000.

18. Zhai, S., C. Morimoto, and S. Ihde. Manual and Gaze Input Cascaded (MAGIC) Pointing. In Proceedings of