Adaptive Artificial Limbs

By Patrick M. Pilarski, Michael Rory Dawson, Thomas Degris, Jason P. Carey, K. Ming Chan, Jacqueline S. Hebert, and Richard S. Sutton

Predicting the future has long been regarded as a powerful means to improvement and success. The ability to make accurate and timely predictions enhances our ability to control our situation and our environment. Assistive robotics is one prominent area in which foresight of this kind can bring improved quality of life. In this article, we present a new approach to acquiring and maintaining predictive knowledge during the online, ongoing operation of an assistive robot. The ability to learn accurate, temporally abstracted predictions is shown through two case studies: 1) able-bodied myoelectric control of a robot arm and 2) an amputee’s interactions with a myoelectric training robot. To our knowledge, this research is the first demonstration of a practical method for real-time prediction learning during myoelectric control. Our approach therefore represents a fundamental tool for addressing one major unsolved problem: amputee-specific adaptation during the ongoing operation of a prosthetic device. The findings in this article also contribute a first explicit look at prediction learning in prosthetics as an important goal in its own right, independent of its intended use within a specific controller or system. Our results suggest that real-time learning of predictions and anticipations is a significant step toward more intuitive myoelectric prostheses and other assistive robotic devices.

Digital Object Identifier 10.1109/MRA.2012.2229948
Date of publication: 8 March 2013

Myoelectric Prostheses

Assistive biomedical robots augment the abilities of amputees and other patients with impaired physical or cognitive function due to traumatic injury, disease, aging, or congenital complications. In this article, we focus on one representative class of assistive robots: myoelectric artificial limbs. These prostheses monitor electromyographic (EMG) signals produced by muscle tissue in a patient’s body, and use the recorded signals to control the movement of a robotic appendage with one or more actuators. Myoelectric limbs are therefore tightly coupled to a human user with control processes that operate at high frequency and over extended periods of time. Commercially available devices include powered elbow, wrist, and hand assemblies from a number of suppliers (Figure 1). Research into technologies and surgeries related to next-generation artificial limbs is also being carried out internationally at a number of institutions [1]–[10].

Despite the potential for improved functional abilities, many patients reject the use of powered artificial limbs [1]–[3]. Recent studies point out three principal reasons why amputees reject myoelectric forearm prostheses: lack of intuitive control, insufficient functionality, and insufficient feedback from the myoelectric device [1], [3]. As noted throughout the literature, the identified issues extend beyond the domain of forearm prostheses and are major barriers for upper-body myoelectric systems of all kinds.

The challenges faced by myoelectric prosthesis users are currently being addressed through improved medical techniques, new prosthetic technologies, and advanced control paradigms. In the first approach, medical innovation with targeted motor and sensory reinnervation surgery is opening new ground for intuitive device control and feedback [8], [11]. In the second approach, prosthetic hardware is being enhanced with new sensors and actuators; state-of-the-art robotic limbs are now beginning to approach their biological counterparts in terms of their capacity for actuation [9], [10].

In this article, we focus on the third approach to making myoelectric prostheses more accessible: improving the control system. The control system is a natural area for improvement, as it links sensors and actuators for both motor function and feedback. Starting with classical work in the 1960s, both industry and academia have presented a wide range of increasingly viable myoelectric control approaches.

When compared with traditional body-powered hook-and-cable systems, myoelectric approaches represent a move toward more natural, physiologically intuitive operation of a prosthetic device. Within the space of myoelectric control strategies, conventional proportional control is still considered the mainstay for clinically deployed prostheses; in this approach, the amplitude of one or more EMG signals is proportionally mapped to the input of one or more powered actuators.
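As a rough illustration of proportional mapping, the sketch below converts the amplitude of a window of EMG samples into an actuator command, and combines an antagonistic pair of channels into a signed velocity. The amplitude estimate (mean absolute value), the `threshold`, `gain`, and `max_command` parameters, and all function names are assumptions made for this example; they are not taken from any specific clinical controller.

```python
def mean_absolute_value(window):
    """Amplitude estimate: mean of the rectified samples in one window."""
    return sum(abs(v) for v in window) / len(window)


def proportional_command(window, threshold=0.1, gain=1.0, max_command=1.0):
    """Map EMG amplitude to an actuator command in [0, max_command].

    Amplitudes at or below the (assumed) threshold produce no motion;
    above it, the command grows linearly until it saturates.
    """
    amplitude = mean_absolute_value(window)
    if amplitude <= threshold:
        return 0.0
    return min(gain * (amplitude - threshold), max_command)


def joint_velocity(flexor_window, extensor_window, **kwargs):
    """Signed velocity command from an antagonistic pair of EMG channels."""
    return (proportional_command(flexor_window, **kwargs)
            - proportional_command(extensor_window, **kwargs))
```

With these assumed defaults, a strong flexor contraction and a quiet extensor yield a positive velocity, and the sign reverses when the roles are swapped.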

Despite its widespread use, conventional myoelectric control has a number of known limitations [4]. One primary challenge for conventional control is the growing actuation capabilities of current devices. Conventional control is further constrained by a limited number of signal sources in a residual amputated limb. This problem becomes more pronounced with higher levels of amputation; patients who have lost more function will require more complex assistive devices, yet they have fewer discrete sources from which to acquire control information [2], [4]. Even with advanced function switching, conventional approaches are only able to make use of a fraction of the movements available to next-generation devices. Intuitive simultaneous actuation of multiple joints using myoelectric control remains a challenging unsolved problem [2].

Pattern Recognition

The dominant approach for improving myoelectric control has been the use of pattern-recognition techniques. As reviewed by Scheme and Englehart [4], state-of-the-art myoelectric pattern recognition relies on sampling a number of training examples in the form of recorded signals, identifying relevant features within these signals, and then classifying these features into a set of control commands. This approach has been largely implemented in an offline context, meaning that systems are developed and trained and then not changed during regular (noncalibration) use by an amputee. Demonstrated offline methods include support vector machines, linear discriminant analysis, artificial neural networks, and principal component analysis on time and frequency domain EMG information [3]–[5]. Offline pattern recognition approaches are easy to deploy and have allowed amputees to successfully control both conventional and state-of-the-art myoelectric prostheses in real time [8]; the training time of these methods is also realistic for use by amputees.

Figure 1. An example of a commercially available myoelectric

Though less common, myoelectric pattern recognition systems can also be trained online, meaning that they continue to be changed during normal use by the patient. Examples within the domain of prosthetic control include the use of artificial neural networks [12] and reinforcement learning (RL) [7]. In both cases, feedback from the user or the control environment is used to continually update and adapt the device’s control policy. Online adaptation is critical to robust long-term myoelectric prosthesis use by patients and is currently an area of great clinical interest [4], [5]. As discussed by Scheme and Englehart, and Sensinger et al., there have been a number of initial studies on adapting control to changes in the electrical and physical aspects of EMG electrodes (e.g., positional shifts and conductivity differences), electromagnetic interference, and signal variation due to muscle fatigue [4], [5]. This previous work has made it clear that a myoelectric controller must take into account real-time changes to the control environment as well as changes to the patient’s physiology and prosthetic hardware. To be effective in practice, adaptive methods need to be robust and easy to teach; they also should not be a time burden to the patient. However, a robust, unsupervised approach to online adaptation has yet to be demonstrated [4].

Prediction in Adaptive Control

A key insight underpinning prior work on adaptive or robust systems is that accurate and up-to-date predictive knowledge is a strong basis for modulating control. Prediction has proved to be a powerful tool in many classical control situations (e.g., model predictive control). Although classical predictive controllers provide a noticeable improvement over nonpredictive approaches, they often require extensive offline model design; as such, they have limited ability to adapt their predictions during online use.

Prediction is also at the heart of current offline machine learning and pattern recognition prosthesis control techniques. Given a context (e.g., moment-by-moment EMG signals), pattern recognition approaches use information extracted from their training process to identify (classify) the current situation as one example from a set of motor tasks. In other words, they perform a state-conditional prediction of the user’s motor intent, which can then be mapped to a set of actuator commands. As a recent example, Pulliam et al. [13] used a time-delayed artificial neural network, which was trained offline, to predict upper-arm joint trajectories from EMG data. The aim of their work was to demonstrate a set of predictions that could be used to facilitate coordinated multijoint prosthesis control.

In this article, we present a new approach for acquiring and maintaining predictive knowledge during the real-time operation of a myoelectric control system. A unique feature of our approach is that it uses ongoing experience and observations to continuously refine a set of control-related predictions. These predictions are learned and maintained in real time, independent of a specific myoelectric decoding scheme or control approach. As such, the described techniques are applicable to both conventional control and existing pattern recognition approaches. We demonstrate online prediction learning in two experimental settings: 1) able-bodied subject trials involving the online myoelectric control of a humanoid robot limb and 2) trials involving control interactions between an upper-limb amputee participant and a myoelectric training robot. Our online prediction learning approach contributes a novel gateway to unsupervised, user-specific adaptation. It also provides an important tool for developing intuitive new control systems that could lead to improved acceptance of myoelectric prostheses by upper-limb amputees.

An Approach to Online Prediction Learning for Myoelectric Devices

Systems that perform online prediction and anticipation are in essence addressing the very natural question “what will happen next?” To be beneficial to an adaptive control system, this question must be posed in computational terms, and its answer must be continually updated online from real-time sensorimotor experience.

There are a number of predictive questions that have a clear bearing on a myoelectric control system. Examples of useful questions include: “what will be the average value of a grip sensor over the next few seconds?,” “where will an actuator be in exactly 30 s?,” and “how much total myoelectric activity will be recorded by an EMG sensor in the next 250 ms?” These anticipatory questions have direct application to known problems for rehabilitation devices, including grip slippage detection, identifying user intent, safety, and natural multijoint movement [1], [4]. It is important to note that questions of this kind are temporally extended in nature; they address the expected value of signals and sensors over protracted periods of time, or at the moment of some specific event. They are also context dependent, in that they rely on the current state and activity of the system. For example, predictions about future joint motion may depend strongly on whether an amputee is playing tennis or driving a car.

amount of time to return an outcome. Anticipations of this kind represent a form of knowledge that is common but falls outside the learning capabilities of most standard pattern recognition approaches. To date, there are few approaches able to learn this form of anticipatory knowledge for real-valued signals, and fewer still can learn and continually update (adapt) this type of predictive representation during online, real-time operation.

RL is one form of machine learning that has demonstrated the ability to learn in an ongoing, incremental prediction and control setting [14]. An RL system uses interactions with its environment to build up expectations about future events. Specifically, it learns to estimate the value of a one-dimensional (1-D) feedback signal termed reward; these estimates are often represented using a value function (a mapping from observations of the environment to expectations about future reward).

RL is viewed as an approach to artificial intelligence, natural intelligence, optimal control, and operations research. Since their development in the 1980s, RL algorithms have been widely used in robotics and have found the best-known approximate solutions to many games; they have also become the standard model of reward processing in the brain [15].

Recent work has provided a straightforward way to use RL for acquiring expectations and value functions pertaining to nonreward signals and observations [16]. These general value functions (GVFs) are proposed as a way of asking and answering temporally extended questions about future sensorimotor experience. Predictive questions can be defined for different time scales and may take into account different methods for weighting the importance of future observations. The anticipations learned using GVFs can also depend on numerous strategies for choosing control actions (policies) and can be defined for events with no fixed length [16]. Expectations comprising a GVF are acquired using standard RL techniques; this means that learning can occur in an incremental, online fashion, with constant demands in terms of both memory and computation. The approach we develop in this article is to apply GVFs alongside myoelectric control.

Formalizing Predictions with GVFs

We use the standard RL framework of states ($s \in S$), actions ($a \in A$), time-steps $t \geq 0$, and rewards ($r \in \mathbb{R}$) [14]. In our context, a GVF represents a question $q$ about a scalar signal of interest, here denoted $r$ for consistency; this question depends on a given probability function for choosing actions $\pi: S \times A \to [0, 1]$ and a temporal continuation probability $\gamma: S \to [0, 1]$. A question $q$ may therefore be written as: “given state $s$, what is the expected value of the cumulative sum of a signal $r$ while following a policy $\pi$, and while continuing with a probability given by $\gamma$?” Formally, the value function $V_q(s)$ for our question is defined as follows, where actions are taken according to $\pi$ and there exist state-dependent continuation probabilities $\gamma(s)$.
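One way to write such a value function is sketched below. It assumes that $\pi$ denotes the policy and $\gamma$ the continuation probability, that continuation is applied at each successor state, and that time is indexed as in the exponentially discounted case discussed later in this section; treat it as an illustrative form rather than the exact typeset definition.

```latex
V_q(s) \;=\; \mathbb{E}\!\left[\, \sum_{k=0}^{\infty}
    \left( \prod_{j=1}^{k} \gamma(s_{t+j}) \right) r_{t+k+1}
    \;\middle|\; s_t = s,\ \text{actions chosen according to } \pi \right]
```

When $\gamma(s)$ takes the same value in every state, the product collapses to $\gamma^{k}$ and the expression reduces to an exponentially discounted sum of the signal $r$.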

This defines the exact answer to a question when states are fully observable. In practice, a state is rarely fully observable or needs to be approximated to represent an answer to a question. We instead assume that we observe a vector of features $x$ that depends on the current state $s$ according to some state approximation function $x \leftarrow \mathrm{approx}(s)$. We can then present the approximate answer $V_q(s_t)$ to a question $q$ as a prediction $P_q$ that is the linear combination of a (learned) weight vector $w$ and the feature vector $x$ at time $t$:

$$P_q = V_q(s_t) = w_q^\top x.$$

For our research, the approximation function approx(s) was implemented using tile coding, as per Sutton and Barto [14]. Tile coding is a linear mathematical function that maps a real-valued signal space into a linear (binary) vector form that can be used for efficient computation and learning [14].
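To make the idea of a binary feature vector concrete, the following is a minimal tile-coding sketch for signals already normalized to [0, 1]. The number of tilings, the tiles per dimension, and the uniform offset scheme are assumptions chosen for illustration; the configuration used in the experiments is not specified here.

```python
def tile_indices(signals, num_tilings=4, tiles_per_dim=5):
    """Return one active tile index per tiling for signals in [0, 1]."""
    dims = len(signals)
    # Each offset tiling needs one extra tile per dimension to cover the range.
    tiles_per_tiling = (tiles_per_dim + 1) ** dims
    active = []
    for tiling in range(num_tilings):
        # Shift each tiling by a fraction of one tile width (assumed scheme).
        offset = tiling / (num_tilings * tiles_per_dim)
        index = 0
        for value in signals:
            clipped = min(max(value, 0.0), 1.0)
            coord = int((clipped + offset) * tiles_per_dim)  # 0..tiles_per_dim
            index = index * (tiles_per_dim + 1) + coord
        active.append(tiling * tiles_per_tiling + index)
    return active


def feature_vector(signals, num_tilings=4, tiles_per_dim=5):
    """Binary feature vector x with exactly num_tilings active entries."""
    size = num_tilings * (tiles_per_dim + 1) ** len(signals)
    x = [0] * size
    for i in tile_indices(signals, num_tilings, tiles_per_dim):
        x[i] = 1
    return x
```

Nearby inputs share some active tiles, which gives generalization between similar situations, while distant inputs share none. Because only `num_tilings` entries are nonzero, a linear prediction can be computed by summing a handful of weights.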

To facilitate incremental computation, in what follows we consider the exponentially discounted case of GVFs, where $0 \leq \gamma \leq 1$ and $\gamma$ is the same for all states in the system. The value for state $s$ is therefore the expected sum of an exponentially discounted signal $r$ for each future time step:

$$V_q(s) = \mathbb{E}_\pi\!\left[\, \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1} \,\middle|\, s_t = s \right].$$

The cumulative value inside this expectation is termed the return. For the purposes of post hoc comparison, the true return $R_q$ on a time-step $t$ may be computed by recording

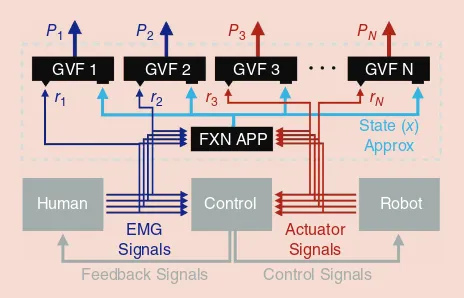

Figure 2. A schematic showing how GVFs predict the expected future

large enough that $\gamma^T$ approaches zero. The difference $|R_q - P_q|$ between the predicted and the computed return values on any given time-step $t$ is then a measure of the absolute return prediction error on that time step. In what follows, we discuss the time scale of a prediction, meaning the time constant of the return predictions defined by $1/(1-\gamma)$. Predictions and errors are also presented in temporally normalized form to allow for visual comparison on a similar scale. For normalized return predictions ($\bar{P}_q$) and normalized mean absolute return errors (NMARE), return values are scaled according to the time constant, e.g., using

$$\bar{P}_q = P_q \Big/ \frac{1}{1-\gamma}.$$
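The post hoc comparison can be sketched in a few lines. The code below computes the discounted return for every step of a recorded signal by sweeping backward through the recording, then scales values by the time constant $1/(1-\gamma)$. The function names, and the choice to compute NMARE as the mean absolute difference of the normalized values, are assumptions made for this illustration.

```python
def true_returns(signal, gamma):
    """Discounted return at each step, computed backward through a recording.

    signal[t] holds the signal value observed on the step after t, so that
    returns[t] = signal[t] + gamma * signal[t + 1] + gamma**2 * signal[t + 2] ...
    The recording is assumed long enough that the truncated tail is negligible.
    """
    returns = [0.0] * len(signal)
    running = 0.0
    for t in range(len(signal) - 1, -1, -1):
        running = signal[t] + gamma * running
        returns[t] = running
    return returns


def normalize(value, gamma):
    """Divide a return (or prediction) by the time constant 1 / (1 - gamma)."""
    return value * (1.0 - gamma)


def nmare(predictions, returns, gamma):
    """Mean absolute error between normalized predictions and returns."""
    errors = [abs(normalize(r, gamma) - normalize(p, gamma))
              for p, r in zip(predictions, returns)]
    return sum(errors) / len(errors)
```

For a constant signal of 1.0 and $\gamma = 0.97$, the return approaches 33.3 and its normalized value approaches 1.0, which places the normalized prediction back on the scale of the original signal.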

Learning Predictions with Temporal-Difference Methods

An application of GVFs to the myoelectric control setting is depicted in Figure 2. Here sensorimotor signals from a classical myoelectric control environment (light grey boxes) are used as input to a function approximation routine; the resulting feature vector and some subset of the input signals are used to update a set of GVFs. These GVFs can then be used to predict the anticipated future value(s) for signals of interest.

For this article, GVFs were implemented as described by Sutton et al. [16]. Anticipations were then learned in an incremental fashion during online operation. This was done using temporal-difference (TD) learning, a standard technique from RL; for additional details on TD learning and the TD($\lambda$) algorithm, please see Sutton and Barto [14] and Sutton [17]. A procedural description of this approach follows.

At the beginning of each experiment, the weight vectors $w_q$ were initialized to starting conditions. On every time step, the system received a new set of observations $s$. As learning progressed, each GVF was updated with the value of an instantaneous signal of interest, denoted $r_q$, using the TD($\lambda$) algorithm. Predictions $P_q$ for the signals of interest $r_q$, $q \in Q$, could then be sampled and recorded online using the linear combination $P_q \leftarrow w_q^\top x$. Learning proceeded as follows:

» initialize: w, e, s, x
» loop
»     observe s
»     x′ ← approx(s)
»     for all questions q ∈ Q do
»         δ ← r_q + γ_q w_qᵀx′ − w_qᵀx
»         e_q ← min(λe_q + x, 1)
»         w_q ← w_q + αδe_q
»     end for
»     x ← x′
» end loop

As shown in the procedure above, weight vectors $w_q$ for each GVF were updated using both the state approximation $x'$ and the signal of interest $r_q$. For each question $q$, a TD error signal (denoted $\delta$) was computed using the signal $r_q$ and the difference between the current and discounted future predictions for this signal. Next, an eligibility trace $e_q$ of the current feature vector was updated in a replacing fashion, where $e_q$ was a vector of the same size as $x$. The trace $e_q$ was used alongside the error signal $\delta$ to update the weight vector $w_q$ for each GVF, with $\alpha > 0$ as a scalar step-size parameter and $\lambda$ as the trace decay rate. Replacing traces were used as a technique to speed learning; for more detail, please see Sutton and Barto [14].
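The procedure described above can be sketched in Python as follows. This is an illustrative reading of the update rules, not the authors' implementation: the `GVF` class, the `learn_online` helper, and the dictionary-based bookkeeping are hypothetical names introduced here, and dense lists are used for clarity rather than speed.

```python
class GVF:
    """One question: predict the discounted sum of a scalar signal."""

    def __init__(self, num_features, gamma, alpha, lam):
        self.gamma, self.alpha, self.lam = gamma, alpha, lam
        self.w = [0.0] * num_features  # learned weight vector
        self.e = [0.0] * num_features  # eligibility trace

    def predict(self, x):
        """Linear prediction: inner product of weights and features."""
        return sum(w_i * x_i for w_i, x_i in zip(self.w, x))

    def update(self, x, r, x_next):
        """One TD(lambda) step with traces capped at 1 (replacing style)."""
        delta = r + self.gamma * self.predict(x_next) - self.predict(x)
        for i, x_i in enumerate(x):
            self.e[i] = min(self.lam * self.e[i] + x_i, 1.0)
            self.w[i] += self.alpha * delta * self.e[i]
        return delta


def learn_online(gvfs, observations):
    """observations yields (x_next, signals) pairs; signals[q] is r_q."""
    x = None
    for x_next, signals in observations:
        if x is not None:
            for q, gvf in gvfs.items():
                gvf.update(x, signals[q], x_next)
        x = x_next  # advance once per time step, after every question


# Usage: a single always-active feature and a constant signal of 1.0.
demo = {"elbow_angle": GVF(num_features=1, gamma=0.9, alpha=0.1, lam=0.9)}
learn_online(demo, [([1.0], {"elbow_angle": 1.0}) for _ in range(5001)])
```

In this toy setting the prediction settles near $1/(1-\gamma) = 10$, the discounted sum of a constant unit signal. Note that the feature vector is advanced only after all questions have been updated, so every GVF sees the same pair of consecutive feature vectors on a given time step.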

The per-time-step computational complexity of this procedure grows linearly with the size of the feature vector, making it suitable for real-time online learning. Linear computation and memory requirements are important for myoelectric control: when using the approach presented above, increasing the number of control sources or feedback signals leads to only a linear (and not exponential) increase in the computational demand of the learning system. Many GVFs can therefore be learned in parallel during online real-time operation [18].

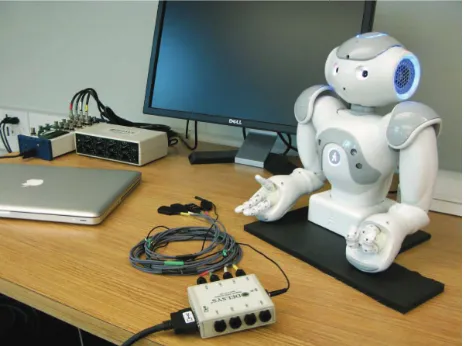

Case Study 1: Prediction During Online Control

As a first application example, we examined the ability of a GVF-based learning system to predict and anticipate human and robot signals during online interactions between able-bodied (nonamputee) subjects and a robotic device (Figure 3). Specifically, we examined the anticipation of two signal types: user EMG signals and the angular position of user-controlled elbow and hand joints of a robot limb. The robotic platform for these experiments was a Nao T14 robot torso (Aldebaran Robotics, France), shown alongside myoelectric recording equipment in Figure 3. The EMG signals used in device control and learning were obtained via a Bagnoli-8 (DS-B03) EMG System with DE-3.1 Double Differential Detection EMG sensors (Delsys, Inc., Boston, MA), and a NI USB-6216 BNC analog-to-digital converter (National Instruments Canada).

Testing was done with seven able-bodied subjects of varying age and gender. All were healthy individuals with no neurological or motor impairments. To generate a rich stream of sensorimotor data in an online, interactive setting, these participants worked with the robotic platform to complete a series of randomized actuation tasks. Participants actuated one of the robot’s arms using a conventional myoelectric controller with linear proportional mapping. The EMG signals were sampled and processed according to standard procedures from four input electrodes affixed to the biceps, deltoid, wrist flexors, and wrist extensors of a participant’s dominant arm. Pairs of processed signals were then mapped into velocity control commands for the robot’s elbow roll actuator and hand open/close actuator. In each task, one arm of the Nao robot was moved to display a new gesture consisting of a static angular position on both hand and elbow actuators. Subjects were asked to make a corresponding gesture with the robot arm under their control. Once a subject maintained the correct position for a period of more than 2 s, a new (random) target configuration was displayed. Visual feedback to participants consisted of a front-on view of the robot system. Subjects performed multiple sessions of the randomized actuation task, with each session lasting between 5 and 10 min. No subject-specific changes to the learning system were made; all subjects used exactly the same learning system with the same learning parameters, set in advance of the trials. To assess the real-time adaptation of learned predictions, additional testing was done via longer unstructured interaction sessions, some of which lasted over 1 h and included tasks that produced moderate muscle fatigue.

Figure 3. The experimental setup for able-bodied subject trials,

As depicted in Figure 2, the learning system observed the stream of data passing between the human, the myoelectric controller, and the robot arm. We created two GVFs for each of the different signals of interest $r_q$ in the robotic system: one to predict temporally extended signal outcomes at a short time scale (~0.8 s), and one to predict outcomes at a slightly longer time scale (~2.5 s). As done in previous work [7], the learning system was presented with a signal space consisting of robot joint angles and processed EMG signals; at every time step, the function approximation routine mapped these signals into the binary feature vector $x'$ used by the learning system. All signals were normalized between 0.0 and 1.0 according to their maximum possible ranges. Parameters used in the TD learning process were $\lambda = 0.999$, $\gamma = \{0.97, 0.99\}$, and $\alpha = 0.033$. Weight vectors $e$ and $w$ for each GVF were initialized to 0. Learning updates occurred at 40 Hz, and all processing related to learning, EMG acquisition, and robot control was done on a single MacBook Pro 2.53 GHz Intel Core 2 Duo laptop.
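The link between the discount values and the quoted time scales can be checked with a short calculation. The sketch below assumes that the time constant $1/(1-\gamma)$ is measured in learning updates and converted to seconds by dividing by the update rate; the function names are introduced here for illustration only.

```python
def time_scale_seconds(gamma, update_rate_hz):
    """Time constant 1 / (1 - gamma), in update steps, converted to seconds."""
    return 1.0 / (1.0 - gamma) / update_rate_hz


def gamma_for_time_scale(seconds, update_rate_hz):
    """Discount value whose time constant equals the requested duration."""
    steps = seconds * update_rate_hz
    return 1.0 - 1.0 / steps


short_scale = time_scale_seconds(0.97, 40)  # roughly 0.83 s
long_scale = time_scale_seconds(0.99, 40)   # roughly 2.5 s
```

Under this assumption, discount values of 0.97 and 0.99 at 40 Hz correspond to time constants of about 0.8 s and 2.5 s. At a faster update rate the same discount values correspond to proportionally shorter time scales.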

Results

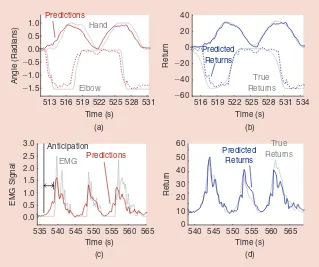

We found that predictions learned using our GVF approach successfully anticipated measured signals after only short periods of online learning. Figure 4(a) shows an example of joint angle prediction for the 0.8-s time scale with one subject after ~10 min of learning. Here, changes to the normalized return prediction signals $\bar{P}_q$ for both the hand (solid red trace) and elbow (dotted red trace) joints can be seen to occur in advance of changes to the measured actuator signals (grey lines). Predictions for both joints can be seen to precede actual joint activity by 0.5–2.0 s. The system was also able to accurately predict myoelectric signals [Figure 4(c)]. Normalized EMG predictions (red line) rise visibly in advance of the actual myoelectric events (grey line), and changes to the processed myoelectric signal were anticipated up to 1,500 ms before change actually occurred. The accuracy of predictions for both actuator and myoelectric signals can be seen in Figure 4(b) and (d). For both slow and fast changes in the signal of interest, the return prediction ($P_q$, blue line) largely matched the true return as computed post hoc ($R_q$, grey line), indicating similarity between learned predictions and computed returns.

Figure 4. Examples of (a) and (b) actuator and (c) and (d) myoelectric signal prediction during

As shown in Figure 5, accurate predictions could be formed in 5 min or less of real-time learning. These learning curves show the relationship between prediction error and training time, as averaged across multiple trial runs by one of the subjects. Learning progress for joint angle prediction and myoelectric signal prediction is shown in terms of the NMARE for the 0.8 s time scale, averaged into 20 bins; error bars indicate the standard deviation ($\sigma$) over eight independent trials. After 5 min of learning, the average NMARE for both actuators was less than 0.2 rad (11.5°) for the 0.8 s time scale and 0.3 rad (17.2°) for the 2.5 s time scale. For the prediction of myoelectric signals, the average NMARE was less than 0.15 V, or 3% of the maximum signal magnitude (5 V). Prediction performance continued to improve over the course of an extended learning episode. These results are representative of the learning curves plotted for the other participants.
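A learning curve of this kind can be assembled from per-step errors as sketched below. The code averages each trial's error sequence into a fixed number of bins, then reports the per-bin mean and standard deviation across trials. The function names are hypothetical, and the use of the population standard deviation is an assumption, since the exact estimator is not stated.

```python
from statistics import mean, pstdev


def bin_means(errors, num_bins=20):
    """Average a per-step error sequence into num_bins consecutive bins.

    Assumes len(errors) >= num_bins so that no bin is empty.
    """
    n = len(errors)
    edges = [round(i * n / num_bins) for i in range(num_bins + 1)]
    return [mean(errors[a:b]) for a, b in zip(edges, edges[1:])]


def learning_curve(trials, num_bins=20):
    """Per-bin mean and standard deviation across independent trials."""
    binned = [bin_means(trial, num_bins) for trial in trials]
    columns = list(zip(*binned))  # one tuple of trial values per bin
    return [mean(c) for c in columns], [pstdev(c) for c in columns]
```

Each trial should supply the normalized absolute return error for every time step; the two returned lists then give the curve and its error bars.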

We also observed the adaptation of learned predictions in response to real-time changes. Perturbations to the task environment led to an immediate decrease in prediction accuracy, followed by a gradual recovery period. After a short learning period with one able-bodied subject, we transferred the four EMG electrodes to comparable locations on a second able-bodied subject. Within a period of less than 5 min of use by the new subject, the system had adapted its predictions about joint motion to reflect the new individual. Similar results were found for tasks involving gradual or sudden muscle fatigue; prediction accuracy was found to remain stable during periods of extended use (>60 min of activity). Predictions were also found to recover their accuracy when a subject began holding a moderate weight with their controlling arm part of the way through a session. These observations reflect preliminary results, and further study is needed to verify the rate and amount of adaptation achievable in these situations.

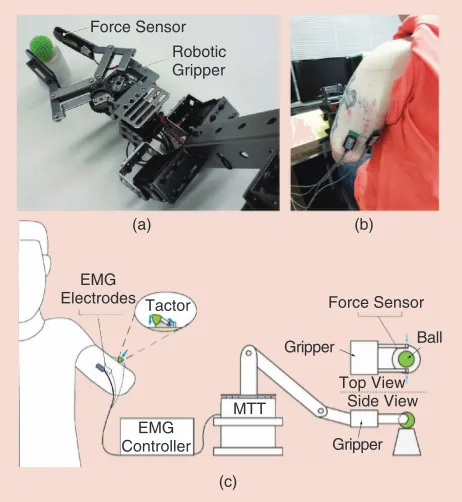

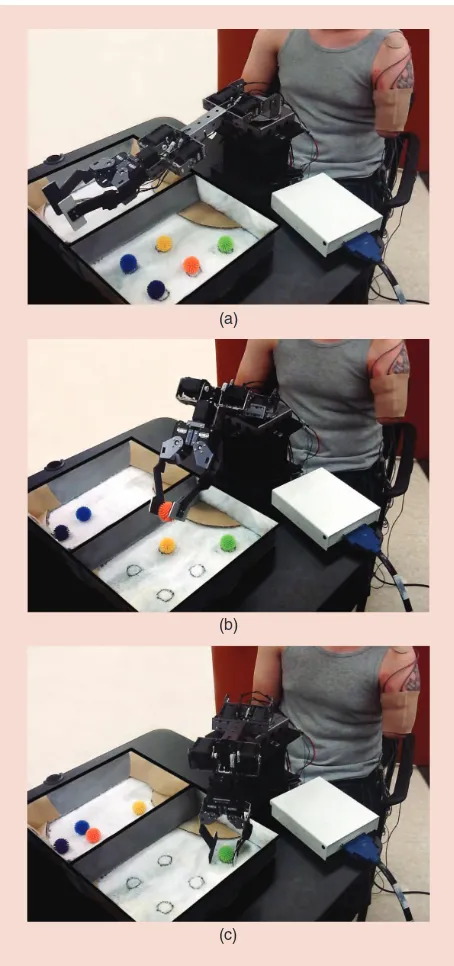

Case Study 2: Amputee Control of a Robot Arm

Having demonstrated the applicability of GVFs for predicting and anticipating sensorimotor signals during able-bodied subject trials, we next assessed the ability of this approach to predict robot grip force, actuator position, actuator velocity, and other sensorimotor signals related to an amputee’s interactions with a robotic training prosthesis (Figure 6).

Two trials were conducted with an amputee participant. The subject was a 20-year-old male with a left transhumeral amputation, injured in a work accident 16 months prior to the first trial. Six months prior to the first trial, the subject underwent surgical revision of his limb, involving targeted muscle reinnervation as described by Dumanian et al. [11], as well as targeted sensory reinnervation. The motor reinnervation procedure involved rerouting of the median nerve to innervate the medial biceps remnant muscle and the distal radial nerve to the lateral triceps muscle to provide additional myoelectric signal control sites for myoelectric prosthesis control. Sensory reinnervation involved identifying specific median nerve fascicles with high sensory content, and coapting these fascicles to the intercostal brachial cutaneous sensory nerve. In a similar fashion, ulnar sensory fascicles with high sensory content were coapted to the sensory branch of the axillary nerve, and the remainder of the ulnar nerve trunk was rerouted to the motor branch of the brachialis muscle. Reinnervation resulted in a widely distributed discrete representation of digital sensation on the upper arm. A second trial occurred nine months after the first, wherein we observed noticeable changes to the subject’s nerve reinnervation and improvements in his ability to operate a myoelectric device.

The robot platform used by this subject was the myoelectric training tool (MTT), a clinical system designed to help new amputees prepare for powered prosthesis use [6]. The MTT includes a five-degree-of-freedom robot arm that mimics the functionality of commercial myoelectric prostheses.

Figure 5. Learning performance during able-bodied subject trials. Prediction learning curves are shown for (a) elbow and hand actuator position and (b) the average of all four myoelectric signals. Accuracy is reported in terms of NMARE averaged over eight trials. Vertical axis: NMARE (radians).

Figure 6. The experimental setup for amputee trials: (a) a multijoint

Signals from the robotic arm included the load, position, and velocity of each servomotor. A sensory feedback system was also used alongside the MTT to convey the sense of touch to the subject; robot grip force was measured and communicated to the subject via experimental tactors (microservomotors) placed over the reinnervated skin of the subject’s upper arm. The subject controlled the robot arm via a conventional myoelectric controller with linear proportional mapping. Myoelectric signals from three of the subject’s reinnervated muscles and two of his native muscles were acquired, processed, and used to both control and switch between the various functions of the robot arm according to standard practice for the MTT [6].

During his two visits, the participant was asked to perform a variety of different actuation and sensation tasks with the MTT. Testing spanned multiple trials and multiple days, and included periods where the subject could control the MTT in an unstructured manner. Specific tasks included using both reinnervated motor control and sensory feedback to grip, manipulate, and discriminate between a series of small compressible objects in the absence of visual and auditory feedback. The subject also completed multiple trials of a modified clinical proficiency test known as box-and-blocks, a common procedure for assessing upper-limb function. This test involved the amputee participant sequentially controlling four actuators on the robot arm to transfer a series of small objects between the two compartments of a divided workspace (Figure 7). For this task, the EMG signals from a pair of native muscles were used to actuate the robot’s elbow, and signals from a reinnervated muscle pair were used to sequentially actuate the three remaining degrees of freedom on the robot arm (hand open/close, wrist flexion/extension, and shoulder rotation). Switching between joints was controlled using a voluntary EMG switch located on the subject’s third reinnervated muscle group. For the box-and-blocks task, the subject was given normal visual and auditory feedback about the system and could view displayed information about his EMG signals and currently selected robot actuators. More detail on the MTT box-and-blocks task can be found in Dawson et al. [6] and Pilarski et al. [19].

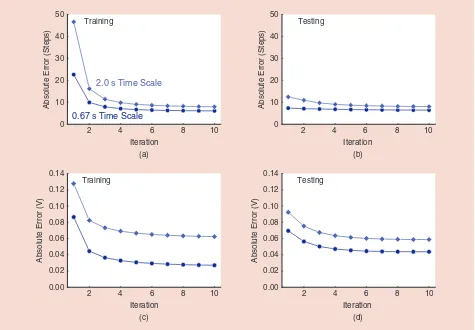

The data from these trials provided a rich sensorimotor space to evaluate our prediction learning methods. The data stream included EMG onsets (much less than 1 s in length), transient actuator motion and velocity readings (<1 s), and periods of sustained motion or gripping actions (1–10 s). We again created two GVFs for each signal of interest, one with a time scale of approximately 0.67 s and one with a time scale of approximately 2.0 s. Subsets of the available signals were given as input to the function approximation routine, as outlined above, and as described in related work [7], [19]. Learning updates occurred at 50 Hz. All other parameters of the learning system, function-approximation system, and computational hardware remained the same as in the previous case study. Using this configuration, the average computation time needed to update all GVFs and retrieve predictions at each time step was approximately 1 ms.
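To make this configuration concrete, the following is a minimal sketch of a single GVF learner, not the implementation used in the study. It assumes temporal-difference learning, TD(λ), with linear function approximation, and it assumes that a time scale of T seconds at a 50-Hz update rate corresponds to a discount of γ = 1 − 1/(50T). The class name `LinearGVF`, the step size `alpha`, and the trace decay `lam` are hypothetical choices made only for illustration.

```python
UPDATE_RATE_HZ = 50.0  # learning update rate stated in the text


def discount_for_time_scale(time_scale_s, rate_hz=UPDATE_RATE_HZ):
    """Assumed mapping: a horizon of 1 / (1 - gamma) steps equals the time scale."""
    steps = time_scale_s * rate_hz
    return 1.0 - 1.0 / steps


class LinearGVF:
    """Hypothetical TD(lambda) learner for one exponentially discounted prediction."""

    def __init__(self, n_features, gamma, alpha=0.1, lam=0.9):
        self.w = [0.0] * n_features  # learned weights
        self.e = [0.0] * n_features  # eligibility traces
        self.gamma = gamma
        self.alpha = alpha
        self.lam = lam

    def predict(self, x):
        # Linear prediction: inner product of weights and feature vector.
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x, signal_next, x_next):
        # TD error: observed signal plus discounted next prediction, minus current one.
        delta = signal_next + self.gamma * self.predict(x_next) - self.predict(x)
        # Decay the traces, then add the current features (accumulating traces).
        self.e = [self.gamma * self.lam * ei + xi for ei, xi in zip(self.e, x)]
        # Move each weight along its trace in proportion to the TD error.
        self.w = [wi + self.alpha * delta * ei for wi, ei in zip(self.w, self.e)]
        return delta


# One learner per time scale, as in the two-GVF setup described above.
gvf_short = LinearGVF(n_features=8, gamma=discount_for_time_scale(0.67))
gvf_long = LinearGVF(n_features=8, gamma=discount_for_time_scale(2.0))
```

Under the assumed mapping, the 2.0-s time scale gives γ = 0.99 and the 0.67-s time scale gives γ ≈ 0.97. Each call to `update` costs time linear in the number of features, which is in keeping with per-step updates at 50 Hz.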

Results

As shown in the results that follow, the system was able to successfully anticipate events initiated by the amputee subject, including joint angle changes, joint velocity changes, and grip force fluctuations. Accurate predictions were observed after 5–10 min of real-time sampling and learning. To specifically evaluate GVF performance with respect to iterative offline learning, data was also recorded and divided into independent training and testing sets. Iterative training was found to further improve the accuracy of the system’s predictions.

Figure 7. The modified box-and-blocks task, shown in panels (a)–(c).

Figure 8 shows the accuracy and rate of improvement during offline learning for both hand actuator and grip force predictions on data from an object manipulation task. This is shown for the online learning case (a single pass through 16 min of sensorimotor training data) and for 2–10 additional offline learning iterations. Accuracy was evaluated on both the training data and the previously unseen testing set. Each point in Figure 8 represents the average NMARE over the training or testing data; results are shown for the 0.67-s time scale (bottom trace) and the 2.0-s time scale (top trace). As presented in Figure 8(a) and (b), iterative training produced a steady downward trend in joint angle prediction error (NMARE, with the angular signal presented in terms of servo control steps). The typical observed range of this actuator was approximately 200 servo steps. Error in testing data after only one iteration (the online operation scenario) was found to be less than 7% of this total range [Figure 8(b), first data point]. The asymptotic error after multiple training passes was less than 4%. As shown in Figure 8(c) and (d), similar results were observed for force predictions. The typical range found for the grip-force sensor was 0–1.5 V; error on testing data after online training was less than 6% of this range [Figure 8(d), first data point], with an asymptotic error after multiple training iterations of less than 4.5%. Online and asymptotic error is expected to further decrease with additional data, up to the limits imposed by learning system generalization and the frequency of previously unseen events in the sensorimotor data.
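The percentages quoted above can be converted back into sensor units with simple arithmetic. The sketch below assumes that each percentage is the mean absolute error divided by the typical signal range, and that the actuator range is roughly 200 servo steps; the helper names are hypothetical, and the results are upper bounds implied by the text, not values read from Figure 8.

```python
def error_bound(percent_of_range, signal_range):
    """Absolute error corresponding to a given percentage of the signal range."""
    return percent_of_range / 100.0 * signal_range


def percent_of_range(abs_error, signal_range):
    """Inverse of error_bound: express an absolute error as a percentage of the range."""
    return 100.0 * abs_error / signal_range


ANGLE_RANGE_STEPS = 200.0  # assumed typical actuator range, in servo steps
FORCE_RANGE_V = 1.5        # grip-force sensor range of 0-1.5 V, from the text

# Bounds implied by the reported percentages (online case, then asymptotic case).
angle_bounds = (error_bound(7.0, ANGLE_RANGE_STEPS), error_bound(4.0, ANGLE_RANGE_STEPS))
force_bounds = (error_bound(6.0, FORCE_RANGE_V), error_bound(4.5, FORCE_RANGE_V))
print("joint angle error bounds (steps):", angle_bounds)
print("grip force error bounds (V):", force_bounds)
```

Under these assumptions, the online joint angle error stays below 14 servo steps and the online grip force error below 0.09 V.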

Figure 9 shows a representative example of grip force, elbow velocity, and elbow joint angle predictions after three offline learning passes through recorded training data obtained during object manipulation testing and the box-and-blocks task. Here the normalized predictions $\bar{P}_q$ at two time scales, 0.67 s (solid red lines) and 2.0 s (dotted red lines), are compared with the measured force, velocity, and positional sensor readings (grey lines); plotted data is binned into 25 time-step intervals. As was found in the able-bodied study, the predicted signals anticipate changes to the actual measured signals by approximately 1–3 s. Both rapid and slowly changing signals could be successfully predicted. The computational accuracy of these predictions was verified by comparing return predictions $P_q$ to the true computed returns $R_q$, as done in the previous case study. The results in Figure 9 are representative of our observations for the other signals and tasks in this domain.
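The comparison between predictions and true returns can be sketched as follows. This is an illustration under stated assumptions, not the evaluation code used in the study: it assumes the true return is the exponentially discounted sum of future signal values, that a normalized value is obtained by scaling with (1 − γ) so that it shares the units of the signal, and that plotted data are averaged over bins of 25 time steps. All function names are hypothetical.

```python
def discounted_returns(signal, gamma):
    """R_t = sum over k >= 0 of gamma**k * signal[t + k + 1], truncated at the end."""
    returns = [0.0] * len(signal)
    running = 0.0
    # Sweep backward so each return reuses the one computed for the next step.
    for t in range(len(signal) - 1, -1, -1):
        returns[t] = running
        running = signal[t] + gamma * running
    return returns


def normalized(values, gamma):
    """Scale returns or predictions by (1 - gamma) to match the signal's units."""
    return [(1.0 - gamma) * v for v in values]


def bin_means(values, width=25):
    """Average consecutive blocks of `width` time steps, as done for plotting."""
    bins = [values[i:i + width] for i in range(0, len(values), width)]
    return [sum(b) / len(b) for b in bins]


# Hypothetical step signal: zero for 100 steps (2 s at 50 Hz), then one.
signal = [0.0] * 100 + [1.0] * 100
anticipation = normalized(discounted_returns(signal, gamma=0.99), gamma=0.99)
# The normalized return is already above zero before the step occurs.
print(signal[50], round(anticipation[50], 3))
```

In this toy example the normalized return begins to rise well before the step in the signal, which is the kind of anticipation that the prediction traces in Figure 9 are meant to show.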

Figure 8. Learning performance on data from amputee–robot interaction, shown for (a) and (b) actuator joint angle prediction and (c) and (d) grip force prediction. Panels plot absolute error (servo steps or V) against learning iteration for the training and testing data at the 0.67-s and 2.0-s time scales.

As shown by the velocity prediction example in Figure 9, the extended nature of exponentially discounted GVF predictions was found to affect the learning of events with a short duration relative to a GVF’s time scale. At a prediction time scale of 0.67 s, the rise and fall of transient velocity events could be accurately anticipated by more than 1 s; however, normalized predictions were smoother than the measured signal, as they took into account velocity data before and after the depicted motion event. A time scale of 0.25 s or less was found to effectively capture short-duration contours in our velocity data.
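The smoothing effect follows from the exponential weighting itself. As a rough illustration, assume a unit-height velocity pulse lasting d time steps and a normalized return of the form (1 − γ) Σ γ^k s(t+k+1). The largest value that this return can reach is 1 − γ^d, which occurs one step before the pulse begins. The pulse length, the mapping from time scale to γ, and the helper names below are assumptions made for illustration only.

```python
def discount_for_time_scale(time_scale_s, rate_hz=50.0):
    """Assumed mapping: a horizon of 1 / (1 - gamma) steps equals the time scale."""
    return 1.0 - 1.0 / (time_scale_s * rate_hz)


def peak_normalized_return(pulse_steps, gamma):
    """Closed form of (1 - gamma) * sum(gamma**k for k in range(pulse_steps))."""
    return 1.0 - gamma ** pulse_steps


PULSE_STEPS = 10  # hypothetical 0.2-s velocity event at 50 Hz

for time_scale in (0.25, 0.67, 2.0):
    gamma = discount_for_time_scale(time_scale)
    peak = peak_normalized_return(PULSE_STEPS, gamma)
    print(f"time scale {time_scale} s: peak fraction of pulse height = {peak:.3f}")
```

The shorter the time scale relative to the event, the closer the peak comes to the full pulse height, which agrees with the observation that a time scale of 0.25 s or less captured short-duration contours.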

Discussion

Our case studies illustrate the potential of online prediction learning within the setting of myoelectric control. Using GVFs, it was possible to learn accurate temporally abstracted predictions about a human’s interactions with a robotic device. This approach was also well suited to practical real-time implementation; learning updates and predictions were made under real-time computation constraints, and accurate predictions could be achieved within 5–10 min of online learning. In addition, few application-specific changes and tuning operations were needed to shift between the different case studies and signal types presented in this article. Learning was performed in both an online setting (learning during ongoing experience) and an offline setting (learning from recorded data); offline and online predictions shared the same incremental learning framework. Together these results demonstrate the generality of the proposed approach; it would be straightforward to apply our methods to other assistive robotic domains.

The results in Figure 9 highlight the relationship between prediction time scales and the temporal characteristics of sensorimotor events. By choosing prediction time scales appropriate to our events and questions of interest, we found that the learning system could accurately anticipate both slow and rapid changes within the sensorimotor stream. The predictions in this article were formed using an exponentially discounted weighting of future signals (i.e., a constant $\gamma_q$). Though not explored here, more complex methods for temporal extension are possible within the GVF framework [18].

Results from both case studies demonstrate the ability of a GVF learning approach to maintain and improve the accuracy of predictions over the course of online learning. Each new observation seen by the system was used to immediately refine and update existing predictive knowledge about the robot and its domain. With a suitable choice of function approximation methods, weight values learned for one situation could contribute to learning about previously unseen situations with similar state characteristics. One preliminary example was our ability to transfer electrodes between two able-bodied subjects in the middle of a learning session. The capacity to actively maintain prediction accuracy suggests a method for adapting a device to ongoing changes that occur during the daily life of an amputee, such as sweat, fatigue, altered use patterns, or physical changes to the assistive device and the positions of its myoelectric sensors. In essence, our methods enable a form of continuing domain adaptation. Online prediction learning may therefore provide a basis for rapidly adapting and calibrating factory-made devices for use by specific individuals.

Figure 9. Prediction of (a) grip force (V), (b) actuator velocity (step rate), and (c) joint angle (steps) over time (s), showing observations, predictions, and anticipation.

Although our approach performed well during structured and unstructured laboratory testing, its performance during complex real-life activities still needs to be demonstrated. Subtle changes to user intent and motor context may be difficult to discriminate in some real-life activities. As such, online learning during daily life may require additional external input signals (e.g., from the environment, the human, or the robot limb) to fully capture the context in which human actions are occurring. Our prediction and anticipation methods are not tied to a specific control approach, decoding scheme, or surgical setting; as such, they are suitable for both reinnervation patients and amputees using conventional myoelectric control. However, there may prove to be fundamental differences in the sensorimotor signals recorded in these different domains. Such differences could impact function approximation choices and the learning speed of the prediction methods. Further amputee studies are necessary to investigate these differences.

Prediction and Anticipation in Practice

There are several areas where the online prediction approach presented in this article promises to significantly improve amputee experiences via myoelectric control. Enhanced proprioceptive feedback to patients is one area where temporally extended predictions hold potential benefit. Proprioception is an important goal as it allows an amputee to know where their prosthetic device is in space without visual confirmation; force and position feedback are cited as requisites for patient acceptance [1]. With this objective in mind, tactile and auditory user feedback systems may benefit from incorporating temporally extended predictions about the motor consequences of a user’s current commands. GVFs are able to provide context-dependent predictions of this type in a timely fashion.

A second area of impact for online prediction learning is enhanced failure forecasting. Grip slippage prevention is one representative example [1]. Advance knowledge of force, velocity, and position signals from a robotic prehensor could be used to estimate when a grasped object is about to unintentionally slip. The controller could then respond and prevent the failure by adjusting grip force and grip stiffness, or alert the user to the impending slip using tactile feedback. The use of foresight also allows a controller to avoid unintentional collisions between a prosthesis and its environment, and to anticipate mechanical, thermal, and electrical damage to the device. Sudden changes in prediction accuracy may also help to detect and identify alterations in a system, for example, transient changes to the EMG signals, shifts in electrode positioning, and other challenges to clinical robustness as identified by Scheme and Englehart [4].
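One hypothetical way to watch for sudden changes in prediction accuracy is to compare a fast-moving average of the absolute prediction error against a slow-moving baseline. The sketch below is an illustration only and was not part of the study; the class name, averaging rates, and threshold ratio are all assumptions that would need tuning on real data.

```python
class ErrorChangeDetector:
    """Flags a sudden rise in prediction error relative to a slow-moving baseline."""

    def __init__(self, fast_rate=0.1, slow_rate=0.001, ratio=3.0):
        self.fast_rate = fast_rate  # tracks recent error
        self.slow_rate = slow_rate  # tracks long-run error
        self.ratio = ratio          # hypothetical alarm threshold
        self.fast_avg = None
        self.slow_avg = None

    def step(self, abs_error):
        """Update both averages with one error sample; return True on a suspected change."""
        if self.fast_avg is None:
            # Initialize both averages from the first sample to avoid a start-up alarm.
            self.fast_avg = self.slow_avg = abs_error
            return False
        self.fast_avg += self.fast_rate * (abs_error - self.fast_avg)
        self.slow_avg += self.slow_rate * (abs_error - self.slow_avg)
        return self.fast_avg > self.ratio * self.slow_avg


# Hypothetical error stream: steady error, then a tenfold jump (e.g., an electrode shift).
detector = ErrorChangeDetector()
stream = [0.1] * 500 + [1.0] * 10
alarms = [t for t, err in enumerate(stream) if detector.step(err)]
print("first alarm at step:", alarms[0] if alarms else None)
```

Because both averages are updated incrementally, a check of this kind could run alongside the prediction updates at each time step.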

A final area of utility for the approach presented in this article is facilitating the intuitive control of multifunction myoelectric devices. As discussed above, one of the major barriers to intuitive myoelectric control is the disparity between the number of EMG recording sites on an amputee’s body and the control complexity of their artificial limb [2], [4]. This discrepancy between sensor and actuator spaces will only widen as limb technology becomes more advanced. GVFs provide a mechanism to improve multifunction control through anticipation of a user’s intent. Extended predictions about a user’s control behavior and their motor objectives can be used to prioritize control options for the user, modulate actuator stiffness and compliance, or directly coordinate natural timings for the simultaneous movement of multiple joints. The use of GVF predictions to streamline an amputee’s control interactions in this way is the subject of ongoing studies by our group, and our preliminary results indicate tangible benefits for switching-based prosthetic control [19].
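As a simple illustration of prioritizing control options, the sketch below orders the switchable joints by their predicted upcoming use, so that the joint most likely to be needed is offered first. This is a hypothetical example and not the method reported in [19]; the function name and the prediction values are placeholders.

```python
def prioritize_joints(predicted_activity):
    """Order joint names from most to least anticipated use."""
    return sorted(predicted_activity, key=predicted_activity.get, reverse=True)


# Placeholder normalized predictions of near-future activity for each switchable joint.
predictions = {"hand": 0.2, "wrist": 0.7, "shoulder": 0.1}
switch_order = prioritize_joints(predictions)
print(switch_order)
```

In a switching-based controller, an ordering of this kind could reduce the number of switch signals a user must produce before reaching the intended joint.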

Final Thoughts: A Basis in the Brain

The view that online, adaptive predictions are important to control has an additional basis in human biology and motor learning [20]. As described by Flanagan et al. and Wolpert et al., predictions are learned by human subjects before they gain control competency [20], [21]. There is a strong relationship between sensorimotor prediction and control in the human brain, with anticipated future consequences being viewed as a fundamental component for generating and improving control [21]. Online prediction error, as represented by the human dopamine system, is also believed to play a key role in adaptively regulating behavior [22], and has been successfully modeled by the same RL algorithms as we have used in this article [15]. Finally, it has been suggested that motor awareness and the feeling of “executing an action” stem from anticipatory predictions in the brain, as opposed to actual muscle movement [23]. These observations from human motor control further suggest that online prediction and anticipation could positively impact the functionality, intuitiveness, and feedback of multifunction myoelectric devices.

Conclusions

study to complex real-world activities, explore the use of prediction learning to supplement existing myoelectric control architectures, and comprehensively evaluate prediction-based control adaptation with a population of amputees.

Acknowledgments

The authors would like to thank the Alberta Innovates Centre for Machine Learning, the Natural Sciences and Engineering Research Council, Alberta Innovates Technology Futures, and the Glenrose Rehabilitation Hospital for their support. We also would like to thank Joseph Modayil and Adam White for a number of very useful discussions, and Michael Stobbe for his technical assistance and the photographs used in Figure 1. Informed subject consent was acquired in both case studies as per ethics approval by the University of Alberta Health Research Ethics Board.

References

[1] B. Peerdeman, D. Boere, H. Witteveen, R. H. Veld, H. Hermens, S. Stramigioli, H. Rietman, P. Veltink, and S. Misra, “Myoelectric forearm prostheses: State of the art from a user-centered perspective,” J. Rehab. Res. Develop., vol. 48, no. 6, pp. 719–738, 2011.

[2] T. W. Williams, “Guest editorial: Progress on stabilizing and controlling powered upper-limb prostheses,” J. Rehab. Res. Develop., vol. 48, no. 6, pp. 9–19, 2011.

[3] S. Micera, J. Carpaneto, and S. Raspopovic, “Control of hand prostheses using peripheral information,” IEEE Rev. Biomed. Eng., vol. 3, pp. 48–68, Oct. 2010.

[4] E. Scheme and K. B. Englehart, “Electromyogram pattern recognition for control of powered upper-limb prostheses: State of the art and challenges for clinical use,” J. Rehab. Res. Develop., vol. 48, no. 6, pp. 643–660, 2011.

[5] J. Sensinger, B. Lock, and T. Kuiken, “Adaptive pattern recognition of myoelectric signals: Exploration of conceptual framework and practical algorithms,” IEEE Trans. Neural Syst. Rehab. Eng., vol. 17, no. 3, pp. 270–278, 2009.

[6] M. R. Dawson, F. Fahimi, and J. P. Carey, “The development of a myoelectric training tool for above-elbow amputees,” Open Biomed. Eng. J., vol. 6, pp. 5–15, Feb. 2012.

[7] P. M. Pilarski, M. R. Dawson, T. Degris, F. Fahimi, J. P. Carey, and R. S. Sutton, “Online human training of a myoelectric prosthesis controller via actor-critic reinforcement learning,” in Proc. IEEE Int. Conf. Rehabilitation Robotics, 2011, pp. 134–140.

[8] T. A. Kuiken, G. Li, B. A. Lock, R. D. Lipschutz, L. A. Miller, K. A. Stubblefield, and K. B. Englehart, “Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms,” JAMA, vol. 301, no. 6, pp. 619–628, 2009.

[9] L. Resnik, M. R. Meucci, S. L-Klinger, C. Fantini, D. L. Kelty, R. Disla, and N. Sasson, “Advanced upper limb prosthetic devices: implications for upper limb prosthetic rehabilitation,” Arch. Phys. Med. Rehab., vol. 93, no. 4, pp. 710–717, 2012.

[10] M. S. Johannes, J. D. Bigelow, J. M. Burck, S. D. Harshbarger, M. V. Kozlowski, and T. V. Doren, “An overview of the developmental process for the modular prosthetic limb,” in Johns Hopkins APL Tech. Dig., 2011, vol. 30, no. 3, pp. 207–216.

[11] G. A. Dumanian, J. H. Ko, K. D. O’Shaughnessy, P. S. Kim, C. J. Wilson, and T. A. Kuiken, “Targeted reinnervation for transhumeral amputees: Current surgical technique and update on results,” Plastic Reconstruct. Surgery, vol. 124, no. 3, pp. 863–869, 2009.

[12] D. Nishikawa, W. Yu, H. Yokoi, and Y. Kakazu, “On-line learning method for EMG prosthetic hand control,” Electron. Commun. Japan. Part. III, vol. 84, no. 10, pp. 35–46, 2001.

[13] C. Pulliam, J. Lambrecht, and R. F. Kirsch, “Electromyogram-based neural network control of transhumeral prostheses,” J. Rehab. Res. Develop., vol. 48, no. 6, pp. 739–754, 2011.

[14] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. Cambridge, MA: MIT Press, 1998.

[15] B. Seymour, J. P. O’Doherty, P. Dayan, M. Koltzenburg, A. K. Jones, R. J. Dolan, K. J. Friston, and R. S. Frackowiak, “Temporal difference models describe higher-order learning in humans,” Nature, vol. 429, no. 6992, pp. 664–667, 2004.

[16] R. S. Sutton, J. Modayil, M. Delp, T. Degris, P. M. Pilarski, A. White, and D. Precup, “Horde: A scalable real-time architecture for learning knowledge from unsupervised sensorimotor interaction,” in Proc. 10th Int. Conf. Autonomous Agents Multiagent Systems, 2011, pp. 761–768.

[17] R. S. Sutton, “Learning to predict by the methods of temporal differences,” Mach. Learn., vol. 3, no. 1, pp. 9–44, 1988.

[18] J. Modayil, A. White, and R. S. Sutton, “Multi-timescale nexting in a reinforcement learning robot,” in Proc. Int. Conf. Simulation Adaptive Behaviour, Odense, 2012, pp. 299–309.

[19] P. M. Pilarski, M. R. Dawson, T. Degris, J. P. Carey, and R. S. Sutton, “Dynamic switching and real-time machine learning for improved human control of assistive biomedical robots,” in Proc. 4th IEEE RAS & EMBS Int. Conf. Biomedical Robotics Biomechatronics, 2012, pp. 296–302.

[20] D. M. Wolpert, Z. Ghahramani, and J. R. Flanagan, “Perspectives and problems in motor learning,” Trends Cogn. Sci., vol. 5, no. 11, pp. 487–494, 2001.

[21] J. R. Flanagan, P. Vetter, R. S. Johansson, and D. M. Wolpert, “Prediction precedes control in motor learning,” Current Biol., vol. 13, no. 2, pp. 146–150, 2003.

[22] J. Zacks, C. Kurby, M. Eisenberg, and N. Haroutunian, “Prediction error associated with the perceptual segmentation of naturalistic events,” J. Cogn. Neurosci., vol. 23, no. 12, pp. 4057–4066, 2011.

[23] M. Desmurget, K. Reilly, N. Richard, A. Szathmari, C. Mottolese, and A. Sirigu, “Movement intention after parietal cortex stimulation in humans,” Science, vol. 324, no. 5928, pp. 811–813, 2009.

Patrick M. Pilarski, University of Alberta, Edmonton, Canada.

Michael Rory Dawson, Glenrose Rehabilitation Hospital, Edmonton, Canada.

Thomas Degris, INRIA Bordeaux Sud-Ouest, Talence Cedex, France.

Jason P. Carey, University of Alberta, Edmonton, Canada.

K. Ming Chan, University of Alberta, Edmonton, Canada.

Jacqueline S. Hebert, University of Alberta, Edmonton, Canada.