
Journal of Education for Business

ISSN: 0883-2323 (Print) 1940-3356 (Online) Journal homepage: http://www.tandfonline.com/loi/vjeb20

Removing Size as a Determinant of Quality: A Per Capita Approach to Ranking Doctoral Programs in Finance

Roger McNeill White, John Bryan White & Michael M. Barth

To cite this article: Roger McNeill White, John Bryan White & Michael M. Barth (2011) Removing Size as a Determinant of Quality: A Per Capita Approach to Ranking Doctoral Programs in Finance, Journal of Education for Business, 86:3, 148-154, DOI: 10.1080/08832323.2010.492049

To link to this article: http://dx.doi.org/10.1080/08832323.2010.492049

Published online: 24 Feb 2011.


Roger McNeill White

University of Pittsburgh, Pittsburgh, Pennsylvania, USA

John Bryan White

U.S. Coast Guard Academy, New London, Connecticut, USA

Michael M. Barth

The Citadel, Charleston, South Carolina, USA

Rankings of finance doctoral programs generally fall into two categories: a qualitative opinion survey or a quantitative analysis of research productivity. The consistency of these rankings suggests either that the best programs have the most productive faculty or that the university affiliations most often seen in publications are correlated with institutional quality, which biases the rankings toward larger programs. The authors introduce a per capita measure of research output to evaluate finance programs in a context that removes absolute size as a variable. The results indicate that smaller programs in the field are frequently overlooked in traditional rankings.

Keywords: ranking doctoral programs, ranking finance programs

Rankings of programs and institutions of higher education abound in both academic reading and the popular press. The U.S. News and World Report and The Princeton Review annually publish thorough rankings of dozens of both undergraduate and graduate programs, and multiple specialized rankings can be found for nearly any field of interest. Program directors are interested in a high ranking because it attracts stronger applicants to their programs. University administrators analyze rankings closely because the prestige of one program's high ranking may spill over to the institution as a whole. Potential graduate students are keenly aware of the rankings of departments in their field, because a degree from a more prestigious institution usually translates into a more successful job search. Search committees also consider where a candidate earned his or her degree when deciding whom to interview. Even those without a professional interest will scan the rankings to see if they have bragging rights with respect to a neighbor or coworker.

Correspondence should be addressed to John Bryan White, U.S. Coast Guard Academy, Management Department, 27 Mohegan Avenue, New London, CT 06320, USA. E-mail: [email protected]

Due to the variety of university programs in the United States, numerous ranking criteria and formulae exist and are used by various outlets. For instance, the U.S. News and World Report collegiate rankings look at quantitative factors, such as retention and graduation rates, faculty resources, student selectivity, and alumni giving. However, 25% of the ranking is based on a peer assessment, a highly qualitative measure (Morse & Flanigan, 2009).

For business schools, a critical measure of program quality is based on peer-reviewed journal publications. Numerous studies rank business programs by the research output of their faculty. Until recently, however, curiously little academic attention has been paid to ranking doctoral programs by the research productivity of their graduates. Most industries are evaluated by the quality of their product, not of the producer. Indeed, hiring committees are most interested in a graduate's research productivity, because they want to know what to expect from a potential new hire. We seek to provide a more detailed evaluation of the per capita output of faculty and graduates, as opposed to their unadjusted contributions en masse. We also examine the correlation between the level of research activity by the faculty and the research activity of their graduates.

LITERATURE REVIEW

As previously stated, there are numerous rankings of business programs in general. The “Best Colleges and Universities” report published annually by U.S. News and World Report is perhaps the best known ranking of business programs. U.S. News and World Report has reported these rankings since 1990. However, their ranking is essentially a ranking of MBA programs and does not reflect the quality of the doctoral program. Roose and Andersen (1970) were among the early users of subjective surveys to evaluate doctoral programs. They surveyed economists from 130 departments, asking the respondents to classify departments as distinguished, good, or adequate. Siegfried's (1972) ranking of economics departments by pages published in major economics journals was highly correlated with the earlier peer rankings. Brooker and Shinoda (1976) applied the peer survey approach to the functional areas of business, including finance. Two studies by Klemkosky and Tuttle (1977a, 1977b) found that rankings of departments by faculty publications (1977a) and by publications of finance doctoral graduates (1977b) mirrored the results of the peer survey.

There have been several recent rankings of the research productivity of finance programs. Heck and Cooley (2008) reviewed the university affiliations (place of employment and source of degree) of the authors in the Journal of Finance (JF) over the last 60 years. Chan, Chen, and Lung (2007) used an expanded number of journal outlets (the top 15 finance journals) but limited the time period studied to 1990–2004. Another study by Heck (2007) used four publication outlets from 1991 to 2005: JF, the Journal of Financial and Quantitative Analysis (JFQA), the Journal of Financial Economics (JFE), and the Review of Financial Studies (RFS). This study also included a survey of finance department chairs or finance doctoral program directors on their opinions of the best programs. There is remarkable consistency among the departmental rankings from these studies. Each study ranked NYU at the top in research output, and the next four (listed alphabetically, because the order is not consistent) are Chicago, Harvard, Pennsylvania, and UCLA. Thus, the same schools lead in research output, a generally accepted metric of graduate business faculty quality, regardless of which journals are included or which time period is used. The collective opinions of department chairs and program directors echo the research productivity results. Beyond the top five, the rankings are only slightly less consistent. For instance, 17 of the top 20 research-producing faculties are in the chairs' and directors' top 20. When publications are grouped by degree-granting institution, 18 of the top 20 institutions by graduate productivity are in the top 20 in the opinion of the department chairs and doctoral program directors.

Although the schools at the top of the list clearly have excellent faculty who are prodigious researchers, they also tend to have large finance faculties. Three of the top five research producers, NYU, Pennsylvania, and Harvard, have the three largest finance faculties, with 36, 33, and 30 members, respectively. Chicago is close behind, at number seven, with 22 faculty members. Thus, part of their research success is the result of the number of researchers at a single institution. A larger faculty with widely diverse interests may appeal to a prospective graduate student who is uncertain of his or her own interests, because it increases the likelihood that someone has expertise in the field he or she ultimately selects. But the question remains whether the faculty members who share that interest are productive researchers. Using absolute research output as an indication of program quality is akin to claiming that one firm outperforms another by comparing total sales or profits. When comparing firms of unequal size, finance professionals use financial ratios or common-size financial statements to remove the influence of firm size from performance measures. Rankings by absolute research output are therefore, in part, a reflection of exposure.

In this study we evaluated a similar performance measure of doctoral programs in finance that is independent of program size. A doctoral student learns the most from the faculty members with whom he or she is most involved: those who read students' assignments, compose and grade exams, lecture, and mentor graduate students on a daily basis, pushing the boundaries of finance and training the next generation. A graduate student can, at any point in time, be engaged with only a limited number of professors. Professors, likewise, are limited in the number of students they can teach and whose dissertations they can advise. Although a graduate student certainly has mind-broadening informal interactions with many members of the faculty, through scheduled paper presentations or random conversations in a social setting, the effects of these interactions are less significant than the structured contact of classes and supervised research. The professors a student does not engage with during graduate school are much less relevant to the quality of training received. Once the faculty exceeds some critical mass large enough to staff a variety of fields within the discipline, the size of the faculty plays very little role in the training of doctoral candidates. Rather, the quality of the individual faculty members with whom the graduate student studies is the critical factor in determining the educational quality of the program.

What is needed, therefore, is a better measure of the quality of individual faculty. The average research output per faculty member, or per capita output, would be a better measure of individual faculty quality than the research output of the entire department. A per capita output measure removes absolute faculty size as a factor in the rankings.

Likewise, a program's effectiveness in producing productive researchers is not gauged by the absolute number of publications cataloged by the authors' doctoral institution. A program with many graduates should be expected to have more published articles from those graduates than a program with significantly fewer graduates. Publications per graduate from a particular institution is a much more instructive measure of the quality of a program's graduates.
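Written as formulas, the two size-adjusted measures are simple ratios. The notation below is introduced here only for illustration; the study states the measures in words. For program $i$:

$$
\text{Faculty output}_i = \frac{A^{\mathrm{fac}}_i}{F_i},
\qquad
\text{Graduate output}_i = \frac{A^{\mathrm{grad}}_i}{G_i}
$$

where $A^{\mathrm{fac}}_i$ counts articles by program $i$'s current finance faculty, $F_i$ is its faculty size, $A^{\mathrm{grad}}_i$ counts articles by its doctoral graduates, and $G_i$ is its number of graduates. Using the Table 1 figures for Cornell, for example, $116 / 12 \approx 9.67$ articles per faculty member.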

This per capita approach would be of particular interest to two groups: hiring committees and prospective graduate students. Hiring committees are much like major league scouts, combing applicants' curricula vitae for indications of skills and training that will develop into big-league talent. Athletic scouts have a great deal of data on which to base their recommendations. Baseball has runs batted in and batting average, basketball uses scoring average and shooting percentage, and football running backs are evaluated on total yardage and yards per carry. Note that each of these sports has an absolute measure as well as an adjusted statistic. A basketball player with a 35-point scoring average and a 30% shooting percentage is much less attractive than one with a 30-point average who shoots 60%.

Unfortunately, hiring committees lack similar historical indicators when evaluating new PhDs in their search for individuals who will be good teachers and productive researchers. A new doctorate has limited teaching experience and often no manuscripts accepted for publication. The new doctorate's degree-granting institution is often the single most important source of expectations about the individual. The generally accepted thinking is that higher ranked programs accept more highly qualified applicants and graduate members of the profession who publish at a higher rate. Thus, a program's rank or perceived quality (e.g., being among the top programs or a middle-tier program) is a critical input in the hiring decision.

However, hiring committees do not hire a program; they hire an individual. Therefore, rankings that are influenced by program size do not provide accurate information. A faculty may have produced 30 articles in the top four finance journals over the last 5 years, but if there are 30 members in the department, their research output averages only one article per person over the 5-year period. The department as a whole is certainly well known because of its total output, but individual output is only one top article over the 5-year period. A department of 10 that publishes 20 articles over the same period has an output of two articles per person. This department may be less well known because its name appears on fewer articles. However, if research is an indicator of faculty quality, then this per capita approach is a better indicator than total output. A prospective graduate student looking for a research-active faculty would be better served by the smaller program in this example because of its higher per capita research output.
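The arithmetic in this example is simple, but writing it out makes the contrast explicit. A minimal Python sketch; `per_capita` is a hypothetical helper introduced for illustration, not something defined in the study:

```python
def per_capita(articles: int, members: int) -> float:
    """Return articles per department member over the review period."""
    if members <= 0:
        raise ValueError("members must be a positive count")
    return articles / members


# The two hypothetical departments from the text, both over the same 5-year window.
large_dept = per_capita(articles=30, members=30)  # more articles in total, better known
small_dept = per_capita(articles=20, members=10)  # fewer articles in total

print(f"30-member department: {large_dept:.1f} articles per person")
print(f"10-member department: {small_dept:.1f} articles per person")
```

The larger department wins on total output (30 versus 20 articles) but loses on per capita output (1.0 versus 2.0 articles per person).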

Prospective graduate students know that successful research is the key to a successful academic career, so they are also keenly interested in the research productivity of a program's graduates. Graduates of large programs account for a great many publications in the top four finance journals, but large programs also produce a large number of graduates. The prospective graduate student should be more interested in how individuals from a program perform than in the performance of a program's graduates en masse. Thus, a per capita measure of the publications of a program's graduates is much more indicative of future success than the total research output of a program's graduates.

METHOD

In the present study we extended Heck's (2007) analysis, examining authorships in the same four finance journals (JF, JFQA, JFE, and RFS) over the same 15-year period, 1991–2005. This research output is ranked both by the authors' university affiliation at the time of publication and by the university that awarded their doctoral degree. The absolute number of articles is converted to a per capita output based on faculty size as of 2004, using the 2004–2005 Prentice Hall Guide to Finance Faculty, compiled by James R. Hasselback. Only tenured and tenure-track faculty were included in the study. Per capita output was then ranked both by the authors' professional affiliation and by their degree-granting institution. The per capita rankings were then compared with the absolute rankings, as well as with a qualitative ranking of programs from the survey of finance department chairs or finance doctoral program directors.
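The conversion and re-ranking step can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' procedure: the `Program` class is hypothetical, and only five rows of Table 1 are used so that the reordering is easy to see.

```python
from dataclasses import dataclass


@dataclass
class Program:
    name: str
    faculty_pubs: int  # faculty articles in JF, JFQA, JFE, and RFS, 1991-2005
    faculty_size: int  # tenured and tenure-track finance faculty, 2004

    @property
    def pubs_per_faculty(self) -> float:
        return self.faculty_pubs / self.faculty_size


# Five rows taken from Table 1.
programs = [
    Program("Chicago", 141, 22),
    Program("NYU", 226, 36),
    Program("Cornell", 116, 12),
    Program("Purdue", 57, 9),
    Program("Yale", 57, 8),
]

# Absolute ranking: total faculty articles, largest first.
by_total = sorted(programs, key=lambda p: p.faculty_pubs, reverse=True)
# Per capita ranking: articles per faculty member, largest first.
by_per_capita = sorted(programs, key=lambda p: p.pubs_per_faculty, reverse=True)

for rank, p in enumerate(by_per_capita, start=1):
    print(f"{rank}. {p.name}: {p.pubs_per_faculty:.3f} per faculty member "
          f"({p.faculty_pubs} articles, {p.faculty_size} faculty)")
```

Among these five rows, NYU leads on total output (226 articles) but falls to last on a per capita basis, while Cornell moves from third to first.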

It should also be noted that, in accordance with Heck’s (2007) publication, only programs considered to be in the top 50 for each of his criteria (unadjusted publications by faculty, unadjusted publications by graduates, and rating of a program by other program directors) were evaluated. Thirty-nine schools make this list, and as with any ranking, some schools are at the bottom of this list. However, given the stringent requirements to even be included in this list, all of the programs should be considered outstanding (Heck).

In evaluating the data in accordance with the aforementioned criteria, the results should be interpreted with several assumptions in mind. The first assumption is that each academic listed in Hasselback's directory earned a PhD in finance. Although faculty members holding an MBA or JD were easily eliminated from consideration, many faculty members were no doubt wrongly included in this analysis. Economics PhDs often teach finance, and their inclusion certainly skewed the results in some manner. Also, many faculty members who would consider themselves professors of risk management or real estate were wrongly included in this study. A number of institutions report faculty in such disciplines as professors of finance, and although their terminal degree may be in their respective field, institutional reporting practices make classifying such academics very difficult.

Faculty per capita output was determined using the number of faculty members at the end of the 15-year study period. The implicit assumption was that faculty size does not change dramatically over time. To the extent that a finance faculty added several new PhDs near the end of the period under review, its per capita output would probably be lower because of the increased denominator and the few (if any) publications from the new hires. Likewise, one extraordinarily productive researcher on a small faculty will skew the average upward, even though the median output may be mediocre. Rankings based on total output are similarly affected by a single, highly productive researcher. The implicit assumption is that neither of these situations is common in the field. Faculty size ranged from 8 to 36, with a median finance faculty of 17. No attempt was made to adjust for where a faculty member was at the time an article was published. It is assumed that an article's prestige follows the author and does not reside with the author's institution at the time of publication. Custom seems to support this assumption, as articles are often referred to by the authors' names only, such as Fama, Fisher, Jensen, and Roll (1969), Black and Scholes (1973), or Modigliani and Miller (1958), with no mention of institutional affiliation.
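The effect of a single prolific researcher on the per capita figure can be seen with a small hypothetical faculty. The article counts below are invented for illustration and do not describe any program in Table 1:

```python
from statistics import mean, median

# Hypothetical article counts for a nine-member faculty over the review period:
# one extraordinarily productive researcher and eight modest publishers.
article_counts = [20, 1, 1, 1, 0, 0, 0, 0, 0]

faculty_mean = mean(article_counts)      # the per capita figure used for ranking
faculty_median = median(article_counts)  # output of the "typical" member

print(f"per capita (mean): {faculty_mean:.2f}")
print(f"median:            {faculty_median}")
```

The faculty reports a respectable-looking 2.56 articles per person even though the median member published nothing, which is the distortion the assumption above rules out as uncommon.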

Publications per graduate also rest on implied assumptions. The principal assumption is that graduates of the top programs enter academia at roughly the same rate. If an institution places its graduates exclusively in industry, where publications in academic journals may not be as highly valued or encouraged, then that institution would rank poorly on the research activity of its graduates. Likewise, a preponderance of foreign students who return to their home country after graduation and do not publish in English (the language of the four journals included in Heck's [2007] evaluation) would also impair a program's performance based on the per capita output of its graduates. While these shortcomings are acknowledged, they skew Heck's study in a similar fashion. Finally, we acknowledge that the selection of four journals in finance and an analysis period of 1991–2005 are arbitrary and artificial constraints. University finance faculties most certainly changed during that period. However, these constraints were maintained in this study so that any results that differed from Heck's (2007) rankings could not be attributed to other factors. For the sake of continuity with Heck's study, we also did not distinguish between publications with multiple authors and those with a single author. If a paper had multiple authors, each received equal and full credit for the publication.
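The full-credit convention can be contrasted with fractional (1/n) credit, a common alternative that this study did not use. A minimal sketch with hypothetical papers, each given as the list of its authors' institutions (one author per institution, for simplicity); the function names are illustrative only:

```python
from collections import Counter

# Hypothetical papers; each entry lists one institution per author.
papers = [
    ["Rochester"],
    ["Rochester", "Chicago"],
    ["Chicago", "MIT", "Yale"],
]


def full_credit(papers):
    """Every author earns one full credit per paper (the convention followed here)."""
    credits = Counter()
    for authors in papers:
        for school in authors:
            credits[school] += 1
    return credits


def fractional_credit(papers):
    """Each paper's single credit is split equally among its authors (not used in the study)."""
    credits = Counter()
    for authors in papers:
        share = 1 / len(authors)
        for school in authors:
            credits[school] += share
    return credits


full = full_credit(papers)
frac = fractional_credit(papers)
for school in sorted(full):
    print(f"{school:10s} full={full[school]}  fractional={frac[school]:.2f}")
```

Under full credit the three papers generate six credits; under fractional credit they generate exactly three, so multi-author papers weigh more heavily under the study's convention.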

RESULTS

Recall that the ranking of finance programs, either by fac-ulty research output, research output by the author’s doctoral

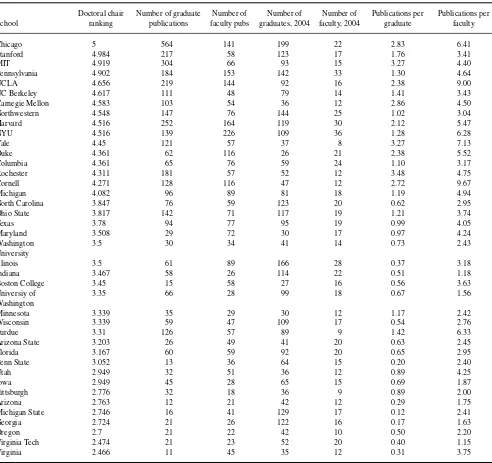

institution, or the qualitative ranking by finance department chairs or finance doctoral program directors were remarkably consistent at the top. The top five (NYU, Chicago, Penn, Har-vard, and UCLA) were the same, with only minor differences in order, in Chan et al.’s (2007) 15-year study using 21 jour-nals, Heck and Cooley’s (2008) study over the 1976–2005 period only using articles in the JF, and Heck’s (2007) study using four journals. Heck’s survey of chairs included Stan-ford and MIT in the top five, but excluded Harvard and NYU from that group. In total graduate publications, Chicago, Har-vard, and UCLA maintain a spot in the top five, and they were joined by MIT and Stanford. Heck’s results are reproduced in the first three columns of Table 1.

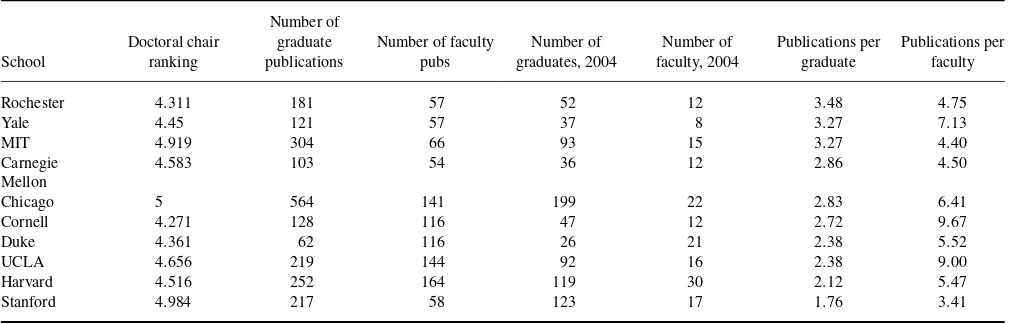

When faculty research output is evaluated on a per capita basis, the rankings change dramatically. Cornell, which is 15th in the chairs' ranking, 11th in publications by graduates, and 6th in faculty publications, vaults to number one in per capita faculty output. UCLA and Chicago maintained positions in the top five on a per capita basis and were joined by Yale and Purdue. Table 2 reranks the programs from Table 1 and displays the top 10 programs based on per capita faculty output.

Purdue, ranked 28th in the chairs' survey, was fifth in per capita faculty publications. A quick glance at the table reveals that it did so with a faculty of only nine. With such a small faculty, it is easy to see how its research productivity could be overlooked when publications are evaluated en masse.

In evaluating finance doctoral programs on the basis of per capita graduate research, several other surprises find their way into the top 10. Chicago is the only program in the chairs' top five that is also in the top five by research output per graduate. Rochester, which comes in at 14th in the chairs' ranking and 11th in total faculty publications, tops the ranking by output per graduate. Table 3 reranks the programs from Table 1 and displays the top 10 programs based on per capita doctoral graduate output. For both hiring committees and prospective graduate students, this ranking should be of particular interest.

There appears to be a substantial correlation between the research productivity of faculty and that of their graduates. The correlation coefficient between the number of faculty publications and the number of publications by their graduates is 0.57. When this relationship is examined on a per capita basis, the correlation is even higher: the correlation coefficient between per capita faculty publications and the per capita publications of their graduates is 0.723. This suggests that individual faculty research productivity is more important than total faculty productivity in predicting the potential research output of a new PhD in finance (see Table 3).
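Both comparisons can be computed directly from the columns of Table 1. The text does not name the statistic; treating it as a Pearson correlation is an assumption here. The sketch below uses only the first ten rows of Table 1, so its output is illustrative and is not expected to reproduce the coefficients reported for the full sample.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# (faculty pubs, faculty size, graduate pubs, graduates) for the first ten rows of Table 1.
rows = {
    "Chicago": (141, 22, 564, 199),
    "Stanford": (58, 17, 217, 123),
    "MIT": (66, 15, 304, 93),
    "Pennsylvania": (153, 33, 184, 142),
    "UCLA": (144, 16, 219, 92),
    "UC Berkeley": (48, 14, 111, 79),
    "Carnegie Mellon": (54, 12, 103, 36),
    "Northwestern": (76, 25, 147, 144),
    "Harvard": (164, 30, 252, 119),
    "NYU": (226, 36, 139, 109),
}

fac_total = [f for f, _, _, _ in rows.values()]
grad_total = [g for _, _, g, _ in rows.values()]
fac_pc = [f / size for f, size, _, _ in rows.values()]
grad_pc = [g / n for _, _, g, n in rows.values()]

r_total = pearson(fac_total, grad_total)
r_per_capita = pearson(fac_pc, grad_pc)
print(f"total output:      r = {r_total:.3f}")
print(f"per capita output: r = {r_per_capita:.3f}")
```

Extending `rows` to all programs in Table 1 (with or without Pittsburgh, which is a further assumption about the sample) would give the full-sample comparison.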

CONCLUSIONS AND SUGGESTIONS FOR FURTHER RESEARCH

We have gone to great lengths to describe why many smaller, quality doctoral programs in finance are often overlooked by traditional ranking schemes. Although academic excellence is not defined solely by the quantity of research, research output is certainly a universally recognized and quantifiable measure of success. No critic would deny that the four journals used in this analysis are preeminent in the field of finance. The per capita approach used in this paper should be of great interest to prospective PhD candidates in finance and to hiring committees, if not to the entire discipline.

TABLE 1

Finance Program Ranking (Reported in Heck, 2007)

| School | Doctoral chair ranking (score) | Number of graduate publications | Number of faculty publications | Number of graduates, 2004 | Number of faculty, 2004 | Publications per graduate | Publications per faculty |
|---|---|---|---|---|---|---|---|
| Chicago | 5 | 564 | 141 | 199 | 22 | 2.83 | 6.41 |
| Stanford | 4.984 | 217 | 58 | 123 | 17 | 1.76 | 3.41 |
| MIT | 4.919 | 304 | 66 | 93 | 15 | 3.27 | 4.40 |
| Pennsylvania | 4.902 | 184 | 153 | 142 | 33 | 1.30 | 4.64 |
| UCLA | 4.656 | 219 | 144 | 92 | 16 | 2.38 | 9.00 |
| UC Berkeley | 4.617 | 111 | 48 | 79 | 14 | 1.41 | 3.43 |
| Carnegie Mellon | 4.583 | 103 | 54 | 36 | 12 | 2.86 | 4.50 |
| Northwestern | 4.548 | 147 | 76 | 144 | 25 | 1.02 | 3.04 |
| Harvard | 4.516 | 252 | 164 | 119 | 30 | 2.12 | 5.47 |
| NYU | 4.516 | 139 | 226 | 109 | 36 | 1.28 | 6.28 |
| Yale | 4.45 | 121 | 57 | 37 | 8 | 3.27 | 7.13 |
| Duke | 4.361 | 62 | 116 | 26 | 21 | 2.38 | 5.52 |
| Columbia | 4.361 | 65 | 76 | 59 | 24 | 1.10 | 3.17 |
| Rochester | 4.311 | 181 | 57 | 52 | 12 | 3.48 | 4.75 |
| Cornell | 4.271 | 128 | 116 | 47 | 12 | 2.72 | 9.67 |
| Michigan | 4.082 | 96 | 89 | 81 | 18 | 1.19 | 4.94 |
| North Carolina | 3.847 | 76 | 59 | 123 | 20 | 0.62 | 2.95 |
| Ohio State | 3.817 | 142 | 71 | 117 | 19 | 1.21 | 3.74 |
| Texas | 3.78 | 94 | 77 | 95 | 19 | 0.99 | 4.05 |
| Maryland | 3.508 | 29 | 72 | 30 | 17 | 0.97 | 4.24 |
| Washington University | 3.5 | 30 | 34 | 41 | 14 | 0.73 | 2.43 |
| Illinois | 3.5 | 61 | 89 | 166 | 28 | 0.37 | 3.18 |
| Indiana | 3.467 | 58 | 26 | 114 | 22 | 0.51 | 1.18 |
| Boston College | 3.45 | 15 | 58 | 27 | 16 | 0.56 | 3.63 |
| University of Washington | 3.35 | 66 | 28 | 99 | 18 | 0.67 | 1.56 |
| Minnesota | 3.339 | 35 | 29 | 30 | 12 | 1.17 | 2.42 |
| Wisconsin | 3.339 | 59 | 47 | 109 | 17 | 0.54 | 2.76 |
| Purdue | 3.31 | 126 | 57 | 89 | 9 | 1.42 | 6.33 |
| Arizona State | 3.203 | 26 | 49 | 41 | 20 | 0.63 | 2.45 |
| Florida | 3.167 | 60 | 59 | 92 | 20 | 0.65 | 2.95 |
| Penn State | 3.052 | 13 | 36 | 64 | 15 | 0.20 | 2.40 |
| Utah | 2.949 | 32 | 51 | 36 | 12 | 0.89 | 4.25 |
| Iowa | 2.949 | 45 | 28 | 65 | 15 | 0.69 | 1.87 |
| Pittsburgh | 2.776 | 32 | 18 | 36 | 9 | 0.89 | 2.00 |
| Arizona | 2.763 | 12 | 21 | 42 | 12 | 0.29 | 1.75 |
| Michigan State | 2.746 | 16 | 41 | 129 | 17 | 0.12 | 2.41 |
| Georgia | 2.724 | 21 | 26 | 122 | 16 | 0.17 | 1.63 |
| Oregon | 2.7 | 21 | 22 | 42 | 10 | 0.50 | 2.20 |
| Virginia Tech | 2.474 | 21 | 23 | 52 | 20 | 0.40 | 1.15 |
| Virginia | 2.466 | 11 | 45 | 35 | 12 | 0.31 | 3.75 |

Note. The University of Pittsburgh was not included in Heck's (2007) study.

It is acknowledged that the period of study, 1991–2005, is somewhat dated and that including only four top journals in the definition of research is most certainly a narrow classification of finance scholarship. However, Heck's (2007) study provided two remarkably consistent rankings of the quality of finance programs that are nearly universally accepted: a survey of finance program directors (or chairs) and a quantitative measure of publications. Extending the study with a per capita measure of research productivity required using the same time period and definition of research if the results were to be meaningfully compared with Heck's rankings. What could be inferred if the rankings by total publications using four journals from 1991–2005 differed significantly from a ranking of per capita research output using a more comprehensive list of journals in a more recent time period? Using 15 finance journals from 2000–2009 would produce a more extensive and certainly more recent indication of research productivity, but the value of the ranking would be diminished in the absence of a comparable survey ranking from program directors.

TABLE 2

Doctoral Programs Ranked by Per Capita Faculty Publications

It should be noted that we evaluated only programs that qualified for Heck's (2007) ranking under several strict criteria, and the inclusion of a program in this list is quite an accomplishment in itself. Unfortunately, such restrictive entrance requirements for the sample exclude several quality programs that should not be overlooked. For instance, the University of Pittsburgh's nine finance faculty members published 18 articles in the four journals used in Heck's (2007) study. This per capita output of 2.0 articles in the period under review would place the Pitt finance program at number 33. Pitt's 36 graduates published 32 qualifying articles, or 0.89 articles per capita. This output would rank tied for 22nd if included in an evaluation of per capita publications. This suggests that other small programs might also place highly in terms of producing productive graduates if they were included in the study.
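The “tied for 22nd” placement follows from standard competition ranking, in which a program's rank is one plus the number of programs with a strictly higher value. The sketch below assumes that rule and uses the graduate-publication and graduate-count columns of Table 1 for the 39 programs in Heck's (2007) list; exact fractions avoid rounding artifacts when two programs have identical ratios. `competition_rank` is a hypothetical helper.

```python
from fractions import Fraction

# (graduate publications, number of graduates) from Table 1, excluding Pittsburgh.
GRADUATE_OUTPUT = {
    "Chicago": (564, 199), "Stanford": (217, 123), "MIT": (304, 93),
    "Pennsylvania": (184, 142), "UCLA": (219, 92), "UC Berkeley": (111, 79),
    "Carnegie Mellon": (103, 36), "Northwestern": (147, 144), "Harvard": (252, 119),
    "NYU": (139, 109), "Yale": (121, 37), "Duke": (62, 26),
    "Columbia": (65, 59), "Rochester": (181, 52), "Cornell": (128, 47),
    "Michigan": (96, 81), "North Carolina": (76, 123), "Ohio State": (142, 117),
    "Texas": (94, 95), "Maryland": (29, 30), "Washington University": (30, 41),
    "Illinois": (61, 166), "Indiana": (58, 114), "Boston College": (15, 27),
    "University of Washington": (66, 99), "Minnesota": (35, 30), "Wisconsin": (59, 109),
    "Purdue": (126, 89), "Arizona State": (26, 41), "Florida": (60, 92),
    "Penn State": (13, 64), "Utah": (32, 36), "Iowa": (45, 65),
    "Arizona": (12, 42), "Michigan State": (16, 129), "Georgia": (21, 122),
    "Oregon": (21, 42), "Virginia Tech": (21, 52), "Virginia": (11, 35),
}


def competition_rank(value, field):
    """Standard competition rank: 1 + number of entries strictly greater than value."""
    return 1 + sum(1 for v in field if v > value)


per_graduate = {s: Fraction(pubs, grads) for s, (pubs, grads) in GRADUATE_OUTPUT.items()}
pitt = Fraction(32, 36)  # Pitt: 32 qualifying articles by 36 graduates

pitt_rank = competition_rank(pitt, per_graduate.values())
tied_with = sorted(s for s, v in per_graduate.items() if v == pitt)
print(f"Pittsburgh would rank {pitt_rank} (tied with {', '.join(tied_with)})")
```

Utah's 32 articles by 36 graduates give exactly the same ratio as Pitt's, which is why the two share a rank rather than being separated by rounding.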

This study's value goes beyond merely inserting smaller programs into an ordinal ranking of finance programs. Rather, evaluating finance programs on per capita research output enables researchers to gauge which programs are comparable when they differ in size. Hiring committees should find this information quite useful as they seek to identify potential hires who meet the needs of their institution. This study should also be useful to prospective graduate students as they seek a program that matches their aspirations. However, programs with low per capita output should not be dismissed as a poor fit for the prospective student. Rather, the prospective student should investigate whether there are productive faculty in the specific field he or she intends to study. Likewise, an institution with low research output from its graduates may send many of its graduates abroad or to industry, where a productive career is measured by a metric other than peer-reviewed journal output. While research continues to be a factor in promotion and tenure decisions, the importance of teaching is increasing. A prospective graduate student who aspires to a career in academia would do well to evaluate the classroom training a program provides.

TABLE 3

Doctoral Programs Ranked by Per Capita Graduate Publications

In conclusion, no ranking system is flawless or all-encompassing. At the very least, those considering entering a terminal degree program in finance now have a viable basis for quantifying the quality of PhD programs and faculties of different sizes. This work should also spur discussion among the upper echelons of finance, as it reveals that present perceptions of program quality depend more on the collective quantity of a program's exposure than on the individual research abilities of its graduates and faculty.

Further research in this area is certainly merited. Program directors should continue to be surveyed regarding program quality. However, these surveys should also request a list of the top 10 (or 15 or 20) journals in finance. Subsequent studies emphasizing research productivity could then evaluate programs based on this broader list of journals deemed most significant by program directors. Per capita research productivity should continue to be measured in order to evaluate smaller programs that are not included in the opinion survey results.

REFERENCES

Black, F., & Scholes, M. (1973). The pricing of options and corporate liabilities. Journal of Political Economy, 81, 637–654.

Brooker, G., & Shinoda, P. (1976). Peer ratings of graduate programs in business. Journal of Business, 29, 240–251.

Chan, K. C., Chen, C. R., & Lung, P. P. (2007). One-and-a-half decades of global research output in finance: 1990–2004. Review of Quantitative Finance and Accounting, 28, 417–439.

Fama, E. F., Fisher, L., Jensen, M., & Roll, R. (1969). The adjustment of stock prices to new information. International Economic Review, 10, 1–21.

Hasselback, J. R. (Ed.). (2005). 2004–2005 Prentice Hall guide to finance faculty. Upper Saddle River, NJ: Prentice-Hall.

Heck, J. L. (2007). Establishing a pecking order for finance academics: Ranking of U.S. finance doctoral programs. Review of Pacific Basin Financial Markets and Policies, 10, 479–490.

Heck, J. L., & Cooley, P. L. (2008). Sixty years of research leadership: Contributing authors and institutions to the Journal of Finance. Review of Quantitative Finance and Accounting, 31, 287–309.

Klemkosky, R. C., & Tuttle, D. L. (1977a). The institutional source and concentration of financial research. Journal of Finance, 13, 901–907.

Klemkosky, R. C., & Tuttle, D. L. (1977b). A ranking of doctoral programs by financial research of graduates. Journal of Financial and Quantitative Analysis, 12, 491–497.

Modigliani, F., & Miller, M. H. (1958). The cost of capital, corporation finance and the theory of investment. American Economic Review, 48 (June), 261–297.

Morse, R., & Flanigan, S. (2009). How we calculate the rankings. U.S. News and World Report. Retrieved from http://www.usnews.com/articles/education/best-business-schools/2009/04/22/business-school-rankings-methodology.html

Roose, K. D., & Andersen, C. J. (1970). A rating of graduate programs. Washington, DC: American Council on Education.

Siegfried, J. J. (1972). The publishing of economic papers and its impact on graduate faculty ratings, 1960–1969. Journal of Economic Literature, 10, 31–49.