A STUDY ON CONTENT VALIDITY OF THE ASSESSMENTS PREPARED BY PRACTICE TEACHING STUDENTS

A SARJANA PENDIDIKAN THESIS

Presented as Partial Fulfillment of the Requirements to Obtain the Sarjana Pendidikan Degree

in English Language Education

By

Matius Teguh Prasetyo Student Number: 061214104

ENGLISH LANGUAGE EDUCATION STUDY PROGRAM DEPARTMENT OF LANGUAGE AND ARTS EDUCATION FACULTY OF TEACHERS TRAINING AND EDUCATION

SANATA DHARMA UNIVERSITY YOGYAKARTA

A Thesis on

A STUDY ON CONTENT VALIDITY OF THE ASSESSMENTS PREPARED BY PRACTICE TEACHING STUDENTS

By

Matius Teguh Prasetyo Student Number: 061214104

Defended before the Board of Examiners on February 1st, 2012

and Declared Acceptable

Board of Examiners

Chairperson : Caecilia Tutyandari, S.Pd., M.Pd.
Secretary : Drs. Barli Bram, M.Ed.
Member : Made Frida Yulia, S.Pd., M.Pd.
Member : Agustinus Hardi Prasetyo, S.Pd., M.A.
Member : Caecilia Tutyandari, S.Pd., M.Pd.

Yogyakarta, Faculty of Teachers Training and Education

Sanata Dharma University

Dean

STATEMENT OF WORK’S ORIGINALITY

I honestly declare that this thesis, which I have written, does not contain the work or parts of the work of other people, except those cited in the quotations and the references, as a scientific paper should.

Yogyakarta, February 1st, 2012

The Writer

DEDICATION PAGE

This thesis is dedicated to Bapak, Ibu, Tyas, Andre, Sr. Natalia, Op.,

STATEMENT OF CONSENT

TO PUBLISH SCIENTIFIC WORK FOR ACADEMIC PURPOSES

I, the undersigned, a student of Sanata Dharma University:

Name : Matius Teguh Prasetyo

Student Number : 061214104

For the advancement of knowledge, I grant to the library of Sanata Dharma University my scientific work entitled:

A Study on Content Validity of the Assessments Prepared by Practice Teaching Students

together with any supporting materials required (if any). I thereby grant the library of Sanata Dharma University the right to store it, convert it into other media formats, manage it in a database, distribute it on a limited basis, and publish it on the internet or other media for academic purposes, without needing to ask my permission or pay me royalties, as long as my name remains credited as the author.

I make this statement truthfully.

Made in Yogyakarta

Date: 20 January 2012

Declared by,

ABSTRACT

Prasetyo, Matius Teguh. (2012). A Study on Content Validity of the Assessment Prepared by Practice Teaching Students. Yogyakarta: Sanata Dharma University.

An assessment in an instruction is intended to obtain information about the students’ progress and mastery during or at the end of the instruction. The information should be valid and should represent the true condition of the students. Therefore, the assessment applied by the teachers should be valid and able to represent the intended outcomes the students should perform or master.

Based on the concept above, the writer was interested in conducting a study on the validity of assessment, especially content validity. The writer formulated two questions: 1) how is content validity established by the Practice Teaching students in their assessments? and 2) what problems influence the degree of content validity of the Practice Teaching students’ assessments? To answer these questions, the writer conducted a qualitative study, namely a document analysis. The documents were lesson plans, together with the assessments prepared for them, made by Practice Teaching students. The document analysis was intended to show how the Practice Teaching students established content validity in their assessments; this process is called content validation. The respondents were five randomly chosen Practice Teaching students of ELESP Sanata Dharma University in the 2010/2011 academic year. The analysis was done by comparing the instructional content, which was specified into several objectives, with the intended result or the content of the assessments. The analysis was also done to identify the problems that influence the degree of content validity of the respondents’ assessments.

The analysis showed that the respondents produced assessments that were valid according to the principle of content validity, but that differed in their degree of content validity. The findings showed that the respondents established high, moderate, and low content validity. The problems which influenced the degree of content validity of the respondents’ assessments were 1) the objective formulations were unclear and ambiguous, 2) there were too many objectives in an instruction for a single content, 3) the assessments did not measure all the objectives stated, 4) the assessments’ tasks or procedures did not represent the intended skills, and 5) the intended result of the assessments had little correlation with the content of the instruction. To overcome these problems, the writer proposed some possible recommendations, namely 1) producing well-planned lesson plans and assessments, 2) stating clear and unambiguous content and objectives, 3) not formulating too many objectives for a lesson, 4) considering the four basic English skills in the assessments, and 5) improving and updating the knowledge about assessment by reading books or other sources.

ABSTRAK (ABSTRACT)

Prasetyo, Matius Teguh. (2012). A Study on Content Validity of the Assessment Prepared by Practice Teaching Students. Yogyakarta: Universitas Sanata Dharma.

An assessment in the learning process is intended to obtain information about students’ progress and understanding during or at the end of the learning process. The information obtained from the assessment must be valid and must represent the students’ actual condition. Therefore, the assessment applied by teachers must be valid and able to represent the outcomes that students are supposed to achieve or demonstrate.

Based on the concept above, the writer was interested in conducting a study on the validity of an assessment, particularly content validity. The writer formulated two questions in this study: 1) how is content validity established by students undertaking Practice Teaching (Praktik Pengajaran Lapangan, PPL) in their assessments? and 2) what problems influence the degree of content validity of the Practice Teaching students’ assessments?

To answer these questions, the writer conducted a qualitative study, namely a document analysis. The documents were several lesson plans (Rencana Pelaksanaan Pembelajaran, RPP), together with the assessments prepared for them, made by the Practice Teaching students. The document analysis was intended to show how the Practice Teaching students established content validity in their assessments; this process is called content validation. The respondents were five randomly chosen Practice Teaching students of Sanata Dharma University in the 2010/2011 academic year. The analysis was done by comparing the instructional content, divided into several learning objectives, with the intended results or the content of the assessments. The analysis was also carried out to identify the problems that influence the degree of content validity of the respondents’ assessments.

and continually updating knowledge about assessment through books or other sources.

ACKNOWLEDGEMENTS

My deepest and never-ending gratitude goes to my faithful companions, Lord Jesus Christ and Mother Mary, for endowing me with splendid blessings and love.

I would like to express my deepest and sincere appreciation to my sponsor, Made Frida Yulia, S.Pd., M.Pd. Her enduring guidance and valuable suggestions have given influential contributions to this thesis. I am greatly indebted to the lecturers of Language Learning Assessment subject, Agustinus Hardi Prasetyo, S.Pd., M.A., and Veronica Triprihatmini, S.Pd., M.Hum., M.A., who have helped me evaluate my analysis and to all lecturers who have shared their knowledge and advice which were beneficial for me in finishing this thesis. I would like to thank Jatmiko Yuwono, S.Pd, who has read and evaluated my thesis during his busy time.

Sincere thanks are also expressed to my respondents, five Practice Teaching students of 2010/2011 academic year, who have given me permission to copy and analyze the Lesson Plan and Assessments they made during their Practice Teaching period.

toward Sr. Natalia, OP and the big family of Pak Anwar and Bu Irni; without their help in affording my study, I would never have the chance to study in university. My special indebtedness goes to the big family of F. Aldhika Deinza Saputra, who have given me a place to stay and finish my thesis.

I would like to express my thanks to all teachers in SMA and SMK Dominikus Wonosari and SD N Sedono for the beautiful moments and experiences I had during my work there. The knowledge I gained from my study would be nothing without places to apply it. I learned more about being a teacher from my experiences there. I would like to thank them for their encouragement and for motivating me to finish my thesis.

I would also like to thank Vincensia Retno Woro Purwaningsih for being my number-one supporter. Her love, patience, support and prayers have poured inspirations upon my days. At last, I would like to thank all friends and people whose names cannot be mentioned one by one. I thank them all for lending me a hand in finishing this thesis.

TABLE OF CONTENTS

TITLE PAGE
APPROVAL PAGES
STATEMENT OF WORK’S ORIGINALITY
DEDICATION PAGE
LEMBAR PERNYATAAN PERSETUJUAN PUBLIKASI KARYA ILMIAH UNTUK KEPENTINGAN AKADEMIS (STATEMENT OF CONSENT TO PUBLISH SCIENTIFIC WORK FOR ACADEMIC PURPOSES)
ABSTRACT
ABSTRAK
ACKNOWLEDGEMENTS
TABLE OF CONTENTS
LIST OF TABLE
LIST OF FIGURES
LIST OF APPENDICES

CHAPTER I. INTRODUCTION
A. Research Background
B. Problem Formulation
C. Problem Limitation
D. Research Objectives
E. Research Benefits
F. Definition of Terms

CHAPTER II. REVIEW OF RELATED LITERATURE
A. Theoretical Description
   1. Assessment
      a. The Definition of Assessment
   2. Methods of Assessments
   3. Principles of Language Assessment
      a. Practicality
      b. Reliability
      c. Validity
      d. Authenticity
      e. Washback
   4. Content Validation
      a. Definition and Process
      b. Instructional Goals and Objectives
B. Theoretical Framework

CHAPTER III. METHODOLOGY
A. Research Method
B. Research Participants
C. Research Instruments
   1. The Researcher as the Research Instrument
   2. Documents
D. Data Gathering Technique
E. Data Analysis Technique
F. Research Procedure

CHAPTER IV. RESEARCH RESULTS AND DISCUSSION
A. Establishing Content Validity in Assessments
   1. Determining the Instructional Content
      a. Text-based Content
      b. Expression-based Content
      c. Tense-based Content
   2. Content Validity of the Respondents’ Documents
      a. High Content Validity Assessment
      c. Low Content Validity Assessment
B. Problems in Producing Content Valid Assessment
   1. Problems Influencing the Content Validity Degree of the Assessment
   2. Possible Recommendation to Overcome the Problems

CHAPTER V. CONCLUSIONS AND SUGGESTIONS
A. Conclusions
B. Suggestions

REFERENCES

LIST OF APPENDICES

Appendix A: The Sample of Respondents’ Documents

Appendix B: The Complete Analysis of the Content Validity of the Assessments Prepared by Practice Teaching Students

Appendix C: H.D. Brown’s Lists of Microskills and Macroskills of the Four Basic Language Skills

Appendix D

CHAPTER I

INTRODUCTION

This chapter consists of research background, problem formulation, problem limitation, research objectives, research benefits, and definition of terms.

A. Research Background

Assessment is also used as an aid for teachers to evaluate their progress in teaching and learning activities. The assessment result can show how well the students understand the material given. The result becomes an indicator of the students’ success or failure, and it helps teachers revise or improve the next teaching and learning activity.

Nevertheless, producing valid and reliable assessment that can serve those purposes is not easy. Teachers need to be able to assess students’ performance by making good assessment tools based on the principles of good assessment. Since assessment is used to obtain accurate and valid information about students’ proficiency in a particular topic or material, the assessment itself has to be valid and accurate, too.

the teachers’ or administrators’ ability to plan carefully and to give sufficient time to the process of its production.

The students of the English Language Education Study Program (ELESP) of Sanata Dharma University are prospective teachers who are being prepared to be good-quality teachers. They learn about English and how to teach English during their study at the university. They are expected to master all teaching skills, which include the mastery of assessing, the knowledge of how to make good assessment, the ability to apply the principles of assessment as an integral part of teaching and learning activities, and many more. Since producing good assessment is not easy and takes time to learn, they learn everything from the concept of assessment to assessment production. Producing good assessment will support their teaching later.

all their understanding of teaching, classroom management, assessment application, and many more. At this point, they practice producing sound assessments that appropriately apply the principles of assessment. Nevertheless, since they are still learning, some of the principles of assessment might be ignored or might not be appropriately followed.

Based on the description above, the writer is interested in conducting research on one of the principles of assessment, namely assessment validity. The writer is eager to study the validity of the assessments made by the students of the English Language Education Study Program (ELESP) of Sanata Dharma University, especially the students who had taken the Practice Teaching subject. The writer chose Practice Teaching students as the sample of the research because, as stated above, they are supposed to master teaching and learning skills, which also include the competence in producing good assessment. Before taking the Practice Teaching subject, the students have to pass the preceding subjects on the theory of the English language and English language teaching. After three or four semesters of studying the theory of the English language, the students are then prepared to teach English, starting from understanding the teacher and teaching, preparing instruction, learning about approaches, methods, and techniques in teaching, making assessment, and many more.

material through the interpretation of the assessment result. Therefore, the assessment tools and procedures have to be valid. Validation aims at obtaining valid information about the students’ performance in a particular topic, skill, or material. In other words, if teachers want to obtain valid information about the students’ performance or progress, the assessment they make should be valid, too.

B. Problem Formulation

In this study, the writer formulates the problem as two questions.

1. How is the content validity established by the Practice Teaching students in their assessment?

2. What are the problems which influence the degree of content validity of the Practice Teaching students’ assessment?

C. Problem Limitation

are able to produce good and valid assessment. Another reason is that the students have to make assessments for real students during their Practice Teaching period. The LLA students are not chosen because the assessments they make are not intended for real students and they are still practicing. Although the students in the Practice Teaching period are also still practicing, they practice with real students. Therefore, the assessments they produce have to be valid.

stated before because it does not measure what it intends to measure, which is good recount text production. Therefore, content validity in an assessment is very important and needs more attention from those who produce the assessment.

The assessments examined in this study are those made by the Practice Teaching students during their practice teaching period. The assessments chosen are those which were prepared before the students conducted their teaching and learning activity in class. Spontaneous assessment is not included. This means that the assessments chosen were planned and prepared in written form, so that the pupils can read the instructions and do what they are supposed to do in the assessment.

D. Research Objectives

Dealing with the questions mentioned, the study is conducted to achieve two objectives, namely:

1. Figuring out how the Practice Teaching students established the content validity in their assessment. It is expected that the analysis can present the way the students prepare and produce the assessment so that it can be considered valid based on the concept of content validity.

E. Research Benefits

It is expected that this study will be beneficial for the students and lecturers who are involved in ELESP, and for future researchers who want to study assessment.

For students of ELESP, especially those who are taking Practice Teaching and preparing sets of instruction in their learning, this study is expected to help them analyze their own assessments based on the theory of content validity. Content validity is only one basis for making good assessment, because there are other criteria for producing good assessment. Yet validity, especially content validity, plays a very important role in an assessment. By understanding the concept of valid assessment, the students are expected to become more proficient in producing assessments in their practice teaching period as well as in their real teaching later. It is also expected that the students will always keep in mind that their assessments must be valid in order to obtain valid measurements and results from their students. Valid results will serve as a very good aid for teachers to improve their teaching.

toward assessment as an integral part of teaching. It is expected that this study could help the students understand that an assessment is used not only for scoring but also for the wider purpose of educating the students and developing the teachers.

For lecturers of ELESP, especially those who are involved in teaching evaluation and assessment, this study is expected to serve as a means to obtain a picture of the students’ mastery in producing language assessment. The lecturers will therefore be able to plan possible instruction that enables the students to produce sound assessments that apply the principles of good assessment production, especially content validity. The lecturers could help their students produce assessments with high validity, since assessment is used to obtain valid information about the students’ progress and mastery of a specific material or content domain.

F. Definition of Terms

There are some terms mentioned in this study that need to be defined in order to avoid misunderstanding and to lead the readers to a better understanding on the topic being discussed.

1. Assessment

As stated before, assessment is an integral part of teaching and learning activities. Assessment can be defined as one or more activities aimed at obtaining information about the students’ progress or achievement in learning activities. It can be done in several ways, such as giving quizzes and tests, paying attention to the students’ performance in the classroom, listening to the students’ responses in teaching and learning activities, and many more (Badder, 2000; Chatterji, 2003; Brown, 2004).

2. Validity

Validity in this study refers directly to the validity of the assessment. In an assessment, validity is one of the criteria for a good test. Brown (2001) stated in his book that validity is “the degree to which the test actually measures what it is intended to measure” (p. 378). Gronlund (1998, p. 226), as cited by Brown (2004), stated that validity is “the extent to which inferences made from assessment results are appropriate, meaningful, and useful in terms of the purpose of the assessment” (p. 22). This study grounds the validity of assessment in the definitions above. The assessment is valid if the results are appropriate, meaningful, and useful based on the purpose of the assessment or based on what is intended to be measured by the assessment.

CHAPTER II

REVIEW OF RELATED LITERATURE

In this chapter, the writer discusses the related literature which serves as the basis to answer the research questions. There are two major parts in this chapter, namely theoretical description and theoretical framework.

A. Theoretical Description

In this part, the writer provides some descriptions of the theories used to analyze the content validity of Practice Teaching students’ assessments. The theories become the key for the writer to be able to analyze the assessments.

1. Assessment

Before going deeper into the discussion of assessment and the principles of language assessment, it is better for the writer to provide a clear definition about the assessment.

a. The Definition of Assessment

ideas, and promoting conceptual understanding by developing questions. Assessment is aimed at receiving information about the students’ progress and the instructional process. Airasian (1991) stated that assessment is a process of gathering, interpreting, and synthesizing information intended to aid decision making in the classroom. Teachers collect information through assessment to help them make decisions about their students’ learning and the success of their instruction. Again, the information can be gathered from several assessment procedures or methods.

the extent to which those goals have been attained (Miller et al., 2009). Teachers use assessment information to make decisions about what the students have learned, what and where they should be taught, and the kinds of related services (Salvia et al., 2001).

Assessment, therefore, can be defined as processes, activities, or systematic methods that are carried out to obtain information about the students’ progress or performance. The processes, activities, or methods are various. Teachers can choose one or more activities to assess the students. Those activities are conducted to obtain information about the students’ progress during the teaching and learning process, the students’ mastery of the teaching and learning materials, and the instructional process. It is also important to mention that, according to Siddiek (2010, p. 135), assessment is “an integral part of any effective teaching program, thus it should be subject to planning, designing, modifying, and frequent revision of its validity as tools of quality measurement.” The statement makes clear that, as a means to obtain valid information about the students and instructional progress, an assessment should be validly planned, designed, modified, and frequently revised.

from observations, recollections, test, and professional judgments all come into play.” It can be concluded that assessment information can be obtained not only from tests but also from other procedures, such as observation, asking spontaneous questions, listening to the students’ responses, and many more. Nevertheless, it should be noted that a test is often the most visible evidence of student learning for parents to see and make judgments about (Walker et al., 2004).

The position of a test in an assessment as a part of teaching activities is illustrated in Figure 2.1.

Figure 2.1 shows that a test is a part of assessment, while assessment is a part of teaching. Therefore, a test is not the same as assessment; it is only one of the assessment procedures or tasks. Meanwhile, assessment falls under the wide area of teaching, which means that teaching involves assessing. Assessment becomes a crucial part of teaching since it helps teachers understand the progress or achievement the students have made, and it can also be a useful device to monitor or evaluate the instruction prepared by the teachers.

[Figure 2.1: nested diagram in which TEST lies inside ASSESSMENT, which lies inside TEACHING]

Figure 2.1 Test, Assessment, and Teaching

b. Types of Assessment

There are several types of assessment. Brown (2004, pp. 5-7) divided assessment into three pairs, namely informal and formal assessment, formative and summative assessment, and norm-referenced and criterion-referenced tests.

1) Informal and Formal Assessment

The first distinction among the types of assessment is between informal and formal assessment. Informal assessment can take many forms, starting with incidental, unplanned comments and responses, along with coaching and other impromptu feedback to students (Brown, 2004, p. 5). In contrast, he stated that formal assessments are exercises or procedures specifically designed to tap into a storehouse of skills and knowledge. They are systematic, planned sampling techniques constructed to give teacher and students an appraisal of student achievement. In addition, McAlpine (2002, p. 7) stated that “formal assessments are where the students are aware that the task that they are doing is for assessment purposes.” Tests are an example of formal assessment, but not all formal assessments are in the form of tests.

2) Formative and Summative Assessment

their level of understanding on their learning in the current course. Formative assessment is used to evaluate students in the process of “forming” their competencies and skills with the goal of helping them to continue that growth process. Practically, the informal assessments are (or should be) formative (Brown, 2004, p. 6).

Summative assessment aims to measure, or summarize, what a student has grasped, and typically occurs at the end of a course or unit of instruction. CLPD of The University of Adelaide (2011) stated that summative assessment is “where assessment task responses are designed to grade and judge a learner's level of understanding and skill development for progression or certification.” A final exam in a course and a general proficiency exam are examples of summative assessment.

3) Norm-Referenced and Criterion-Referenced Tests

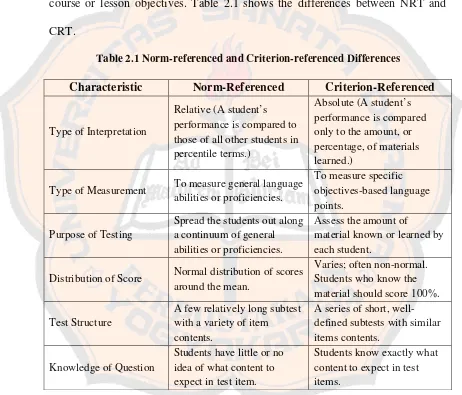

In contrast, as stated by Brown (2006), criterion-referenced test (CRT) is created to measure well-defined and fairly specific instructional objectives. Furthermore, he stated that the objectives are specific to particular course, program, school district, or state. In addition, Brown (2004, p. 7) stated that CRT is designed to give test-takers feedback, usually in the form of grades, on specific course or lesson objectives. Table 2.1 shows the differences between NRT and

Type of Measurement To measure general language abilities or proficiencies.

2. Methods of Assessments

According to Chatterji (2003), there are five different assessment methods that focus on the nature of the responses made by test-takers namely written assessment, behavior-based assessment, product-based assessment, interview-based assessment, and portfolio-based assessment.

a. Written Assessments

Written assessment includes all assessments to which test-takers respond using a paper and pencil format or, as is common today, on a computer using a word processor. The response of this assessment can be in the form of structured or open-ended response.

b. Behavior-Based Assessments

This method requires the students to perform behaviors or processes that must be observed directly. The special feature of assessment in this category is that actual behaviors, performances, and demonstrations must be assessed as they are occurring.

c. Product-based Assessments

In this method, the students have to create or construct a product. This product serves as the basis for measurement. Examples of product-based assessments are journals, term papers, laboratory reports, books, or artwork.

d. Interview-based Assessments

for the respondents to explain responses given in written or other formats. From a validity perspective, it is advantageous because reasoning and explanation skills are in the domain.

e. Portfolio-based Assessments

Portfolio-based assessment provides a comprehensive picture of proficiencies the students made. It is often promoted as the best means for comprehensive documentation of evolving skills and knowledge in a particular area. According to Arter and Spandel (1992, p. 36),

a portfolio is a purposeful collection of student work that tells the story of a student’s efforts, progress, or achievement in (a) given area(s). This collection must include student participation in selection of portfolio contents; the guidelines for selection; the criteria for judging merit; and evidence of student self-reflection.

3. Principles of Language Assessment

Brown (2004, p. 19) stated five principles of language assessment, namely practicality, reliability, validity, authenticity, and washback. Since this study focuses specifically on the validity of assessment, the writer will explain validity at greater length than the other principles. The principles explained below are mainly taken from Brown’s book, Language Assessment: Principles and Classroom Practices (2004, pp. 19-30).

a. Practicality

constraints, it is relatively easy to administer, and it has scoring/evaluation procedure that is specific and time-efficient.

b. Reliability

A reliable test is consistent and dependable. It means that the same test should yield similar results when it is given to the same students or matched students on two different occasions.

c. Validity

The next principle is validity. This principle is the basis of this study. Therefore, as stated above, the writer will explain this assessment principle at greater length.

Brown (2006) stated that validity is important especially when it is involved in the decisions that teachers regularly make about their students. He further stated that teachers want to base their admission, placement, achievement, and diagnostic decisions on a test or assessment that actually tests what it claims to measure. Therefore, teachers should consider the validity of their assessments. The assessment will be considered valid if it measures the objective of the course (Siddiek, 2010) because “aimless is the most single cause of ineffectiveness in teaching and of frustration of education efforts” (Cliff, 1981, p. 27, as cited by Siddiek, 2010, p. 135).

“Validity is the quality of the interpretation of the result rather than of the assessment itself, that its presence is a matter of degree, that it is always specific to some particular interpretation or use, and that it is a unitary concept” (Miller et al., 2009, p. 102). What is validated is not merely the assessment procedure but the appropriateness of the interpretation and use of the result of an assessment procedure. Validity is best considered in terms of categories that specify the degree, namely high validity, moderate validity, and low validity (Miller et al., 2009). It is better to avoid thinking of assessment results as simply valid or invalid.

1) Content-Related Evidence

Content is simply defined as something what to teach or what the students need to learn. Price and Nelson (2011) stated that planning for instruction begins with thinking about content. Before making decision about how to teach, teachers should decide what to teach or what students need to learn (p. 3). Furthermore, Price et al. (2011) stated that it is impossible to achieve the intended result from teaching when the content, what to teach or what the students need to learn, is not exactly stated or determined. The content of language learning cannot be separated from the four basic language skills, namely reading, writing, speaking, and listening. Those four skills are integrated in language learning (Brown, 2004).

Mousavi (2002) and Hughes (2003) stated that content-related evidence, or content validity, means that the test samples the subject matter about which conclusions are to be drawn and requires the test-taker to perform the behavior that is being measured (as cited in Brown, 2004, p. 22). This means that if the test can clearly define the achievement being measured, it fulfills the content-related evidence or content validity.

what it is that the testers wanted to measure in the first place. The important point in content validation is “to determine the extent to which a set of assessment tasks provides a relevant and representative sample of the domain of tasks about which interpretations of assessment results are made” (Miller et al., 2009, p. 75). Since language learning also involves the four basic language skills, the microskills and macroskills of each skill are also involved in the analysis (the list of microskills and macroskills of each content can be found in Appendix D).

2) Criterion-Related Evidence

Criterion-related evidence is the extent to which the “criterion” of the test has actually been reached. It is best demonstrated through a comparison of results of an assessment with results of some other measure of the same criterion. For example, in a course unit whose objective is for students to be able to orally produce voiced and voiceless stops in all possible phonetic environments, the result of one teacher’s unit test might be compared with an independent assessment – possibly a commercially produced test in a textbook – of the same phonemic proficiency.

3) Construct-Related Evidence

“motivation” are psychological constructs. In the field of assessment, construct validity asks, “Does this test actually tap into the theoretical construct as it has been defined?” (Brown, 2004, p. 25).

4) Consequential Validity

Consequential validity encompasses all the consequences of a test, including such considerations as its accuracy in measuring intended criteria, its impact on the preparation of test-takers, its effect on the learner, and the (intended and unintended) social consequences of a test’s interpretation and use (Brown, 2004, p. 26).

5) Face Validity

Gronlund (1998) stated that face validity is the extent to which students view the assessment as fair, relevant, and useful for improving learning (as cited in Brown, 2004, p. 26). Brown also cited Mousavi’s (2002) definition of face validity:

“Face validity refers to the degree to which a test looks right, and appears to measure the knowledge or abilities it claims to measure, based on the subjective judgment of the examinees who take it, the administrative personnel who decide on its use, and other psychometrically unsophisticated observers” (p. 244).

d. Authenticity

is defined as “the degree of correspondence of the characteristics of a given language test task to the features of a target language task.” Authenticity may be present in several ways. First, the language in the test or assessment is as natural as possible. Second, the items of the test or assessment are contextualized rather than isolated. Third, the topics are meaningful, relevant, and interesting for the learner. Fourth, some thematic organization of the items is provided, such as through a story line or episode. Fifth, the tasks represent, or closely approximate, real-world tasks (Brown, 2004, p. 28).

e. Washback

According to Hughes (2003, p. 1), washback is “the effect of testing on teaching and learning” (as cited in Brown, 2004, p. 28). Brown (2004) further stated that in large-scale assessment, washback generally refers to the effects the tests have on instruction in terms of how students prepare for the test.

4. Content Validation

a. Definition and the Process

After determining the content the Practice Teaching students covered in their teaching, the writer analyzed how well the content or intended result of each assessment matched the content as specified in the objectives. As stated by Walker and Schmidt (2004), in developing assessment tasks, teachers or assessment authors have to match the tasks to the purpose and context of instruction. Tasks should relate directly to the goals of instruction and incorporate content and activities that have been part of the classroom instruction. Walker and Schmidt (2004) added that teachers or authors have to ensure that the tasks allow students to clearly demonstrate their knowledge. They further stated that building sound assessment tasks requires careful thinking about the content the students should demonstrate during the assessment process. The questions must relate to the skills and concepts in the unit of study and provide students with opportunities to demonstrate what they know. Miller et al. (2009) stated that good assessment requires relating the assessment procedures as directly as possible to the intended learning outcomes.

According to Brown (2004, pp. 32-33), there are two steps in evaluating content validity. Those two steps are formulated as two questions, namely 1) Are classroom objectives identified and appropriately framed? 2) Are lesson objectives represented in the form of test specifications? The first step requires the writer to analyze whether the content of the study is specified appropriately in the objectives. This first step is similar to the idea presented by Alderson et al. (1995), namely analyzing the content.

The next step is comparing the content, as already specified in appropriate objectives, with the content of the assessment. The process does not compare the content with the assessment procedure; it compares the content with the intended results of the assessment to find out whether they match and are appropriate to the content of the study.

b. Instructional Goals and Objectives

The content validation process relies on the classroom objectives to validate the content of an assessment. The classroom objectives are stated in the lesson plan that teachers prepare before they carry out the whole instructional process, which includes preparation and evaluation.

Further, Graves stated that stating the goals helps teachers focus their vision and priorities for the course.

Goals are general statements, but they are not vague. For example, the goal “Students will improve their writing” is vague. In contrast, the goal “By the end of the course students will have become more aware of their writing in general and be able to identify the specific areas in which improvements is needed” is not vague (2000, p. 75). Finally, Graves (2000, p. 75) stated that “goals are benchmarks of success for a course.”

While the goals are the main purposes and intended outcomes of the course, the objectives are statements about how the goals will be achieved (Graves, 2000). The goals are broken down into learnable and teachable units through the objectives. Objectives, or expected outcomes, transform the general goals into specific student performances and behaviors that show the development of student learning and skill (TLC University of California, 2011). Graves (2000) added that “goals and objectives are in cause and effect relationship” (p. 77). This means that if the students achieve the objectives, the goals of the course will be achieved, too. Graves also stated that objectives are in a hierarchical relationship to the goals: goals are more general and objectives are more specific.

guidelines for assessing student learning (Miller et al., 2009). The major purposes are illustrated in Figure 2.2.

Figure 2.2

The Purpose of Instructional Goals and Objectives (Miller et al., 2009, p. 47)

Objectives are statements that describe the behaviors students can perform after instruction (Airasian, 1991). Objectives describe where teachers want the students to go and how teachers will know whether the students have reached the destination (Price & Nelson, 2011). Objectives pinpoint the destination, not the journey. Well-written objectives help teachers clarify precisely what they want the students to learn, help provide lesson focus and direction, and guide the selection of appropriate practice. Using objectives, teachers can evaluate whether their students have learned and whether their own teaching has worked (Price & Nelson, 2011, p. 13).

Instructional Goals and Objectives:

1) Convey instructional intent to others (students, parents, other school personnel, the public)

2) Provide a basis for assessing student learning (by describing the performance to be measured)

3) Provide direction for the instructional process (by clarifying the intended learning outcomes)

According to Graves (2000), teachers should pay attention to several aspects when formulating goals or objectives. First, when writing the goals or objectives, teachers should keep their audience in mind. Knowing the audience helps teachers consider whether the language they use is accessible to that audience. Second, the goals or objectives should be transparent enough for others to understand. This can be done by unpacking the language to simplify and clarify the goals or objectives (Graves, 2000).

Objectives should be S.M.A.R.T. This means that learning or classroom objectives should be simple, measurable, action-oriented, reasonable, and time-specific.

B. Theoretical Framework

The assessments chosen are of the criterion-referenced test (CRT) type, which is designed to measure well-defined and fairly specific instructional objectives. This type of assessment should clearly present the objectives the students should achieve. Formal assessment is chosen because it requires the Practice Teaching students to plan and prepare their assessment. A planned and prepared assessment can show how well the students establish content validity in their assessment. Nevertheless, the assessment can be formative or summative.

The study focuses on the content validity of an assessment. Content validity is one of the principles of a test or assessment. Content validity means that the test or assessment samples the subject matter about which conclusions are to be drawn and requires the test-taker to perform the behavior being measured. If the assessment can clearly define and sample the intended achievements or results, it is considered to fulfill content validity. The validity examined in this study concerns the intended outcome or result of the assessment, not the assessment specifications or procedures. Validity is also expressed as a matter of degree, namely high validity, moderate validity, and low validity.

questions, namely 1) Are classroom objectives identified and appropriately framed? 2) Are lesson objectives represented in the form of test specifications? The first step deals with the formulation of classroom or learning objectives. The writer analyzes the Practice Teaching students’ formulation of classroom or learning objectives based on the S.M.A.R.T. principle of objective formulation. The analysis is used to determine what content the Practice Teaching students want their students to learn. Although the process requires the writer to analyze the Practice Teaching students’ ability to write good learning or classroom objectives, it focuses more on determining the intended result or content. Since the content statement involves the four basic skills in language learning, the writer also includes an analysis of the microskills and macroskills of each skill in the assessment tasks.

CHAPTER III

METHODOLOGY

In this chapter, the writer explains how the study was conducted. The chapter contains the research method, research participants, research instruments, data gathering technique, data analysis technique, and research procedure.

A. Research Method

B. Research Participants

The research participants were students of ELESP Sanata Dharma University who conducted their Practice Teaching from July to December 2010. The participants are referred to as respondents in this study. All of them were in the seventh semester of the 2010/2011 academic year. Presumably, they had taken the Language Learning Assessment class, in which they had learnt the basic concepts and principles of producing good assessments. The data of the study were the assessments they produced during the Practice Teaching period. The writer chose these students as participants because, throughout the Practice Teaching program, they were given the opportunity to practice teaching in real schools with real students, where they would apply all the teacher competences they had obtained. They applied their knowledge and skills in teaching, namely how to handle the class, how to create good teaching materials, how to prepare an appropriate lesson plan, how to assess their students, and many more. Therefore, they also made real assessments for real students, which had to be good, reliable, and valid.

C. Research Instruments

In order to obtain dependable data to answer the research questions, the writer made use of two different types of research instruments.

1. The Writer as the Research Instrument

Poggenpoel and Myburgh (2003) stated that the researcher as research instrument means that the writer is the key to obtaining the data from the respondents. The writer requested the data from the participants and analyzed the data based on the theory of content validity of assessment. Furthermore, the writer also reported the results of the study.

2. Documents

teaching. The lesson plan became the first document in this study. The second document comprised the assessments, which should accord with the prepared lesson plans, especially in the content being taught.

D. Data Gathering Technique

The writer gathered the data from five students of the Practice Teaching subject. The writer collected the data by compiling the lesson plans along with the assessments for each lesson plan they produced during the Practice Teaching period. From every student, the writer asked for two lesson plans along with the assessments prepared for each lesson plan. This was done to obtain a more accurate analysis of the students’ ability to produce content-valid assessments. A single lesson plan with some assessment items was not considered sufficient to represent the respondents’ ability. Having two sets of documents to analyze better supported the findings about how the respondents established content validity in their assessments.

E. Data Analysis Technique

respondents’ ability in formulating the objectives but it was aimed at knowing or determining the content of the instruction planned by the respondents in their lesson plan. The second question dealt with the comparison of the instructional content with the intended result or the content of the assessment. The interpretation of the intended result of the assessment was analyzed based on the instructional content, not merely the assessment tasks or procedures.

The second one was the assessment, which should measure the intended outcomes stated in the objectives. This meant that the instructional objectives should be the basis of the assessment. The intended result of the assessment should clearly match the outcomes stated in the instructional objectives. The analysis of the instructional content and its validation in the assessment involved the analysis of the microskills and macroskills of the four basic language skills.

As stated in chapter two, validity is best considered in terms of categories that specify the degree, namely high validity, moderate validity, and low validity (Miller et al., 2009, p. 72). The results of the content validity analysis of the respondents’ assessments were categorized in that way. The writer classified each respondent’s assessment into one of the categories by examining the content of the assessment. The categorization of the degrees of assessment content validity is explained in the appendix.

F. Research Procedure

The research was conducted in the even semester of 2010/2011 academic year. The procedures of the research were as follows.

1. Preparation

2. Gathering the Data

The next step in conducting the research was obtaining the data, namely the lesson plans and the assessments. The participants were chosen randomly from the students who had finished their Practice Teaching. The data were requested from the participants one by one.

3. Analyzing the Data

After gathering the data, the writer analyzed the content validity of each assessment based on the questions offered by Douglas Brown, in which content validity can be seen from the lesson objectives and the test specification (Brown, 2004, p. 32). In the process of analysis, the writer consulted the lecturers in charge of teaching assessment about the analysis. As stated before, the purpose of the consultation was to obtain a more objective analysis of the content validity of the assessments.

tasks or procedures, the intended result of assessment or the interpretation of the assessment result, and the uses of the assessments throughout the instruction. Finally, the writer concluded the content validity degree of each assessment along with an explanation of why the assessment was categorized in that degree.

4. Figuring Out the Problems

The next step was figuring out the problems which influenced the degree of content validity of the assessments produced by the participants. The problems could originate not only from the production of the assessment but also from the content statement and the formulation of the objectives. Unclear objective formulations would influence the content validity degree of the assessment. Afterwards, the writer proposed some possible recommendations to overcome the problems.

5. Conclusion

CHAPTER IV

RESEARCH RESULTS AND DISCUSSION

This chapter consists of both the presentation and the discussion of the research findings. There are two sections in this chapter. The first section (A), which answers the first research question, discusses how the Practice Teaching students established content validity in their assessments. The second section (B) discusses the problems which influenced the content validity degree of the Practice Teaching students’ assessments and provides some possible recommendations to overcome the problems.

A. Establishing Content Validity in Assessment

The process of finding out how the respondents established content validity in their assessments is called content validation. The process, as stated by Alderson et al. (1995), involves gathering the judgment of “experts”, the people whose judgment one is prepared to trust, even if it disagrees with one’s own. A common way for them to do this is to analyze the content of a test and to compare it with a statement of what the content ought to be. Such a content statement may be the test’s specifications, a formal teaching syllabus or curriculum, or a domain specification. In this research, the content statement was derived from the Competence Standard and Basic Competence formulated by the government within the national curriculum, which the respondents specified or divided into lesson goals or objectives. The process was also carried out by adapting the two questions offered by Brown (2004), namely “(1) are classroom objectives identified and appropriately framed?” and “(2) are lesson objectives represented in the form of test specifications?”

Based on the processes above, the writer applied two steps in examining the content validity of the respondents’ assessments. The first step was identifying and elaborating the instructional content, or the content of the study, stated by the respondents along with its specification in the objective or indicator formulations. The second step was comparing the content of the assessment with the statement of instructional content to see how content validity was established in the assessment.

Time-specified) of objective formulation. The analysis of the objectives was not intended to measure the respondents’ ability to formulate objectives; rather, the writer intended to make the objectives clear and acceptable in order to help determine the instructional content. It was also intended to make the clear and acceptable objective formulations the basis of the analysis of the content validity of the assessment.

1. Determining the Instructional Content

The first step in finding out how the respondents established content validity in their assessments was determining the instructional content. It was important to understand the instructional content stated by the respondents in their lesson plans. The instructional content was derived from the Competence Standard and Basic Competence formulated by the government in the national curriculum. The content was then specified into specific objectives, which became the basis for making the assessment. Therefore, the writer analyzed the respondents’ instructional content along with the formulation of the objectives, since the content of the assessment was compared with the instructional content and the objective formulations. The analysis of the objective formulations was done by applying the S.M.A.R.T. and clarity principles of objective formulation.

formulations varied, although some of the respondents derived the content from the same Basic Competence. This was because the Competence Standard and Basic Competence were formulated so broadly that the respondents had to determine the specific content to be learnt by the students.

After examining the respondents’ lesson plans, the writer found that the content was well formulated but that some of the objective formulations needed elaboration and specification, since some objectives were unclear and ambiguous. The objectives formulated by the respondents were generally simple, reasonable, measurable, and action-oriented, but they lacked time specifications and needed further elaboration to make them clearer.

The writer found that the objective formulations did not state the learning outcomes but only the learning activities. Therefore, the writer chose another part of the lesson plan which stated the learning outcomes, namely the indicators. If the writer had used the objective formulations which only stated the learning activities, the assessment would have had high content validity relative to the objective formulations but poor validity relative to the main instructional content, and vice versa. Although the writer did not measure the respondents’ ability to formulate instructional objectives, the writer suggested that they needed to improve their ability to formulate objectives derived from the main instructional content.

respondent formulated that “at the end of the instruction, the students are able to produce recount text (in the form of biography) using past tense correctly and well.” The formulation of the objective clearly stated the outcomes the students should obtain after they learnt about the theory of recount text and past tense.

Another example of objective formulation came from the second respondent. The respondent stated that “the students are able to identify the meaning of some verbs.” The objective was formulated to specify the content of understanding the function of past tense for reading and writing skills. The objective was simple, measurable, and action-oriented. Yet, the objective was too general in view of the content of understanding the function of past tense for reading and writing skills. The respondent should have specified the verbs. Since the instruction dealt with past tense, the verbs would be in the past form (verb two). Providing the students with some verbs in past form and asking them to identify and mention the meaning of those verbs would help the students understand past tense. Although the objective formulation clearly stated the outcome the students should possess after the instruction, the objective did not match the content skills involved in the instruction. Identifying the meaning of some verbs did not clearly help the students learn reading and writing.

two of them were tense-based content, and one of them was expression-based and tense-based content.

a. Text-based Content

Text-based content means that the respondents’ content formulation was based on kinds of English text. The respondents wanted to teach English through the understanding and production of some kinds of English texts. In this study, the writer found that the respondents dealt with three kinds of text, namely recount, narrative, and report texts. The respondents intended that the students would learn English through the understanding and production of the specific text. Reading and writing were the content skills involved. The students were required to read the text when they wanted to understand it or find specific meaning and information in it. The students also had to write when they were asked to produce a specific text with specific or various themes.

One example of a content statement for text-based content was “recount text production.” The content statement specified that the students would learn English through understanding and producing recount text. The content, as it should be, was general and had to be specified in the form of an objective. The objective formulation for the text-based content stated above was that “at the end of the instruction, the students are able to produce recount text (in the form of biography) using past tense correctly and well.”

questions related to the text, deciding whether the statements given were true or false based on the text given, arranging jumbled paragraphs to create an ordered text, and identifying the generic structure of the text. When assessing students’ ability to produce the text, the respondents directly asked their students to write the specific text with or without a specific topic or information.

b. Expression-based Content

Expression-based content means that the respondents wanted their students to learn to apply some English expressions used in daily life contexts. In this study, the writer found two kinds of expressions taught by the respondents to their students, namely expressions of introduction and expressions of invitation. The respondents used those expressions to teach listening and speaking. The respondents used several procedures to teach English through the expressions of introduction and invitation. The students were asked to read or listen to some dialogues which contained the expressions of introduction and invitation. They had to identify the expressions and responses used in the dialogues and, at the end, they were asked to produce a dialogue which included the expressions learned. The respondents made the expressions a medium for the students to practice, not only something to learn theoretically. The procedures would make the students familiar with the expressions, and the students were expected to be able to apply the expressions in their daily life.

c. Tense-based Content

Tense-based content means that the respondents wanted their students to understand the pattern and function of a specific tense in English. In this study, the writer found only one tense taught, namely simple past tense. This tense was in fact taught together with the explanation of recount text, but two respondents allocated specific time for the students to gain a deeper understanding of the pattern and function of past tense in formulating English sentences.

2. Content Validity of the Respondents’ Documents

The respondents’ assessments varied in their content validity. All of the assessments were valid in terms of content validity. Yet, they differed in their degree of validity. They possessed high, moderate, or low content validity.

a. High Content Validity Assessment

High content validity could be achieved if the intended result of the assessment clearly represented and measured the instructional content or the outcomes of the instruction. The intended result of the assessment should represent the students performing what the teachers wanted them to perform after the instruction. This degree of content validity could be achieved if the instructional content and its specification in the objectives were formulated well and clearly, so that the assessment prepared could also easily represent the outcomes to be achieved. It could also be achieved if the assessment was used optimally to achieve the outcomes of the instruction although the objectives were not appropriately formulated.

using past tense correctly and well. Since the outcome of the instruction was formulated well and appropriately, the assessment could also be prepared well.

The third respondent prepared an assessment procedure which directly asked the students to write a recount text. The kind of recount text was a biography. The outcome was clear: the biography should use simple past tense and be produced correctly and well. Although the outcome was clear, the word “well” as the standard for the biography production had to be elaborated by the respondent, since it could be interpreted in different ways.

The respondent also prepared a scoring rubric to measure the biographies produced by the students. Presumably, the rubric explained what counted as a correct and well-written product, so that the students knew what kind of product they were expected to make. Unfortunately, the respondent did not provide the scoring rubric in her documents.

exemplification, and third, the students developed and used a battery of writing strategies. After analyzing the documents prepared by the third respondent, the writer could conclude that her assessment possessed high content validity.

b. Moderate Content Validity Assessment

An assessment possessed moderate content validity if its intended result or content could fairly represent the intended performance the students should be able to do after the instruction. An assessment could also be regarded as having moderate content validity if it could represent the main content clearly but could not really represent the content skill the students should perform after the instruction.