THE IMPLEMENTATION OF EMPIRICALLY DERIVED, BINARY-CHOICE, BOUNDARY DEFINITION (EBB) SCALE AS A RATING SCALE DESCRIPTOR FOR WRITING ASSESSMENT IN SMAN 1 WRINGINANOM

THESIS

Submitted in partial fulfillment of the requirement for the degree of Sarjana Pendidikan (S. Pd) in Teaching English

By:

Ainun Munfadhilah

D35212047

ENGLISH TEACHER EDUCATION DEPARTMENT

FACULTY OF TARBIYAH AND TEACHER TRAINING

SUNAN AMPEL STATE ISLAMIC UNIVERSITY


ABSTRACT

Munfadhilah, Ainun. (2017). The Implementation of Empirically Derived, Binary-Choice, Boundary Definition (EBB) Scale as a Rating Scale Descriptor for Writing Assessment in SMAN 1 Wringinanom. A Thesis. English Teacher Education Department, Faculty of Education and Teacher Training, State Islamic University of Sunan Ampel, Surabaya. Advisor: Dra. Hj. Arba’iyah YS., MA

Key Words: Empirically derived, Binary-choice, Boundary definition (EBB) scale, Writing Assessment, EBB scale constructed Rubric, Rubric.

Writing assessment is a teacher's way of examining whether the teaching goal has been met, that is, whether or not students can apply the lesson. The teacher's goal is expressed in the criteria used to assess the writing product, and a rubric is the tool for measuring students' performance. In general, the rubrics in use are not appropriate for the students: they do not represent all the levels present in the class, because teachers do not make their own rubrics covering all the abilities in one class. A rubric that the teacher creates to fit the students' ability leads to more objective assessment. The EBB scale is a rating scale descriptor for productive skills that is constructed on the basis of students' performance levels. This research describes how a rubric for writing is constructed with the EBB scale. It uses a descriptive qualitative method. The subjects were the students of X MIPA 4 at SMAN 1 Wringinanom, who took a writing assessment and were then divided, based on their scores, into two groups: better and lower performance. Based on document analysis, direct observation, and interview, the researcher formulated the aspects and descriptors and assigned points. There are six steps in constructing an EBB scale for an assessment. The resulting rubric has the following features: (a) the aspects of assessment are content, communicative effectiveness, grammar and vocabulary, and mechanics; (b) there are six descriptors for each aspect, representing all student performance levels; and (c) there are six points for each descriptor. The rubric constructed with the EBB scale was implemented twice. The first and second implementations showed no difference in student performance levels; there were seven performance levels. It is suggested that teachers make the rating scale themselves, because a rating scale made by the teacher will be appropriate to the students. It will be useful for the teacher while

C. Objective of The Study

D. Significance of The Study

E. Scope and Limitation

F. Definition of Key Term

CHAPTER II : REVIEW OF RELATED LITERATURE

A. Theoretical Foundation

1. Rating Scale

2. Writing

3. Assessment

4. Rating Scale in Writing Assessment

5. Empirically derived, Binary-choice, Boundary definition (EBB) scale

6. EBB scale as a Rating Scale Descriptor for Writing Assessment


F. Data Collection Technique

G. Research Instrument

H. Data Analysis Technique

CHAPTER IV : RESULT AND DISCUSSION

A. Research Finding

1. The Development of Empirically derived, Binary-choice, Boundary definition Scale

2. The Implementation of Empirically derived, Binary-choice, Boundary definition Scale

B. Discussion

1. The Development of Empirically derived, Binary-choice, Boundary definition Scale

2. The Implementation of Empirically derived, Binary-choice, Boundary definition Scale

CHAPTER V : CONCLUSION AND SUGGESTION

A. Conclusion

B. Discussion

REFERENCES

xii

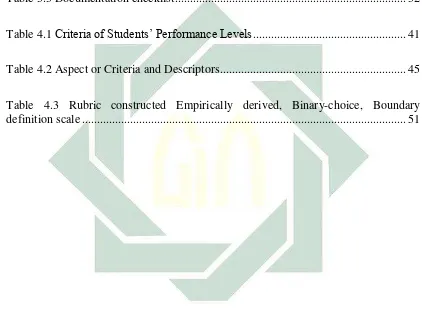

LIST OF TABLE

Table 3.1 Data of Component In-depth Interview

Table 3.2 Observation checklist

Table 3.3 Documentation checklist

Table 4.1 Criteria of Students’ Performance Levels

Table 4.2 Aspect or Criteria and Descriptors


LIST OF DIAGRAM

Diagram 4.1 The Result of First Assessment use EBB scale


LIST OF APPENDICES

Appendix A: Student’s Score

Appendix B: Teacher’s Rubric

Appendix C: EBB scale Construction

Appendix D: First Implementation of Rubric Constructed EBB scale

Appendix E: Second Implementation of Rubric Constructed EBB scale

Appendix F: Transcript Field note


CHAPTER I

INTRODUCTION

This chapter presents, in order, the background of the study, the research questions, the objectives of the study, the significance of the study, the scope and limitation, and the definition of key terms. Each section is presented as follows:

A. Background of the Study

Writing is one of the language skills and is considered the most difficult. Hammad states that mastering writing is the most difficult task for first and foreign language learners. It is a complicated process, since it involves a series of forward and backward movements between the writer's ideas and the written text.1

In writing, it is important to attend to both the grammar and the message of the text. Writing is not only about putting ideas into written form but also about arranging words into good sentences with regard to grammatical features. Several requirements have to be fulfilled to create a high-quality text. Yanti states that writing is complex and difficult to teach and to learn, requiring mastery not only of grammatical and rhetorical devices but also of conceptual and judgmental elements. It can be concluded that composing a good English essay requires mastery of the language as well as a good understanding of grammar and its organization.2 These factors make writing a difficult language competence.

1 Hammad Enas Abdullah, “Palestinian University Students' Problems with EFL Essay Writing in an

Dealing with this case, EFL lecturers and students face certain problems in teaching and learning writing. As many teachers of English have noted, acquiring writing skill seems to be more laborious and demanding than acquiring the other language skills.3 Therefore, writing is the last skill mastered by students who are learning the language.

Writing is a fundamental aspect of academic literacy and communicative competence in the current educated world, according to Behizadeh and Engelhard.4 In education, writing is one of the language skills that students must master.5 The examinations that an institution or school gives to measure students' abilities at the end of a course of study are usually written tests. If students have not mastered writing, it will be hard for them to write grammatically correct answers. In language learning, writing and speaking are crucial for measuring a student's ability, that is, for assessment.

2 Yanti Nurhayat, 2012. Graduating Paper: “The analysis on the use of cohesive devices in English writing essay among the seventh semester students of English Department of STAIN Salatiga in the Academic Year of 2011/2012” (STAIN Salatiga, 2012), 14.

3 Abdel Hamid Ahmed, “Students’ problems with cohesion and coherence in EFL essay writing in Egypt: Different perspectives”… p. 211.

4 Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh, “Problematizing Rating Scales in EFL Academic Writing Assessment: Voices from Iranian Context”, English Language Teaching, vol. 5, no. 8 (2012), p. 76, http://www.ccsenet.org/journal/index.php/elt/article/view/18617, accessed 7 Jun 2016.

5 Fadilah Nurul, 2014. “An analysis of using cohesive devices in writing narrative text at the second

As for assessment, writing assessment is an evaluation of students' creativity in writing, given by the teacher to measure students' ability.6

Speaking tends to be spontaneous, whereas writing takes more time. Teachers often give assessment in written form, since writing activities develop students' writing ability: through them, students can express their ideas and practise making correct sentences. Writing assignments usually take the form of essays whose topics and text types are determined by the teacher.

When teachers assess students, they apply certain criteria. In writing assignments, two aspects are usually assessed: grammatical accuracy and communicative effectiveness. Grammatical accuracy measures students' ability to apply grammar in their writing, while communicative effectiveness measures how appropriately the language conveys students' ideas in written form.

In addition to these two aspects, which are critical to ensuring the reliability and validity of the teacher's assessment, the teacher should also pay attention to the students' ability, which determines their level of language proficiency and knowledge and is affected by whether or not they have learned through a course. Bachman and Savignon, Fulcher, and Matthews set out several criteria for reliability and validity. One reliability issue is that standards for grading shift as students improve during a course: the teacher unconsciously raises standards as the level of student ability increases.7 In most cases, a teacher in a higher grade will give the same average rating to her class as a teacher at a lower grade who uses the same rating scale. Given these factors, teachers should make an assessment rubric that is valid and reliable. A rubric can represent the teacher's assessment criteria.

6 Graham and Harris, 1993. Paragraph-Academic writing to essay. Macmillan.

Usually, teachers set some criteria for scoring students' work but do not make a rating scale descriptor. They assume that rating students with a rating scale descriptor is difficult, and so they rate without one. Upshur and Turner propose procedures for developing rating scales that can help address the issues of reliability and validity.8

A rating scale helps the teacher describe student ability at each level within the same grade. Rating students with a scale developed from the students' own levels is fair, because the descriptors are based on the students' ability. To make such a scale, the teacher needs to know the students' abilities and levels in the class. Skehan explains that criteria for evaluating performance that are more relevant to language development and use are needed. Tasks and scoring procedures need to be designed to accommodate the naturalness of language use. Assessing such language effectively is more demanding than objective scoring.9

7 John A. Upshur and Carolyn E. Turner, “Constructing rating scales for second language tests”, ELT journal, vol. 49, no. 1 (1995), p. 5, accessed 31 May 2016.

8 John A. Upshur and Carolyn E. Turner, “Constructing rating scales for second language tests”… p. 5

The rating a teacher gives reflects the teacher's perception of the student's attitude and ability in class. Teachers do not pay enough attention to the factors that influence a student's rating, such as the student's condition, ability, and proficiency level. Modern textbooks on language testing provide many examples of rating scales. These take the form of:

“. . . a series of short descriptions of different levels of language ability. The purpose of the scale is to describe briefly what the typical learner at each level can do, so that it is easier for the assessor to decide what level or score to give each learner in a test. The rating scale therefore offers the assessor a series of prepared descriptions, and she then picks the one which best fits each learner (Underhill 1987: 98).”10

The empirically derived, binary-choice, boundary-definition scale, called the EBB scale, is a rating scale descriptor for productive skills. The EBB scale helps teachers construct rating scales, especially for speaking and writing. This scoring method focuses on communicative effectiveness and grammatical accuracy and was constructed with a storytelling activity. A rating scale adopted for a storytelling task in speaking or writing can also be used in other speaking or writing assessments that have the same purpose.

9 Skehan, P., “Progress in language testing: the 1990s”, in J. C. Alderson and B. North (eds.), Language Testing in the 1990s: The Communicative Legacy. London: Modern English Publications and The British Council, 1991.

10


In EBB scale development it is essential to have samples of language (writing or speaking) generated from specific language tasks, and a set of expert judges who will make decisions about the comparative merit of sets of samples.11 According to Hamid, writing is the most challenging skill for students and teachers.12 In other words, teachers should teach how to arrange words into good sentences using appropriate tenses. The teacher teaches how to write correctly, from constructing words into sentences to compiling sentences into paragraphs. Writing is not as easy as the other language skills, as it requires many ideas to develop. For that reason, the researcher chose writing assessment for constructing the EBB scale.

11 Glenn Fulcher, Practical language testing (London: Hodder Education, 2010), p. 211.

12 Abdel Hamid Ahmed, “Students’ problems with cohesion and coherence in EFL essay writing in

The procedure for developing a rating scale descriptor is as follows. First, eight student performances were selected from the set to be rated. These should represent approximately the full range of ability in the total set. Second, each of the six members of the research team individually divided the set of eight performances into the four better and four poorer. This was done impressionistically. Third, the team discussed their dichotomous rankings and reconciled any differences. They then formulated the simplest criterial question that would allow them to classify performances as ‘upper-half’ or ‘lower-half’ according to the attribute that they were rating. Then, working with the four upper-half performances, the team members individually rated each of them as ‘6’, ‘5’, or ‘4’. The procedure requires that at least one sample should be rated as ‘6’; at least two numerical ratings must be used. Therefore, at least two of the four samples receive the same rating. This scoring was also done impressionistically. Next, rankings were discussed and reconciled. Simple criterial questions were formulated, first to distinguish level 6 performances from level 4 and 5 performances, and then level 5 performances from level 4 performances. Last, steps 4 and 5 were repeated for the lower-half performances.13
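The branching procedure described above can be read as a small decision tree. The following Python sketch is illustrative only: the question keys (`upper_half`, `is_level_6`, and so on) are hypothetical placeholders for the criterial questions that a rating team would formulate, and the lower-half branch assumes that levels 3, 2, and 1 mirror levels 6, 5, and 4, as the repetition of steps 4 and 5 suggests.

```python
from typing import Dict

# A rater's yes/no answers, keyed by hypothetical question identifiers.
Answers = Dict[str, bool]


def ebb_score(answers: Answers) -> int:
    """Return a score from 1 to 6 by following binary criterial questions.

    Only the questions on the path actually taken are looked up, so a rater
    never has to answer a question that belongs to the other branch.
    """
    if answers["upper_half"]:
        # Upper half: first separate level 6 from levels 5 and 4.
        if answers["is_level_6"]:
            return 6
        # Then separate level 5 from level 4.
        return 5 if answers["is_level_5"] else 4
    # Lower half (assumed to mirror the upper half): separate 3 from 2 and 1.
    if answers["is_level_3"]:
        return 3
    # Then separate level 2 from level 1.
    return 2 if answers["is_level_2"] else 1


if __name__ == "__main__":
    sample = {"upper_half": True, "is_level_6": False, "is_level_5": True}
    print(ebb_score(sample))
```

In this sketch, levels 6 and 3 are reached after two answers, while the other levels need a third, so a rater never answers more than three questions for one performance.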

Rubrics are very useful in assessment, but they are rarely used in schools. A teacher usually has his or her own criteria for assessing students. The majority of the raters in the study by Ghanbari, Barati, and Moinzadeh believed that native scales have to be appropriated to the context before application. In their view, unmediated application of native rating scales would surface a hidden conflict between the assumptions behind these scales on the one hand and the realities of the local context on the other hand.14 Often, the rubrics used by teachers do not represent all the levels present in the class, because teachers do not make their own rubrics covering all the abilities in one class. An inappropriate rubric does not give a valid assessment. A rubric that the teacher creates to fit the students' ability leads to more objective assessment.

13 John A. Upshur and Carolyn E. Turner, “Constructing rating scales for second language tests”, ELT journal, vol. 49, no. 1 (1995), p. 7, accessed 31 May 2016.

14 Ghanbari, Barati, and Moinzadeh, “Problematizing Rating Scales in EFL Academic Writing

SMAN 1 Wringinanom is the only public school in its region, but its assessment of foreign language subjects is less valid and reliable. Assessment in foreign language subjects often uses a rubric that is not appropriate to the ability of the students in the classroom, so the students rarely get a perfect score in several aspects of the language. Assessing without a rubric can also lead to less objective assessment of students. For these reasons, the researcher chose this school as the research site.

B. Research Question

1. How is the development of empirically-derived, binary-choice, boundary-definition (EBB) scale for rating scale descriptor constructed for writing assessment?

2. How is the implementation of empirically derived, binary-choice, boundary definition (EBB) scale as a rating scale descriptor for writing assessment?

C. Objective of the Study

This research is intended to:

1. Illustrate the development of empirically-derived, binary-choice, boundary-definition (EBB) scale for rating scale descriptor constructed for writing assessment.


D. Significance of the Study

The results of the study are expected to benefit teachers, the researcher, and other researchers:

1. For English Teacher

The teacher is able to make a rubric for the class based on the students' level and ability. The students at each level within the same grade will be described in the rating scale descriptor. The teacher can also assess students more objectively. Using the EBB scale makes assessment more valid and detailed.

2. For the School

The school will get more objective and valid assessment. Students do not have to worry that the teacher will assess them subjectively, because teachers use a rubric that covers all the levels in the class and is more detailed.

E. Scope and Limitation

In contrast, this study does not cover how students improve their writing skill, nor the environmental aspects that support such improvement, such as media, class atmosphere, and any other antecedent that may influence students’ ability to write at the time.

F. Definition of Key Term

Several key terms used in this research need to be defined to provide a clearer understanding of the concepts.

1. Empirically derived, Binary-choice, Boundary definition (EBB) scale

EBB scales are rating scale descriptors for productive skills that focus on grammatical accuracy and communicative effectiveness. The EBB scale was constructed with a storytelling task and developed into rating scale descriptors. Fulcher states that in EBB development it is essential to have samples of language (writing or speaking) generated from specific language tasks, and a set of expert judges who will make decisions about the comparative merit of sets of samples. An EBB has to be developed for each task type in a speaking or writing test.15

2. Writing assessment

Writing assessment is an evaluation of students’ creativity in writing, given by the teacher to measure students’ ability.16 The teacher's goal is expressed in the criteria used to assess the writing product. Writing assessment is a teacher’s way of examining whether that goal has been met, that is, whether or not students can apply the lesson. In this research, writing assessment is the medium for implementing the EBB scale.

15 Glenn Fulcher, Practical language testing (London: Hodder Education, 2010), p. 211.

16


CHAPTER II

REVIEW OF RELATED LITERATURE

This chapter presents the theoretical foundation: the definitions of rating scale, writing, and assessment. It continues with an explanation of rating scales in writing assessment, the EBB scale, and the EBB scale as a rating scale descriptor in writing assessment. It closes with previous studies related to this research.

A. Theoretical Foundation

1. Rating Scale

In language testing, McNamara states that a rating scale is a series of ascending descriptions of salient features of performance at each language level. A language performance can be assessed by examining either the whole impression of the performance or the performance according to different criteria. In this regard, there are two types of rating scales: holistic scales which describe learners’ performances as a whole (e.g. the

2. Writing

Writing is a fundamental aspect of academic literacy and communicative competence in the current educated world.1 According to Hammad, writing is a form of language output that gives concrete form to language input. To produce an essay, the author should have an idea and express it with correct grammar and mechanics, relevant content, and communicative language.

Furthermore, writing is a thinking process which involves generating ideas, composing these ideas in sentences and paragraphs, and finally revising the ideas and paragraphs composed. Good writing also requires knowledge of grammatical rules, lexical devices, and logical ties.2 Moreover, White and Arndt define 'writing' as "a form of problem-solving which involves such processes as generating ideas, discovering a voice with which to write, planning, goal setting, monitoring and evaluating what is going to be written, and searching for language with which to express exact meanings".

3. Assessment

Traditionally, the word “assessment” has referred to the way teachers assign letter grades on tests and quizzes. Assessment has also been used as a way to discuss teaching effectiveness. However, assessment is now taking on a new meaning. It should be a “dynamic process that continuously yields information about student progress toward the achievement of learning goals”.3 William, as cited in Cornard, states that in order for assessment to be considered authentic, it must focus on whether or not students can apply their learning to the appropriate situations.4

1 Nadia Behizadeh and George Engelhard, “Historical view of the influences of measurement and writing theories on the practice of writing assessment in the United States”, Assessing Writing, vol. 16, no. 3 (2011), p. 190, accessed 27 Jul 2016.

2 Hammad Enas Abdullah, “Palestinian University Students' Problems with EFL Essay Writing in

Assessment is used by the teacher to measure student ability at the end of a lesson. The teacher gives an assessment to elicit a product of the students’ input. The assessment corresponds to the students’ creativity in applying the lesson. The teacher’s goals are visible in the assessment criteria given to students, which show whether or not students succeed in using their knowledge of the lesson.

4. Rating Scale in Writing Assessment

The conceptualization of the rating scale as part of the test construct has opened a new horizon for examining how rating scales function in performance assessment. Writing assessment, as a kind of performance assessment, is affected by the quality of the rating scale used. To a large extent, the common thread of arguments on rating scales converges on the important issue of construct validity. Weigle, summarizing McNamara, stresses the importance of the rating scale for the quality of assessment and its centrality to the valid measurement of the writing construct:

3 Garfield, G.B., “Beyond testing and grading: using assessment to improve student learning”, Journal of Statistics Education, 2(1), 1994. http://www.amstat.org/publications/jse/v2n1/garfield.html

4 Nicole Williams, Reflective journal writing as an alternative assessment (2008), p. 2,

The scale that is used in assessing performance tasks such as writing tests represents, implicitly or explicitly, the theoretical basis upon which the test is founded; that is, it embodies the test (or scale) developer’s notion of what skills or abilities are being measured by the test. For this reason, the development of a scale (or set of scales) and the descriptors for each scale level are of critical importance for the validity of the assessment.5

5. Empirically derived, Binary-choice, Boundary definition (EBB) scale

The EBB scale is a rating scale descriptor used for assessment with a specific purpose, such as the assessment of productive skills. An EBB has to be developed for each task type in a speaking or writing test.6 Fulcher states that in EBB development it is essential to have samples of language (writing or speaking) generated from specific language tasks, and a set of expert judges who will make decisions about the comparative merit of sets of samples.7

What distinguishes the empirically derived, binary-choice, boundary definition scales (EBBs) is that the scale, and hence the cognitive process that raters must follow, is set forth as a series of repeated and branching binary decisions. EBBs are constructed by rank ordering performances on test tasks and then identifying key features that judges use to separate the performances into adjacent levels. EBBs represent an innovation in the logic of how raters judge performance with reference to performance data in specific contexts of language use. EBBs may not contain the rich description of the previous method, but they are relatively easy to use in real-time rating, and do not place a heavy burden on the memory of the raters.8

5 Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh, “Problematizing Rating Scales in EFL Academic Writing Assessment: Voices from Iranian Context”, English Language Teaching, vol. 5, no. 8 (2012), p. 77, http://www.ccsenet.org/journal/index.php/elt/article/view/18617, accessed 31 May 2016.

6 Glenn Fulcher, Practical language testing (London: Hodder Education, 2010), p. 212.

7

6. EBB scale as a Rating Scale Description in Writing Assessment

EBB scales are developed for productive skills and are constructed on the basis of the ability of students at the same level. According to Upshur and Turner, there are six steps to develop an EBB scale:

a. Eight student performances were selected from the set to be rated. These should represent approximately the full range of ability in the total set.

b. Each of the six members of the research team individually divided the set of eight performances into the four better and four poorer. This was done impressionistically.

c. The team discussed their dichotomous rankings and reconciled any differences. They then formulated the simplest criterial question that would allow them to classify performances as ‘upper-half’ or ‘lower-half’ according to the attribute that they were rating. To construct an eight-point scale, three levels of questions would be needed; the rater would ask three questions to score any writing sample. With a fourth level of questions, a sixteen-point scale can be made.

d. Working with the four upper-half performances, the team members individually rated each of them as ‘6’, ‘5’, or ‘4’. At least one sample should be rated as ‘6’, and at least two numerical ratings must be used. This scoring was also done impressionistically. A six-point scale was chosen for two reasons. First, a scale with an even number of categories allows for a binary split into two equal halves by means of the first criterial question. Secondly, six categories can be readily handled by judges, and provide relatively high reliability of ratings.9

e. Rankings were discussed and reconciled. Simple criterial questions were formulated, first to distinguish level 6 performances from level 4 and 5 performances, and then level 5 performances from level 4 performances.

f. Steps 4 and 5 (d and e above) were repeated for the lower-half performances.10
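The remark that three levels of questions give an eight-point scale and four levels a sixteen-point scale follows from each yes/no question doubling the number of categories that can be told apart. A minimal Python sketch of that arithmetic follows; the function names are illustrative and are not taken from Upshur and Turner.

```python
def scale_points(question_levels: int) -> int:
    """Number of categories a full binary tree of yes/no questions can separate."""
    if question_levels < 0:
        raise ValueError("question_levels must be non-negative")
    return 2 ** question_levels


def questions_needed(points: int) -> int:
    """Smallest depth of a binary question tree that can separate `points` categories."""
    if points < 1:
        raise ValueError("points must be at least 1")
    # For positive integers, (points - 1).bit_length() equals ceil(log2(points)).
    return (points - 1).bit_length()


if __name__ == "__main__":
    for levels in (3, 4):
        print(levels, "levels of questions ->", scale_points(levels), "points")
    print("six-point scale ->", questions_needed(6), "questions at most")
```

For the six-point scale used here, `questions_needed(6)` is 3, which matches the procedure: one question to choose the half, then at most two more within that half.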

B. Previous Study

In this part, the researcher reviewed some previous studies related to this research:

9 Finn, R. H., “Effects of some variations in rating scale characteristics on the means and reliabilities of ratings”. 1972. Educational and Psychological Measurement 32: 255-65.

10 John A. Upshur and Carolyn E. Turner, “Constructing rating scales for second language tests”,

1. First, some research relates to the use of rating scales for assessing productive skills. Hirai and Koizumi analyzed the validity and reliability of two rating scales, comparing the EBB scale with an analytic scale. The research was conducted to find out which rating scale is more valid and reliable for assessing productive skills. It used the Story Retelling Speaking Test (SRST) to verify the two rating scales. The findings show that the EBB scale was slightly superior in reliability and validity for the SRST, whereas the analytic scale excelled in practicality. The results also pointed to revisions of the scales. First, the descriptors of the EBB Grammar & Vocabulary criterion should be modified. Second, the Communicative Efficiency and Content criteria of the EBB scale can be combined to enhance its practicality. Third, the current EBB binary format might be changed into one similar to the analytic scale. Since both scales have strengths, combining the good aspects of these scales may make it possible to create a better scale in the future.11

2. Second is the research by Mu-hsuan Chou entitled “Teacher Interpretation of Test Scores and Feedback to Students in EFL Classrooms: A Comparison of Two Rating Methods”. The research examined how teachers interpret scores in speaking assessment and how students benefit from feedback under two rating methods. It used a rating scale and a rating checklist in oral tests based on role-play and simulation tasks to compare teachers’ interpretations and the feedback given to students. The rating scale descriptors adopted in that research were the empirically derived, binary-choice, boundary definition (EBB) scale and the Performance Decision Tree (PDT) scale. The findings show that the rating scale is more detailed in its descriptions and helps students focus better than the rating checklist. The criteria in the performance data-driven rating scale and the rating checklist were the same; the fifteen teachers reported differing interpretations of student performance on the role-play/simulation tasks. Eleven out of fifteen (73.3%) considered that the rating scale, with its more detailed descriptors, offered more comprehensive information about students’ speaking ability and skills than the rating checklist. Seven said that the scale was effective in terms of helping them decide whether their students were able to use what they had learned in class and whether they used it correctly or not. Four teachers reported that they tended to focus more on what had not been done by the students in the task than pay attention to what had been done, so the rating scale with detailed descriptors helped them focus on positive aspects of student performance.12

11 Hirai Akiyo and Koizumi Rie, “Validation of the EBB scale: A Case of the Story Retelling

3. Third is “Problematizing Rating Scales in EFL Academic Writing Assessment: Voices from Iranian Context” by Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh. The research was conducted to find out the effectiveness of using a local rating scale. It compared assessment using a traditional approach with assessment using a rating scale. Questionnaires were given to teachers or test-takers to find out how far the teachers used a local rating scale. The majority of the raters in that study believed that native scales have to be appropriated to the context before application. In their view, unmediated application of native rating scales would surface a hidden conflict between the assumptions behind these scales on the one hand and the realities of the local context on the other hand. McNamara strongly questions the validity of rating scales and, by tracking the origin of the scale tradition in the FSI test in the 1950s, shows how successive rating scales developed over the last four decades have been heavily influenced by the assumptions, and even the wording, of the original work, while empirical validation has rarely been done.13

12 Mu-hsuan Chou, “Teacher Interpretation of Test Scores and Feedback to Students in EFL

4. The fourth study, also by Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh, is entitled “Rating Scales Revisited: EFL Writing Assessment Context of Iran under Scrutiny”. The research analyzes the construct of rating scales for writing assessment. Like the previous study, “Problematizing Rating Scale in EFL Academic Writing Assessment: Voice from Iranian Context”, it examines the rating scale from two perspectives, the socio-cognitive and the critical argument. Deficiencies of the present practice of adopting rating scales are revealed, and consequently it is discussed how assessment circles in native countries, by setting rating standards, control and dominate the whole process of writing assessment.14 The findings show that a local rating scale has more valid outcomes, but test takers should always develop it to be more appropriate to students’ writing assessment in Iran. The article notes that, in addition to the long-term obligation of continually examining and testing the evaluation procedures and the assumptions that underlie them, a local rating scale, as it takes into account the particularities of each assessment context, would lead to more valid outcomes.15

5. The fifth is “Benefits from Using Continuous Rating Scales in Online Survey Research” by Horst Treiblmaier and Peter Filzmoser. The research was conducted to examine the effectiveness of using continuous rating scales in online surveys. It compared manual measurement with computer-administered surveys. The major problem with such scales in the past was the inaccurate and tedious data collection process, since with pencil-and-paper surveys it was necessary to manually measure the respondent’s answer on a sheet of paper. The usage of computer-administered surveys renders such problems obsolete, since measurement and data collection can be done without any loss of precision.16 This research applies the robust MCD (Minimum Covariance Determinant) estimator to a data set which consists of variables with a data range from 1 to 100. The results show that outliers (which can occur in the form of “nonsense” data or noise in any survey) severely affect the correlation of the variables. In a next step, the authors illustrate that the application of robust factor analysis, which can be applied only with the 100-point scale, leads to more pronounced results and a higher cumulative explained variance. Given that factor analytic procedures are part of covariance based structural equation modeling, which is frequently used to test theories in IS research, their findings also bear huge significance for such advanced techniques.17

14 Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh, “Rating Scales Revisited: EFL Writing Assessment Context of Iran under Scrutiny”, Language Testing in Asia, vol. 2, no. 1 (2012), p. 83, accessed 31 May 2016.

15 Batoul Ghanbari, Hossein Barati, and Ahmad Moinzadeh, “Rating Scales Revisited: EFL Writing Assessment Context of Iran under Scrutiny”,…p. 97.

16 H. Treiblmaier and P. Filzmoser, 2009. “Benefits from Using Continuous Rating Scale”,

6. The sixth is “Effective Rating Scale Development for Speaking Tests: Performance Decision Trees” by Glenn Fulcher, Fred Davidson, and Jenny Kemp. This research was done to develop a Performance Decision Tree (PDT) scale that is easier to use for speaking tests. Performance Decision Trees are more flexible and do not assume a linear, unidimensional, reified view of how second language learners communicate. They are also pragmatic, focusing as they do upon observable action and performance, while attempting to relate actual performance to communicative competence.18 This research shows that the rating scale for speaking tests can be easier to apply while remaining valid and reliable. A major part of a validity claim for a PDT would rest upon the comprehensiveness of the description upon which it was generated, and the relevance of the assessment categories to current theories of ‘successful interaction’ within a particular context. As such, other PDTs must be developed through a careful analysis of communication in context, and a theoretical description of the constructs that underlie successful interaction, in order to generate context sensitive assessment categories.19

17 H. Treiblmaier and P. Filzmoser, 2009. “Benefits from Using Continuous Rating Scale”, …p. 19

18 G. Fulcher, F. Davidson, and J. Kemp, “Effective rating scale development for speaking tests:

7. The seventh is “Pengembangan Instrument Penilaian Membaca Kelas VII SMP” by Nila Maulani, Imam Agus Basuki, and Bustanul Arifin. This research was done to develop an instrument for reading assessment. The product of this research was an instrument used to assess dictionary reading, fast reading, reading ceremony texts, retelling stories, and giving comments on narrative texts. There were two instruments for assessing dictionary reading: a dictionary reading assignment and an assessment rubric. The instruments for assessing fast reading were a subjective text and answer clues. The instruments for reading ceremony texts were a ceremony text to read and an assessment rubric. The instruments for assessing story retelling were a retelling task and an assessment rubric. The instruments for assessing comments on a text book were a commenting task and an assessment rubric. According to the evaluation expert, the practitioner expert, and the students, the researchers concluded that, in terms of validity, reliability, and practicality, the product was appropriate to be implemented.20

19 G. Fulcher, F. Davidson, and J. Kemp, “Effective rating scale development for speaking tests:

8. The eighth is “Pengembangan Rubrik Penilaian Portofolio Proses Sains Siswa pada Materi Ekosistem di SMPN 1 Wedarijaksa-Pati” by Vera Widyaningsih. This research was done to develop a rubric for science assessment. The writer concluded that the teacher did not use a rubric for each aspect of the lesson; the teacher used a rubric only to assess the cognitive aspect in the LKS. The researcher developed the assessment rubric by identifying the process, constructing the rubric, and implementing the rubric. The results showed no difference in the students’ mean scores among the three classes. The teacher’s and students’ responses to the rubric for assessing science were positive. The rubric for the portfolio assignment on the ecosystem material was found to be appropriate for assessment.21

This research differs from all of those studies because it analyzes the development and implementation of the empirically derived, binary-choice, boundary-definition scale in a senior high school. The procedure for developing rating scale descriptors with this scale is first to give the task to a group of students drawn from the target population, then to take the resulting language samples and ask a group of experts to divide them into two groups: the ‘better’ and the ‘weaker’ performances.22

The researcher uses descriptive qualitative research.

20 N. Maulani, I. Agus Basuki, and B. Arifin, “Pengembangan Instrument Penilaian Membaca Kelas VII SMP”, Universitas Negeri Malang. 2012

21 V. Widyaningsih., Undergraduate thesis; “Pengembangan Rubrik Penilaian Portofolio Proses

CHAPTER III

RESEARCH METHOD

This chapter presents, in order, the research design, research setting, subject of the research, source of data, research procedures, research instrument, data collection technique, and data analysis technique.

A. Research Design

The researcher conducted this study using a qualitative approach to find out two things: the development of the empirically derived, binary-choice, boundary-definition scale, called the EBB scale, and the implementation of the EBB scale. Qualitative research is designed to reveal a target audience’s range of behavior and the perceptions that drive it with reference to specific topics or issues. It uses in-depth studies of small groups of people to guide and support the construction of hypotheses. The results of qualitative research are descriptive rather than predictive.1

1 Qualitative Research Consultants Association, “The place for cutting-edge qualitative research”, http://www.qrca.org/?page=whatisqualresearch accessed on 31 May 2016

Qualitative researchers typically rely on four methods for gathering information: (a) participating in the setting, (b) observing directly, (c) interviewing in-depth and (d) analyzing documents.2 This research was done in three stages:

1. Observing Directly

The researcher conducted observation three times and wrote field notes. The field notes described the students’ condition while the teaching and learning process was being held.

2. Interviewing In-depth

The researcher conducted an in-depth interview to explore information about the students’ level, the teacher’s rubric, and writing assessment.

3. Analyzing Document

The researcher analyzed the students’ writing, the teacher’s rubric, and the students’ writing produced during the implementation of the EBB scale.

B. Research Setting

This research was conducted at SMAN 1 Wringinanom in the X IPA 4 class. SMAN 1 Wringinanom is the only public school in this region. Assessment in the foreign language subject often uses a rubric that is not appropriate to the ability of the students in the classroom. Thus, students rarely get a perfect score in multiple aspects of the language. For this reason, the researcher chose this school as the object of the research.


C. Subject of the Research

The subjects of this research were all students of the X IPA 4 class. There are 23 students in this class: 2 male students and 21 female students. All of the students took a test given by the teacher. Based on the test scores, the students were divided into two groups: upper and lower performances. Six students were taken as the sample for constructing the criteria and hierarchy, because the rubric has six points. The teacher, who carried out the assessment and was interviewed, was also a subject of this research.

D. Source of Data

According to Lexy J. Moleong in his book “Metodologi Penelitian Kualitatif”, as cited by Fuadin in his thesis, primary data in qualitative research are words and actions, and secondary data are documents and others.3 In this research, the primary data were the students’ writing assignments, the students’ scores, the English teacher, and all students of the X IPA 4 class. The secondary data was the teacher’s rubric.

E. Research Procedure

There were several procedures to be followed during this research in order to obtain valid data to answer the research problems. The procedures were:

1. Instrument Preparation

The researcher prepared all the instruments to collect the data. The steps in preparing the instruments were:

a. Making the document analysis checklist to analyze the students’ writing and the teacher’s rubric. The researcher also made an interview guide for interviewing the teacher.

b. Constructing empirically derived, binary choice, boundary definition scale based on the result of document analysis.

c. Developing rating scale descriptors in a new rubric.

d. Deciding on the test for assessment. The test was matched with the students’ material in the book, which covers intention and descriptive text, so the students were already familiar with the text.

e. Assessing the test using the rubric constructed with the EBB scale.

2. Data Collection


researcher implemented it for the second time for writing assessment to part of descriptive text.

3. Data Analysis

The researcher analyzed the data, and made any conclusion as the result of the research.

F. Data Collection Technique

In this research, there were three data collection techniques: observation, in-depth interview, and document analysis.

1. Observation

Observation entails the systematic noting and recording of events, behaviors, and artifacts (objects) in the social setting chosen for study. The observational record is frequently referred to as field notes: detailed, nonjudgmental, concrete descriptions of what has been observed.4 This technique was used to collect data on the students’ behavior, the class environment, and the teaching-learning process. The researcher wrote field notes during observation to describe the information needed.

2. In-depth interview

In-depth interviews are typically much more like conversations than formal events with predetermined response categories. The researcher explores a few general topics to help uncover the participant’s views but otherwise respects how the participant frames and structures the responses.5 Here, the researcher used an in-depth interview to explore detailed information about the students’ level, the teacher’s rubric, and how many times the teacher conducted writing assessment.

3. Document analysis

Document analysis is the process of looking back at the sources of data in existing documents, and it is used to expand the data that have been found. The document data are obtained in the field from books, records, magazines, and even corporate or official documents relating to the research focus.6 The researcher analyzed the students’ writing, the students’ scores, and the teacher’s rubric. The document analysis technique was used to answer both research questions.

G. Research Instrument

The researcher made the instruments used to collect the data for her research.

a. Interview guide

The interview guide in this research consisted of unstructured questions. The interview focused on how many times the teacher conducted writing assessment, the students’ level, and how the teacher assessed writing.

5 Marshall, “Data Collecting Method”… p. 101

6 Susi Ardina, “PENGARUH MATA PELAJARAN AKIDAH AKHLAK TERHADAP PEMBENTUKAN KARAKTER SISWA DI SMP WACHID HASYIM 2 SURABAYA” (UIN

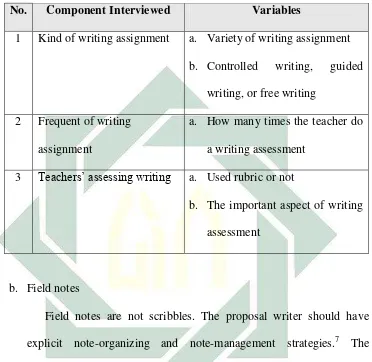

Table 3.1

Data of Component In-depth Interview

| No. | Component Interviewed | Variables |
| --- | --- | --- |
| 1 | Kind of writing assignment | a. Variety of writing assignment; b. Controlled writing, guided writing, or free writing |
| 2 | Frequency of writing assignment | a. How many times the teacher does a writing assessment |
| 3 | Teacher’s writing assessment | a. Used rubric or not; b. The important aspect of writing assessment |

b. Field notes

Field notes are not scribbles. The proposal writer should have explicit note-organizing and note-management strategies.7 The researcher wrote down how the teaching and learning process was held and the students’ behavior, such as whether the students were actively engaged or not.

Table 3.2 Observation checklist

| No. | Component of observation | Variables |
| --- | --- | --- |
| 1 | Students’ behavior | a. Students are actively engaged or not; b. Student enthusiasm |
| 2 | Teaching and learning process | a. How the teacher opens the lesson, gives materials, gives assignments, and closes the lesson |

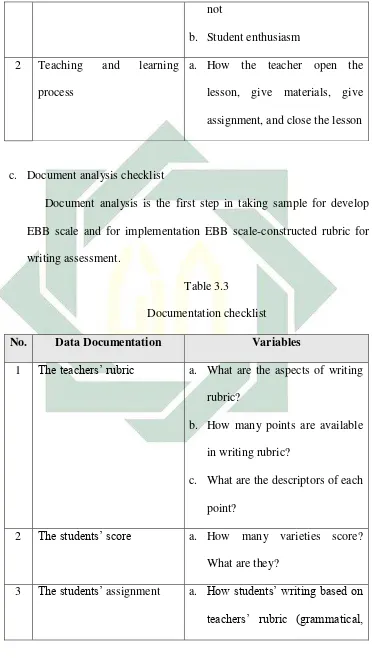

c. Document analysis checklist

Document analysis is the first step in taking samples for developing the EBB scale and for implementing the EBB scale-constructed rubric for writing assessment.

Table 3.3

Documentation checklist

| No. | Data Documentation | Variables |
| --- | --- | --- |
| 1 | The teacher’s rubric | a. What are the aspects of the writing rubric? b. How many points are available in the writing rubric? c. What are the descriptors of each point? |
| 2 | The students’ score | a. How many varieties of score? What are they? |
| 3 | The students’ assignment | a. How students’ writing based on ... vocabulary, content, language use, and mechanism) |
| 4 | The students’ assessment using new rubric | a. How students’ writing based on rubric constructed EBB scale (content, communicative ... |

forth, then described to provide clarity to the reality or reality.8

Data analysis in qualitative research, as explained by Miles and Huberman, has three activities: data reduction, data presentation, and conclusion or verification.9

1. Data reduction

Data reduction is defined as the process of selecting, focusing on, simplifying, abstracting, and transforming the “rough” data that emerged from field notes.10 The researcher reduced the data collected from document analysis, field notes, and the in-depth interview.

8 Ismi Ulin Nafis, Undergraduate (S1) thesis: “Pelaksanaan pembelajaran agama Islam bagi penyandang tuna netra di balai rehabilitasi sosial Distrarastra Pemalang II”. (IAIN Walisongo 2013), 58.

2. Data presentation

Data presentation is a description of the collected information that makes it possible to draw conclusions and take action.11 There were two data presentations in this research:

a. Empirically derived, Binary-choice, Boundary-definition scale

First, give the task to a group of students drawn from the target population. Take the resulting language samples and ask the group of experts to divide them into two groups: the ‘better’ and the ‘weaker’ performances. This first division establishes a ‘boundary’, which has to be defined. The experts are asked to decide what the most important criterion is that defines this boundary; they are asked to write a single question, the answer to which would result in a correct placement of a sample into the upper or lower group.12

10 http://eprints.walisongo.ac.id/1587/3/083111071_Bab3.pdf accessed on 27 June 2016

11 Sudarto, “Metodologi Penelitian Filsafat”…p. 59

The researcher formulated the simplest criteria question that would allow them to classify performances as ‘upper-half’ or ‘lower-half’ according to the attribute that they were rating. Then, working with the four upper-half performances, the team members individually rated each of them as ‘6’, ‘5’, or ‘4’. The procedure requires that at least one sample should be rated as ‘6’; at least two numerical ratings must be used. Therefore, at least two of the four samples receive the same rating. Next, rankings were discussed and reconciled. Last, steps 4 and 5 were repeated for the lower-half performances.13
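The rating rules for the upper-half samples can be checked mechanically. The sketch below is only an illustration, not part of the cited procedure: the function names and the sample ratings are hypothetical. It checks the two stated rules (at least one ‘6’, at least two different ratings) and confirms that any four ratings drawn from three values must contain a repeat.

```python
import itertools
from collections import Counter

ALLOWED_UPPER = (6, 5, 4)  # rating values for upper-half samples, as stated above


def check_upper_half_ratings(ratings):
    """Return a list of rule violations for one rater (hypothetical helper)."""
    problems = []
    if any(r not in ALLOWED_UPPER for r in ratings):
        problems.append("ratings must be 6, 5, or 4")
    if 6 not in ratings:
        problems.append("at least one sample must be rated 6")
    if len(set(ratings)) < 2:
        problems.append("at least two different ratings must be used")
    return problems


def has_shared_rating(ratings):
    """True when at least two samples received the same rating."""
    return max(Counter(ratings).values()) >= 2


# Hypothetical ratings of the four upper-half samples by one rater.
example = [6, 5, 5, 4]
print(check_upper_half_ratings(example))
print(has_shared_rating(example))

# Four samples but only three possible values: a repeat is unavoidable.
always_shared = all(
    has_shared_rating(combo)
    for combo in itertools.product(ALLOWED_UPPER, repeat=4)
)
print(always_shared)
```

The last check is the pigeonhole argument behind the sentence "at least two of the four samples receive the same rating".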

The researcher then divided the students into better and weaker performances. On the assignment the teacher gave, three students obtained the highest score, 94; nine students obtained 88; six students obtained 82; four students obtained 75; and one student obtained the lowest score, 69. The scores thus show five levels of student performance. According to the teacher, the minimum standard score was 78, so the high scores were 94, 88, and 82, and the low scores were 75 and 69 (see Appendix A).
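The division by the minimum standard score can be reproduced with a few lines of code. This is a minimal sketch using the score counts reported above; the function name is hypothetical, and treating a score equal to the minimum as "upper" is an assumption (no student scored exactly 78, so it does not affect the result here).

```python
# Score -> number of students, as reported in the paragraph above.
score_counts = {94: 3, 88: 9, 82: 6, 75: 4, 69: 1}
MINIMUM_SCORE = 78  # minimum standard score given by the teacher


def split_by_minimum(counts, minimum):
    """Split score counts into upper and lower groups (hypothetical helper).

    Assumption: a score equal to the minimum belongs to the upper group.
    """
    upper = {score: n for score, n in counts.items() if score >= minimum}
    lower = {score: n for score, n in counts.items() if score < minimum}
    return upper, lower


upper, lower = split_by_minimum(score_counts, MINIMUM_SCORE)
print(sorted(upper, reverse=True), sum(upper.values()))  # [94, 88, 82] 18
print(sorted(lower, reverse=True), sum(lower.values()))  # [75, 69] 5
print(sum(score_counts.values()))                        # 23
```

With these counts, 18 students fall in the upper group and 5 in the lower group, which adds up to the 23 students of the class.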

The researcher analyzed the students’ writing and the teacher’s rubric to define the criteria and the single questions that form the hierarchy. Then, the researcher assigned points to each level of the hierarchy.

13 John A. Upshur and Carolyn E. Turner, “Constructing rating scales for second language tests”,

b. Rubric

This technique aims to make a rubric whose descriptors are constructed using the Empirically derived, Binary-choice, Boundary-definition scale. The parts of the rubric are as follows:

1) Scores along one axis of the grid and language behavior descriptors inside the grid for what each score means in terms of language performance.

2) Language categories along one axis and scores along the other axis and language behavior descriptors inside the grid for what each score within each category means in terms of language performance.
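The second layout (categories on one axis, scores on the other, descriptors inside the grid) maps naturally onto a nested lookup table. The sketch below is an illustration only. It borrows the grammatical and vocabulary descriptors reported in Chapter IV. The descriptor placed at score 6 is an assumption, and the helper names are hypothetical.

```python
# Category -> score -> descriptor. Descriptors for scores 5 to 1 follow the
# grammatical and vocabulary hierarchy in Chapter IV; the score-6 descriptor
# is assumed to be the first descriptor listed for that aspect.
analytic_rubric = {
    "Grammatical and vocabulary": {
        6: "A variety of sentence patterns with almost no grammatical or lexical errors",
        5: "Few grammatical and lexical errors",
        4: "Verbs marked for incorrect tense and aspect",
        3: "Frequent grammatical and lexical errors, but sentences and fragments are generally well-formed",
        2: "Frequent grammatical and lexical errors, but sentences and fragments are generally well-formed",
        1: "Frequent grammatical and lexical errors",
    },
}


def describe(rubric, category, score):
    """Look up what a score means within a category (hypothetical helper)."""
    return rubric[category][score]


def total_score(scores_by_category):
    """Sum the points a student received across categories."""
    return sum(scores_by_category.values())


def render_grid(rubric):
    """Render the grid as plain text, highest score first."""
    lines = []
    for category, levels in rubric.items():
        lines.append(category)
        for score in sorted(levels, reverse=True):
            lines.append(f"  {score}: {levels[score]}")
    return "\n".join(lines)


print(render_grid(analytic_rubric))
print(describe(analytic_rubric, "Grammatical and vocabulary", 5))
```

The first layout, with scores on a single axis, is the special case in which the table holds only one category.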

3. Conclusion


CHAPTER IV

RESULT AND DISCUSSION

This research aimed to describe the development of the empirically derived, binary-choice, boundary-definition (EBB) scale for constructing rating scale descriptors and the implementation of the EBB scale as a rating scale descriptor for writing assessment. This chapter presents the findings of the study and their discussion. Both are arranged so that the research questions serve as the basis for the arrangement and presentation.

A. Research Finding

1. The Development of Empirically-derived, Binary-choice, Boundary-definition Scale

The results of the observational field notes, the interview, and the document analysis of the English teacher’s rubric, the students’ scores, and their assignments are presented below to answer the first research question. Three observations, an interview session with the English teacher, and document analysis of the English teacher’s rubric and the students’ scores and assignments yielded the data used to develop an empirically derived, binary-choice, boundary-definition scale. There were several steps in developing an EBB scale: the first was classifying the students’ level, the second was creating the criteria and descriptors, third was

The first step in developing an EBB scale was classifying the students’ level. The researcher classified the students into two groups: the upper and lower groups. There were nineteen students in the upper group and thirteen in the lower group. Based on the students’ scores, there were six student performance levels.

The researcher analyzed six students’ assignments representing the whole range of student performance levels. The results of the analysis of the students’ assignments are as follows:

a. Student level 1

b. Student level 2

Students were placed in the second level because they wrote on the topic and most of their sentences were clear. Students wrote five to seven sentences in each paragraph. Students wrote detailed information: the first paragraph was about self-identity, the second paragraph was about family, and the last paragraph was about hobbies and favorite things.

Students wrote the supporting sentences for each main idea clearly. Students made a few grammatical errors in pronouns and prepositions, but the meaning was clear enough. Students showed good vocabulary use and choice. Students placed punctuation correctly, but some capitalization was incorrect.

c. Student level 3


d. Student level 4

Students were placed in the fourth level because their writing showed that they wrote on the topic. Students wrote three to four sentences in each paragraph, about self-identity, family, and hobbies. Nevertheless, there were only two to three supporting sentences in each paragraph, and they were not detailed enough to explain the main idea. There were frequent grammatical errors in the use of pronouns, frequent lexical errors, and errors in punctuation and capitalization that influenced the meaning of the sentences.

e. Student level 5

Students were placed in the fifth level because their writing showed that they wrote on the topic and presented the information clearly. However, their writing was similar to the ideas and supporting sentences the teacher had given as an example, and the information was not detailed. There were only one or two supporting sentences in each paragraph. There were frequent grammatical errors in pronouns and a few lexical errors in some sentences, but the meaning was not obscured. There were a few errors in punctuation and capitalization that influenced the meaning.

f. Student level 6

there was no supporting sentence and none of the paragraphs was finished. Students made frequent grammatical and lexical errors, and the writing did not communicate.

Based on the data above, the researcher determined the students’ levels. The result of classifying the students’ levels is shown in the table below:

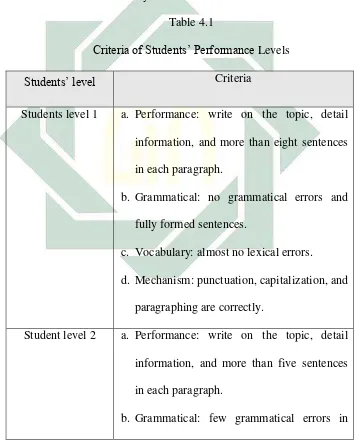

Table 4.1

Criteria of Students’ Performance Levels

| Students’ level | Criteria |
| --- | --- |
| Student level 1 | a. Performance: write on the topic, detailed information, and more than eight sentences in each paragraph. |
| | b. Grammatical: no grammatical errors and fully formed sentences. |
| | c. Vocabulary: almost no lexical errors. |
| | d. Mechanism: punctuation, capitalization, and paragraphing are correct. |
| Student level 2 | a. Performance: write on the topic, detailed information, and more than five sentences in each paragraph. |
| | ... pronouns, but the meaning is clear. |
| | c. Vocabulary: few lexical errors, but the meaning is clear. |
| | d. Mechanism: punctuation and paragraphing are correct, but a few capitalizations are incorrect. |
| Student level 3 | a. Performance: write on the topic, most information clear but not detailed, no more than six sentences in each paragraph. |
| | b. Grammatical: few grammatical errors, but the meaning is not obscured. |
| | c. Vocabulary: few lexical errors, but the meaning is clear. |
| | d. Mechanism: punctuation and capitalization are correct, but there are errors in paragraphing. |
| Student level 4 | a. Performance: write on the topic, information is clear but not detailed, no more than four sentences in each paragraph. |
| | b. Grammatical: a few grammatical errors in pronouns influence the meaning. |
| | ... meaning is clear. |
| | d. Mechanism: frequent errors in placing punctuation and capitalization. |
| Student level 5 | a. Performance: write on the topic, clear information but not detailed, no more than three sentences in each paragraph. |
| | b. Grammatical: frequent grammatical errors in pronouns, and the meaning is confused. |
| | c. Vocabulary: frequent lexical errors, but the meaning is clear enough. |
| | d. Mechanism: frequent errors in placing capitalization and punctuation. |
| Student level 6 | a. Performance: write on the topic, but nothing is clear. |
| | b. Grammatical: frequent grammatical errors and does not communicate. |
| | c. Vocabulary: frequent lexical errors, and the meaning is confused. |

The researcher classified the students’ levels as described above and then created the criteria and descriptors. The criteria and descriptors were created based on the results of the document analysis and the interview with the English teacher. According to the teacher’s rubric, the criteria and descriptors are as follows (see Appendix B):

a. Content

Content measured the ideas. The descriptors are related ideas for “Excellent to very good”, the highest score; occasionally unrelated ideas for “Good”; very often unrelated ideas for “Fair to poor”; and irrelevant ideas for “Very poor”.

b. Organization

Organization measured text organization. The descriptors are effective and well organized for “Excellent to very good”; occasionally ineffective, weak transition and incomplete organization for “Good”; lack of organization for “Fair to poor”; and little or no organization for “Very poor”.

c. Vocabulary

Vocabulary measured vocabulary use. The descriptors are effective word choice for “Excellent to very good”, mostly effective word choice for “Good”, frequent errors in word choice for “Fair to poor”, and mostly

d. Language use

Language use measured grammatical accuracy. The descriptors are grammatically correct for “Excellent to very good”, mostly grammatically correct for “Good”, frequent errors in grammar for “Fair to poor”, and very frequent errors in grammar for “Very poor”.

e. Mechanism

Mechanism measured students’ handwriting. The descriptors are few errors in spelling, punctuation, capitalization, and paragraphing for “Excellent to very good”; occasional errors in spelling, punctuation, capitalization, and paragraphing for “Good”; frequent errors in spelling, punctuation, capitalization, and paragraphing for “Fair to poor”; and dominated by errors in spelling, punctuation, capitalization, and paragraphing for “Very poor”.

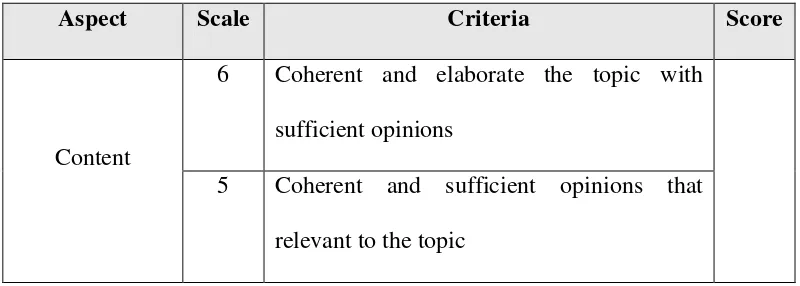

Based on the data above, the researcher concluded that the teacher’s rubric does not have detailed descriptors. The descriptors explain each major aspect without describing in detail what is to be scored. The researcher analyzed the students’ assignments and obtained the criteria for each level. Based on these data, the researcher created the criteria and descriptors. The result is shown below:

Table 4.2

Aspect or Criteria and Descriptors

| Aspect | Descriptors |
| --- | --- |
| Content | ... sufficient opinions |
| | b. Elaborated opinions that are relevant to the topic |
| | c. Coherent and sufficient opinions |
| | d. Inadequate development of the topic |
| | e. Mostly relevant to the topic but not elaborated |
| Grammatical and Vocabulary | a. A variety of sentence patterns with almost no grammatical or lexical errors |
| | b. Few grammatical and lexical errors |
| | c. Verbs marked for incorrect tense and aspect |
| | d. Frequent grammatical and lexical errors |
| | e. Frequent grammatical and lexical errors, but sentences and fragments are generally well-formed |
| Communicative Effectiveness | a. Well organized with logical sequence and fluent expression |
| | b. Ideas clearly supported |
| | c. Loosely organized but main ideas stand out |
| | d. Ideas confused or disconnected |
| | e. Lacks logical sequencing and development |
| Mechanism | ... |
| | b. Few errors of spelling, punctuation, capitalization, and paragraphing but has distinct meaning |
| | c. Few errors of spelling, punctuation, capitalization, and paragraphing |
| | d. Meaning confused or obscured |
| | e. Frequent errors of spelling, punctuation, capitalization, and paragraphing |

To set a distinct separation point for each descriptor, the researcher matched the criteria and hierarchy of the descriptors with the students’ writing. The researcher gave the upper-half performances 6, 5, and 4 points and the lower-half performances 3, 2, and 1 points. The researcher distinguished level 6 performance from level 4 and 5 performance, then level 5 performance from level 4 performance. The researcher worked with the upper half first, then with the lower half. The resulting separation points are shown below (see Appendix C).

a. Grammatical and vocabulary

formed: there was a clear subject, to be or verb, and object, including the complement. The second hierarchy is “Few grammatical and lexical errors”, rated as 5. Students wrote fully formed sentences, but there were some errors in the use of to be or prepositions. Students also made some lexical errors in verbs or nouns, but the meaning was clear. The third hierarchy is “Verbs marked for incorrect tense and aspect”, rated as 4. Students wrote fully formed sentences, but some verbs were used incorrectly: the verb was not appropriate for the tense.

The next hierarchy is “Frequent grammatical and lexical errors, but sentences and fragments are generally well-formed”, rated as 3 and 2. Students wrote fully formed sentences, but there were many mistakes in the use of to be or verbs. The last hierarchy is “Frequent grammatical and lexical errors”, rated as 1. Students wrote sentences with many mistakes: the tenses and verbs used in the sentences were not appropriate. There were also many lexical errors. The mistakes obscured the meaning.

b. Communicative effectiveness

The single factor that distinguishes the upper and lower levels in communicative effectiveness is “Well organized with logical sequence and fluent expression”. The first level is “Ideas clearly supported”, rated as 6. At this point, students wrote with good organization and a logical sequence of ideas. The students’ ideas had clear supporting sentences that make the idea