Prototyping a Low-cost and Adaptive Mandarin Vocal Interface Framework in Smart Home Automation for People with Visual Impairment

Bao-Hua Hu#, $, Yu-Kuen Lai#, Theophilus Wellem*, Chui-Yuan Liu#, Gao-Jun Song$

#Department of Electrical Engineering, *Department of Electronic Engineering

Chung Yuan Christian University, Chungli, Taiwan, 32023

{phhu.2358950725, ylai, love958883, ermanwellem}@cnsrl.cycu.edu.tw

$School of Information Engineering

Nanchang Hangkong University, Nanchang, China, 330063 [email protected]

Abstract—This paper presents an ongoing framework design for a Mandarin vocal-user interface (VUI) control system for smart home automation. The system prototype is built on Jasper, an open-source platform, running on a Raspberry Pi. The goal is to design a low-cost, ubiquitous system that lets people with visual impairment use voice commands to accomplish daily control tasks at home.

Keywords—Vocal-user interface; smart home; automation; Raspberry Pi; voice command

I. INTRODUCTION

According to the World Health Organization (WHO), an estimated 285 million people worldwide are visually impaired [1]. Visual impairment causes functional limitations and reduces autonomy in daily life [2]. Speech recognition technologies are developing rapidly and are being applied to smart home automation [3]-[5]. As a result, people with visual impairment need access to a Voice-User Interface (VUI) system that lets them use voice commands (VC) to control home devices such as TVs and lights [6]-[7].

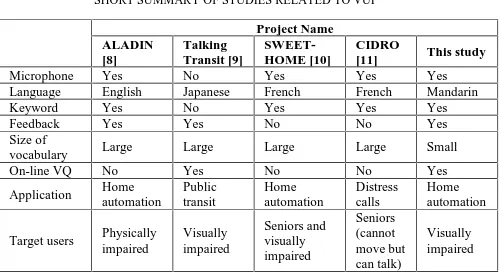

Recent studies [8]-[11] have shown that smart vocal interfaces can provide substantial assistance to people with visual impairment. Gemmeke et al. [8] developed a self-taught assistive vocal interface. It enables people with physical impairment to train the specific words and sentences used for VC themselves. The language used for this vocal interface is English. Kim et al. [9] proposed a public location-aware system that enables visually impaired people to obtain real-time public transit information through a smartphone, using a voice interface in Japanese. A voice-controlled experiment in French on realistic smart home automation for seniors and people with visual impairment is presented in [10]. Vacher et al. [11] developed a French voice-controlled distress call system in a smart home. The system supports fall detection for seniors at home who cannot move but can still talk.

A fair and comprehensive comparison among these studies is difficult because the individual situations of target users are complex. For most VUIs in recent projects, the microphone is still one of the most popular and economical media between the user and the speech recognition system. The VUI needs to be trained to understand instructions based on user inputs. It must then respond with an action and feedback to the user, as in ALADIN [8]. The system in [9] provides real-time on-line voice query (VQ) of public transit information. Overall recognition accuracy decreases as vocabulary size increases. A limited vocabulary therefore helps ensure that only speech relevant to home automation commands is decoded correctly. A short summary of studies related to VUIs used in speech recognition is listed in Table I. Table I shows that none of the surveyed studies provides an automatic on-line VUI in Mandarin, with low-cost and adaptive configurations, for people with visual impairment in the smart home.

TABLE I

SHORT SUMMARY OF STUDIES RELATED TO VUI

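| Project | ALADIN [8] | [9] | [10] | [11] | This work |
|---|---|---|---|---|---|
| Microphone | Yes | No | Yes | Yes | Yes |
| Language | English | Japanese | French | French | Mandarin |
| Keyword | Yes | No | Yes | Yes | Yes |
| Feedback | Yes | Yes | No | No | Yes |
| Size of vocabulary | Large | Large | Large | Large | Small |
| On-line VQ | No | Yes | No | No | Yes |
| Application | Home automation | Public transit | Home automation | Distress calls | Home automation |
| Target users | Physically impaired | Visually impaired | Seniors, visually impaired | Seniors | Visually impaired |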

II. METHODOLOGIES AND FRAMEWORK OVERVIEW

This section describes the block diagram and framework of our system. Raspberry Pi and Jasper were chosen for the system framework because their features and characteristics fit the system requirements.

A. System Block Diagram

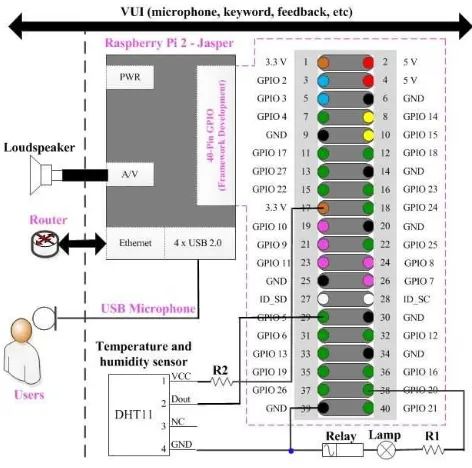

The system block diagram is shown in Fig. 1. Our Mandarin VUI system comprises five parts:

1) a Raspberry Pi 2 with the Jasper platform, which meets performance requirements such as low cost, accuracy, and changeable keywords (vocabularies);
2) a router that provides the network environment for data transmission;
3) a USB microphone that serves as the medium between the user and the speech recognition system;
4) target users with visual impairment; and
5) sensors and home appliances (TV, lamps, air-conditioner, etc.) connected to GPIO ports, which can be controlled by developing new voice-controlled modules.

As shown in Fig. 1, lamps and a temperature and humidity sensor are connected to the GPIO ports. Two new executable files can be added to the Jasper modules on the Raspberry Pi. With these files, the system can switch a relay to turn a lamp on/off and can read the temperature sensor. If necessary, Jasper's original dictionary and language model should be updated with the CMUSphinx lmtool [14].

Fig. 1 System block diagram
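The dictionary and language model are regenerated from a list of domain phrases, so keeping that list small and clean directly controls vocabulary size. The sketch below prepares such a list as a plain-text corpus with one normalized sentence per line. We assume this is the kind of input lmtool accepts.

Several details are illustrative assumptions rather than details from this work:
- the phrase list, taken from the English commands used in Section III;
- upper-casing, which mirrors the upper-case words in Jasper modules;
- the file layout.

Mandarin phrases would first need word segmentation (spaces between words) for the whitespace-based vocabulary count to be meaningful.

```python
from pathlib import Path
from typing import Iterable, List

# Illustrative command phrases (English, as in the Section III experiments).
# A Mandarin deployment would list word-segmented Mandarin phrases instead.
PHRASES = [
    "turn on light", "turn off light", "Turn on  light",
    "time", "what is the time", "tell me the time",
    "weather", "news",
]


def build_corpus(phrases: Iterable[str]) -> List[str]:
    """Normalize case/whitespace and drop duplicates, keeping first-seen order."""
    seen = set()
    lines = []
    for phrase in phrases:
        line = " ".join(phrase.upper().split())
        if line and line not in seen:
            seen.add(line)
            lines.append(line)
    return lines


def vocabulary(lines: Iterable[str]) -> List[str]:
    """Distinct whitespace-separated words in the corpus (the closed vocabulary)."""
    return sorted({word for line in lines for word in line.split()})


def write_corpus(lines: List[str], path: str) -> None:
    """Write one sentence per line, the assumed input format for lmtool."""
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Applied to the phrase list above, the corpus has 7 unique sentences over a 12-word vocabulary. A vocabulary this small is the property the framework relies on for accuracy.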

B. Raspberry Pi and Jasper

The Raspberry Pi [12] is a single-board computer developed by the Raspberry Pi Foundation. The latest version of the board is the Raspberry Pi 2 Model B (shown in Fig. 2). It is equipped with a Broadcom BCM2836 system-on-chip (SoC) with a quad-core ARM Cortex-A7 processor.

Fig. 2 Raspberry Pi 2 model B board

Jasper [13] is an open-source platform for developing always-on, voice-controlled applications. It runs on the Raspberry Pi and integrates the PocketSphinx speech recognition engine [14]. By default, Jasper uses the calling name or keyword “Jasper” and gives real-time feedback (a high or low beep) in English. The common interaction sequence with Jasper is shown in Fig. 3.

Fig. 3 Common interaction sequence with Jasper

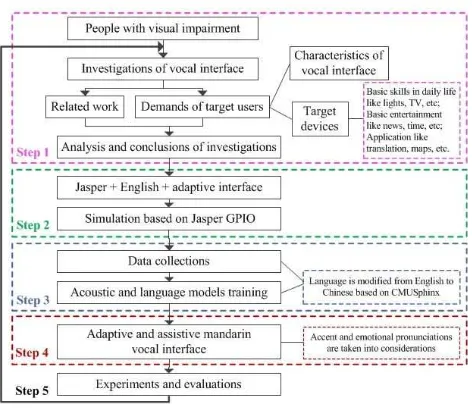
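The sequence in Fig. 3 behaves like a two-state loop: passive listening for the keyword, then a single active command. The sketch below models only that control flow, with text standing in for audio. It is not Jasper's implementation. The exact-match keyword test and the hypothetical `respond` callback (which stands in for whichever module answers the command) are simplifying assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List

WAKE_WORD = "jasper"  # Jasper's default keyword; a Mandarin build would use its own


@dataclass
class InteractionSketch:
    """Two-state model of Fig. 3: passive listening, then one active command."""
    awaiting_command: bool = False
    log: List[str] = field(default_factory=list)

    def hear(self, utterance: str, respond: Callable[[str], str]) -> None:
        text = " ".join(utterance.lower().split())
        if not self.awaiting_command:
            # Passive state: only the keyword is acted on; other speech is ignored.
            if text == WAKE_WORD:
                self.log.append("high beep")  # keyword recognized
                self.awaiting_command = True
            return
        # Active state: one command is captured, acknowledged, and answered.
        self.log.append("low beep")  # command captured
        self.log.append(respond(text))
        self.awaiting_command = False  # return to passive listening
```

For example, the utterances "Jasper" and then "What is the time" produce a high beep, a low beep, and the answer, after which the loop is passive again.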

C. Framework Development

To develop the vocal interface framework, we propose a five-step design methodology, as shown in Fig. 4.

1) Step 1: First, together with a literature review, user-centered design in home automation is investigated to identify the target users' needs (Section I).

2) Step 2: Second, experiments on the Raspberry Pi and

Fig. 4 Steps for framework development

3) Step 3: Third, after simulations based on Jasper in English with an adaptive VUI, we collect the new data essential to this system (Mandarin vocabularies, etc.) and record them in a database. To achieve a higher recognition accuracy rate, only vocabularies closely related to VQ/VC are collected. The vocabulary of our system is therefore smaller than those in [8]-[11], which is expected to yield higher accuracy. After data collection, both the acoustic and language models of Jasper will be trained. This step is needed because Jasper's default language is English and it must learn to recognize Mandarin. This step relies on the CMU Sphinx [14] speech recognition system.

4) Step 4: The system must operate under realistic conditions and accurately recognize Mandarin speech from people with visual impairment. Therefore, accented and emotional pronunciation in Mandarin, as well as noise effects, will be taken into account. This will improve the adaptation of the acoustic and language models and the overall performance of the VUI.

5) Step 5: Finally, experiments and evaluations in a realistic scenario will be carried out to evaluate the performance of the whole system. Volunteers over 18 years old with visual impairment will be invited to try the system. In addition, further performance metrics will be added, for example, word error rate (WER).
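WER is the standard edit-distance metric: WER = (S + D + I) / N. Here S, D, and I are the word substitutions, deletions, and insertions needed to turn the recognized hypothesis into the reference transcript, and N is the number of reference words. A minimal dynamic-programming sketch follows; the function name is our own. Mandarin text is not space-delimited, so it would need word segmentation first. Alternatively, the same routine can be run on individual characters, which gives the character error rate often reported for Mandarin.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Levenshtein distance over words, divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j] (rolling row).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution = prev[j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = prev[j] + 1
            insertion = cur[j - 1] + 1
            cur[j] = min(substitution, deletion, insertion)
        prev = cur
    return prev[-1] / len(ref)
```

For instance, recognizing "turn off light" when the user said "turn on light" is one substitution out of three reference words, a WER of 1/3.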

III. EVALUATION

Our system's goal is to improve quality of life at home for people with visual impairment. Before further investigation, we must first test the VUI performance in English, both for VQ without GPIO-TD and for VC with a GPIO-TD interface. We installed Jasper with its default settings and conducted experiments to understand the capabilities and functionalities to be used in our system.

A. Installation

The Jasper system image can be downloaded from [15], and the essential modules can be installed by following the Jasper documentation [13].

B. Experiments

VQ: In this experiment, we chose three default topics for on-line VQ to Jasper, namely “Time”, “Weather”, and “News”. This interaction is depicted in Fig. 5 (“Time” can be replaced by “Weather” or “News”).

Fig. 5 Dialog with Jasper

VC: For this experiment, a lamp is connected to a GPIO port, and a new module (written in Python) is added that turns the lamp on or off through VC interaction. The interaction sequence for VC is the same as for VQ (see Fig. 3), except that the spoken command is replaced by “turn on/off light”.
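A lamp module of this kind could look roughly like the following sketch. As we understand it, the module layout of the Jasper documentation consists of a `WORDS` list plus `isValid(text)` and `handle(text, mic, profile)` functions. The relay is driven through the RPi.GPIO library.

The following are our assumptions, not details of the experiment:
- the relay pin (BCM 17);
- active-high relay wiring;
- the spoken replies;
- the recording stub used when RPi.GPIO is unavailable off-device.

```python
import re

try:
    import RPi.GPIO as GPIO  # present on Raspberry Pi OS
except ImportError:
    # Off-device fallback (assumption): a stub that records the last level per pin.
    class _StubGPIO:
        BCM, OUT, HIGH, LOW = "BCM", "OUT", 1, 0

        def __init__(self):
            self.levels = {}

        def setwarnings(self, flag):
            pass

        def setmode(self, mode):
            pass

        def setup(self, pin, direction):
            self.levels.setdefault(pin, self.LOW)

        def output(self, pin, level):
            self.levels[pin] = level

    GPIO = _StubGPIO()

RELAY_PIN = 17  # hypothetical BCM pin driving the lamp relay (active-high assumed)

# Words this module contributes to Jasper's dictionary and language model.
WORDS = ["TURN", "ON", "OFF", "LIGHT"]

_initialized = False


def _setup():
    """Configure the relay pin once, on first use."""
    global _initialized
    if not _initialized:
        GPIO.setwarnings(False)
        GPIO.setmode(GPIO.BCM)
        GPIO.setup(RELAY_PIN, GPIO.OUT)
        _initialized = True


def isValid(text):
    """Return True if the transcribed command concerns the light."""
    return bool(re.search(r"\blight\b", text, re.IGNORECASE))


def handle(text, mic, profile):
    """Switch the relay according to the command and confirm by voice."""
    _setup()
    if re.search(r"\boff\b", text, re.IGNORECASE):
        GPIO.output(RELAY_PIN, GPIO.LOW)
        mic.say("Light off.")
    elif re.search(r"\bon\b", text, re.IGNORECASE):
        GPIO.output(RELAY_PIN, GPIO.HIGH)
        mic.say("Light on.")
    else:
        mic.say("Please say turn on light or turn off light.")
```

"off" is checked before "on" only for readability. The word-boundary patterns already keep "turn" or "off" from matching `\bon\b`.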

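Performance metrics: We chose latency and accuracy rate (AR) as the performance metrics in this experiment. Latency is reported as four components, denoted Tcall, Tspeak, Twait, and Tresponse, while AR is defined as

$$\mathrm{AR} = \frac{N_S}{N_T}$$

where $N_S$ (NS in Table II) is the number of successful trials and $N_T$ (NT) is the total number of trials. A trial is successful if the user gets the correct answer from Jasper with VQ. With VC, a trial is successful if the devices connected to GPIO are operated correctly.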

C. Method

Three volunteers were invited to perform trials using VQ and VC (the latter to control target home appliances). The total number of trials per task (NT) is fixed at 20 (Table II). Whenever a VQ/VC trial is recognized and carried out successfully, NS is incremented; otherwise, NS stays the same. Latency is measured with a timer. Every delay time obtained from all volunteers was recorded together with NS.
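The counting procedure above amounts to three pieces of bookkeeping per task: a fixed trial budget NT, a success counter NS, and a list of timed latencies for each stage. A minimal sketch follows. The class and function names are hypothetical. `measure_ms` assumes that each stage can be wrapped as a Python callable; the paper does not specify which timer was used.

```python
import time
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List, Tuple

STAGES = ("Tcall", "Tspeak", "Twait", "Tresponse")


def measure_ms(action: Callable[[], None]) -> float:
    """Time one stage with a high-resolution monotonic clock, in milliseconds."""
    start = time.perf_counter()
    action()
    return (time.perf_counter() - start) * 1000.0


@dataclass
class TaskLog:
    n_total: int        # NT: fixed number of trials for this task
    n_success: int = 0  # NS: incremented only on successful trials
    n_recorded: int = 0
    latencies: Dict[str, List[float]] = field(
        default_factory=lambda: {stage: [] for stage in STAGES})

    def record(self, success: bool, stage_ms: Dict[str, float]) -> None:
        if self.n_recorded >= self.n_total:
            raise ValueError("all NT trials have already been recorded")
        self.n_recorded += 1
        if success:
            self.n_success += 1  # otherwise NS stays the same
        for stage in STAGES:
            self.latencies[stage].append(stage_ms[stage])

    def accuracy_rate(self) -> float:
        return self.n_success / self.n_total  # AR = NS / NT

    def stats(self, stage: str) -> Tuple[float, float, float]:
        xs = self.latencies[stage]
        return mean(xs), min(xs), max(xs)  # (avg, min, max), as in Table II
```

Each task in Table II (e.g., "Time" or "Light_on") would get its own `TaskLog(n_total=20)`.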

D. Results

For each task, every performance metric is averaged over the trials of all volunteers. As seen in the Average row of Table II, the mean latencies across the five tasks are Tcall = 581.45 ms, Tspeak = 720.2 ms, Twait = 866.84 ms, and Tresponse = 1233.21 ms. The average AR is 0.82.

These data show that Jasper performs acceptably for both VQ and VC. The average AR is 0.82, and the average stage latencies range from about 0.58 s to 1.23 s. Together with the adaptive VUI features that Jasper offers, this indicates that Raspberry Pi and Jasper are suitable for our framework.

Fig. 5 dialog: You: “Jasper” / Jasper: high beep / You: “Time” (or “What is the time” or “Tell me the time”) / Jasper: low beep

TABLE II

EXPERIMENT CONDUCTED TO EVALUATE JASPER FUNCTIONALITY

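| Mode | Task | Tcall avg (ms) | Tcall min (ms) | Tcall max (ms) | Tspeak avg (ms) | Tspeak min (ms) | Tspeak max (ms) | Twait avg (ms) | Twait min (ms) | Twait max (ms) | Tresponse avg (ms) | Tresponse min (ms) | Tresponse max (ms) | NS | NT | AR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| VQ | Time | 440.81 | 167 | 738 | 737 | 522 | 936 | 493.44 | 323 | 605 | 686.69 | 480 | 1019 | 16 | 20 | 0.80 |
| VQ | Weather | 558.94 | 244 | 937 | 715.94 | 529 | 904 | 518.53 | 285 | 658 | 1570.35 | 1139 | 2065 | 17 | 20 | 0.85 |
| VQ | News | 821.31 | 288 | 1356 | 829.19 | 637 | 1042 | 1757.69 | 36 | 8457 | 1470.06 | 533 | 3856 | 16 | 20 | 0.80 |
| VC | Light_on | 464.37 | 385 | 876 | 713.49 | 446 | 1043 | 679.15 | 330 | 1134 | 1293.59 | 874 | 3552 | 16 | 20 | 0.80 |
| VC | Light_off | 621.81 | 407 | 1120 | 605.37 | 313 | 1364 | 885.39 | 356 | 1417 | 1145.36 | 667 | 3922 | 17 | 20 | 0.85 |
| Average | | 581.45 | 298.2 | 1005.4 | 720.2 | 489.4 | 1057.8 | 866.84 | 266 | 2454.2 | 1233.21 | 738.6 | 2882.8 | - | - | 0.82 |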
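The Average row of Table II can be checked by taking the unweighted mean of each column over the five tasks. Because every task has NT = 20, AR can equivalently be pooled as total NS over total NT. The sketch below only re-reads the published per-task values; no new measurements are involved.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

STAGES = ("Tcall", "Tspeak", "Twait", "Tresponse")
COLUMNS = [f"{stage} {stat}" for stage in STAGES for stat in ("avg", "min", "max")]

# Per-task values copied from Table II: 12 latency columns (ms), then NS, NT.
ROWS: Dict[str, Sequence[float]] = {
    "Time":      (440.81, 167, 738, 737.00, 522, 936, 493.44, 323, 605, 686.69, 480, 1019, 16, 20),
    "Weather":   (558.94, 244, 937, 715.94, 529, 904, 518.53, 285, 658, 1570.35, 1139, 2065, 17, 20),
    "News":      (821.31, 288, 1356, 829.19, 637, 1042, 1757.69, 36, 8457, 1470.06, 533, 3856, 16, 20),
    "Light_on":  (464.37, 385, 876, 713.49, 446, 1043, 679.15, 330, 1134, 1293.59, 874, 3552, 16, 20),
    "Light_off": (621.81, 407, 1120, 605.37, 313, 1364, 885.39, 356, 1417, 1145.36, 667, 3922, 17, 20),
}


def average_row(rows: Dict[str, Sequence[float]]) -> Tuple[Dict[str, float], float]:
    """Unweighted column means over tasks, plus pooled AR = sum(NS) / sum(NT)."""
    latency = {col: mean([r[i] for r in rows.values()]) for i, col in enumerate(COLUMNS)}
    total_ns = sum(r[12] for r in rows.values())
    total_nt = sum(r[13] for r in rows.values())
    return latency, total_ns / total_nt
```

Running `average_row(ROWS)` gives, for example, a mean Tresponse of 1233.21 ms and a pooled AR of 82/100 = 0.82. Both values match the Average row.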

IV. DISCUSSION

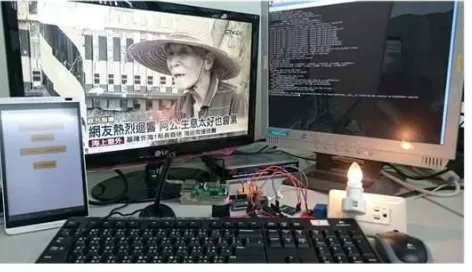

We have currently completed Steps 1 to 3 in Fig. 4. As shown in Fig. 6, we have used the Jasper voice interface in both English and Mandarin to control a lamp switch and a TV via the Raspberry Pi 2 GPIO. Environmental parameters, such as temperature and humidity, can be queried and reported using Jasper VQ and VC. However, the two languages cannot be used within the same session, even when switching between them within a few minutes. Furthermore, the latency in Table II is acceptable and the AR is good, but further research is needed to improve system performance and adaptability as much as possible. People with visual impairment, especially those who also have a phonological impairment (speech sound disorder), would prefer a more adaptive and easy-to-understand model in which the vocal interface language can be selected. We leave this problem for future work.

Fig. 6 Simulation of controlling lamp switch

V. CONCLUSIONS AND FUTURE WORK

In this paper, we proposed a framework for a low-cost and adaptive Mandarin VUI in a smart home for people with visual impairment. The experimental results showed the following:

1) our system works in both English and Mandarin as expected;
2) Raspberry Pi and Jasper are suitable for our system framework; and
3) the VUI provides various adjustable features: modifiable language, changeable keyword, feedback to the user's speech, on-line VQ and VC, and GPIO control.

An adjustable VUI is important for this home automation application. Currently, our system cannot use English and Mandarin at the same time.

Future work will focus on three areas: recognizing accented and emotional pronunciation in Mandarin (Step 4), experiments and evaluations (Step 5), and enabling mixed English and Mandarin use in the VUI at the same time. Performance metrics for Step 2 will also be evaluated further.

REFERENCES

[1] World Health Organization Media Center, “Visual impairment and blindness,” http://www.who.int/mediacentre/factsheets/fs282/en/ [Online]. Accessed: November 5, 2015.

[2] De Silva, L. C., et al., “State of the art of smart homes,” Engineering Applications of Artificial Intelligence, vol. 25, no. 7, pp. 1313–1321, Oct. 2012.

[3] Portet, F., et al., “Design and evaluation of a smart home voice interface for the elderly: acceptability and objection aspects,” Pers. Ubiquit. Comput., vol. 17, no. 1, pp. 127–144, Oct. 2011.

[4] Christensen, H., et al., “homeService: Voice-enabled assistive technology in the home using cloud-based automatic speech recognition,” in Proceedings of the Fourth Workshop on Speech and Language Processing for Assistive Technologies, Grenoble, France: Association for Computational Linguistics, 2013, pp. 29–34.

[5] Chan, M., et al., “A review of smart homes—Present state and future challenges,” Computer Methods and Programs in Biomedicine, vol. 91, no. 1, pp. 55–81, Jul. 2008.

[6] Vacher, M., et al., “Multichannel automatic recognition of voice command in a multi-room smart home: an experiment involving seniors and users with visual impairment,” in INTERSPEECH, 2014.

[7] Ni, Q., et al., “The Elderly’s Independent Living in Smart Homes: A Characterization of Activities and Sensing Infrastructure Survey to Facilitate Services Development,” Sensors, vol. 15, no. 5, pp. 11312–11362, May 2015.

[8] Gemmeke, J. F., et al., “Self-taught assistive vocal interfaces: an overview of the ALADIN project,” in INTERSPEECH, pp. 2039–2043, 2013.

[9] Kim, J.-E., et al., “Enhancing Public Transit Accessibility for the Visually Impaired Using IoT and Open Data Infrastructures,” in Proceedings of the First International Conference on IoT in Urban Space, pp. 80–86, 2014.

[10] Vacher, M., et al., “Evaluation of a Context-Aware Voice Interface for Ambient Assisted Living: Qualitative User Study vs. Quantitative System Evaluation,” ACM Trans. Access. Comput., vol. 7, no. 2, pp. 5:1–5:36, May 2015.

[11] Vacher, M., et al., “Recognition of Distress Calls in Distant Speech Setting: a Preliminary Experiment in a Smart Home,” in Speech and Language Processing for Assistive Technologies (SLPAT), pp. 1–6, 2015.

[12] Raspberry Pi, https://www.raspberrypi.org/ [Online]. Accessed: November 5, 2015.

[13] Jasper: The ON switch for Internet-of-Things, https://www.jasper.com/. [Online]. Accessed: November 5, 2015.

[14] CMUSphinx: Open source speech recognition toolkit, http://cmusphinx.sourceforge.net/ [Online]. Accessed: November 5, 2015.