A COMPARATIVE STUDY BETWEEN HANDWRITTEN AND

COMPUTER TEXT ESSAYS ON RATERS’ SCORES

(A Script)

By Mei Dianita

FACULTY OF TEACHER TRAINING AND EDUCATION UNIVERSITY OF LAMPUNG

ABSTRACT

A COMPARATIVE STUDY BETWEEN HANDWRITTEN AND COMPUTER

TEXT ESSAYS ON RATERS’ SCORES

By

MEI DIANITA

The involvement of technology in the teaching and learning process is undeniably in high demand; however, its effect on assessment has received little attention. This study aims to find out the effect of presenting essays as handwritten or as computer-printed text on raters' scores, and whether the length of the essays influences the scoring standard. Twenty-one (21) sample essays were randomly selected from forty-one (41) essays submitted by students of the English Study Program of Lampung University. The researcher employed six (6) raters to score the essays in three different formats: handwritten, computer text in single space, and computer text in double space. The research applied a one-shot case study design, and the data were analyzed using one-way ANOVA. The results revealed that handwritten essays received higher scores than those presented as computer-printed text. The F-value was 5.5, the F-table value was 5.05, and the p-value was 0.006. Since the F-value exceeded the F-table value and p < .05, it can be concluded that the research hypothesis was accepted and that there was a statistically significant difference in the mean of the raters' scores. Future researchers are advised to analyze the causes of the presentation effect on raters' scores more deeply and to apply more valid strategies to "train away" or reduce the presentation effect, which might put students at a disadvantage when they write in computer mode. Future teachers, raters, and assessors are advised to be more aware of this presentation effect when scoring students' responses in any format, in order to maintain the reliability of scoring.
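The decision rule reported above (comparing the F-value with the F-table value at the .05 level) can be illustrated with a short calculation. The Python sketch below is a hedged illustration, not the analysis actually run in this study: it computes the one-way ANOVA F statistic from the between-group and within-group sums of squares. The score lists and the names `one_way_anova_f` and `reject_null` are hypothetical, and the sketch does not compute p-values, which require the F distribution.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    groups: a list of score lists, one list per presentation format.
    """
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    k = len(groups)            # number of formats being compared
    n_total = len(all_scores)  # total number of scores

    # Between-group sum of squares: how far each format's mean lies from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own format's mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


def reject_null(f_value, f_table):
    """Decision rule: the difference is significant when F exceeds the tabled critical F."""
    return f_value > f_table


# Hypothetical scores for three formats (illustration only, not the study's data).
handwritten = [80, 82, 78]
single_space = [70, 72, 74]
double_space = [75, 73, 77]

f, df_b, df_w = one_way_anova_f([handwritten, single_space, double_space])
print(f"F({df_b}, {df_w}) = {f:.2f}")

# The same rule applied to the values reported in the abstract: F = 5.5, F-table = 5.05.
print(reject_null(5.5, 5.05))
```

With the hypothetical scores, the three format means are 80, 72, and 75, which gives F(2, 6) = 12.25. In practice, a statistics package would normally be used; for example, SciPy's `scipy.stats.f_oneway` returns both the F statistic and the p-value.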

A COMPARATIVE STUDY BETWEEN HANDWRITTEN AND

COMPUTER TEXT ESSAYS ON RATERS’ SCORES

By Mei Dianita

A Script

Submitted in Partial Fulfillment of the Requirements for the S-1 Degree

in

the Language and Arts Department of the Teacher Training and Education Faculty

FACULTY OF TEACHER TRAINING AND EDUCATION UNIVERSITY OF LAMPUNG

CURRICULUM VITAE

Born as a baby girl named Mei Dianita into an average family in a small district, she is now a young lady with a happy life. She was born in Bakti Rasa village, Palas District, South Lampung, on May 8th, 1989. Her biological parents are Sarjono and Rusiti, who divorced in 1994. She has two biological siblings: her elder sister, Yuniati, and her younger sister, Esti Susanti. She moved with her father and elder sister to East Lampung, where she spent her childhood and teenage years. Life became complicated when her father remarried and her mother married another man as well. From these new marriages, she gained new siblings: Kurnia Wati, Rino, the twin brothers Redi Kusuma and Rudi Kusuma, and the little one, Cika.

After moving to East Lampung, she began kindergarten at TK Bina Putra, Batanghari Nuban, East Lampung. In the same year, she enrolled in SD N 2 Cempaka Nuban elementary school, Batanghari Nuban, East Lampung, where she studied from 1996 to 2002. In July 2002, she was registered as a student at SMP N 2 Kotagajah, Central Lampung, and graduated in 2005. She then enrolled in SMA N 1 Kotagajah, the best school in the Regency of Central Lampung, where she studied from 2005 until she graduated in 2008. Since elementary school, she had been a study-oriented girl; she achieved first rank in almost every grade and won several awards, particularly in English.

In 2011, a few months after she returned home, a gentleman proposed marriage to her, and she accepted. She continued her busy class schedule, even while she was pregnant. In 2013, she took the compulsory Society Engagement (KKN) and Teaching Practice Program (PPL) courses, which required her to stay in a village called Pagar Dewa, West Tulang Bawang. Living in such hardship made her reflect on, and feel grateful for, the easy access she enjoyed back in town.

Currently, she runs an online translation and proofreading business called Your Grammar Detective. She hopes to apply her English skills to help others as much as possible. She is confident that those who shed more sweat and tears in pursuing their dreams will be the winners after all, as her life motto says: man jadda wajada (whoever strives shall succeed).

Bandar Lampung, April 2015

The Writer

MOTTO

DEDICATION

This script is dedicated to the very special people in my life: my strong father, Sarjono, for your sincere du'a (prayers) and support;

my elder sister, thank you for every tear you shed to help me stay in school;


ACKNOWLEDGMENTS

Alhamdulillaah, all praise goes to Him alone, Allaah aza wajalla, the Merciful, the Beneficent. Obviously, without Him we are nothing. The writer is very grateful that Allaah has granted her such a beautiful life journey. Admittedly, writing such a research paper is relatively tough as a college requirement; still, the life ahead is indeed the most difficult exam. Finally, by the will of Allaah, this script is completed.

Entitled “A Comparative Study between Handwritten and Computer Text Essays on Raters’ Scores”, this script is submitted to the English Education Study Program, Department of Language and Arts Education, Teacher Training and Education Faculty, Lampung University, in partial fulfilment of the requirements for the S-1 (bachelor's) degree.

The writer would like to express her sincere gratitude to those who mentored her and gave constructive criticism during the completion of this script: Prof. Patuan Raja, M.Pd., her first advisor; Drs. Ramlan Ginting Suka, M.Pd., her co-advisor; and Dr. Muhammad Sukirlan, M.A., her examiner; not to mention her academic advisor, Prof. Cucu Sutarsyah, M.A., and the Head of the English Study Program, Ari Nurweni, M.A. She thanks them for being so friendly and for encouraging her to move forward. May Allaah reward all of them with jannah (paradise).

Many thanks also go to Satria Adi Pradana, M.A., the instructor of the Literature class, who kindly permitted her to take samples from his class, and to the third-semester students of the English Study Program, whose efforts she greatly appreciates. She also thanks the six raters who helped her assess the data; she truly appreciates their hard work.

Beyond college life, the writer would like to express her everlasting gratitude to her parents, her beloved father, Sarjono, and mother, Rusiti, for their prayers and support throughout her life, not to mention her stepmother, Siti Suparin, and her stepfather, Sumarto, for all the support they have given. The writer will never forget the financial and emotional support given by her beloved sister, Yuniati; she thanks Allaah for giving her such a great sister. May Allaah love her always. Her other siblings are gifts from Allaah who have made her life more colorful: Esti Susanti, Kurnia Wati, Rino, the twin brothers Rudi Kusuma and Redi Kusuma, and the little one, Cika; they are just so cute and nice.


sweetness of standing right next to her. Next, her lovely little prince, Ghozi Al-Ghifari, who always brightens her days. He is such a cute and strong little boy, for he accompanied the writer in times of hardship, especially when she had to do community engagement in a place far away from town. She prays for his good health and faith.

The first two years of the writer’s college life were an important landmark in which she shared joy with her classmates. She would like to list those important people: Novi, Tias, Tuti, Milah, Myra, Desi, Nisa, Devina, Siska, Deva, Purwanti, Gestiana, Kiki, Istyaningsih, Reni, Berlinda, Epi, Anu, Ledy, Virnez, Diah, Indah, Annisa, Aryani, Eni, Atin, Azmi, Sule, Ali, Wira, Aji, Veri, Miftah, Ferdi, Mirwan, Rizka, Ma’ruf, etc. Time flew so fast that almost all of them graduated two years earlier than the writer herself. She will miss them all so much. Dorm life also gave the writer sweet moments during her college years; they spent countless days of laughter and joy at the dorm: Mbak Eka, the oldest and also the funniest, who loved to sing in the bathroom; Novi and Linda, her roommates, who kindly shared their bed with her; and Mbak Tika, Mbak Nita, Mbak Nur, Mbak Hesti, Misri, Amel, Fau, Aristina, Indah, Tia, Cimut, Eti, Eri, and Devi. She loves them all tons.

The writer’s productive days at college were also filled with organizational activities. English Society (Eso) was one of them: the organization that presented her with new challenges and where she made extra efforts to take an active part in every activity. To all the seniors she cannot mention one by one, and to her comrades, she is forever grateful.

There was no better year than the year she spent at a U.S. college. Therefore, she really wants to thank her international student advisor, her professors, her instructors, her Ugrad friends from all around the globe, her colleagues, her roommates, her Muslim sisters and brothers in the U.S., and many others. She thanks them all countless times for such a beautiful year.

Finally, even a shop assistant deserves sincere thanks from the writer: thank you for the kind help when the writer needed a highlighter to work on her revision. Not to mention the ‘angkot’ (public minibus) driver who took the writer from home to campus: thank you for the service. Next, the staff at the photocopy service: thank you for serving her and her drafts. Also, the campus cafeteria, for serving her food in times of hunger. There are still many individuals who have contributed to her life whom she cannot mention one by one. Without any disrespect, she thanks them all so much. May Allaah keep them in good health.

Bandar Lampung, April 2015

The Writer,

CONTENTS

ABSTRACT

COVER

APPROVAL

CURRICULUM VITAE

MOTTO

DEDICATION

ACKNOWLEDGMENT

CONTENTS

TABLES

CHARTS

APPENDICES

I. INTRODUCTION

1.1 Background of the Problem

1.2 Formulation of the Problem

1.3 Objective of the Research

1.4 Uses of the Research

1.5 Scope of the Research

1.6 Definition of Terms

II. LITERATURE REVIEW

2.1 Previous Research

2.2 The Concept of Writing

2.3 The Concept of Argumentative Essay Writing

2.4 The Concept of Writing Assessment

2.5 Writing and Technology Involvement

2.6 Theory on Raters

2.7 Theoretical Assumption

2.8 Hypothesis

III. RESEARCH METHOD

3.1 Design of the Research

3.2 Population and Sample of the Research

3.3 Research Instrument

3.4 Research Procedure

3.5 Criterion of a Good Test

3.5.1 Validity of the Research

3.5.2 Reliability of the Raters

3.6 Data Treatment

3.7 Hypothesis Testing

IV. RESULTS AND DISCUSSION

4.1 Result of the Research

4.2 Result of Pre-Experiment and Experiment

4.3 Result of Presentation Effect

4.3.1 Transcription

4.3.2 Distribution of Scores between Pair of Raters

4.3.3 Distribution of Scores among Presentation Formats

4.3.4 Essay Length on Raters’ Scores

4.4 Result of One-Way ANOVA

4.5 Discussion of Findings

V. CONCLUSIONS AND SUGGESTIONS

5.1 Conclusions

5.2 Suggestion

REFERENCES

APPENDICES

TABLES

page

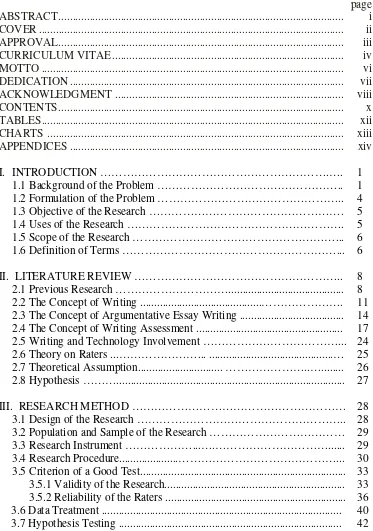

Table 3.1 Essay Distribution among 6 Raters

Table 3.2 Specification on Data Collecting Instrument

Table 3.3 Rating Scale Distribution

Table 3.4 Frequency Table of Inter-Rater Agreement

Table 3.5 Cross-Tabulation Percent of Agreement

Table 3.6 Symmetric Measures

Table 3.7 Test of Normality

Table 4.1 Score Distribution from Rater 1 and 2

Table 4.2 Score Distribution from Rater 3 and 4

Table 4.3 Score Distribution from Rater 5 and 6

Table 4.4 Distribution of Score in Handwritten Format

Table 4.5 Distribution of Score in Single Space Format

Table 4.6 Distribution of Score in Double Space Format

Table 4.7 Mean Score Comparison among the Three Formats

Table 4.8 Test of Homogeneity of Variances

Table 4.9 Descriptive Statistics

Table 4.10 One Way ANOVA

Table 4.11 Result of Post Hoc Test

CHARTS

Chart 4.1 Distribution of Score in Handwritten Format in Development of Idea and Organization Aspect

Chart 4.2 Distribution of Score in Handwritten Format in Grammar and Sentence Structure Aspect

Chart 4.3 Distribution of Score in Single Space Format in Development of Idea and Organization Aspect

Chart 4.4 Distribution of Score in Single Space Format in Grammar and Sentence Structure Aspect

Chart 4.5 Distribution of Score in Double Space Format in Development of Idea and Organization Aspect

Chart 4.6 Distribution of Score in Double Space Format in Grammar and Sentence Structure Aspect

APPENDICES

Appendix 1 Research Schedule

Appendix 2 Training Activities

Appendix 3 Beowulf Assignment for Literature Class

Appendix 4 Content-Based Writing Rubric

Appendix 5 Sample of Students’ Essays

I. INTRODUCTION

This chapter consists of six parts: (1) background of the problem, (2) formulation of the problem, (3) objectives of the research, (4) uses of the research, (5) scope of the research, and (6) definition of terms.

1.1 Background of the Problem

In today’s world, modern technology is woven into our daily routines. The computer is one modern technology that helps people work more effectively. In this digital era, the role of technology, particularly the computer, in academic settings is undeniable. Faculty members, staff, headmasters, teachers, and students all have to master basic computer skills.

In the Indonesian education system, students are taught computer skills from middle school onward, including the basics of typing, editing, and printing. By the time they are in high school, they have developed fluency in typing.

At a time when technology is developing rapidly and the demand for computers is high, people have started to abandon traditional technology. Computers have become the


how to use computers at a young age and are often instructed to complete written assignments using them. This practice of requiring all written assignments to be word processed eliminates the inequality in assessment that can arise from students' varying handwriting.

In college particularly, lecturers and instructors often ask students to type their assignments instead of handwriting them. The labor of writing out an essay word by word has been replaced by the magic tool of the computer. Students feel more confident completing their assignments on a computer than by hand, and they believe they can earn higher scores when they do so.

Recently, our education system has started to administer the national examination for high school students via computer. Although it was still a trial, it showed that the government has put extra effort into more effective computer-based assessment. The national examination was administered in two modes: Paper-Based Test (PBT) and Computer-Based Test (CBT). Both modes were used only for multiple-choice tests, which means that the two modes are likely to yield comparable results.

A different perspective applies to the administration of open-ended tests, for instance, essays. Unlike a multiple-choice test, an essay is a subjective test that requires more complex scoring; thus, investigating the equivalence between the Computer-Based Test (CBT) and the Paper-Based Test (PBT) is highly


In L1 settings, research on computer-based testing by Bunderson, Inouye, and Olsen (1989) and by Mead and Drasgow (1993) suggests that multiple-choice tests administered via computer (CBT) yield the same results as those administered via paper and pencil (PBT). Then, in the early 1990s, several studies investigated the effect of the mode of answering examination questions, handwritten or word processed, on students' scores. Powers, Fowles, Farnum and Ramsey (1994) conducted a study to examine whether the Computer-Based Test (CBT) yielded higher scores than the Paper-Based Test (PBT). In conducting the research, these authors converted a sample of original handwritten essay answers into word-processed versions and transcribed a sample of original word-processed essays into handwritten versions. The findings revealed that handwritten answers were awarded higher average scores than word-processed answers, regardless of the original mode in which the answers were produced.

Before the study by Powers et al., several studies examined the influence of “neat” and “sloppy” penmanship on students’ scores. They reported that essays presented with neater penmanship received higher scores than those presented with sloppy penmanship (Marshall & Powers, 1969; Markham, 1976; Bull & Stevens, 1979). This finding prompted further thought about the effect of the neatness of writing on scores. Thus, one would expect that essays presented as neatly formatted computer-printed text would receive higher


More recently, Russell and Plati (2000) have advocated that state testing programs that employ extended open-ended items also allow students the option of composing responses on paper or on computer. In response to these findings, ETS recently conducted a study that involved administering the National Assessment of Educational Progress Writing test on paper and on computer. As more testing programs offer students the option of producing essay responses on paper or on computer, the presentation effect reported by Powers et al. (1994) and Russell and Tao (2004) raises a serious concern about the equivalence of scores.

In this research, the writer compared two different formats of the same essay, the original handwritten version and a computer-printed version, to find out which format results in higher scores. This study partly replicates the study conducted by Russell and Tao (2004), which investigated the same assessment problem in both presentation modes.

1.2 Formulation of the Problem

Based on the background of the problem above, the researcher formulates two research questions as follows:

1. Is there any difference in raters’ scores when an essay written by third-semester students of the English Education Study Program of Lampung University is presented as handwritten and as computer text?


1.3 Objectives of the Research

1. To find out whether there is any difference in raters’ scores when essays written by third-semester students of the English Education Study Program of Lampung University are presented as handwritten and as computer text.

2. To find out whether the length of an essay eliminates the presentation effect.

1.4 Uses of the Research

The findings of this research are expected to offer both theoretical and practical benefits.

1.4.1 Theoretical

Theoretically, the findings of this research can be used to verify previous research on writing assessment, particularly on the role of the computer and its comparison with the handwritten format.

1.4.2 Practical

Practically, this research is expected to benefit both English instructors and future researchers, as follows:

a. Instructors

It is hoped that writing instructors and teachers become more aware of the influence of the presentation effect and take it into account when scoring writing presented in different formats.

b. Future Researchers

Likewise, future researchers might benefit from this research as a source for their literature review, to compare and contrast when developing similar or linear


1.5 Scope of the Research

This research is a comparative study of two different presentations of an essay: handwritten and computer printed. The population of this research consisted of the forty-one (41) essays submitted by 41 students of the English Education Study Program of Lampung University. The sample was determined by random sampling through a lottery, which yielded twenty-one (21) sample essays. The sample essays were gathered from a writing task in the Introduction to Literature class. The original handwritten essays were then transcribed verbatim into computer-printed text before being scored by the raters.
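The lottery described above is a form of simple random sampling without replacement: every essay has the same chance of being drawn, and none can be drawn twice. As a hedged illustration only (not the procedure actually used in this study), the draw can be simulated in Python; the function name `draw_lottery_sample` and the seed value are hypothetical.

```python
import random


def draw_lottery_sample(population_size, sample_size, seed=None):
    """Simple random sampling without replacement, like drawing numbered lots.

    Each essay is given a number from 1 to population_size; every number has
    the same chance of being drawn, and no number is drawn twice.
    """
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    lots = list(range(1, population_size + 1))
    return sorted(rng.sample(lots, sample_size))


# 41 submitted essays, 21 to be drawn (figures from this section);
# the seed value is an arbitrary, hypothetical choice.
sample = draw_lottery_sample(41, 21, seed=2015)
print(sample)
```

Fixing the seed lets another researcher reproduce exactly the same draw; leaving `seed=None` gives a fresh draw on each run.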

1.6 Definition of Terms

To avoid misunderstanding, the key terms in this research are defined as follows:

1. Assessment refers to all activities teachers use to help students learn and to gauge student progress.

2. Argumentative Essay is a genre of writing that requires the student to investigate a topic; collect, generate, and evaluate evidence; and establish a position on the topic in a concise manner.

3. Essay is a short literary composition on a particular theme or subject, usually


4. Beowulf is an Old English epic poem consisting of 3,182 alliterative long lines. It is possibly the oldest surviving long poem in Old English and is commonly cited as one of the most important works of Old English literature.

5. Computer text is text produced by typing on a computer keyboard.

6. Handwritten text is text produced by writing by hand on paper with a pen or pencil.

7. Mode of presentation is a mental state or perception toward the presentation medium.

8. Rater is a person who estimates or determines a rating.

9. Rubric: a scoring rubric is a set of ordered categories to which a given piece of work can be compared.

10. Writing prompt is an entry that generally contains a question to help you pick

II. LITERATURE REVIEW

This chapter presents a review of literature related to the research problem. The following topics are reviewed: (1) the previous research, (2) the concept of writing, (3) the concept of argumentative essay writing, (4) the concept of writing assessment, (5) writing and technology involvement, (6) theory on raters, (7) theoretical assumption, and (8) hypothesis.

2.1 Previous Research


To explain this seemingly contradictory finding, Powers et al. (1994) offered several hypotheses, some of which drew upon their own work as well as the work of Arnold, Legas, Obler, Pacheco, Russell, and Umbdenstock (as summarized by Powers et al., 1994). These hypotheses included:

A. Readers may have expected fully edited and polished final products when presented as computer-printed text and thus had higher expectations for these essays;

B. Handwritten text caused the reader to feel closer to the writer which “allowed for a closer identification of the writer’s individual voice as a strong and important aspect of the essay” (as quoted in Powers et al.);

C. Readers may have given handwritten responses the benefit of the doubt when they encountered sloppy or hard-to-read text;

D. Handwritten responses appeared longer and thus appeared to have been the result of greater effort.


This replicated the findings of Arnold, Legas, Obler, Pacheco, Russell and Umbdenstock (1990) that student papers converted to word-processed versions received lower scores than the original handwritten versions. Arnold et al. suggested that the reviewers may have had higher expectations of the word-processed work, less empathy with its authors, or less willingness to give them the benefit of the doubt. Powers et al. suggested other possible factors, including the lack of visible evidence of revision in the word-processed versions, the greater visibility of typographical errors, and apparently shorter answers.


space taken up by the handwritten essay. The computer-printed essays still received lower scores; however, the effect was reduced when the computer essay was double spaced rather than single spaced. This study therefore added evidence that the difference in scores is due to the visibility of errors. Through interviews with raters, this study also suggests that the higher standards and expectations raters hold for text presented as computer print, and the ability of some raters to identify with students and see their effort when they handwrite essays, may affect the scores they award.

More recent work in the United Kingdom by researchers at the University of Edinburgh (Mogey, Paterson, Burk and Purcell, 2010) compared transcribed scripts of first-year students in a mock examination: handwritten scripts were transcribed into typed format and typed scripts were transcribed into handwritten format. Mogey et al. found “weak evidence” that handwritten scripts generally scored slightly higher than typed scripts.

Since this study partly replicates the previous research by Russell and Tao (2004), its methodology also follows theirs. Unlike Powers et al. (1994), who gathered sample essays from students of Business and Law, this study focused on sample essays from students of the Language and Arts Department.

2.2 The Concept of Writing


punctuation, spelling, grammar, diction, etc. In other words, a writer should be able to craft a good piece of writing by combining those elements as free of mistakes as possible.

A simple example shows how much proper punctuation matters in composing a piece of writing:

Let's eat Grandma!

Such a simple sentence can lead to a semantic disaster when the writer is unaware of the punctuation needed to make its meaning correct; a comma is definitely needed in that sentence:

Let's eat, Grandma!

Compared with the first sentence, the meaning is totally changed. The first sentence means eating Grandma, while the second invites Grandma to eat. Joke or not, punctuation can indeed save lives!


will make a 'double noun'. If that is the case, the sentence is also grammatically incorrect; therefore, a thorough understanding of correct spelling is important for creating a correct sentence.

Diction also plays a major role in composing a good piece of writing. A good writer knows which diction to use in different contexts and settings. This ability can be achieved by reading widely and understanding how each word choice is used differently. Improper diction may lead readers to underestimate the writer, simply because he writes 'thou' instead of 'you' in a modern setting. Readers may even judge the writer as less capable of using the 'proper diction' in the right setting; therefore, writers should choose their words carefully.

Similar to the concept proposed by Walters (1999:90), Tarigan (1981:1) places writing as the last skill learners should master, after the other three language skills: listening, speaking, and reading. This suggests that writing is the most difficult language skill to master, since the three skills above are its prerequisites. It is not surprising that students often feel reluctant when given a writing assignment.


According to Ellis (1990:93), there are two general purposes of writing: to convey a message to others and to keep it for personal use. In academic settings, writing, particularly essay writing, aims to communicate the writer's thoughts to readers. Therefore, students are expected to be able to produce a good piece of writing and deliver the message effectively.

2.3 The Concept of Argumentative Essay Writing

The essay, as a form of written product, is very familiar in academic settings as a vehicle for critical thinking in composition. At the college level, particularly in the English major, composition is part of a curriculum with standardized materials and assessment. Students are expected to master composition skills, from the basic to the advanced level, by the end of their college years. Even before entering college or university, students become familiar with various forms of writing and text types in middle and high school, so that when they enter college or university, they are ready to digest every small aspect of writing.

As this research employed essays to gather data, a thorough explanation of the essay, particularly the argumentative essay, is needed.


literature or previously published material. Argumentative assignments may also require empirical research where the student collects data through interviews, surveys, observations, or experiments. Detailed research allows the student to learn about the topic and to understand different points of view regarding the topic so that she/he may choose a position and support it with the evidence collected during research. Regardless of the amount or type of research involved, argumentative essays must establish a clear thesis and follow sound reasoning.

The structure of the argumentative essay is held together by the following.

• A clear, concise, and defined thesis statement that occurs in the first paragraph of the essay.

In the first paragraph of an argument essay, students should set the context by reviewing the topic in a general way. Next the author should explain why the topic is important (exigence) or why readers should care about the issue. Lastly, students should present the thesis statement. It is essential that this thesis statement be appropriately narrowed to follow the guidelines set forth in the assignment. If the student does not master this portion of the essay, it will be quite difficult to compose an effective or persuasive essay.

• Clear and logical transitions between the introduction, body, and conclusion.


from the previous section and introduce the idea that is to follow in the next section.

• Body paragraphs that include evidential support.

Each paragraph should be limited to the discussion of one general idea. This will allow for clarity and direction throughout the essay. In addition, such conciseness creates an ease of readability for one’s audience. It is important to note that each paragraph in the body of the essay must have some logical connection to the thesis statement in the opening paragraph. Some paragraphs will directly support the thesis statement with evidence collected during research. It is also important to explain how and why the evidence supports the thesis (warrant).

However, argumentative essays should also consider and explain differing points of view regarding the topic. Depending on the length of the assignment, students should dedicate one or two paragraphs of an argumentative essay to discussing conflicting opinions on the topic. Rather than explaining how these differing opinions are wrong outright, students should note how opinions that do not align with their thesis might not be well informed or how they might be out of date.

• Evidential support (whether factual, logical, statistical, or anecdotal).


argumentative essay will also discuss opinions not aligning with the thesis. It is unethical to exclude evidence that may not support the thesis. It is not the student’s job to point out how other positions are wrong outright, but rather to explain how other positions may not be well informed or up to date on the topic.

• A conclusion that does not simply restate the thesis, but readdresses it in light of the evidence provided.

It is at this point of the essay that students may begin to struggle. This is the portion of the essay that will leave the most immediate impression on the mind of the reader. Therefore, it must be effective and logical. Do not introduce any new information into the conclusion; rather, synthesize the information presented in the body of the essay. Restate why the topic is important, review the main points, and review your thesis. You may also want to include a short discussion of more research that should be completed in light of your work.

A common method for writing an argumentative essay is the five-paragraph approach. This is, however, by no means the only formula for writing such essays. If it sounds straightforward, that is because it is; the method consists of (a) an introductory paragraph, (b) three evidentiary body paragraphs that may include discussion of opposing views, and (c) a conclusion.

2.4 The Concept of Writing Assessment


1. to determine the rate or amount of (as a tax)

2. to impose (as a tax) according to an established rate; to subject to a tax, charge, or levy

3. to make an official valuation of (property) for the purposes of taxation

4. to determine the importance, size, or value of (assess a problem)

5. to charge (a player or team) with a foul or penalty

The term assessment is generally used to refer to all activities teachers use to help students learn and to gauge student progress. Though the notion of assessment is generally more complicated than the following categories suggest, assessment is often divided for the sake of convenience using the following distinctions:

1. initial, formative, and summative

2. objective and subjective

3. referencing

4. informal and formal.

Writing assessment is categorized as subjective assessment, a form of questioning that may have more than one correct answer (or more than one way of expressing the correct answer). There are various types of subjective questions, including extended-response questions and essays.


assessment can also refer to the technologies and practices used to evaluate student writing and learning.

In “Looking Back as We Look Forward: Historicizing Writing Assessment as a Rhetorical Act,” Kathleen Blake Yancey offers a history of writing assessment by tracing three major shifts in methods used in assessing writing. She describes the three major shifts through the metaphor of overlapping waves: “with one wave feeding into another but without completely displacing waves that came before”. In other words, the theories and practices from each wave are still present in some current contexts, but each wave marks the prominent theories and practices of the time.

The first wave of writing assessment (1950-1970) sought objective tests with indirect measures of assessment. The second wave (1970-1986) focused on holistically scored tests in which the students’ actual writing began to be assessed. The third wave (since 1986) shifted toward assessing collections of student work (i.e., portfolio assessment) and toward programmatic assessment.


In the first wave of writing assessment, the emphasis was on reliability, which concerns the consistency of a test. In this wave, the central concern was to assess writing with the best predictability at the least cost and effort.

The shift toward the second wave marked a move toward considering principles of validity. Validity confronts questions over a test’s appropriateness and effectiveness for the given purpose. Methods in this wave were more concerned with a test’s construct validity: whether the material prompted from a test is an appropriate measure of what the test purports to measure. Teachers began to see incongruence between the material being prompted to measure writing and the material teachers were asking students to write. Holistic scoring, championed by Edward M. White, emerged in this wave. It is one method of assessment where students’ writing is prompted to measure their writing ability.

The third wave of writing assessment emerges with continued interest in the validity of assessment methods. This wave began to consider an expanded definition of validity that includes how portfolio assessment contributes to learning and teaching. In this wave, portfolio assessment emerges to emphasize theories and practices in Composition and Writing Studies such as revision, drafting, and process.


1. Portfolio

Portfolio assessment is typically used to assess what students have learned at the end of a course or over a period of several years. Course portfolios consist of multiple samples of student writing and a reflective letter or essay in which students describe their writing and work for the course. “Showcase portfolios” contain final drafts of student writing, and “process portfolios” contain multiple drafts of each piece of writing. Both print and electronic portfolios can be either showcase or process portfolios, though electronic portfolios typically contain hyperlinks from the reflective essay or letter to samples of student work and, sometimes, outside sources.

2. Timed-Essay

Timed essay tests were developed as an alternative to multiple-choice, indirect writing assessments. They are often used to place students into writing courses appropriate for their skill level. These tests are usually proctored, meaning that testing takes place in a specific location where students are given a prompt and must write a response within a set time limit. The SAT and GRE both contain timed essay portions.

3. Rubric


more science-based. One of the original scales used in education was developed by Milo B. Hillegas in A Scale for the Measurement of Quality in English Composition by Young People. This scale is commonly referred to as the Hillegas Scale. The Hillegas Scale and other scales used in education were used by administrators to compare the progress of schools.

In 1961, Diederich, French, and Carlton of the Educational Testing Service (ETS) published Factors in Judgments of Writing Ability, a rubric compiled from the comments of a series of raters, which were categorized and condensed into a five-factor rubric:

Ideas: relevance, clarity, quantity, development, persuasiveness

Form: Organization and analysis

Flavor: style, interest, sincerity

Mechanics: specific errors in punctuation, grammar, etc.

Wording: choice and arrangement of words

As rubrics began to be used in the classroom, teachers began to advocate negotiating criteria with students so that students would have a stake in how they would be assessed. Scholars such as Chris Gallagher and Eric Turley, Bob Broad, and Asao Inoue (among many others) have argued that effective use of rubrics comes from local, contextual, and negotiated criteria.


students alike to evaluate criteria, which can be complex and subjective. A scoring rubric can also provide a basis for self-evaluation, reflection, and peer review. It is aimed at accurate and fair assessment, fostering understanding, and indicating a way to proceed with subsequent learning/teaching. Another advantage of a scoring rubric is that it clearly shows what criteria must be met for a student to demonstrate quality on a product, process, or performance task.

Douglas H. Brown (2004:335) states that, in teaching writing, a composition is supposed to:

a) meet certain standards of prescribed English rhetorical style;

b) reflect accurate grammar;

c) be organized in conformity with what the audience would consider to be conventional.

This means that a good deal of attention was placed on “model” compositions that students would emulate, and on how well a student’s final product measured up against a list of criteria including content, organization, vocabulary use, grammatical use, and mechanical considerations such as spelling and punctuation.

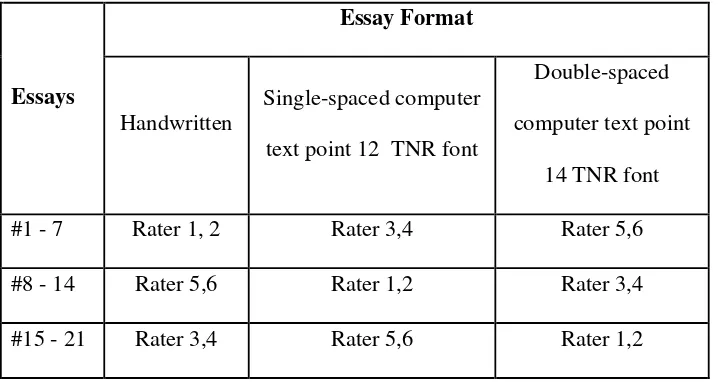


In this study, the researcher employed a content-based rubric taken from the documentation of the English Language Center (ELI) of Missouri State University. The rubric was designed for grading English as a Foreign Language (EFL) learners at the university and has been applied more widely in EFL assessment across the United States. The researcher therefore adopted the rubric to grade the essays in this research (see Appendix 4).

2.5 Writing and Technology Involvement

In this age of technology, computers have been widely used to help students write down their ideas. Instructors and teachers have introduced the computer as an assistive tool for learning to write. Students at the junior level have to master at least the basic skills of typing and editing in Microsoft Word to make it easier to complete their assignments.

At the college or university level, students are already very familiar with computer-based assignments. Essay assignments or written responses are expected to be submitted as printed pieces rather than as handwritten ones. Unfortunately, although composing essays on computers is becoming more common, its effect on writing assessment has received little attention, as reported by Chase (1979).


in order to assess the students’ essays and find out whether raters’ scores differ across essay formats.

2.6 Theory on Raters

Generalizability theory, also known as G theory, was introduced by Cronbach, Rajaratnam, and Gleser (1963). It is a statistical framework for conceptualizing, investigating, and designing reliable observations. It is used to determine the reliability (i.e., reproducibility) of measurements under specific conditions and is particularly useful for assessing the reliability of performance assessments. In G theory, sources of variation are referred to as facets. Facets are similar to the “factors” used in analysis of variance and may include persons, raters, items/forms, time, and settings, among other possibilities. These facets are potential sources of error, and the purpose of generalizability theory is to quantify the amount of error caused by each facet and by interactions of facets. The usefulness of data gained from a G study depends crucially on the design of the study. Therefore, the researcher must carefully consider the ways in which he or she hopes to generalize any specific results. Is it important to generalize from one setting to a larger number of settings? From one rater to a larger number of raters? From one set of items to a larger set of items? The answers to these questions will vary from one researcher to the next and will drive the design of a G study in different ways.


facets of interest are then considered to be sources of measurement error. In most cases, the object of measurement will be the person to whom a number/score is assigned. In other cases, it may be a group of performers, such as a team or classroom. Ideally, nearly all of the measured variance will be attributed to the object of measurement (e.g., individual differences), with only a negligible amount of variance attributed to the remaining facets (e.g., rater, time, setting).

The results from a G study can also be used to inform a decision, or D, study. In a D study, we can ask the hypothetical question of “what would happen if different aspects of this study were altered?” For example, a soft drink company might be interested in assessing the quality of a new product through use of a consumer rating scale. By employing a D study, it would be possible to estimate how the consistency of quality ratings would change if consumers were asked 10 questions instead of 2, or if 1,000 consumers rated the soft drink instead of 100. By employing simulated D studies, it is therefore possible to examine how the generalizability coefficients would change under different circumstances, and consequently determine the ideal conditions under which our measurements would be the most reliable.
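To make the D-study idea concrete, the sketch below projects the generalizability coefficient for a simple one-facet persons x raters design as the number of raters changes. It assumes the standard formula for relative decisions, E(rho^2) = var_p / (var_p + var_pr,e / n_raters); the variance components are hypothetical placeholders, not estimates from this study, and the function name is illustrative.

```python
# Minimal D-study sketch for a one-facet persons x raters (p x r) design.
# The variance components used below are HYPOTHETICAL, for illustration only.

def g_coefficient(var_person: float, var_residual: float, n_raters: int) -> float:
    """Generalizability coefficient for relative decisions in a p x r design.

    var_person   -- variance attributable to the object of measurement (persons)
    var_residual -- person-by-rater interaction confounded with error (pr,e)
    n_raters     -- number of raters whose scores are averaged in the D study
    """
    if n_raters < 1:
        raise ValueError("n_raters must be at least 1")
    # Averaging over more raters shrinks the error term proportionally.
    relative_error = var_residual / n_raters
    return var_person / (var_person + relative_error)


if __name__ == "__main__":
    var_p, var_pr_e = 0.60, 0.40  # hypothetical G-study estimates
    for n in (1, 2, 4, 8):
        print(f"{n} rater(s): E(rho^2) = {g_coefficient(var_p, var_pr_e, n):.3f}")
```

Under these assumed values, moving from one rater to two raises the coefficient from 0.60 to 0.75, which is the kind of "what if" question a D study answers.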

2.7 Theoretical Assumption

Since the researcher set up two alternative hypotheses in this study, the study rests on two key assumptions:


the three modes of presentation: (1) mechanical errors, (2) higher expectations of essays presented as computer-printed text, and (3) a stronger connection felt when reading an essay as handwritten text rather than as computer-printed text.

The second assumption relates to the second hypothesis, that the length of the essay can eliminate the presentation effect. In other words, the double-spaced essay would be likely to receive a higher score than the single-spaced essay. This assumption arises from the idea that handwritten essays tend to appear longer than computer-text essays.

2.8 Hypothesis

H0 : There is no difference in raters' scores between essays presented in handwritten form and essays presented as computer-printed text

H1 : There is a difference in raters' scores between essays presented in handwritten form and essays presented as computer-printed text

III. RESEARCH METHODS

This chapter discusses seven core elements of methodology: (1) design of the research, (2) population and sample of the research, (3) research instrument, (4) research procedures, (5) criterion of a good test, (6) data treatment, and (7) hypothesis testing.

3.1. Design of the Research

In designing this study, the researcher adopted a one-shot case study design. Based on the research question, the presentation format (handwritten or computer text) is the independent variable, while the raters’ score is the dependent variable.

The design is illustrated as follows:

X1

Y X2

Where,

X : Medium of presentation

X1 : Handwriting

3.2. Population and Sample of the Research

The population of this research comprised the essays produced by one class of third-semester students in the English Study Program of Lampung University. The class had forty-one students, all of whom attended the training session and completed the writing task. At the end of the writing task, forty-one essays had been produced, one by each student.

To choose the research sample, the researcher used simple random sampling, drawing lots to select twenty-one (21) of the forty-one (41) essays. A sample size of 21 was chosen to match exactly the number of essays the raters would score.
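Drawing lots in this way is equivalent to simple random sampling without replacement. A minimal Python sketch, assuming the essays are numbered 1 to 41 (a hypothetical labeling) and using the standard library's `random.sample`; the seed is arbitrary and only makes a draw reproducible.

```python
import random

POPULATION_SIZE = 41  # essays produced by the class
SAMPLE_SIZE = 21      # essays selected for rating

def draw_sample(seed=None):
    """Draw 21 distinct essay IDs out of 41 without replacement, like a lottery."""
    rng = random.Random(seed)  # independent generator so the draw can be repeated
    essay_ids = list(range(1, POPULATION_SIZE + 1))
    return sorted(rng.sample(essay_ids, SAMPLE_SIZE))

if __name__ == "__main__":
    print(draw_sample(seed=2014))
```

Each essay has the same chance (21/41) of being selected, which is the property the lottery procedure relies on.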

3.3. Research Instrument

To collect the data, the researcher used the following instrument:

Writing Task

In this writing task, the participants were asked to handwrite a one-page argumentative essay in response to a given prompt. The writing task was conducted on September 16th, 2014, with 41 participating students. The participants were given 2 x 45 minutes to finish their essays based on the prepared prompt.

At the next meeting, held the following week on September 16th, 2014, the researcher administered the writing task based on the prompt prepared beforehand. The prompt was based on the material the students were currently discussing in their Literature class: the old Anglo-Saxon epic about the ancient hero Beowulf. For further details, see the prompt in Appendix 3.

3.4 Research Procedure

The research procedure consisted of the following steps: (1) determining the sample of the research, (2) administering the training session, (3) administering the writing task, (4) transcribing the original essays, (5) distributing the original and transcribed essays, (6) scoring of the essays by raters, (7) analyzing the data, and (8) drawing findings and conclusions from the data.

1. Determining the Sample of the Research

The sample consisted of 21 of the 41 essays produced by students of the English Study Program at Lampung University. The essays were written during the Introduction to Literature class.

2. Administering Training Session

3. Administering Writing Task

The writing task was conducted on September 16th, 2014, in the same class. The students were asked to handwrite a one-page essay of around 400 words on paper. The instructions were clear: the students composed the essay in response to the given prompt. The prompt was designed to match what they had learned; it concerned ‘Beowulf’, an epic poem from the Anglo-Saxon era. The students were given 2 x 45 minutes to finish and submit their essays.

4. Transcribing Original Essay

In this step, the original handwritten essays were transcribed into computer-text format. The transcription was done verbatim (including all spelling, grammar, and punctuation errors) by the research team.

To ensure precise transcription, the following procedure was adopted. Responses were first transcribed verbatim from their original handwritten form into the computer. The transcriber then printed out the computer version and compared it word by word with the original, making corrections as needed. A second person then compared these corrected transcriptions with the originals and made additional changes as needed.

5. Distributing the original and transcribed essays

Table 3.1 Essay Distribution Among 6 Raters

| Essays | Handwritten | Single-spaced computer text (12-point TNR font) | Double-spaced computer text (14-point TNR font) |
|---|---|---|---|
| #1-7 | Raters 1, 2 | Raters 3, 4 | Raters 5, 6 |
| #8-14 | Raters 5, 6 | Raters 1, 2 | Raters 3, 4 |
| #15-21 | Raters 3, 4 | Raters 5, 6 | Raters 1, 2 |

As the distribution table shows, the total number of scored essays was 63: 21 original handwritten, 21 single-spaced, and 21 double-spaced. The table also shows that no rater pair scored the same essay twice. The raters were also unaware that the presentation effect was the focus of this investigation.
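The rotation in Table 3.1 can be checked mechanically. The sketch below is a hypothetical reconstruction of that table (pair labels A, B, C and the function names are illustrative, not from the study); it generates every (essay, format, rater pair) assignment and confirms that each pair sees each essay only once.

```python
# Hypothetical encoding of Table 3.1: pair A = raters 1,2; B = 3,4; C = 5,6.
FORMATS = ("handwritten", "single-spaced 12pt", "double-spaced 14pt")
PAIRS = {"A": (1, 2), "B": (3, 4), "C": (5, 6)}

# Each block of seven essays assigns one rater pair to each format, in FORMATS order.
BLOCKS = [
    (range(1, 8), ("A", "B", "C")),
    (range(8, 15), ("C", "A", "B")),
    (range(15, 22), ("B", "C", "A")),
]

def build_assignments():
    """Return a list of (essay_id, format, rater_pair) tuples."""
    assignments = []
    for essays, pair_order in BLOCKS:
        for essay_id in essays:
            for fmt, pair_key in zip(FORMATS, pair_order):
                assignments.append((essay_id, fmt, PAIRS[pair_key]))
    return assignments

def no_pair_repeats(assignments):
    """True if no rater pair scores the same essay in more than one format."""
    seen = set()
    for essay_id, _fmt, pair in assignments:
        if (essay_id, pair) in seen:
            return False
        seen.add((essay_id, pair))
    return True

if __name__ == "__main__":
    plan = build_assignments()
    print(len(plan), no_pair_repeats(plan))
```

The design is a Latin-square style rotation: every pair rates every format exactly once per block, so rater pair and format are not confounded.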

6. Scoring Essays by Raters

Six raters were employed in this research. Four of them were advanced graduate students at several state universities in Indonesia; the other two were English instructors at a Language Testing Center in a local university. In total, there were three pairs of raters, each working on a different format within each block of essays, and none of them scored the same essay twice.

7. Analyzing Data

The researcher used one-way ANOVA to analyze the data. The data were computed statistically with the Statistical Package for the Social Sciences (SPSS) version 19.

8. Drawing findings and conclusions

The last step of this research was drawing findings and conclusions from the data analysis above. In this step, the researcher also formulated some suggestions and recommendations for further research.

3.5 Criterion of a Good Test

In analyzing the data, the researcher used one-way ANOVA to compare the means of three groups, namely the raters’ scores on the original handwritten essays, the single-spaced 12-point computer text, and the double-spaced 14-point computer text.

a. Validity

1982: 250). The validity of this research is examined in terms of content validity and construct validity.

Content Validity

To ensure content validity, the researcher used a rubric to assess the essays. This rubric was selected because it has been widely applied to assess the writing performance of English as a Foreign Language (EFL) learners in the United States.

Following a scoring procedure for ESL/EFL composition items, the scoring guidelines focused on two areas of writing, namely Idea Development and Organization, and Grammar and Sentence Structure. Both scales ranged from 0 to 10; ratings for Idea Development and Organization were multiplied by seven, and ratings for Grammar and Sentence Structure were multiplied by three. The table below presents the category descriptions for each point on the two scales.

Table 3.2: Specification on Data Collecting Instrument for EFL

Q1 - Development and Organization (multiply rating by 7 points)

Rater’s Comments:

Overall Content

--Did you cover all aspects of the prompt?

--Did you use topic sentence(s)?

--Did you organize your answer well and/or use transitions?

Excellent | Good | Average | Needs Improvement

Q1 - Grammar and Sentence Structure (multiply rating by 3 points)

Are your sentences complete and correct? Are your sentences clear and easy to understand?

Type of Mistake | How many times? | Rater’s Comments

art = article use; frag = fragment; cap = capitalization; pos = possessive; prep = preposition; pro = pronoun; p = punctuation; ros = run-on sentence; sva = subject/verb agreement; sn/pl = singular/plural; sp = spelling; vt = verb tense; mod = modal use; wf = word form; inf/ger = infinitive/gerund; wo = word order; wc = word choice; wm = word missing; ss = sentence structure

Overall level of interference with meaning: Excellent | Good | Average | Needs Improvement | Unacceptable

Score x3: 10 9 8 7.5 7 6 5 4 3 2 1 0

Source: Content-based writing rubric for EFL Students (Doc. of Missouri State University)
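Because the two weights sum to ten (7 + 3), two ratings on the 0 to 10 scale combine into an essay score out of 100. A minimal sketch of that arithmetic, assuming the total is the simple weighted sum of the two ratings; the function and constant names and the example ratings are hypothetical.

```python
# Weights from the rubric: Idea Development & Organization x7,
# Grammar & Sentence Structure x3. Each raw rating is on a 0-10 scale.
WEIGHT_ORGANIZATION = 7
WEIGHT_GRAMMAR = 3

def total_score(organization: float, grammar: float) -> float:
    """Combine the two 0-10 ratings into a 0-100 essay score."""
    for rating in (organization, grammar):
        if not 0 <= rating <= 10:
            raise ValueError(f"rating out of range 0-10: {rating}")
    return WEIGHT_ORGANIZATION * organization + WEIGHT_GRAMMAR * grammar

if __name__ == "__main__":
    print(total_score(8, 7))  # 7*8 + 3*7 = 56 + 21
```

For example, ratings of 8 (organization) and 7 (grammar) give 56 + 21 = 77, and perfect ratings of 10 and 10 give 100.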

Construct Validity

Construct validity concerns whether the instrument is constructed in accordance with the underlying theory and objectives (Hatch and Farhady, 1982: 251). The rubric presented above is consistent with the concept of writing assessment discussed in Chapter 2.

b. Reliability of the Raters/Inter-rater Reliability

Since this research employed multiple raters to assess the students’ essays, the reliability of the raters needed to be measured. Rater reliability is known as inter-rater reliability, a measure of the agreement between two people (raters or observers) in assigning the categories of a categorical variable. It is an important indicator of how well a coding or measurement system works in practice. In this ANOVA-based research, the researcher used Kappa to assess the reliability of agreement between a fixed number of raters assigning categorical ratings to a number of items. The measure calculates the degree of agreement in classification over and above what would be expected by chance.

Below is the formula of Cohen's Kappa Inter-rater Reliability Coefficient:

Where,

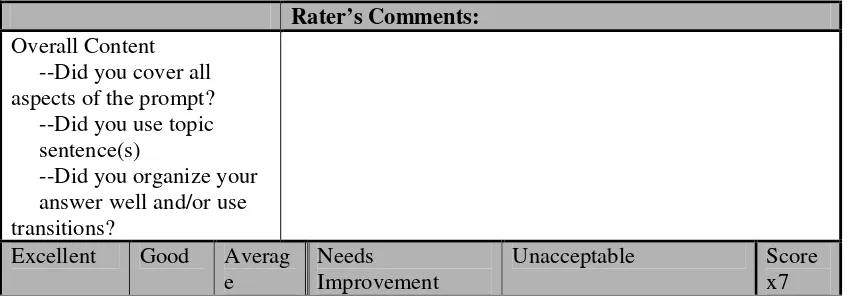
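For reference, Cohen's kappa is conventionally written in the standard form below. We assume it matches the formula in the image above; the symbols are defined here because the original definitions are not recoverable from the text. Here $n_{kk}$ is the number of cases both raters place in category $k$, $n_{k\cdot}$ and $n_{\cdot k}$ are the row (Rater A) and column (Rater B) totals for that category, and $N$ is the total number of rated cases.

$$
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad
p_o = \frac{1}{N}\sum_{k} n_{kk}, \qquad
p_e = \frac{1}{N^2}\sum_{k} n_{k\cdot}\, n_{\cdot k}
$$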

In accordance with the content-based rubric (see Appendix 4), there are five categories that raters could assign to an essay: 0-30 for Unacceptable, 40-70 for Needs Improvement, 75 for Average, 80-99 for Good, and 100 for Excellent.

Below is the description of the rating scale:

Table 3.3 Rating Scale Distribution

Value Scale Description

0 0 - 30 Unacceptable

1 40 - 70 Needs Improvement

2 75 Average

3 80 - 99 Good

4 100 Excellent
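Coding each score into the categorical values of Table 3.3 is what makes the Kappa analysis possible. A minimal sketch, assuming the band boundaries are inclusive; the function name is hypothetical. As listed, the bands leave some scores uncovered (for example 31-39, 71-74, and 76-79), and how such scores were coded is not stated, so the sketch returns `None` there rather than guessing.

```python
def rating_category(score: float):
    """Map a 0-100 score to a (value, label) pair following Table 3.3.

    Returns None for scores that fall between the listed bands,
    because the table does not say how such scores were coded.
    """
    if not 0 <= score <= 100:
        raise ValueError(f"score out of range 0-100: {score}")
    if score <= 30:
        return 0, "Unacceptable"
    if 40 <= score <= 70:
        return 1, "Needs Improvement"
    if score == 75:
        return 2, "Average"
    if 80 <= score <= 99:
        return 3, "Good"
    if score == 100:
        return 4, "Excellent"
    return None  # score lies in a gap between the listed bands
```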

The ratings were then analyzed with SPSS version 19 to determine the percent agreement among raters. The higher the percent agreement, the more reliable the raters.

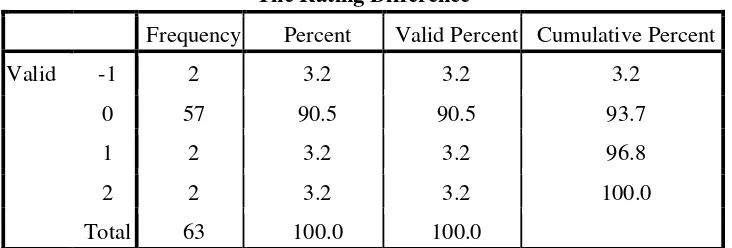

The table below presents the inter-rater agreement results, which were calculated statistically with SPSS version 19.

Table 3.4 Frequency Table of Inter-Rater Agreement

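The Rating Difference

| Rating difference | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|
| -1 | 2 | 3.2 | 3.2 | 3.2 |
| 0 | 57 | 90.5 | 90.5 | 93.7 |
| 1 | 2 | 3.2 | 3.2 | 96.8 |
| 2 | 2 | 3.2 | 3.2 | 100.0 |
| Total | 63 | 100.0 | 100.0 | |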

agreement. A nonzero value indicates that the raters perceived an essay differently somewhere in the scoring, that is, a disagreement. However, such disagreements were infrequent, amounting to only about 9.5% of the cases (6 of 63).

Below is the table that shows how Kappa can analyze the percentage of count and

expected count of agreement. Prior to the calculation, the researcher grouped the

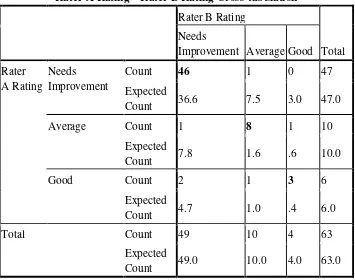

[image:55.612.133.488.405.685.2]six raters into two pairs: rater A consists of rater 1, 3 and 5; rater B consists of rater 2, 4, and 6. The idea behind grouping these raters was because the valid percent in the previous table above showed a high percentage of validity, thus all raters are relatively comparable.

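Table 3.5 Cross-tabulation Percent of Agreement

Rater A Rating * Rater B Rating Cross-tabulation

| Rater A rating | | Rater B: Needs Improvement | Rater B: Average | Rater B: Good | Total |
|---|---|---|---|---|---|
| Needs Improvement | Count | 46 | 1 | 0 | 47 |
| | Expected Count | 36.6 | 7.5 | 3.0 | 47.0 |
| Average | Count | 1 | 8 | 1 | 10 |
| | Expected Count | 7.8 | 1.6 | .6 | 10.0 |
| Good | Count | 2 | 1 | 3 | 6 |
| | Expected Count | 4.7 | 1.0 | .4 | 6.0 |
| Total | Count | 49 | 10 | 4 | 63 |
| | Expected Count | 49.0 | 10.0 | 4.0 | 63.0 |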

The expected count is the number of agreements expected by chance under the null hypothesis that the two raters’ ratings are independent. If the observed count is higher than the expected count, the raters agree above chance. From the cross-tabulation table above, the expected count for agreement in the Needs Improvement category was 36.6, whereas the two raters actually agreed on 46 cases, which means agreement was above chance. In the Average category, the expected count was 1.6, but the two raters agreed on 8 cases, so agreement in this category was also above chance. Disagreements between Average and Needs Improvement were rare: in one case Rater A rated an essay as Average while Rater B rated it as Needs Improvement, and in one case the reverse occurred. In the Good category, the expected count was .4, while the two raters agreed on 3 cases. From the table above, it can be concluded that the level of agreement was good because the raters agreed beyond chance. Kappa then expresses this above-chance agreement as a single coefficient, presented in the table below.

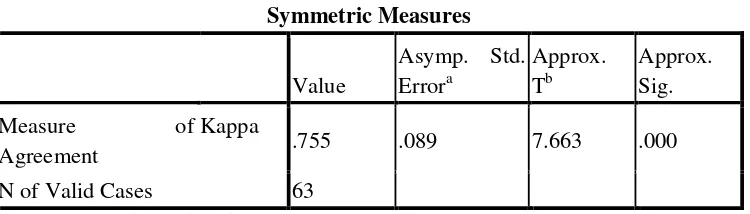

Table 3.6 Symmetric Measures

| | Value | Asymp. Std. Error(a) | Approx. T(b) | Approx. Sig. |
|---|---|---|---|---|
| Measure of Agreement: Kappa | .755 | .089 | 7.663 | .000 |
| N of Valid Cases | 63 | | | |

a. Not assuming the null hypothesis.

b. Using the asymptotic standard error assuming the null hypothesis.
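Both quantities discussed in this section, the expected counts and the Kappa value, can be recomputed from the observed counts in Table 3.5. A minimal pure-Python sketch, assuming the standard Cohen's kappa definition (observed agreement minus chance agreement, divided by one minus chance agreement); it is an independent check, not the SPSS procedure itself, and the names are illustrative.

```python
# Observed counts from Table 3.5 (rows: Rater A, columns: Rater B).
CATEGORIES = ("Needs Improvement", "Average", "Good")
OBSERVED = [
    [46, 1, 0],
    [1, 8, 1],
    [2, 1, 3],
]

def expected_counts(table):
    """Chance-expected count for each cell: row total * column total / N."""
    n = sum(map(sum, table))
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    return [[r * c / n for c in col_totals] for r in row_totals]

def cohens_kappa(table):
    """Cohen's kappa for a square table of agreement counts."""
    n = sum(map(sum, table))
    p_observed = sum(table[i][i] for i in range(len(table))) / n
    expected = expected_counts(table)
    p_expected = sum(expected[i][i] for i in range(len(table))) / n
    return (p_observed - p_expected) / (1 - p_expected)

if __name__ == "__main__":
    for label, row in zip(CATEGORIES, expected_counts(OBSERVED)):
        print(label, [round(x, 1) for x in row])
    print("kappa =", round(cohens_kappa(OBSERVED), 3))
```

With these counts, observed agreement is 57/63 (about 0.905) and chance agreement is 2427/3969 (about 0.611), giving kappa of about 0.755, in line with Table 3.6; the rounded expected counts reproduce the Expected Count rows of Table 3.5.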

3.6 Data Treatment

In running one-way ANOVA, there are five assumptions about the data that should not be violated if the results of the ANOVA are to be supported (Setiadi 2006: 173). They are:

1. There is only one dependent variable and one independent variable with three or more levels. In this research, the dependent variable is the raters’ scores and the independent variable is the essay format, with three treatments: handwritten, single-spaced 12-point TNR font, and double-spaced 14-point TNR font. So, the first assumption is not violated.

2. The dependent variable should be measured at the interval/ratio level. In this study, the dependent variable is continuous: the scores awarded by raters, ranging from 0 to 100. Therefore, the second assumption is met.

3. The comparison is between groups. In this research, the groups being compared are the levels of the independent variable (the three essay formats). So, the third assumption is met.

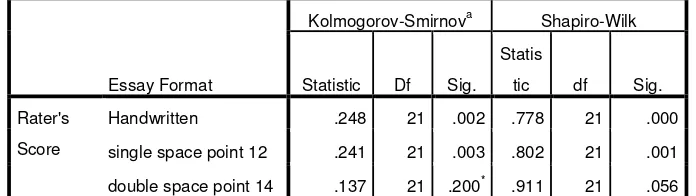

4. The dependent variable should be approximately normally distributed for each category of the independent variable. In this research, the researcher employed the Shapiro-Wilk test of normality.

Table 3.7 Test of Normality

Tests of Normality (dependent variable: Rater's Score)

| Essay Format | Kolmogorov-Smirnov(a) Statistic | df | Sig. | Shapiro-Wilk Statistic | df | Sig. |
|---|---|---|---|---|---|---|
| Handwritten | .248 | 21 | .002 | .778 | 21 | .000 |
| single space point 12 | .241 | 21 | .003 | .802 | 21 | .001 |
| double space point 14 | .137 | 21 | .200* | .911 | 21 | .056 |

a. Lilliefors Significance Correction

*. This is a lower bound of the true significance.

The table above presents the results of the Shapiro-Wilk test of normality. For the "handwritten" and "single space point 12" format groups, the dependent variable "raters' scores" deviated from normality, as shown by significance values below 0.05 (.000 and .001, respectively). Only the "double space point 14" group met this assumption (Sig. = .056). The data therefore do not follow a normal distribution overall. Fortunately, ANOVA requires only approximately normal data because it is fairly "robust" to violations of normality, meaning that this assumption can be somewhat violated while the test still provides valid results.

5. The sample size is not too small (at least 5 observations for each cell). In this research, each category has 21 observations. So, the last assumption is not violated.

3.7 Hypothesis Testing

The hypotheses were tested statistically using one-way ANOVA at the 0.05 significance level: if p > 0.05, H0 is accepted; if p < 0.05, H1 is accepted.

H0 : There is no difference in raters’ scores between essays presented in handwritten format and essays presented in computer-text format;

H1 : There is a difference in raters’ scores between essays presented in handwritten format and essays presented in computer-text format;

H0 : The length of essay does not eliminate the presentation effect;

H1 : The length of essay eliminates the presentation effect.
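The decision rule above can be illustrated without SPSS. The following is a minimal pure-Python sketch of a one-way between-groups ANOVA; the helper names are hypothetical. The p-value uses the closed-form upper tail of the F distribution, (1 + 2F/df_within)^(-df_within/2), which holds only when the between-groups degrees of freedom equal 2, that is, exactly three groups as in this study.

```python
from statistics import fmean

def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    # Between-groups sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-groups sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

def p_value_three_groups(f_value, df_within):
    """Upper-tail p-value of F(2, df_within); valid ONLY when df_between == 2."""
    return (1 + 2 * f_value / df_within) ** (-df_within / 2)

def decide(p_value, alpha=0.05):
    """Decision rule of Section 3.7: accept H1 when p < alpha, otherwise H0."""
    return "H1 accepted" if p_value < alpha else "H0 accepted"

if __name__ == "__main__":
    # Three formats x 21 essays = 63 scores, so df = (2, 60).
    p = p_value_three_groups(5.5, 60)  # F-value reported in Chapter V
    print(round(p, 4), decide(p))
```

With three formats of 21 scores each, df = (2, 60); for the F-value of 5.5 reported in Chapter V, the sketch gives p of about 0.0064, consistent with the reported p value of 0.006 and leading to acceptance of H1 under the rule above.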

V. CONCLUSIONS AND SUGGESTIONS

This chapter presents the conclusions and suggestions of this research. The researcher presents two main points from the findings and three suggestions for improvement.

5.1 Conclusions

a. The different modes of presentation resulted in different scores because of a factor called the presentation effect. This research revealed a statistically significant difference between the scores awarded to the original handwritten essays and those awarded to the single-spaced and double-spaced computer versions. The F-value was higher than the F-table value at the significance level of p < .05: in this research, the F-value was 5.5 and the F-table value was 5.05, which means F-value > F-table at p < .05. The results indicate that the null hypothesis was rejected and the research hypothesis was accepted.

b. The length of the essays neither eliminated nor reduced the presentation effect. The mean score of the essays in the double-spaced version was lower than the mean

5.2 Suggestions

a. Future researchers are advised to choose a different essay topic when comparing the two modes of presentation, since a different topic might yield different results. Further, future researchers need to analyze the causes of the presentation effect on raters’ scores more deeply. If the effect is caused by the visibility of errors, what happens if neither format contains errors? Future researchers might correct any spelling, punctuation, or capitalization mistakes in the transcribed version of the handwritten essays so that raters receive both formats error-free. Such an investigation should establish whether the presentation effect still holds.

b. It is important that the issue of comparability between administration modes continue to be explored to ensure that the tests remain a valid measure of language proficiency. Such exploratory studies should focus on the comparability of computer-based (CB) and paper-based (PB) writing assessment.

c. Future teachers, raters, or assessors are advised to be more aware of the presentation effect when scoring students’ responses in any format, to maintain the reliability of scoring.

REFERENCES

Anderson, L., and Krathwohl, D. 2001. A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York: Longman.

Arnold, V., Legas, J., Obler, S., Pacheco, M.A., Russell, C. and Umbdenstock, L. 1990. Do students get higher scores on their word-processed papers? A study of bias in scoring hand-written versus word processed papers.

Arter, J. 2000. Rubrics, scoring guides, and performance criteria: Classroom tools for assessing and improving student learning. (ERIC Document Reproduction Service No. ED446100). Retrieved April 20, 2014 from http://www.cel.cmich.edu/ontarget/feb03/Rubrics.htm

Bangert-Drowns, R. 1993. The word processor as an instructional tool: A meta-analysis of word processing in writing instruction. Review of Educational Research, 63, 69–93.

Bloom, B. 1956. Taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. New York: D