A simple algorithm to enrich eLectures with instructor notes

Nael Hirzallah & Wafi AlBalawi & Ahmad Kayed & Sawsan Nusir

Published online: 3 September 2009

© Springer Science + Business Media, LLC 2009

Abstract One of the devices that may help in content development for eLearning services is the Electronic Copyboard (e-board). Once available in a classroom, it allows the lecturer's notes to be extracted in an electronic, as-in-class format. This paper introduces an alternative solution to e-boards that is much cheaper and much simpler to install. It can be implemented using a web camera and a video processing algorithm that extracts the notes added or removed by the lecturer. The extracted notes, together with the presented slides and the recorded lecturer voice, may compose a complete as-in-class electronic form of the lecture (eLecture). This paper first presents the proposed Notes Extraction Algorithm and runs a few experiments in which notes are added to a traditional white board under various scenarios. The algorithm takes brightness variations in the video into consideration when detecting the notes, since such variations normally occur, for instance, due to the lecturer's movements in front of the white board. Second, the paper presents an authoring tool that includes the proposed algorithm to extract notes and combines them with video and slides, if they exist, to generate eLectures that can be viewed using popular players.

Keywords eLearning · Multimedia education · eLectures

DOI 10.1007/s11042-009-0343-3

N. Hirzallah · W. AlBalawi · A. Kayed

Fahad Bin Sultan National University, Tabuk, Kingdom of Saudi Arabia

W. AlBalawi

Computer Engineering Department Chair, College of Computing, Fahad Bin Sultan National University, Tabuk, Kingdom of Saudi Arabia

1 Introduction

Not only are most educational institutions moving towards eLearning to elevate the quality of education, but many countries have also already enforced eLearning standards and implementation requirements on their institutions. One part of a traditional lecture that must be considered in content development for eLearning is the lecturer's notes written during the lecture. During such lectures, the instructor often needs to write comments or draw sketches to highlight or elaborate on points he/she is explaining to the students. Meanwhile, some students copy what is being written on the board in front of them, whether a white or a black board, and some do not. One reason many students do not copy anything during a lecture may be to stay fully focused on the subject being delivered.

Thus, getting a copy of the notes written during a lecture, without the hassle of copying them down, is a feature both groups of students would love to have right after the lecture. It allows students to verify the correctness of what they have copied, to stay focused during the lecture, or to recall hints when they look at the notes in a format similar to the one presented by the instructor. Electronic Copyboards, or e-boards for short, could therefore be the ideal solution to satisfy this need. E-boards are electronic devices that resemble a white board. They capture notes and images from the marker board and give the choice of printing out the notes, saving a digital copy to optional portable media (CompactFlash or a USB memory stick), or transferring files directly to a computer via USB or even wirelessly.

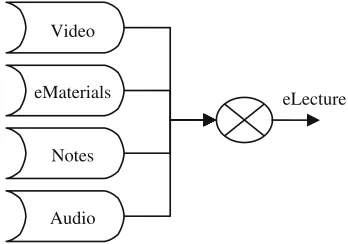

Beyond making the notes available to students, a further step is to offer the complete lecture in an electronic format (eLecture for short). Figure 1 shows the typical components of an eLecture.

Systems that create electronic course content during a normal lecture have been developed for this purpose, such as TTT [7] and AOF [6]. Their major advantage is the reduced cost of creating electronic course content.

This concept of content production by presentation recording was called "Authoring on the Fly" (AOF). Its main objective is to automatically capture live lectures and presentations in classrooms and lecture halls, make them available as eLectures in a variety of output formats for access over the Internet, and use them as kernels of web-based learning modules. The AOF concept, which started in the mid-1990s, has been realized in several prototype systems over the last few years and used in many institutions. This is because lightweight content production through presentation recording, instead of traditional authoring systems, has become an increasingly popular way of creating instructional media for offline use: it makes use of the educational potential that already exists at universities and companies. Teachers are normally well experienced in face-to-face education and training. Nowadays, live events such as training sessions in companies or universities are in many cases already based on some kind of electronic material, such as PowerPoint slides, digital images, and video clips. The slides used in the live event are graphically annotated and verbally explained by the presenter. Hence, it was natural to exploit the effort already invested in live events for the automatic production of instructional content for later offline use as well.

In [3], a Screen Capturing Algorithm was presented. It takes into consideration animations and annotations inserted by the instructor during a lecture. For it to cover all parts of the lecture, in particular the notes, the instructor has to insert soft notes rather than hard notes, i.e., notes must be added through the presentation application, such as PowerPoint, and not on the board.

Other AOF systems focus on the electronic presentation (such as PowerPoint), the audio recorded from the presenter, and the video input from a camera recording what is going on. A major disadvantage of using such video recordings to extract or view the notes written on the board is the tradeoff between keeping the video file small and keeping the notes readable. If the resolution is high enough to capture the notes on the board, the video file becomes extremely large even when compression is used; if the resolution is kept low, the notes may not be readable. Thus, again, supplementing such AOF systems with e-board output representing the hard notes written by the instructor would be the ideal solution.

Having established the need for e-boards, which can play a major role both in providing printed notes to students and as part of AOF systems, this paper presents an alternative to using e-boards. The proposed system is expected to overcome many of the major disadvantages that e-boards usually suffer from. One disadvantage is the high cost of e-boards, which exceeds a thousand and sometimes ten thousand dollars, compared with the low cost of the PC camera needed by the proposed system. The portability of the proposed system allows the instructor to carry it wherever he/she plans to give the lecture, far more easily than carrying an e-board. The time and effort required to install e-boards is yet another issue to consider. Furthermore, instructors who prefer traditional blackboards can no longer use them once e-boards are installed, whereas with the proposed system they still can. Finally, adding the ability to offer a slide show alongside note recording raises the cost of e-boards even further, while it does not affect the proposed system.

The next section explains the basis of the proposed system with the aid of a few simulated samples. Section 3 discusses a few challenges that would prevent the system from achieving good results, thereby justifying a few enhancements to overcome them. A section analyzing the results of a few experiments precedes a section on how to package the system with an AOF system to generate eLectures using SMIL. Finally, the conclusion section summarizes the contribution of this paper.

2 The algorithm

Having discussed the major drawbacks of e-boards in the previous section, the system presented in this section is expected to be cheap, easy to install, portable, and applicable to both white and black boards, with or without a slide show.

On the other hand, one cannot hide the fact that many of the mentioned disadvantages are being overcome by recent e-board technology. E-board prices are decreasing while the features provided are increasing. For instance, there are portable e-boards consisting of two flexible bars mounted on the two vertical edges of a traditional white board; sensors embedded in these bars track the movements of the markers. Thus, the proposed solution may no longer be viewed as a permanent alternative to e-boards, but at least as an ad-hoc one for situations where e-boards are not available.

The Notes Extraction Algorithm (NEA) proposed in this section considers several challenges: notes may be added as well as erased; the instructor often moves back and forth, covering the board; the board brightness may change due to light reflections from the instructor or from other objects such as curtains, clouds, and trees; and the color of the markers may change. The basic idea of the algorithm is presented first, and the necessary enhancements are then introduced gradually.

To show the notes of a lecture being written on a board, periodic snapshots from a camera recording the full board may be more than enough. However, such snapshots, even if compressed, may be large in total for a 40-minute lecture. Thus, instead of taking snapshots blindly, two further steps are applied. The first is to consider only significant snapshots, in which notes are believed to have been added or erased while the instructor is expected to be absent. The second is to include only the differences between these consecutive snapshots. In other words, the first image is saved as layer zero, while the subsequent information represents the additions as layers one, two, and so on. By doing so, the overall size of this sequence remains acceptable.

To explain this in detail, Fig. 2 presents the main steps of the algorithm:

1. Take the first snapshot of the board just before the lecture starts, and name it Snapshot at 0, or $S_0$.

2. Run edge detection on it to get the Edge frame at 0, or $E_0$.

3. Periodically capture a snapshot, say every $t$ seconds, and name it $S_i$, where $i = 1, 2, \ldots$

4. Let $\Delta_i$ be the difference between $S_i$ and the original snapshot $S_0$.

5. If $\Delta_i$ is small but not zero, run edge detection on $S_i$ to output $E_n$, where $n = 1, 2, 3, \ldots$; otherwise, skip $S_i$ unless it is considered a New Start. The New Start is an event that is explained towards the end of this section.

6. Generate the Difference frame at instant $n$, or $D_n$, which is the complement of the intersection of $E_{n-1}$ and $E_n$, i.e. $D_n = (E_{n-1} \cap E_n)^c$. The complement is taken within the union, so $D_n$ contains the elements that are in the union of $E_{n-1}$ and $E_n$ but not in their intersection.

7. Generate the Modified Difference frame at instant $n$, or $M_n$, by coloring $D_n$ as follows: edges found in $E_{n-1}$ but not in $E_n$ are colored white, while edges found in $E_n$ but not in $E_{n-1}$ keep their original color.

8. Output the Final frame ($F_n$ frame), $F_n = F_{n-1} \cup M_n$, i.e. $M_n$ overlaid on $F_{n-1}$.

To understand step 7 better, assume that $E_0$ (the Edge frame at instant 0) is shown on the left-hand side of Fig. 3, while $E_1$ is shown on the right-hand side. Note that the following few figures are drawn using Microsoft Paint for the sake of argument and do not reflect the camera output.

$E_0 \cap E_1$ yields everything but the letter R; thus, its complement is the letter R.

Fig. 2 Algorithm basic flow chart

Fig. 3 First two edge frames of

If, for instance, $E_2$ appears as in Fig. 4, then $E_1 \cap E_2$ yields everything except the letters R and e, and its complement is the letters R and e. In this case, R is colored white (meaning that R has been erased) and e is colored black, since they appear only in $E_1$ and only in $E_2$, respectively.
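Steps 6 to 8, together with the R/e walk-through just given, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' C# implementation: each edge frame is assumed to be already reduced to a set of (row, col) edge pixels, the snapshot color of each edge pixel is assumed to be available in a dictionary, and a final frame is modeled as a dictionary from pixel to RGB color. The names `difference_frame`, `modified_difference`, and `update_final` are hypothetical.

```python
from typing import Dict, Set, Tuple

Pixel = Tuple[int, int]          # (row, col) of an edge pixel
Color = Tuple[int, int, int]     # RGB triple
WHITE: Color = (255, 255, 255)
BLACK: Color = (0, 0, 0)


def difference_frame(e_prev: Set[Pixel], e_curr: Set[Pixel]) -> Set[Pixel]:
    """Step 6: D_n = (E_{n-1} ∩ E_n)^c, complement taken inside E_{n-1} ∪ E_n."""
    return (e_prev | e_curr) - (e_prev & e_curr)


def modified_difference(e_prev: Set[Pixel], e_curr: Set[Pixel],
                        colors: Dict[Pixel, Color]) -> Dict[Pixel, Color]:
    """Step 7: erased edges (only in E_{n-1}) become white; added edges
    (only in E_n) keep the color they have in the current snapshot."""
    return {p: WHITE if p in e_prev else colors[p]
            for p in difference_frame(e_prev, e_curr)}


def update_final(f_prev: Dict[Pixel, Color],
                 m: Dict[Pixel, Color]) -> Dict[Pixel, Color]:
    """Step 8: overlay M_n on F_{n-1}; pixels present in M_n take precedence."""
    f = dict(f_prev)
    f.update(m)
    return f


# Toy version of the R / e example: a two-pixel "board", then R, then R erased and e added.
board = {(0, 0), (0, 1)}
E0, E1, E2 = board, board | {(5, 5)}, board | {(7, 7)}
colors = {p: BLACK for p in E0 | E1 | E2}

F0 = {p: BLACK for p in E0}
F1 = update_final(F0, modified_difference(E0, E1, colors))
F2 = update_final(F1, modified_difference(E1, E2, colors))
```

In `F2`, the pixel standing in for R has turned white (erased) while the pixel standing in for e is black, matching the walk-through of Figs. 3 and 4.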

The generated output is similar in concept to the MPEG Group of Pictures (GoP), in which the I-frame is similar to a JPEG image while the P- and B-frames are predictive and bidirectional frames that mainly carry motion vectors and prediction residuals. Likewise, the output frame of the algorithm at instant $n$, namely $F_n$, equals $S_0$, a compressed full image, with $M_1$ to $M_n$ added to it, which mainly represent the differences in the notes.

The threshold value $T_s$ shown in the flow chart, against which the difference between snapshots is compared, must be set appropriately so that snapshots containing the moving instructor are excluded while significant notes are not missed. The algorithm is tolerant to some extent: even if the instructor's body is captured, it will be removed (erased) in the next $M_n$ frame or so, since it disappears once the instructor moves out of the frame.

The New Start, also mentioned in the flow chart, is usually triggered manually when the complete background changes. It is used when the instructor points the camera towards the slide show screen used by the projector; that is, the board considered by the algorithm is the screen itself. In this case, the notes entered by the instructor could be either hard notes, i.e. written on the screen itself, or soft notes added through the presentation application, such as with PowerPoint's pointer options. Therefore, in this scenario, a New Start is triggered each time the instructor moves to the next slide.

A snapshot taken from the camera can be seen in Fig. 5. It is worth noting again that the higher the camera resolution, the better the results.

In a typical setup, the instructor's body occupies about 1/5 of the frame, so $T_s$ can be set as high as 20% of the frame size. It is also worth noting that the instructor must leave the frame once in a while so the algorithm gets the chance to extract the notes written on the board. Otherwise, the algorithm keeps skipping the periodic snapshots in which the instructor is present, the notes accumulate, and the time until the next M frame becomes long. This assumption can, however, be relaxed by splitting the frame vertically into two or more parts, as shown in Fig. 6, and treating each part as an individual frame.
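The threshold test of step 5 and the vertical split can be sketched as below. The paper does not define how $\Delta_i$ is measured, so this sketch assumes it is the fraction of pixels whose gray level changed by more than a per-pixel tolerance (`pixel_tol`, an assumed parameter). Snapshots are plain lists of gray-level rows, the reference snapshot `s_ref` stands for $S_0$, and all function names are hypothetical.

```python
from typing import List

Gray = List[List[int]]  # grayscale snapshot: one list of 0..255 values per row


def change_ratio(s_ref: Gray, s_i: Gray, pixel_tol: int = 30) -> float:
    """Assumed measure of Δ_i: the share of pixels whose gray level moved by
    more than pixel_tol between the reference snapshot and S_i."""
    total = changed = 0
    for row_ref, row_i in zip(s_ref, s_i):
        for a, b in zip(row_ref, row_i):
            total += 1
            if abs(a - b) > pixel_tol:
                changed += 1
    return changed / total if total else 0.0


def is_significant(delta: float, ts: float = 0.20) -> bool:
    """Step 5: keep a snapshot only when Δ_i is non-zero but below T_s."""
    return 0.0 < delta < ts


def split_vertical(frame: Gray, parts: int) -> List[Gray]:
    """Cut a frame into `parts` vertical strips of (nearly) equal width."""
    width = len(frame[0])
    cuts = [round(k * width / parts) for k in range(parts + 1)]
    return [[row[cuts[k]:cuts[k + 1]] for row in frame] for k in range(parts)]


def significant_strips(s_ref: Gray, s_i: Gray, parts: int,
                       ts: float = 0.20) -> List[int]:
    """Indices of the vertical strips that pass the T_s test on their own."""
    strips = zip(split_vertical(s_ref, parts), split_vertical(s_i, parts))
    return [k for k, (a, b) in enumerate(strips)
            if is_significant(change_ratio(a, b), ts)]


# Toy 4x10 board: the instructor covers the left half and a new stroke
# appears on the right half.
board = [[200] * 10 for _ in range(4)]
snap = [row[:] for row in board]
for r in range(4):
    for c in range(5):
        snap[r][c] = 60
snap[1][8] = 0
```

Taken as a whole, this toy snapshot changes by 21/40 = 52.5% and fails the $T_s$ test, so it would be skipped; split into two strips, the right strip changes by only 1/20 = 5% and passes, so its new stroke can still be processed.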

Fig. 4 $E_2$ of the simulating

Moreover, as some e-boards also assume, notes written on the board must be thick for this algorithm to work well. Finally, both the camera and the board must be completely fixed, e.g. mounted on a wall, allowing no movement, however small.

3 Analysis of the algorithm

The algorithm explained in the previous section seems very simple; however, once it is tested on real scenarios, many challenges appear. The most significant is inter-frame brightness instability. Since the instructor often moves back and forth, within or beyond the frame, changing the light reflections projected on the board, many areas within the frame suffer changes in brightness. The proposed algorithm is less sensitive to this while the instructor is within the frame, since it skips those frames; however, for frames where the instructor is expected to be outside the frame, brightness plays an essential role in the value of $\Delta$ (shown at the bottom of the flow chart in Fig. 2) as well as in the E frames. Light reflections may be caused not only by the instructor's body but also by other objects such as moving curtains or students. To reduce this negative effect, an attempt was made to remove the brightness component and work on the I and Q components of the YIQ color space. However, applying noise filtration to the E frames proved more successful. Details of the noise filtration used are discussed later in this section.

Fig. 5 A sample snapshot

The second challenge was choosing an appropriate edge detection method. Edge detection is normally applied to still images; when it is applied to two similar frames within a video stream, one may get two different results, depending on the edge detection algorithm used. Edges in images are areas with strong intensity contrast, i.e. a jump in intensity from one pixel to the next. There are many ways to perform edge detection, but most of them can be grouped into two categories: gradient and Laplacian.

The gradient method detects edges by looking for the maximum and minimum of the first derivative of the image. A pixel location is set to '1', i.e. declared an edge location, if the value of the gradient exceeds some threshold. Edge pixels have larger gradient magnitudes than the pixels surrounding them, so once a threshold is set, the gradient value can be compared with it and an edge detected whenever the threshold is exceeded. Furthermore, when the first derivative is at a maximum, the second derivative is zero. Another way to locate an edge is therefore to find the zero crossings of the second derivative; this is known as the Laplacian method. A zero-crossing edge operator based on the Laplacian method was originally proposed by Marr and Hildreth [5], while gradient-based operators (Prewitt, Sobel, and Roberts) are used in [2].
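A gradient detector of the kind just described, using the standard 3×3 Sobel kernels, can be sketched in pure Python. This is an illustration of the gradient-plus-threshold idea rather than the authors' code: the magnitude threshold is an assumed parameter, border pixels are simply left at 0, and `sobel_edges` is a hypothetical name.

```python
from typing import List

Gray = List[List[int]]    # grayscale image, rows of 0..255 values
Binary = List[List[int]]  # 1 = edge pixel, 0 = background

# 3x3 Sobel kernels for the horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(img: Gray, threshold: float) -> Binary:
    """Mark a pixel as an edge when its gradient magnitude exceeds threshold."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):          # the one-pixel border is left at 0
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = img[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * v
                    gy += SOBEL_Y[i][j] * v
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                out[r][c] = 1
    return out


# A vertical step from dark (0) to bright (255) between columns 2 and 3.
step = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_edges(step, threshold=100)
```

Only the two columns adjacent to the intensity jump are marked; the flat regions on either side have zero gradient and stay 0.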

In the proposed algorithm, the Sobel method [2] is used to detect edges. Again, due to the brightness instability mentioned earlier, false edges may be detected once in a while. These appear as noise in the D frames (the complement of the intersection of two E frames). To solve this issue, two modifications are introduced to the algorithm. The first is to apply noise filtration after detecting the edges. This filtration counts the neighboring pixels within a 5×5 block: if the number of neighboring pixels equal to '1' (i.e. representing edges, or black) exceeds 19, the pixel in question is set to '1'; otherwise, it is set to '0'.
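The 5×5 noise filtration just described can be sketched as follows. Two details are assumptions because the text leaves them open: the count excludes the center pixel (so at most 24 neighbors are counted), and pixels outside the image count as 0. `filter_noise` is a hypothetical name.

```python
from typing import List

Binary = List[List[int]]  # 1 = edge (black), 0 = background


def filter_noise(edges: Binary, min_count: int = 19, radius: int = 2) -> Binary:
    """Set a pixel to 1 only if more than min_count of its neighbors in the
    (2*radius+1) x (2*radius+1) block are 1; otherwise set it to 0."""
    h, w = len(edges), len(edges[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            count = 0
            for rr in range(max(0, r - radius), min(h, r + radius + 1)):
                for cc in range(max(0, c - radius), min(w, c + radius + 1)):
                    if (rr, cc) != (r, c) and edges[rr][cc] == 1:
                        count += 1
            out[r][c] = 1 if count > min_count else 0
    return out


# A thick 7x7 stroke plus one isolated false edge.
img = [[0] * 12 for _ in range(12)]
for r in range(2, 9):
    for c in range(2, 9):
        img[r][c] = 1
img[10][10] = 1
clean = filter_noise(img)
```

The isolated pixel is removed, while the core of the thick stroke survives. With the threshold as stated, the filter also erodes the border of the stroke (its corner pixel is cleared), which is consistent with the requirement that notes be thick.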

The second modification is to use a bidirectional intersection, similar to the bidirectional B-frame in MPEG. That is, $D_n$ in the flow chart of Fig. 2 no longer compares $E_{n-1}$ with $E_n$ alone; instead, it becomes the complement of the intersection of $E_{n-1}$ (the first part) with the intersection of $E_n$ and $E_{n+1}$ (the second part). Thus, $D_n$ becomes:

$$D_n = \big(E_{n-1} \cap (E_n \cap E_{n+1})\big)^c$$

By doing this, and assuming that noise is unlikely to appear in the same area in consecutive significant snapshots, the new formula outputs only the real edges, i.e. the notes added at instant $n$, while noise and false edges are reduced or eliminated. To understand this, consider Fig. 7.

To get $D_1$, we first intersect $E_1$ and $E_2$, which yields the board frame and the letter R, removing many of the false edges (noise) as well as the letter e. Intersecting the result with $E_0$ yields everything but R. The complement of this, i.e. the resulting $D_1$, is just the letter R together with whatever noise is left over from $E_1$. Consequently, $F_1 = F_0 \cup M_1$, where $M_1$ is $D_1$ with colored edges, i.e. the letter R in black, as in Fig. 8.
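The bidirectional difference frame can be sketched with Python sets, again assuming that edge frames are sets of (row, col) pixels and taking the complement inside the union of the two operands, as in step 6. The pixel coordinates below are a made-up miniature of the Fig. 7 scenario, and `bidirectional_difference` is a hypothetical name.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]  # (row, col) of an edge pixel


def bidirectional_difference(e_prev: Set[Pixel], e_curr: Set[Pixel],
                             e_next: Set[Pixel]) -> Set[Pixel]:
    """D_n = (E_{n-1} ∩ (E_n ∩ E_{n+1}))^c, complement taken inside
    E_{n-1} ∪ (E_n ∩ E_{n+1})."""
    stable = e_curr & e_next  # edges present in two consecutive snapshots
    return (e_prev | stable) - (e_prev & stable)


board = {(0, 0), (0, 1), (0, 2)}
letter_r = {(5, 5), (5, 6)}
E0 = board
E1 = board | letter_r | {(9, 1)}   # R plus a noise pixel
E2 = board | letter_r | {(9, 7)}   # R persists; the noise moved elsewhere

D1 = bidirectional_difference(E0, E1, E2)

# Drawback case: R is erased again at n = 2, so it never becomes stable.
D1_lost = bidirectional_difference(E0, E1, board | {(9, 7)})
```

`D1` contains only the pixels of R; the moving noise pixel is dropped because it does not appear in both $E_1$ and $E_2$. `D1_lost` is empty, which illustrates the drawback of this formula: a note that survives only one significant snapshot is never captured.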

To keep $F_n$ further free of false edges, the initial Edge frame $E_0$ is not taken as just the edges of $S_0$, but as

$$E_0 = E_0 \cap E_{-1},$$

where, on the right-hand side, $E_0$ and $E_{-1}$ are the edge frames of the two initial snapshots; that is, the refined $E_0$ is the intersection of the edges of the two initial snapshots.

The only drawback arises when notes are added at instant $n$ but erased at instant $n+1$: these notes are lost for good. Hence, notes that do not last for at least two significant snapshots are not captured. This can be seen in the case above if the scenario is changed so that the letter R is erased from the board at $n = 2$. Since intersecting $E_1$ and $E_2$ may then yield just the board frame and no letters, $D_1$ equals $\varnothing$, and thus $F_1$ is the same as $F_0$. The outcome is different if R lasts for another significant snapshot. One may counter this drawback, however, by arguing that notes which disappear quickly are insignificant anyway, so losing them does no harm.

Fig. 7 The simulating example with noise

Fig. 8 $F_1$ of the simulating

Finally, because edge detection is used, the algorithm may miss significant color fillings made by the instructor. For example, if the instructor draws a solid circle, the edge detection process may capture only the outline of the circle. For that reason, and since the algorithm only seeks to output M (or F) frames, an attempt was made to apply binarization to the grayscale version of the snapshot instead of edge detection, while keeping the noise filtration. This change produces results similar to those of edge detection when no fillings exist, but it does not miss solid drawings. However, fillings are not frequently used by instructors; when they are, parallel or semi-parallel lines are usually drawn to fill an object on the board, and these lines are captured through edge detection anyway. Therefore, no further enhancement was applied to the algorithm.
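The binarization alternative can be sketched as a fixed global threshold on the grayscale snapshot. The threshold value and the `dark_ink` switch (dark marker on a white board versus bright chalk on a black board) are assumptions, since the paper does not specify which binarization method was tried; `binarize` is a hypothetical name.

```python
from typing import List

Gray = List[List[int]]
Binary = List[List[int]]


def binarize(gray: Gray, threshold: int = 128, dark_ink: bool = True) -> Binary:
    """1 marks ink: pixels darker than threshold for markers on a white board,
    brighter than threshold for chalk on a black board."""
    if dark_ink:
        return [[1 if v < threshold else 0 for v in row] for row in gray]
    return [[1 if v > threshold else 0 for v in row] for row in gray]


# A solid dark 3x3 "filled shape" on a white board: every interior pixel is
# kept, whereas a gradient edge detector would leave its uniform center unmarked.
board = [[255] * 5 for _ in range(5)]
for r in range(1, 4):
    for c in range(1, 4):
        board[r][c] = 20
ink = binarize(board)
```

A single global threshold is the simplest choice; under the brightness variations discussed earlier, a locally adaptive threshold would likely be needed in practice.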

4 Experiments

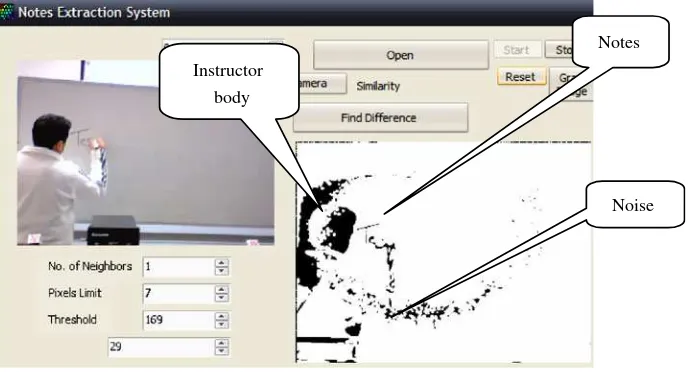

The modified algorithm was tested on various scenarios that use white boards. Figure 9 shows one of the experiments with the threshold test relaxed, i.e. $T_s$ set very large, to show what the frames look like when the instructor is within the frame. The frame on the right shows a D frame when the instructor is present. The noise caused by light reflections from the instructor's body is easily visible. This noise, unlike the notes, keeps moving or vibrating. Even if some of it stays after the instructor moves out, the intersection with another E frame is enough to remove unstable noise.

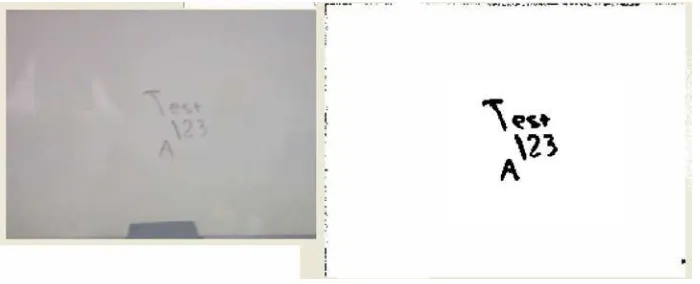

Figure 10 shows a single M frame generated once the instructor moves out of the frame shown previously in Fig. 5.

The above figure illustrates that the written notes have been successfully extracted; however, the missing parts of the letter E are easily noticeable, as are a few leftover noise pixels.

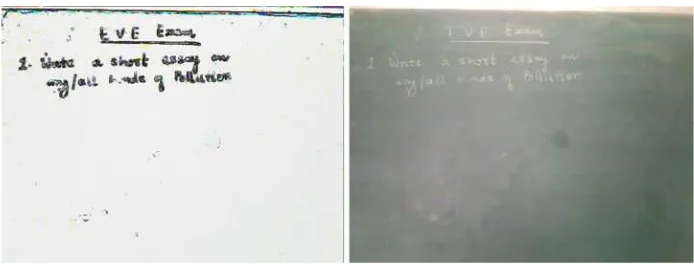

Figure 11 shows, on the right, another M frame generated once the instructor moves out of the frame. Note that these are M frames and not F frames; otherwise, the white board and other objects, such as the rear end of the computer case on the left of Fig. 11, would be shown.

Another experiment was performed, this time using a black board. As with the white board, the F frames were extracted successfully, as shown in Fig. 12.

The black board suffers from the fact that its color slowly turns whiter as it is used more and more during the same lecture. Furthermore, unlike with the white board, it is very normal for the instructor to leave writing residue spread all over the board.


Although these residues have minor effects on the lecture itself, they do affect the eLecture, as they form a kind of noise (false noise). On the other hand, due to the dark color of the board, brightness variations and true noise have less negative effect than on a white board. Note that this paper does not try to dig deep into edge detection or noise filtration algorithms to select the best available in the literature or to modify them, as these belong to the field of image processing. Rather, it focuses on notes extraction as a concept without the use of e-boards, and on introducing the notes into eLectures, as the next section shows.

5 Taking part in eLectures

Once the F frames are generated by the NEA, they are packaged with the other components, namely video, audio, and eMaterials (electronic materials containing dynamic content such as animations), to output an eLecture, as illustrated in Fig. 1. A common, widespread format for eMaterials is PowerPoint slides, although authors may use other dedicated standard tools for their lectures. Some work has been done in the literature to generate eLectures that can be published and viewed online, such as AOF [4]. Two issues may be considered challenges for such work: the first is to have an authoring tool whose users need no special training, and the second is to have the output in a format known to popular player applications. Classroom 2000 [1] succeeded in overcoming many of these challenges. The project began in July 1995, and its first experiment took place in January 1996 at Georgia Tech. Since then, variations of the initial prototype have been installed at various places. In Classroom 2000, the entire lecture experience is turned into a multimedia authoring session. However, it requires the instructor to use an upright electronic whiteboard system connected to their Zen* system. The output of the system can be viewed through normal Internet browsers.

Fig. 11 A snapshot and its M frame

Fig. 10 M frame of from the

In our previous work [3], an authoring tool based on a Screen Capturing Algorithm (SCA) was presented. The algorithm captures screenshots of the computer and intelligently chooses the appropriate key frames. These frames are meant to capture significant animations, slide transitions, and soft notes inserted by the instructor during his/her presentation or lecture. The tool then combines these key frames with the video and audio of the presenter or instructor into a SMIL file. SMIL, the Synchronized Multimedia Integration Language [8], a W3C Recommendation, was our choice of language to package an eLecture. SMIL allows a set of independent multimedia objects to be integrated into a synchronized multimedia presentation using defined regions. Combining the SCA (which captures soft notes added through the presentation application) with the proposed NEA (which focuses on extracting only the hard notes written on the board by the instructor) results in a new bundled authoring tool. The tool was implemented in C# under .NET Framework 2.0. It used the Microsoft DirectX AudioVideoPlayback and Capture libraries and DirectShow.NET to capture the video and to offer a set of codecs to users. It also used RealPlayer 11 Gold to compress the captured video into the "rm" format. Finally, it was built on top of both the screen capturing and the notes extraction algorithms. Images were saved in JPEG format and video in "rm" format. Figure 13 shows a snapshot of the main screen of the tool in action. Note that when capturing the screen, the tool does not capture itself, even if its main screen is on top of the slides being displayed.

There are two possible scenarios of lectures that would be suitable to use this bundled tool to generate eLectures. These scenarios are as follows:

a) The instructor uses slides and writes additional notes on a side whiteboard.

b) The instructor has no electronic slides and uses only a whiteboard to write the notes.

The layout of the generated eLecture depends mainly on the scenario of the lecture. It is composed of either three active regions or just two, as illustrated in Fig. 14.

Figure 14 shows an eLecture being played by the RealPlayer™ application, a RealNetworks™ product. Students can stream and view eLectures through their normal Internet browsers, since the RealPlayer™ add-on component used by browsers is also publicly available.

Although the proposed authoring tool gives the user options to connect the appropriate camera to the appropriate algorithm, whether SCA or NEA, and to set the dimensions and locations of the various regions, two predefined layouts are offered for simplicity. In both layouts, the video region is set to 160 × 120 pixels, while the notes images are set to 240 × 180 pixels in scenario (a) and 960 × 720 pixels in scenario (b).
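To make the packaging step concrete, the following sketch writes a two-region SMIL document for scenario (b), using the region sizes given above (160 × 120 for the video, 960 × 720 for the notes). It is not the tool's actual output: the side-by-side placement, the 1120 × 720 root layout, the file names, and the per-image durations are illustrative assumptions, and only core SMIL 1.0 elements (`root-layout`, `region`, `par`, `seq`, `video`, `img`) are used. `build_smil` is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def build_smil(video_src: str, notes: List[Tuple[str, int]]) -> str:
    """Scenario (b): a video region beside a notes region; the F frames play
    in sequence while the video plays in parallel."""
    smil = ET.Element("smil")
    layout = ET.SubElement(ET.SubElement(smil, "head"), "layout")
    ET.SubElement(layout, "root-layout", width="1120", height="720")
    ET.SubElement(layout, "region", id="video",
                  left="0", top="0", width="160", height="120")
    ET.SubElement(layout, "region", id="notes",
                  left="160", top="0", width="960", height="720")

    par = ET.SubElement(ET.SubElement(smil, "body"), "par")
    ET.SubElement(par, "video", src=video_src, region="video")
    seq = ET.SubElement(par, "seq")
    for src, seconds in notes:   # each F frame stays on screen for `seconds`
        ET.SubElement(seq, "img", src=src, region="notes", dur=f"{seconds}s")
    return ET.tostring(smil, encoding="unicode")


# Hypothetical file names: one compressed video and two extracted F frames.
doc = build_smil("lecture.rm", [("F1.jpg", 30), ("F2.jpg", 45)])
```

The `par`/`seq` nesting mirrors the eLecture structure: video and notes run in parallel, while the note images replace one another in time order. Scenario (a) would add a third region for the SCA slide images.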

Note that in scenario (a), the video region may show the face of the instructor while he/she explains the slides shown on the projector connected to his/her notebook or PC. On the other hand, it may appear blank while the instructor is writing additional notes (hard notes) on a side board away from the camera assigned to the video region. One advantage of this eLecture format is that it is difficult for students to download the complete content, because the content is distributed over multiple files referenced by one SMIL file. This may prevent the free distribution of eLectures, unless, of course, the instructors explicitly offer them as zipped files.

6 Conclusion

This paper proposed a Notes Extraction Algorithm that could replace e-boards, especially when they are not available, to capture the notes added or written by instructors on the board during their lectures. Unlike many e-boards on the market, this system is easy to set up and easy to carry. Besides, it is very cheap compared with most e-boards. We do not claim that the results presented here are better than those captured by e-boards (we did not compare the same scenarios, but we expect 100% accuracy from e-boards). However, if cost, portability, ease of installation, and applicability to both white and black boards are included in the comparison, the proposed system can be considered acceptable, according to the comments of 10 different instructors who tested the system.

Fig. 13 Bundled authoring tool

With this portable software system running on a PC or notebook with a camera, once integrated with an AOF system like that in [3], as presented in this paper, the instructor will be able to generate eLectures from his/her presentation. The paper presented various lecture scenarios to be packaged by the proposed bundled authoring tool. The generated eLectures include video, audio, animations, and notes, and use a standard format, namely SMIL, which can be viewed with popular, publicly available players.

Fig. 14 (a, b) Region labels: Video Region, close-up view from Camera 1; Video Region, wide view from Camera 1; Image Region, images extracted from the SCA representing slides; Image Region, images extracted from Camera 2 representing notes; Image Region, images extracted from the same Camera 1 representing notes

References

1. Abowd GD (1999) Classroom 2000: An experiment with the instrumentation of a living educational environment. IBM Syst J 38(4):508–530

2. Cherri AK, Karim MA (1989) Optical symbolic substitution: edge detection using Prewitt, Sobel, and Roberts operators. Appl Opt 28:4644

3. Hirzallah N (2007) An authoring tool for as-in-class E-Lectures in E-Learning systems. Am J Appl Sci 4(9):686–692, ISSN 1546-9239

4. Hürst W, Mueller R, Ottmann T (2004) The AOF method for production, use, and management of instructional media. In: Proceedings of ICCE 2004, International Conference on Computers in Education, Melbourne, Australia

5. Marr D, Hildreth E (1980) Theory of edge detection. Proc R Soc Lond 207:187–217

6. Ottmann Th, Müller R (2000) The "Authoring on the Fly" system for automated recording and replay of (Tele)presentations. ACM/Springer Multimedia Systems Journal, Special Issue on "Multimedia Authoring and Presentation Techniques", 8(3)

7. Ziewer P, Seidl H (2002) Transparent Teleteaching. University of Trier

8. Synchronized Multimedia Integration Language (SMIL) 1.0 Specification, W3C Recommendation, 15-June-1998 (http://www.w3.org/TR/REC-smil/)

Dr. Nael Hirzallah received his Master's and PhD degrees from the University of Ottawa, Canada. He

Wafi Albalawi received his Bachelor of Science degree in Computer Science from King Abdul-Aziz University, Saudi Arabia, in 1995. He then joined Tabuk Teachers' College as a Teacher Assistant. In 1997, Albalawi was awarded a scholarship from the government of Saudi Arabia to complete his graduate studies. In 1999, he completed his Master of Science in Computer Science at Missouri University of Science and Technology, and in 2004 he completed his Ph.D. in Computer Science at West Virginia University, USA. Dr. Albalawi was appointed assistant professor in the Computer Science Department at the University of Tabuk, KSA, in 2005. He was later promoted to chair of the department and then served as dean of Tabuk Teachers' College for 2 years. In 2008, Dr. Albalawi joined Fahad Bin Sultan University as the Dean of the College of Computing. His research interests include biometrics, e-learning, e-commerce, databases, and computer security.

Ahmad Kayed received his Ph.D. from the University of Queensland, Australia (2003), and Master's degree