7/8/2015 JNW - Journal of Networks

http://www.academypublisher.com/jnw/ 1/2

Journal of Networks

(JNW, ISSN 1796-2056)

Editor-in-Chief

Prof. Dr. Niki Pissinou

Telecommunications & Information Technology Institute, College of Engineering and Computing, Florida International University, Miami, FL, USA

Associate Editor-in-Chief

Dr. Kami Makki

Department of Computer Science, Lamar University, USA

Prof. Dr. Sirisha Medidi

Department of Computer Science, Boise State University, USA

Area of Interests

· Network Technologies, Services and Applications, · Network Operations and Management

· Network Architecture and Design · Next Generation Networks

· Communication Protocols and Theory · Signal Processing for Communications · Formal Methods in Communication Protocols · Multimedia Communications

· Information, Communications and Network Security · Communications QoS, Reliability and Performance Modeling

· Network Access, Error Recovery, Routing, Congestion, and Flow Control · BAN, PAN, LAN, MAN, WAN, Internet, Network Interconnections · Broadband and Very High Rate Networks,

· Data Networks and Telephone Networks, · Wireless Communications & Networking · Bluetooth, IrDA, RFID, WLAN, WMAX, 3G · Wireless Ad Hoc and Sensor Networks · Next Generation Mobile Networks · Optical Systems and Networks

Journal of Networks (JNW, ISSN 1796-2056) is a scholarly peer-reviewed international scientific journal published monthly, focusing on theories, methods, and applications in networks. It provides a high-profile, leading-edge forum for academic researchers, industrial professionals, engineers, consultants, managers, educators and policy makers working in the field to contribute and disseminate innovative new work on networks.

JNW reflects the multidisciplinary nature of communications networks. It is committed to the timely publication of high-quality papers that advance the state of the art and practical applications of communication networks. Theoretical research contributions (presenting new techniques, concepts, or analyses), applied contributions (reporting on experiences and experiments with actual systems), and tutorial expositions of permanent reference value are all published.

Email questions or comments to JNW Editorial Office.



Copyright @ 2006–2013 ACADEMY PUBLISHER — All Rights Reserved.

· Satellite and Space Communications

Abstracting and Indexing

Academic Journals Database; Academy Search; BASE; BibSonomy; Cabell Computer Science/Business Information Systems; CAID – Computer Abstracts International Database; CNKI; CrossRef; CSA; DBLP; Directory of Open Access Journals (DOAJ) Computer Science; DOI; EBSCO; EI Compendex (On Hold); Engineering Village 2; EZB; GALE; Genamics JournalSeek; GetCITED; Gold Rush; Google Scholar; INSPEC; io port.net; iThenticate; J4F; JAL; JournalTOCs; NewJour; OAIPMH Registered Data Providers; OAJSE; OAKList; OCLC; Open JGate Engineering & Technology (JET); Ovid LinkSolver; PASCAL; PKP Open Archives Harvester; ProQuest; PubZone; QCAT; ResearchBib; SCImago; Scirus; SCOPUS; SHERPA/RoMEO; Socolar; The Index of Information Systems Journals; Trove; True Serials; Udini; UIUC OAIPMH Data Provider Registry; ULRICH's Periodicals Directory; WorldCat; WorldWideScience; ZDB

Subscription Information

The Journal of Networks (JNW, ISSN 1796-2056) has been published as one volume of 12 issues per year since 2006. Subscriptions may be entered at any time for a volume at the following rates (overland mail):

Institutional Subscription: EUR 1200 Personal Subscription: EUR 960 Price for Single Copy: EUR 130

Find more information at the Subscription page.

7/8/2015 Editorial Board - Journal of Networks

http://www.academypublisher.com/jnw/editorialboard.html 1/2

Editorial Board

We are seeking professionals to join our Editorial Board. If you are interested in becoming an Editor, please send your CV and a one-page summary of your specific expertise and interests to the following email address. We will review your information and, if appropriate, place you on our Editorial Board.

Please send your application to: [email protected].

Editor-in-Chief

Dr. Niki Pissinou

Florida International University, USA

Associate Editor-in-Chief

Dr. Kami Makki Lamar University, USA

Dr. Sirisha Medidi Boise State University, USA

Editors

Dr. Marcelo Sampaio de Alencar ([email protected]) Federal University of Campina Grande, Brazil

Dr. Abderrahim Benslimane (abderrahim.benslimane@univavignon.fr) University of Avignon, France

Dr. Saad Biaz ([email protected]) Auburn University, USA

Dr. Azzedine Boukerche ([email protected]) University of Ottawa, Canada

Dr. Deng Pan ([email protected]) Florida International University, USA

Ms. Celia Desmond ([email protected]) World Class Telecommunications, Canada

Dr. Christos Douligeris ([email protected]) University of Piraeus, Greece

Dr. Hossam Hassanein ([email protected]) Queens University, Canada

Dr. Jun He ([email protected]) University of Arizona, USA

Dr. Mary Ann Ingram ([email protected]) Georgia Tech, USA

Dr. Sanjay Jasola ([email protected]) Gautam Buddha University, India

Dr. Xiaohua Jia ([email protected]) City University of Hong Kong, Hong Kong

Dr. Masoumeh Karimi ([email protected]) Florida International University, USA

Dr. TaiHoon Kim ([email protected]) Hannam University, Korea

Dr. Cheng Li ([email protected])


Memorial University of Newfoundland, Canada

Dr. Chunming Liu ([email protected]) T-Mobile, USA

Dr. Luigi Logrippo ([email protected]) University of Quebec in the Outaouais, Canada

Dr. Alan Marshall ([email protected]) Queens University Belfast, Northern Ireland, UK

Dr. Fabio Martignon ([email protected]) University of Bergamo, Italy

Dr. George Mastorakis ([email protected]) Technological Educational Institute of Crete, Greece

Dr. Natarajan Meghanathan ([email protected]) Jackson State University, USA

Dr. Edmundo Monteiro ([email protected]) University of Coimbra, Portugal

Dr. Fabrice Theoleyre ([email protected]) CNRS, France

Dr. George S. Tombras ([email protected]) National and Kapodistrian University of Athens, Greece

Dr. Vijay Varadharajan ([email protected]) Macquarie University, Australia

Dr. Mohamad Watfa ([email protected]) American University of Beirut, Lebanon

Dr. Lei Zhang ([email protected]) Frostburg State University, USA

Dr. Qian Zhang ([email protected])

Hong Kong University of Science and Technology, Hong Kong

Dr. Chi Zhou ([email protected]) Illinois Institute of Technology, USA

Dr. Zuqing Zhu ([email protected])

University of Science and Technology of China, China

Journal of Networks

ISSN 1796-2056

Volume 10, Number 6, June 2015

Contents

REGULAR PAPERS

Performances of OpenFlow-Based Software-Defined Networks: An overview

Fouad Benamrane, Mouad Ben mamoun, and Redouane Benaini

Downlink Channel Allocation Scheme deploying Cooperative Channel Monitoring for Cognitive Cellular-Femtocell Networks

Van-Toan Nguyen, Kien Duc Nguyen, Hoang Nam Nguyen, and Keattisak Sripimanwat

Study on Auto Detecting Defence Mechanisms against Application Layer DDoS Attacks in SIP Server

Muhammad Morshed Alam, Muhammad Yeasir Arafat, and Feroz Ahmed

Specification and Validation of Enhanced Mobile Agent-Enabled Anomaly Detection and Verification in Resource Constrained Networks

Muhammad Usman, Vallipuram Muthukkumarasamy, and Xin-Wen Wu

A Lightweight Authentication Protocol Based on Partial Identifier for EPCglobal Class-1 Gen-2 Tags

Zhicai Shi, Fei Wu, Yongxiang Xia, Yihan Wang, Jian Dai, and Changzhi Wang

A Multi-Constrained Routing Algorithm for Software Defined Network Based on Nonlinear Annealing

Lijie Sheng, Zhikun Song, and Jianhua Yang

Energy Efficiency of Image Compression for Virtual View Image over Wireless Visual Sensor Network

Nyoman Putra Sastra, Wirawan, and Gamantyo Hendrantoro


Energy Efficiency of Image Compression for Virtual View Image over Wireless Visual Sensor Network

Nyoman Putra Sastra (a, b), Wirawan (a), and Gamantyo Hendrantoro (a)

(a) Department of Electrical Engineering, Institut Teknologi Sepuluh Nopember, Surabaya, 60111, Indonesia

Email: {wirawan, gamantyo}@ee.its.ac.id

(b) Department of Electrical Engineering, Universitas Udayana, Bali, 80236, Indonesia

Email: [email protected]

Abstract— Many applications use a virtual view to see objects or scenery in directions that no visual sensor faces directly. Generating virtual view images indirectly also reduces the number of visual sensor nodes needed in a network. In addition, visual sensors can provide more information related to the virtual view images. However, generating virtual view images using a Wireless Visual Sensor Network is a challenging task because of limited energy, computation, and bandwidth. This paper therefore proposes combining a camera selection method with parameter optimization of JPEG 2000 image compression and an image transmission strategy based on a mutual information criterion. The camera selection method, based on the smallest disparity value, is adopted to reduce the number of images transmitted. In each visual sensor node, JPEG 2000 compression parameters such as the DWT level and bit rate are optimized to reduce the size of the images while preserving image quality in terms of PSNR. By implementing the sensor selection, the image compression, and the mutual information strategy, the study finds that the total energy required to send one compressed image can be reduced to 10.27% of the energy consumption for an uncompressed image. The image is therefore also delivered faster.

Index Terms— Wireless Sensor Network, virtual view images, image transmission, image compression, Linux embedded

I. INTRODUCTION

A Wireless Sensor Network (WSN) is a wireless communication system with limited resources, especially energy, computation, and bandwidth [1], [2]. The use of a WSN platform for visual data transmission, such as image and video, is known as a Wireless Visual Sensor Network (WVSN) [3]. A visual sensor, or camera, generates information in the form of images or video to be further processed for various visual applications at a distributed processing unit or a central processing unit. WVSNs are widely used in applications such as environmental observation and surveillance systems, which are designed with limited resources to provide optimal information to users. This limitation of resources, mainly the energy source of the WVSN, creates a challenge in system design because visual data are larger than scalar data.

Visual applications require various angles of view in order to observe an object. Even though visual sensors are distributed in many places, a certain field of view (FoV) may not be available. Moreover, the observation of one particular direction changes over time because of the movement or displacement of objects and weather conditions. To meet user demand for viewing an object from a certain angle, more than one camera is required.

More visual sensors mean better view or scenery reconstruction, but the limitations of energy, computation, and bandwidth become issues to be addressed. Thus, an image compression technique is required to save those resources. A visual sensor selection method is also needed to generate the virtual image with the smallest amount of transmitted data. In a wireless network, the energy required to transmit information is larger than the energy required to process data [3]. To save energy, this paper considers three issues: 1) selecting for transmission only images whose information differs from the predefined image of each visual sensor, 2) applying distributed compression to the captured images, and 3) reducing the number of images, or visual sensors, used as the basis for generating the image of a certain FoV.

The first issue is addressed in Section II-A.4, which discusses the implementation of the mutual information strategy. Sections II-A.1 to II-A.3 address the second issue by optimizing the JPEG 2000 parameters, adapted to Imote2 with its embedded Linux OS. The last issue is addressed in Section II-C and Section II-D, which discuss the technique for selecting the visual sensors, or cameras, that provide maximal image information; in other words, from all available visual sensors, only a few are selected to provide maximal information.

A visual sensor selection method is required to reduce the number of visual sensors. Many visual sensor selection methods have been developed. In [4]–[6], cameras communicate to find the number of active cameras needed to cover the desired space in a scene, using both distributed and centralized algorithms. These three studies require three processes on the sensor nodes, and these processes take extended time, which leads to significant energy consumption. None of them aims to directly reconstruct a virtual image on a WVSN platform.

JOURNAL OF NETWORKS, VOL. 10, NO. 6, JUNE 2015 385

In [7] and [8], multiview images are built from overlapping images captured by a group of visual sensors in a WVSN environment in order to broaden the view. In [7], visual sensor selection is based on spatial correlation and entropy between images. The implementation of image joining in a WVSN or WMSN in [8] mainly aims to reduce resource utilization, especially memory usage, by discarding less important information and transmitting only the portion of a visual sensor's image that overlaps with images from other visual sensors. That work does not report energy consumption, nor does it specifically address the selection of visual sensors to produce the image of a particular FoV. Ref. [9] generates replicas of football play scenes. In this method, the image is constructed from two or three visual sensors located near the FoV, without explicitly describing the criteria for visual sensor selection.

In our previous research [10], we developed a method of image construction in a WVSN. This method successfully generated a virtual image, with low PSNR compared to the image captured by a visual sensor in the FoV. That research did not implement image compression after capture by the visual sensor. As a result, the image file was large, so that sending one image to central processing required up to 10 minutes, at the cost of high energy consumption. Additionally, that research made no effort to reduce data transmission, and it did not implement the mutual information criterion for selecting the images to be sent.

Based on the above studies, the main contribution of this research is a solution for energy saving through distributed processing. The distributed processing consists of image compression and the selection of images whose information differs from the information already stored in each visual sensor node. The energy used by each process is measured. The quality of the virtual view image is improved and compared to the result in [10]. A WVSN platform with an XScale PXA271 processor, an OV7670 SoC visual sensor, and a sensor board running an embedded Linux OS [11]–[13] is used in this research. This paper is organized as follows. Section II describes the proposed scheme and system design. Section III presents testing, measurement, and analysis of the system. Finally, Section IV concludes the research.

II. THE PROPOSED SCHEME

Multiple visual sensors are used in a WVSN to provide multiview and multiresolution services, and also for environment surveillance. When resources are available, an image can be constructed by sending all images captured by the visual sensors. In a WVSN application, the visual sensor node processes the image or video before transmission.

Virtual view image is image on peculiar FoV where no camera is directly available to serve users. Many applications have taken advantages of the virtual view

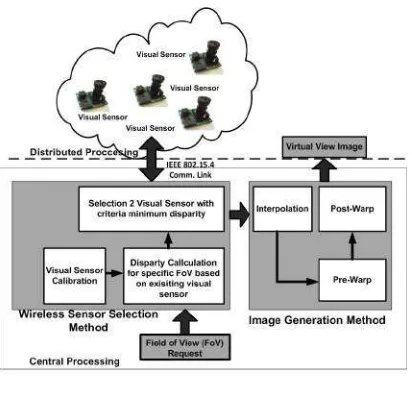

Figure 1. System Schema.

image, for examples, (1) object recognition as a result of captured object from different point of view, (2) view widen of specific scenery, and (3) virtual reality such as to see player and ball position in foot ball game.

A WVSN inherently has limited resources; as a result, a method is required to save resources by sending only images selected according to defined criteria. Fig. 1 shows the platform for generating a virtual view image in a WVSN. Initially, the system is set up and the sensor node positions are calibrated. Each sensor node sends its captured image to the central processor as a reference image. When an image is requested for a given FoV, the system calculates and calibrates the requested FoV against all images in the central processor. A sensor node is then selected to send the image that serves as the foundation of the virtual view image. Next, the sensor node captures and compresses new images. The new images are compared to the reference image, and mutual information is used as the basis for deciding whether to send the image. Finally, the image is used as the foundation for building the virtual view image.

A. Image Processing in Visual Sensor Node

1) Wireless Sensor Network Platform: In this research we use Imote2 as the WSN platform. Imote2 consists of a radio board and a visual sensor board. The radio board uses an Intel XScale PXA271 processor with 32 MB SDRAM, 32 MB flash, I/O ports (GPIO, 2x SPI, 3x UART, I2C, SDIO, USB host, USB client), and integrated IEEE 802.15.4 wireless communication (CC2420). The visual sensor board provides a miniature speaker and line output, a color image and video camera chip (OmniVision OV7670), an audio capture and playback CODEC (WM890), an onboard microphone and line input, and a PIR (Passive Infrared) motion sensor. Table I lists the Imote2 energy consumption parameters.

TABLE I. PARAMETERS OF IMOTE2.

| Parameter | Value |
|---|---|
| Initial Energy | 200 J |
| Active Camera (power draw) | 40 mW |
| PXA Processor Active | 192.3 mW |
| Image Capture (including Sensing) | 0.04 J |
| Radio Active | 46.39 µJ/bit |
| Read from SD Flash | 5.32 nJ/bit |
| Write in SD Flash | 7.65 nJ/bit |
| Image Dimension | 640 x 480 pixels |
| Image Size | 900 KB |

2) Embedded Linux Operating System: Embedded Linux has been successfully deployed on the Imote2 board [12], [13]. With this embedded Linux system, development of applications for scalar and multimedia data becomes easier and more dynamic. The advantage of the embedded Linux OS is its ability to replace the TinyOS bootloader, with support for image sizes up to 28 MB and independent configuration and data storage. In addition, the communication model is technology independent (programming via wireless may be possible with the same communication protocol), and Linux can be modified flexibly on a working or running system.

The main steps of the embedded Linux implementation are installing, or uploading, the bootloader, kernel, and file system. The bootloader, or boot loader object (Blob), performs the basic system start-up and initializes system components, i.e., system memory initialization, system clock management, and GPIO initialization. When Blob finishes system initialization, the system automatically runs the Linux kernel. The kernel is generally read from the file system, known as JFFS2, which is itself activated by the bootloader. The embedded Linux system installed in flash memory places the file system at the end of memory. The implementation steps are listed in Algorithm 1.

Algorithm 1. Installation steps of the embedded Linux implementation

1: Linux Embedded Implementation
   a. Compiling Linux Kernel for JSVN Implementation
   b. Flashing Bootloader, Linux Kernel, and File system to JSN Platform

2: Visual Sensor Implementation
   c. Modified Visual Sensor for Linux Implementation
   d. Cross compiling and upload Linux OV7670 driver
   e. Cross compiling and upload binary coding to capture image

3: JPEG 2000 Compression Implementation in JSN
   f. OpenJPEG Library modification
   g. OpenJPEG Cross compiling for XScale Processor
   h. Upload binary code from Linux Host to JSN with Embedded Linux

3) Image Compression on Visual Sensor: The DWT processes of the JPEG 2000 compression method are performed after preprocessing, which includes tiling, level offset, and the Irreversible Colour Transform (ICT) [14]. Tiling divides the source image into non-overlapping square tiles of uniform size, except for tiles on image boundaries. Each tile is compressed independently using its own parameters. The aim of tiling is to reduce memory utilization.

TABLE II. PARAMETERS OF JPEG 2000.

| Parameter | Value |
|---|---|
| Image Dimension | 640 x 480 x 3 |
| DWT Level | 3 |
| Compression | Lossless |
| Bit Rate | 0.01 |
| Size of Block code (bc) | 64 x 64 |
| Tile size | 640 x 480 (1 tile) |

The preprocessed source image becomes the input to the wavelet transform blocks. The DWT is a two-dimensional filter that is separable along its columns and rows. The filter works by applying a low-pass filter (L) and a high-pass filter (H) to each sample, row by row and column by column. The output is then fed to the same filter again. As a result, the image is divided into sub-bands: low-low (LL1), low-high (LH1), high-low (HL1), and high-high (HH1) [15]. The high-pass sub-bands hold the residue of the original image, which is required for perfect reconstruction of the image from its low-resolution version. The DWT level can be raised to create a level-two transformation with sub-bands LL2, LH2, HL2, HH2, and so on.

JPEG 2000 is implemented in this research using the OpenJPEG library [16] on the WSN platform. The JPEG 2000 parameters are tuned for system optimization, that is, reducing energy consumption while maintaining image quality. From our preliminary experiments on the system installed following the steps of the embedded Linux implementation, the JPEG 2000 parameters used in this implementation are those listed in Table II. These parameters give low energy consumption with adequate PSNR.

4) Mutual Information to Determine Image Transmission: The joint histogram of two images can be used to estimate the joint probability distribution of their grey values by dividing each histogram entry by the total count. The Shannon entropy of the joint distribution is defined as:

$$H = -\sum_{i,j} p(i,j)\,\log_2 p(i,j) \tag{1}$$

The joint entropy of two images indicates their degree of correlation. In image registration, low joint entropy means that both images are similar. In contrast to image registration, a large joint entropy is preferable for camera selection because it provides more information.

Another way to assess the information contained in two or more images is to compute mutual information. Mutual information is one of several quantities that state how much information one random variable provides about another. The mutual information of two images, I and J, is defined as:

$$M(i,j) = H(i) + H(j) - H(i,j) \tag{2}$$

WhereH(i, j)is joint entropy of two imagesIandJ. The equation shows that low joint entropy means high mutual information. In other words, whenever mutual information

JOURNAL OF NETWORKS, VOL. 10, NO. 6, JUNE 2015 387

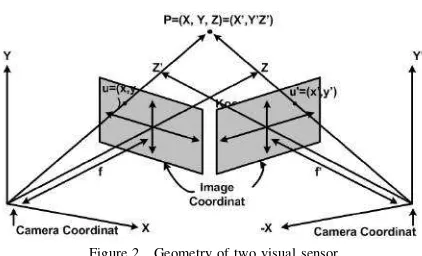

Figure 2. Geometry of two visual sensor.

of two images taken from visual sensors tends to be similar, there is no information is required to be sent from sensor node to central processing. The sensor only sends image, after being compressed, when there is a significant change in mutual information value.
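The entropy and mutual information in (1) and (2) can be sketched with joint histograms as follows. This is a minimal illustration, not the implementation used on the sensor node: the flat-list image layout, the function names, and the `threshold` value in `should_transmit` are assumptions introduced here.

```python
import math
from collections import Counter


def entropy(counts, total):
    """Shannon entropy in bits of a histogram given as value -> count."""
    return -sum((c / total) * math.log2(c / total)
                for c in counts.values() if c > 0)


def mutual_information(img_i, img_j):
    """M = H(i) + H(j) - H(i, j) for two equally sized grey-level images.

    Each image is a flat sequence of grey values (illustrative layout).
    """
    if len(img_i) != len(img_j):
        raise ValueError("images must have the same number of pixels")
    total = len(img_i)
    joint = Counter(zip(img_i, img_j))  # joint histogram of grey-value pairs
    h_i = entropy(Counter(img_i), total)
    h_j = entropy(Counter(img_j), total)
    h_ij = entropy(joint, total)
    return h_i + h_j - h_ij


def should_transmit(reference, current, previous_mi, threshold=0.1):
    """Hypothetical rule: send only if mutual information changed noticeably.

    `threshold` (in bits) is an assumed tuning value, not taken from the paper.
    """
    mi = mutual_information(reference, current)
    return abs(mi - previous_mi) > threshold, mi
```

A node could call `should_transmit(reference, new_image, last_mi)` after each capture and skip the radio entirely when the first returned value is false.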

B. Visual Sensor Selection

Disparity is the difference in depth of a point P in the same scenery seen from different fields of view, as shown in Fig. 2. Disparity changes with the rotation and translation of one field of view relative to another [17]. To simplify the implementation, the virtual view movement in this study is limited to horizontal rotation, to the left and right of point P.

Two visual sensors are used to generate images of the same scenery, as in Fig. 2. According to [17], a point with coordinates (X, Y, Z) seen by the first camera C with focal length f has the projection:

$$x = f\,\frac{X}{Z}, \qquad y = f\,\frac{Y}{Z} \tag{3}$$

The projection of that point on the second camera C' is given by:

$$x' = f\,\frac{X'}{Z'}, \qquad y' = f\,\frac{Y'}{Z'} \tag{4}$$

The coordinate system of C' can be expressed as the coordinate system of C rotated by R and then translated by T = [tx ty tz].

In this system model, there is no rotation around the X and Z axes (rotation in the horizontal direction only). The rotation equation becomes [15]:

The position of point P on the image plane produced by visual sensor C can be located on the image plane produced by visual sensor C' by substituting the coordinate equation between visual sensor C and visual sensor C' into the projection equation:

Whenever the FoV of visual sensor C' is the field of view of the desired virtual view, this two-sensor geometry model can be used to obtain the scene for the virtual view image.
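The two-camera geometry described above can be illustrated with a short sketch: a point is projected by a pinhole model, moved into the second camera's frame by a rotation about the vertical (Y) axis followed by a translation, and projected again. The function names, the capitalized point coordinates, and in particular the sign convention of the rotation by β are assumptions made for illustration; the convention in [15] may differ.

```python
import math


def project(point, f):
    """Pinhole projection of a 3-D point (X, Y, Z) onto the image plane."""
    X, Y, Z = point
    return (f * X / Z, f * Y / Z)


def to_second_camera(point, beta, t):
    """Express a point given in camera C in the frame of camera C'.

    Rotation by `beta` (radians) about the Y axis only, then translation
    by t = (tx, ty, tz). The rotation sign convention is an assumption.
    """
    X, Y, Z = point
    tx, ty, tz = t
    Xr = math.cos(beta) * X + math.sin(beta) * Z
    Zr = -math.sin(beta) * X + math.cos(beta) * Z
    return (Xr + tx, Y + ty, Zr + tz)


def reproject(point, f, beta, t):
    """Image-plane position of `point` as seen by camera C'."""
    return project(to_second_camera(point, beta, t), f)
```

With `beta = 0` and a zero translation, `reproject` returns the same image coordinates as `project`, which is a quick sanity check for any chosen convention.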

The disparity value can be obtained from a reference point in the geometry of the two visual sensors. For visual sensor i and visual sensor j in Fig. 2, the disparity, δ, between the two images at camera i and camera j with position parameters (δi, ri, θi) and (δj, rj, θj) is calculated as [6], [18]:

The first visual sensor is selected by searching for the smallest rotation angle β that gives the minimum disparity between visual sensor C and the desired FoV. The second visual sensor is selected as the one with the smallest disparity in the direction opposite to β, as seen from the virtual view. For example, if the first visual sensor is on the right side of the virtual view, the second visual sensor is on the left side. From these two points, the FoV disparity seen from both visual sensor positions is defined by the equation below:

where δi and δj are the disparities of the two selected visual sensors with respect to the desired direction of view.
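The selection rule described above (smallest disparity first, then the smallest disparity on the opposite side of the virtual view) can be sketched as follows. The tuple layout `(sensor_id, beta, disparity)`, the sign convention (negative β for left, positive for right), and the tie-breaking by smaller rotation angle are assumptions introduced for this illustration; the disparities are taken as already computed.

```python
def select_sensors(candidates):
    """Pick two sensors on opposite sides of the desired view.

    candidates: list of (sensor_id, beta, disparity), where beta is the
    rotation angle relative to the desired view (sign gives the side) and
    disparity is a precomputed non-negative value.
    Returns (first_id, second_id); second_id is None if no sensor lies on
    the opposite side.
    """
    if not candidates:
        raise ValueError("no candidate sensors")

    def rank(c):
        # Smallest disparity wins; smaller rotation angle breaks ties.
        return (c[2], abs(c[1]))

    first = min(candidates, key=rank)
    opposite = [c for c in candidates if (c[1] > 0) != (first[1] > 0)]
    if not opposite:
        return first[0], None
    second = min(opposite, key=rank)
    return first[0], second[0]
```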

C. Virtual View Generation

There are three stages in generating a virtual view image, namely interpolation, pre-warp, and post-warp. Interpolation generates an intermediate image using the pixel correspondence between the two images from the two selected sensors. Corresponding pixel pairs are searched along the epipolar lines of both images to speed up computation. The epipolar line correspondence is:

$$\begin{cases} l = F^{T} p \\ l' = F\,p' \end{cases} \tag{11}$$

where l and l' are a pair of epipolar lines and F is the fundamental matrix [10], which satisfies:

$$p^{T} F\,p' = 0 \tag{12}$$

where p and p' are a pair of corresponding two-dimensional points from the two images. F is a fundamental matrix with redundant values. The parameter F can be solved by the least squares method, by minimizing the value of the equation below:

The interpolation process is adapted to the viewpoint disparity of the two selected visual sensors. The new image points are found by interpolation as defined below:

pv=d!1p+d!1p! (14)

The post-warp process estimates the epipolar line coordinates on the virtual view and warps the interpolated image by placing those lines at the correct positions on the virtual view. The virtual view is then produced.

D. Energy Consumption

Since the energy source of a WVSN is solely a battery with limited power, energy consumption becomes a critical criterion in implementation [19]. This means that the main priority of any model or implementation method of a virtual view image generator is low energy consumption. In general, three tasks affect the energy consumption of a WVSN: 1) image capture, 2) image processing, and 3) image transmission. These three energy consumptions are formulated as:

$$E_{WVSN} = E_{capt} + E_{proc} + E_{transmit} \tag{15}$$

where $E_{capt}$ is the image capture energy, $E_{proc}$ is the image processing energy, and $E_{transmit}$ is the image transmission energy. Furthermore, energy consumption can be computed manually by measuring the voltage (V), current (I), and time of the whole process, i.e., image capture time ($t_{capt}$), image processing time ($t_{proc}$), and image transmission time ($t_{transmit}$), with or without any compression method, as:

$$E = V \times I \times (t_{capt} + t_{proc} + t_{transmit}) \tag{16}$$

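The energy consumption of JPEG 2000 image compression can be estimated from the DWT processes. In this research, the OpenJPEG library is used as the JPEG 2000 implementation, with the Le Gall 5/3 filter model [15], [20]. The equations of the filter are:

$$H_0(z) = \tfrac{1}{8}\left(-z^{-2} + 2z^{-1} + 6 + 2z - z^{2}\right) \tag{17}$$

$$G_0(z) = \tfrac{1}{2}\left(-z^{-1} + 2 - z\right) \tag{18}$$

where $H_0(z)$ defines the low-frequency sub-band of the DWT filter and $G_0(z)$ defines the high-frequency sub-band of the DWT filter. The impulse responses of the filter are:

$$L[2n] = \tfrac{1}{8}\left(-x[2n-2] + 2x[2n-1] + 6x[2n] + 2x[2n+1] - x[2n+2]\right) \tag{19}$$

$$H[2n+1] = \tfrac{1}{2}\left(-x[2n] + 2x[2n+1] - x[2n+2]\right) \tag{20}$$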
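As an illustration of the filter pair named above, the following sketch applies one level of 1-D analysis using the standard Le Gall 5/3 taps, (-1, 2, 6, 2, -1)/8 for the low-pass branch at even positions and (-1, 2, -1)/2 for the high-pass branch at odd positions. The function name and the whole-sample symmetric border extension are assumptions; OpenJPEG's own implementation uses an integer lifting scheme and is not reproduced here.

```python
def legall53_analysis(x):
    """One-level 1-D analysis with the Le Gall 5/3 filter pair.

    Returns (low, high): low-pass outputs at even positions and
    high-pass outputs at odd positions of the input sequence `x`.
    """
    n = len(x)
    if n < 2:
        raise ValueError("need at least two samples")

    def at(k):
        # Whole-sample symmetric extension (assumed border handling).
        if k < 0:
            k = -k
        if k >= n:
            k = 2 * (n - 1) - k
        return x[k]

    low = [(-at(k - 2) + 2 * at(k - 1) + 6 * at(k)
            + 2 * at(k + 1) - at(k + 2)) / 8
           for k in range(0, n, 2)]
    high = [(-at(k - 1) + 2 * at(k) - at(k + 1)) / 2
            for k in range(1, n, 2)]
    return low, high
```

In a fixed-point implementation the multiplications by 2 and the divisions by 2 and 8 become bit shifts, which is why the cost analysis counts only shift and add operations.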

Analyzing the energy consumption of DWT image compression requires the number and type of basic operations in the process. The basic operations, shifts and adds, can be derived from (19) and (20). In (19), the filter decomposition requires 8 shift and 8 add operations to convert one pixel of an image into a low-pass coefficient, whereas (20) requires 2 shift and 4 add operations to convert one pixel into a high-pass coefficient.

The overall computation of the DWT decomposition of an image is obtained by counting all operations. The computation for one image of size M x N with L DWT levels is estimated step by step as follows. Assume that the first step is horizontal decomposition. Pixels at even positions are decomposed into low-pass coefficients and pixels at odd positions into high-pass coefficients, so the total load of the horizontal decomposition is (1/2) MN(10S + 12A). The vertical decomposition has the same load. The image size is reduced by a factor of 4 at every transformation level. The total computation cost then becomes:

$$C_{DWT} = MN\,(10S + 12A) \sum_{l=1}^{L} \frac{1}{4^{\,l-1}} \tag{21}$$

Apart from arithmetic operations, the transformation steps consume significant energy through memory access. Each pixel is read and written twice, so the memory access for an M x N image is:

$$C_{read} = C_{write} = 2MN \sum_{l=1}^{L} \frac{1}{4^{\,l-1}} \tag{22}$$

The total processing load is computed as the sum of the computational load ($C_{DWT}$) and the memory access load ($C_{read}$).

Furthermore, if $E_{shift}$ denotes the energy consumption of a shift (S) operation, $E_{add}$ the energy consumption of an add (A) operation, $E_{read}$ the energy consumption of a memory read access, and $E_{write}$ the energy consumption of a memory write access, then the overall energy consumption for processing becomes:

$$E_{proc} = E_{DWT} + E_{read} C_{read} + E_{write} C_{write} \tag{24}$$
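The operation counting described above can be turned into a small calculator. The sketch assumes the reading given in the text: each level costs 10 shifts and 12 adds per pixel (horizontal plus vertical pass), each pixel is read twice and written twice per level, and the image shrinks by a factor of 4 per level. The function names and the per-operation energy arguments are placeholders, not measured values from the paper.

```python
def dwt_operation_counts(m, n, levels):
    """Shift, add, read, and write counts for an m x n image.

    Per level, the horizontal and vertical passes together cost
    10 shifts and 12 adds per pixel; the image shrinks by a factor
    of 4 at each further level.
    """
    scale = sum(1 / 4 ** (level - 1) for level in range(1, levels + 1))
    pixels = m * n * scale            # effective pixel count over all levels
    shifts = 10 * pixels
    adds = 12 * pixels
    reads = writes = 2 * pixels       # each pixel read and written twice
    return shifts, adds, reads, writes


def processing_energy(m, n, levels, e_shift, e_add, e_read, e_write):
    """Processing energy as arithmetic cost plus memory access cost.

    The per-operation energies are caller-supplied (hypothetical) values.
    """
    shifts, adds, reads, writes = dwt_operation_counts(m, n, levels)
    e_dwt = shifts * e_shift + adds * e_add
    return e_dwt + reads * e_read + writes * e_write
```

For the 640 x 480 image and DWT level 3 used in this work, `dwt_operation_counts(640, 480, 3)` gives the raw counts, which can then be weighted by per-operation energies measured on the target processor.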


Images are generally transmitted as data packets. If the number of packets is m, the average packet size is t, and the energy required to transmit 1 bit of data is $E_b$, the transmission energy is defined as:

$$E_{transmit} = m \cdot t \cdot E_b \tag{25}$$

The IEEE 802.15.4 communication system on the embedded Linux OS uses the tosmac data packet structure [1], with a payload of 28 bytes per packet. From this model, and substituting (24) and (25) into (15), the energy consumption for transmitting a JPEG 2000 compressed image becomes:

$$E_{WVSN} = E_{capt} + E_{proc} + E_{transmit} \tag{26}$$

According to (26), the capture energy remains fixed for the same devices. The total energy consumption therefore depends on both the processing energy ($E_{proc}$) and the transmission energy ($E_{transmit}$). The transmission energy is affected by the image size in bits.
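A minimal sketch of the packet-based transmission energy in (25) follows, using the 28-byte payload stated above and the "Radio Active" figure from Table I as the per-bit energy. Counting only payload bits (ignoring headers and retransmissions) and padding the last packet to full size are simplifying assumptions, as are the function and constant names.

```python
import math

PAYLOAD_BYTES = 28            # payload per packet, as stated in the text
RADIO_J_PER_BIT = 46.39e-6    # "Radio Active" entry of Table I, in J/bit


def transmit_energy(image_bytes, payload_bytes=PAYLOAD_BYTES,
                    e_bit=RADIO_J_PER_BIT):
    """E_transmit = m * t * E_b, counting payload bits only.

    m is the number of packets needed for the image and t the packet
    payload size in bits; headers and retransmissions are ignored.
    """
    m = math.ceil(image_bytes / payload_bytes)
    t_bits = payload_bytes * 8
    return m * t_bits * e_bit


def total_energy(e_capt, e_proc, e_transmit):
    """Total node energy for one image: capture + processing + transmission."""
    return e_capt + e_proc + e_transmit
```

Because `transmit_energy` grows linearly with the image size, any reduction in compressed size translates directly into a proportional reduction of the transmission term.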

E. Peak Signal to Noise Ratio (PSNR)

Error metrics often used to compare image compression techniques are the Mean Square Error (MSE) and the Peak Signal to Noise Ratio (PSNR). The MSE is the cumulative squared error between the compressed and the original image, whereas PSNR is a measure of the peak error. The mathematical formulas for PSNR and MSE are:

$$PSNR = 10 \log_{10}\!\left(\frac{MAX_I^{2}}{MSE}\right)$$

$$MSE = \frac{1}{mn} \sum_{i=0}^{m-1} \sum_{j=0}^{n-1} \left[I(i,j) - K(i,j)\right]^{2}$$

where I is the matrix of the original image, K is the compressed image, m is the number of pixel rows of the image, i is the row index, n is the number of pixel columns, j is the column index, and $MAX_I$ is the maximum possible pixel value (255 for 8-bit images).
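The two metrics can be computed as in the sketch below, which uses the standard definitions of MSE and PSNR. The list-of-rows image layout and the default peak value of 255 (8-bit images) are assumptions made for the example.

```python
import math


def mse(original, compressed):
    """Mean square error between two images given as lists of pixel rows."""
    rows = len(original)
    cols = len(original[0])
    total = sum((original[i][j] - compressed[i][j]) ** 2
                for i in range(rows) for j in range(cols))
    return total / (rows * cols)


def psnr(original, compressed, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(original, compressed)
    if err == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / err)
```

A higher PSNR means the compressed image is closer to the original, so it can serve as the quality constraint when tuning the DWT level and bit rate.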

F. Procedures

The virtual view image generation procedure, following the flowchart in Fig. 3, is described as follows:

1) A number of visual sensors are installed and deployed in the desired area of coverage. These visual sensors send visual information to the central processing unit. The central processing unit is in charge of collecting information from each visual sensor and of receiving requests from users for a FoV.

2) Calibration to determine the position, direction of view, and visual coverage of each sensor, or of the point of reference relative to the sensor node, is done before any other task, because the direction of each visual sensor is assumed not to change, for energy savings. The disparity between cameras is obtained by determining the direction of view of each sensor. An initial image from each visual sensor is stored both in the sensor node itself and in the central processing unit, to be used in generating the virtual image.

3) The JPEG 2000 image compression method is implemented on all visual sensors to compress the captured image. The purpose of image compression is to save energy in image delivery, as transmitting data requires much more energy than computing on it.

4) After the system recognizes the position of each sensor node relative to the reference point, a request from any user for any desired FoV can be served.

5) The desired FoV is compared with the position and direction of view of the visual sensors. Based on the mutual information criteria and the disparity, the system decides which cameras to use.

6) Since environmental conditions might change at any time, due to weather, newly added objects, changes in the position of objects, and so on, a new image is captured from the selected visual sensors on every FoV request. The new image is compared with the initial image to find their differences.

7) The system calculates the mutual information of the images. Only the two images with the largest mutual information are transmitted to the central processing unit, to save transmission energy.

8) The image generation process is done at the central processing unit. The image generation steps are (a) interpolation of correspondence points, (b) the pre-wrapping process, and (c) the post-wrapping process.
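Step 7 of the procedure can be sketched as a simple ranking. The node identifiers and mutual information values below are made up for illustration, and the function name is hypothetical; the paper does not specify how ties are handled, so this sketch simply keeps the order produced by the sort.

```python
def select_two_nodes(mi_by_node):
    """Return the IDs of the two nodes with the largest mutual information."""
    if len(mi_by_node) < 2:
        raise ValueError("at least two candidate nodes are required")
    ranked = sorted(mi_by_node, key=mi_by_node.get, reverse=True)
    return ranked[:2]


# Illustrative values only, not measurements from the paper.
candidates = {"node_a": 7.08, "node_b": 5.35, "node_c": 1.36}
selected = select_two_nodes(candidates)
print(selected)
```

Only the images from the selected nodes would then be sent to the central processing unit.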

III. RESULTS AND DISCUSSION

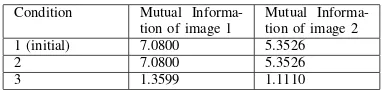

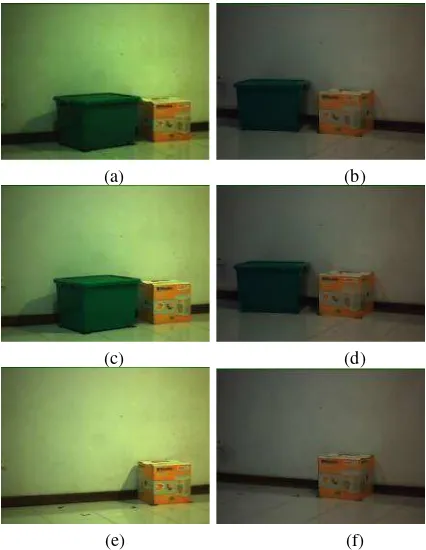

The initial stage begins when all visual sensor nodes capture and store images. The nodes then send the compressed images to the central processing unit. Each node calculates and stores the mutual information of its images. When a particular FoV is requested, the central processing unit selects two visual sensor nodes based on the disparity method, as seen in Fig. 4.

In the initial stage, the mutual information of the first image and of the second image with themselves is computed using (2), giving $H(I_{11}, I_{11}) = 7.0800$ and $H(I_{21}, I_{21}) = 5.3526$ (see TABLE III). Condition 2 is the condition in which images are captured with no differences from the initial condition (Fig. 4c and Fig. 4d), so the mutual information of the images is the same: $H(I_{11}, I_{12}) = 7.0800$ and $H(I_{21}, I_{22}) = 5.3526$. The mutual information values in TABLE III are obtained by comparing the image in Fig. 4c with the image in Fig. 4a, and the image in Fig. 4d with the image in Fig. 4b.
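To make the comparison concrete, the following sketch computes a histogram-based mutual information in bits. It assumes the standard definition $\sum p(a,b)\log_2\frac{p(a,b)}{p(a)p(b)}$, which may differ in detail from (2), and it takes images as flat sequences of pixel intensities. Comparing an image with itself yields its entropy, which is why the self-comparison gives the largest value.

```python
import math
from collections import Counter


def mutual_information(img_a, img_b):
    """Histogram-based mutual information (bits) of two equally sized images."""
    if len(img_a) != len(img_b):
        raise ValueError("images must have the same number of pixels")
    n = len(img_a)
    joint = Counter(zip(img_a, img_b))   # joint intensity histogram
    marg_a = Counter(img_a)              # marginal histogram of image A
    marg_b = Counter(img_b)              # marginal histogram of image B
    mi = 0.0
    for (a, b), count in joint.items():
        p_ab = count / n
        # p_ab / (p_a * p_b) simplifies to count * n / (count_a * count_b)
        mi += p_ab * math.log2(count * n / (marg_a[a] * marg_b[b]))
    return mi


initial = [0, 0, 1, 1, 2, 2, 3, 3]
unchanged = list(initial)
flat_scene = [5] * 8
print(mutual_information(initial, unchanged), mutual_information(initial, flat_scene))
```

In this toy example the unchanged capture keeps the full 2 bits of shared information, while a capture unrelated to the initial image shares none.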

Figure 3. Image Generation Flowchart based on desired FoV request.

Whenever any difference occurs, as in condition 3, the mutual information between the initial image and the image captured at that time becomes smaller: $H(I_{11}, I_{13}) = 1.3599$ and $H(I_{21}, I_{23}) = 1.1110$. These values are the criteria used to determine whether a new image needs to be sent to the central processing unit. When the mutual information is essentially unchanged, the visual sensor node sends only one bit of data, 0, to inform the central processing unit that no new image is available. When the object or scene has been modified, the visual sensor node sends another bit, i.e. bit 1, followed by the newly captured image.
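The signalling rule described above can be sketched as follows. The tolerance used to decide that two mutual information values are "relatively the same" is an assumption, since the text does not quantify it, and the flag is carried in a whole byte here for simplicity rather than in a single bit. The function name is hypothetical.

```python
def build_payload(mi_initial, mi_current, new_image_bytes, tolerance=1e-3):
    """Return what a sensor node would send for one FoV request.

    Flag 0 alone means the scene is unchanged; flag 1 is followed by the
    newly captured (compressed) image. tolerance is an assumed threshold.
    """
    unchanged = abs(mi_initial - mi_current) <= tolerance
    if unchanged:
        return bytes([0])
    return bytes([1]) + new_image_bytes


print(build_payload(7.0800, 7.0800, b"jp2-data"))   # unchanged scene
print(build_payload(7.0800, 1.3599, b"jp2-data"))   # modified scene
```

With the values from TABLE III, condition 2 would produce only the flag, while condition 3 would produce the flag followed by the image data.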

TABLE III.

MUTUAL INFORMATION COMPUTATION.

| Condition | Mutual information of image 1 | Mutual information of image 2 |
|---|---|---|
| 1 (initial) | 7.0800 | 5.3526 |
| 2 | 7.0800 | 5.3526 |
| 3 | 1.3599 | 1.1110 |

Energy consumption, as seen in TABLE IV, is obtained from (26). For condition 2 and condition 3, the processing energy consumption ($E_{proc}$) and the transmission energy consumption ($E_{transmit}$) change as follows:

$$E_{WVSN} = m \cdot t \cdot E_b + MN \sum_{l=1}^{L} \frac{1}{4^{l-1}} \left(10E_{shift} + 12E_{add} + 2E_{read} + 2E_{write}\right)$$

Assuming that the processor is active whenever signal processing takes place, $E_{shift}$, $E_{add}$, $E_{read}$, and $E_{write}$ can be replaced by the energy consumed while the processor is active, as shown in TABLE I. According to this table, the power consumption of the active processor is 192.3 mW. Then, using the encoding time duration, the DWT energy $E_{DWT}$, taken as the processing energy consumption ($E_{proc}$), becomes:

$$E_{proc} = MN \sum_{l=1}^{L} \frac{1}{4^{l-1}} \left(10E_{shift} + 12E_{add} + 2E_{read} + 2E_{write}\right) = P_{proc} \cdot t$$

where $t$ is the time required for the encoding process. Based on the JPEG 2000 parameters in TABLE II, the average encoding time for 0.1 bpp and DWT level 3 is 18.5 s, and the energy consumption becomes:

$$E_{WVSN} = 0.04\,\text{J} + 192.3\,\text{mW} \times 18.5\,\text{s} + 864 \times 3{,}291.5 \times 46.39\,\mu\text{J} = 0.04\,\text{J} + 3.558\,\text{J} + 131.93\,\text{J} \approx 135.52\,\text{J}$$
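The arithmetic for the compressed image can be checked with a short script. The constants are the figures quoted in this section; the per-bit energy of 46.39 µJ is taken from the raw-image calculation later in the section, and the variable and function names are illustrative. The helper `dwt_level_factor` evaluates only the level-dependent sum $\sum_{l=1}^{L} 1/4^{l-1}$ from the processing-energy expression.

```python
P_PROC_W = 192.3e-3     # active-processor power (W)
T_ENCODE_S = 18.5       # average encoding time at 0.1 bpp, DWT level 3 (s)
E_CAPT_J = 0.04         # capture energy (J)
E_BIT_J = 46.39e-6      # energy per transmitted bit (J)
PACKET_BITS = 864       # packet size used in the paper's calculation
NUM_PACKETS = 3291.5    # packet count quoted for the compressed image


def dwt_level_factor(levels):
    """Sum of 1 / 4**(l - 1) for l = 1..levels."""
    return sum(1.0 / 4 ** (level - 1) for level in range(1, levels + 1))


e_proc = P_PROC_W * T_ENCODE_S                    # E_proc = P_proc * t
e_trans = NUM_PACKETS * PACKET_BITS * E_BIT_J     # E_transmit = m * t * E_b
e_total = E_CAPT_J + e_proc + e_trans             # E_WVSN

print(f"level factor (L=3): {dwt_level_factor(3)}")
print(f"E_proc = {e_proc:.3f} J, E_transmit = {e_trans:.2f} J, total = {e_total:.2f} J")
```

The transmission term dominates the total, which is why reducing the number of transmitted bits has the largest effect on the energy budget.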

At condition 3, when the two selected sensor nodes each send a new image, the total energy consumption of sending two JPEG 2000 compressed images as the basis of virtual image construction is 271.04 J. Meanwhile, at condition 2, when the mutual information value is the same as the value at the initial stage, only the information bit is sent. Therefore, the energy required for the transmission process is:

$$E_{WVSN} = E_{capt} + E_{proc} + E_{transmit} = 0.04\,\text{J} + 1.10\,\text{J} + 0.02\,\text{J} = 1.16\,\text{J}$$

Hence, the total energy required to transmit this information from the two selected sensors is 2.32 J.

In contrast, transmitting the image without compression gives a file size of 900 KB. This large file is split into 32,915 packets with an average payload of 28 bytes per packet. Using the Imote2 parameters in TABLE II and (26), the energy consumption becomes:

$$E_{WVSN} = E_{capt} + m \cdot t \cdot E_b = 0.04\,\text{J} + 32{,}915 \cdot 864 \cdot 46.39\,\mu\text{J} = 1{,}319.35\,\text{J}$$
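The packet count quoted above can be reproduced by dividing the file size by the payload size and rounding up. This sketch assumes that 1 KB means 1,024 bytes, which the text does not state explicitly; the function name is hypothetical.

```python
import math


def packet_count(file_size_bytes, payload_bytes=28):
    """Number of packets needed when each packet carries payload_bytes."""
    return math.ceil(file_size_bytes / payload_bytes)


raw_packets = packet_count(900 * 1024)   # 900 KB raw image, 1 KB = 1024 bytes assumed
print(raw_packets)
```

Under that assumption the 900 KB raw image needs 32,915 packets, matching the figure used in the energy calculation.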

Figure 4. Images captured by visual sensors. (a) Initial image captured by visual sensor I. (b) Initial image captured by visual sensor II. (c) Image created by visual sensor I for one FoV without any alteration of the scene. (d) Image created by visual sensor II for one FoV without any alteration of the scene. (e) Image created by visual sensor I for one FoV with alteration of the scene. (f) Image created by visual sensor II for one FoV with alteration of the scene.

Figure 5. (a) Virtual view image at disparity 0.5. (b) Image created by the visual sensor for the same FoV with the same disparity.

Finally, the energy consumption to send one compressed image is reduced to only 10.27% of the energy consumption without compression (see TABLE IV). When there is no modification of the object or scene, i.e. condition 2, the energy consumption reduces to 3% of that required when the whole compressed image is transmitted.

The total energy consumption of sending two JPEG 2000 compressed images as the basis of virtual image construction is 271.04 J, compared with 2638.70 J for two uncompressed images. The virtual image at a disparity of 0.5 is shown in Fig. 5a, and the image captured by a visual sensor for the FoV requested by the user is shown in Fig. 5b.

TABLE IV.

ENERGY CONSUMPTION COMPARISON

| Method | Send Compressed Image | Send Information Bit | Send Raw Image [10] |
|---|---|---|---|
| Image Size (KB) | 90 | 0 | 900 |
| $E_{capt}$ (J) | 0.04 | 0.04 | 0.04 |
| $E_{comp}$ (J) | 3.35 | 1.10 | 0 |
| $E_{trans}$ (J) | 131.93 | 0.02 | 1319.30 |
| $E_{total}$ (J) | 135.52 | 1.16 | 1319.35 |
| PSNR | 25.65 | 36.65 | 36.6 |
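The comparisons drawn from TABLE IV can be reproduced with a few lines. The totals are copied from the table; the helper name is hypothetical and the script is only a sketch of the arithmetic.

```python
def percent_of(value, reference):
    """Express value as a percentage of reference."""
    return 100.0 * value / reference


E_COMPRESSED_J = 135.52   # total energy, send compressed image
E_INFO_BIT_J = 1.16       # total energy, send information bit only
E_RAW_J = 1319.35         # total energy, send raw image

compressed_vs_raw = percent_of(E_COMPRESSED_J, E_RAW_J)
info_bit_vs_compressed = percent_of(E_INFO_BIT_J, E_COMPRESSED_J)
two_compressed = 2 * E_COMPRESSED_J

print(f"compressed / raw: {compressed_vs_raw:.2f} %")
print(f"info bit / compressed: {info_bit_vs_compressed:.2f} %")
print(f"two compressed images: {two_compressed:.2f} J")
```

Sending one compressed image costs about a tenth of the raw-image energy, and sending only the information bit costs under one percent of the compressed-image energy.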

IV. CONCLUSIONS

A virtual view image from a particular direction requested by a user requires at least two images from visual sensors. This application is challenging in a WVSN environment because of its limited resources. One way to save energy is to apply image compression, such as JPEG 2000, on each visual sensor.

In this research, we conclude that applying such a compression method increases the energy consumption on each sensor node by as much as 3.35 J. This cost is offset by a significant reduction in the energy consumed by image transmission. The total energy required to send one compressed image is reduced to only 10.27% of the energy consumption for an uncompressed image.

The mutual information computation, used as a detector of scene modification, also increases the energy consumption on each node. However, when the mutual information shows no change in the scene, the total energy required drops to 1.16 J, or about 0.9% of the energy required to send one compressed image.

ACKNOWLEDGMENT

The authors are grateful to the anonymous referees for their valuable comments and suggestions to improve the presentation of this paper. This work is supported by the Indonesian Ministry of Education and Culture through the BPPS scholarship and the 2013 Doctoral Research Grant, contract number: 175.45/UN14.2/PNL.01.03.00/2013, May 16, 2013.

REFERENCES

[1] I. F. Akyildiz and M. C. Vuran, Wireless Sensor Networks. New York, NY, USA: John Wiley & Sons, Inc., 2010.

[2] J. Zhang, C.-d. Wu, Y.-z. Zhang, and P. Ji, “Energy-efficient adaptive dynamic sensor scheduling for target monitoring in wireless sensor networks,” ETRI Journal, vol. 33, no. 6, pp. 857–863, 2011.

[3] M. Wu and C. W. Chen, “Collaborative image coding and transmission over wireless sensor networks,” EURASIP J. Appl. Signal Process., vol. 2007, no. 1, pp. 223–223, Jan. 2007. [Online]. Available: http://dx.doi.org/10.1155/2007/70481

[4] M. Morbee, L. Tessens, H. Lee, W. Philips, and H. Aghajan, “Optimal camera selection in vision networks for shape approximation,” in Proceedings of the 2008 IEEE 10th Workshop on Multimedia Signal Processing, Cairns, Queensland, Australia, October 2008, pp. 46–51, ISBN: 978-1-4244-2295-1.

[5] L. Tessens, M. Morbee, H. Lee, W. Philips, and H. Aghajan, “Principal view determination for camera selection in distributed smart camera networks,” in Distributed Smart Cameras, 2008. ICDSC 2008. Second ACM/IEEE International Conference on, Sept 2008, pp. 1–10.

[6] T. Matsui, H. Matsuo, and A. Iwata, “Dynamic camera allocation method based on constraint satisfaction and cooperative search,” in Proceedings of 2nd International Conf. on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing, 2001, pp. 955–964.

[7] R. Dai and I. F. Akyildiz, “Joint effect of multiple correlated cameras in wireless multimedia sensor networks,” in Communications, 2009. ICC ’09. IEEE International Conference on, June 2009, pp. 1–5.

[8] W. C. Chia, L. W. Chew, L.-M. Ang, and K. P. Seng, “Low memory image stitching and compression for WMSN using strip-based processing,” Int. J. Sen. Netw., vol. 11, no. 1, pp. 22–32, Jan. 2012. [Online]. Available: http://dx.doi.org/10.1504/IJSNET.2012.045037

[9] E. Monari and K. Kroschel, “A knowledge-based camera selection approach for object tracking in large sensor networks,” in Distributed Smart Cameras, 2009. ICDSC 2009. Third ACM/IEEE International Conference on, Aug 2009, pp. 1–8.

[10] N. P. Sastra, Wirawan, and G. Hendrantoro, “Virtual view image over wireless sensor network,” Telkomnika J., vol. 2011, no. 1, pp. 223–223, Dec. 2011. [Online]. Available: http://dx.doi.org/10.1155/2007/70481

[11] S. Taherian, M. Pias, R. Harle, G. Coulouris, S. Hay, J. Cameron, J. Lasenby, G. Kuntze, I. Bezodis, G. Irwin, et al., “Profiling sprints using on-body sensors,” in Pervasive Computing and Communications Workshops (PERCOM Workshops), 2010 8th IEEE International Conference on. IEEE, 2010, pp. 444–449.

[12] R. Parthasarathy, N. Peterson, W. Song, A. Hurson, and B. Shirazi, “Over the air programming on Imote2-based sensor networks,” in System Sciences (HICSS), 2010 43rd Hawaii International Conference on, Jan 2010, pp. 1–9.

[13] L. Nachman, J. Huang, J. Shahabdeen, R. Adler, and R. Kling, “Imote2: Serious computation at the edge,” in Wireless Communications and Mobile Computing Conference, 2008. IWCMC ’08. International, Aug 2008, pp. 1118–1123.

[14] D. S. Taubman and M. W. Marcellin, JPEG 2000: Image Compression Fundamentals, Standards and Practice. Norwell, MA, USA: Kluwer Academic Publishers, 2001.

[15] D.-G. Lee and S. Dey, “Adaptive and energy efficient wavelet image compression for mobile multimedia data services,” in Communications, 2002. ICC 2002. IEEE International Conference on, vol. 4, 2002, pp. 2484–2490.

[16] Open JPEG Group, “Open JPEG, C-library of JPEG 2000,” 2011. [Online]. Available: http://www.openjpeg.org/

[17] H. Aghajan and A. Cavallaro, Multi-camera Networks: Principles and Applications. Academic Press, 2009.

[18] R. Dai and I. Akyildiz, “A spatial correlation model for visual information in wireless multimedia sensor networks,” Multimedia, IEEE Transactions on, vol. 11, no. 6, pp. 1148–1159, Oct 2009.

[19] D. M. Pham and S. M. Aziz, “An energy efficient image compression scheme for wireless sensor networks,” in Intelligent Sensors, Sensor Networks and Information Processing, 2013 IEEE Eighth International Conference on. IEEE, 2013, pp. 260–264.

[20] D. Le Gall and A. Tabatabai, “Sub-band coding of digital images using symmetric short kernel filters and arithmetic coding techniques,” in Acoustics, Speech, and Signal Processing, 1988. ICASSP-88., 1988 International Conference on, Apr 1988, pp. 761–764, vol. 2.

Nyoman Putra Sastra received the B.Eng. and M.Eng. degrees in electrical engineering from Institut Teknologi Bandung (ITB), Indonesia, in 1998 and 2001, respectively. In 2001, he joined Universitas Udayana, Bali, as a lecturer. He is currently working toward the Ph.D. degree in electrical engineering at ITS. His research interests include wireless multimedia sensor networks and multimedia signal processing. He is a Student Member of the IEEE.

Wirawan received the B.E. degree in telecommunication engineering from Institut Teknologi Sepuluh Nopember (ITS), Surabaya, Indonesia, in 1987, the DEA in signal and image processing from Ecole Supérieure en Sciences Informatiques, Sophia Antipolis, and the Dr. degree in image processing from Telecom ParisTech (previously Ecole Nationale Supérieure des Télécommunications), Paris, France, in 1996 and 2003, respectively. Since 1989 he has been with ITS as a lecturer in the Electrical Engineering Department. His research interests lie in the general area of image and video signal processing for mobile communications, and more recently focus on underwater acoustic communication and networking and on various aspects of wireless sensor networks. He is a member of the IEEE.

Gamantyo Hendrantoro received the B.Eng. degree in electrical engineering from Institut Teknologi Sepuluh Nopember (ITS), Surabaya, Indonesia, in 1992, and the M.Eng. and Ph.D. degrees in electrical engineering from Carleton University, Canada, in 1997 and 2001, respectively. He is presently a Professor with ITS. His research interests include radio propagation modeling and wireless communications. He has recently been engaged in various collaborative studies, including investigation into millimeter wave wireless systems for tropical areas with Kumamoto University, Japan, implementation of digital TV systems with BPPT, Indonesia, and development of Indonesia’s first educational nano-satellite (IINUSAT) together with five other universities and LAPAN. Dr. Gamantyo Hendrantoro is a senior member of the IEEE.

Instructions for Authors

Manuscript Submission

We invite original, previously unpublished, research papers, review, survey and tutorial papers, application papers, plus case studies, short research notes and letters, on both applied and theoretical aspects. Manuscripts should be written in English. All the papers except survey should ideally not exceed 12,000 words (14 pages) in length. Whenever applicable, submissions must include the following elements: title, authors, affiliations, contacts, abstract, index terms, introduction, main text, conclusions, appendixes, acknowledgement, references, and biographies.

Papers should be formatted into A4-size (8.27" x 11.69") pages, with main text of 10-point Times New Roman, in single-spaced two-column format. Figures and tables must be sized as they are to appear in print. Figures should be placed exactly where they are to appear within the text. There is no strict requirement on the format of the manuscripts. However, authors are strongly recommended to follow the format of the final version.

All paper submissions will be handled electronically in EDAS via the JNW Submission Page (URL: http://edas.info/N10935). After logging in to EDAS, you will first register the paper. Afterwards, you will be able to add authors and submit the manuscript (file). If you do not have an EDAS account, you can obtain one. If for some technical reason submission through EDAS is not possible, the author can contact [email protected] for support.

Authors may suggest 2-4 reviewers when submitting their works, by providing us with the reviewers' title, full name and contact information. The editor will decide whether the recommendations will be used or not.

Conference Version

Submissions previously published in conference proceedings are eligible for consideration provided that the author informs the Editors at the time of submission and that the submission has undergone substantial revision. In the new submission, authors are required to cite the previous publication and very clearly indicate how the new submission offers substantively novel or different contributions beyond those of the previously published work. The appropriate way to indicate that your paper has been revised substantially is for the new paper to have a new title. Authors should supply a copy of the previous version to the Editor, and provide a brief description of the differences between the submitted manuscript and the previous version.

If the authors provide a previously published conference submission, Editors will check the submission to determine whether there has been sufficient new material added to warrant publication in the Journal. The Academy Publisher’s guidelines are that the submission should contain a significant amount of new material, that is, material that has not been published elsewhere. New results are not required; however, the submission should contain expansions of key ideas, examples, elaborations, and so on, of the conference submission. The paper submitted to the journal should differ from the previously published material by at least 30 percent.

Review Process

Submissions are accepted for review with the understanding that the same work has been neither submitted to, nor published in, another publication. Concurrent submission to other publications will result in immediate rejection of the submission.

All manuscripts will be subject to a well-established, fair, unbiased peer review and refereeing procedure, and are considered on the basis of their significance, novelty and usefulness to the Journal's readership. The reviewing structure will always ensure the anonymity of the referees. The review output will be one of the following decisions: Accept, Accept with minor changes, Accept with major changes, or Reject.

The review process may take approximately three months to be completed. Should authors be requested by the editor to revise the text, the revised version should be submitted within three months for a major revision or one month for a minor revision. Authors who need more time are kindly requested to contact the Editor. The Editor reserves the right to reject a paper if it does not meet the aims and scope of the journal, it is not technically sound, it is not revised satisfactorily, or if it is inadequate in presentation.

Revised and Final Version Submission

Revised version should follow the same requirements as for the final version to format the paper, plus a short summary about the modifications authors have made and author's response to reviewer's comments.

Authors are requested to use the Academy Publisher Journal Style for preparing the final camera-ready version. A template in PDF and an MS word template can be downloaded from the web site. Authors are requested to strictly follow the guidelines specified in the templates. Only PDF format is acceptable. The PDF document should be sent as an open file, i.e. without any data protection. Authors should submit their paper electronically through email to the Journal's submission address. Please always refer to the paper ID in the submissions and any further enquiries.

Please do not use the Adobe Acrobat PDFWriter to generate the PDF file. Use the Adobe Acrobat Distiller instead, which is contained in the same package as the Acrobat PDFWriter. Make sure that you have used Type 1 or True Type Fonts (check with the Acrobat Reader or Acrobat Writer by clicking on File>Document Properties>Fonts to see the list of fonts and their type used in the PDF document).

Copyright

Submission of your paper to this journal implies that the paper is not under submission for publication elsewhere. Material which has been previously copyrighted, published, or accepted for publication will not be considered for publication in this journal. Submission of a manuscript is interpreted as a statement of certification that no part of the manuscript is copyrighted by any other publisher nor is under review by any other formal publication.

Submitted papers are assumed to contain no proprietary material unprotected by patent or patent application; responsibility for technical content and for protection of proprietary material rests solely with the author(s) and their organizations and is not the responsibility of the Academy Publisher or its editorial staff. The main author is responsible for ensuring that the article has been seen and approved by all the other authors. It is the responsibility of the author to obtain all necessary copyright release permissions for the use of any copyrighted materials in the manuscript prior to the submission. More information about permission request can be found at the web site.

Authors are asked to sign a warranty and copyright agreement upon acceptance of their manuscript, before the manuscript can be published. The Copyright Transfer Agreement can be downloaded from the web site.

Publication Charges and Re-print

The author's company or institution will be requested to pay a flat publication fee of EUR 360 for an accepted manuscript regardless of the length of the paper. The page charges are mandatory. Authors are entitled to a 30% discount on the journal, which is EUR 100 per copy. Reprints of the paper can be ordered with a price of EUR 100 per 20 copies. An allowance of 50% discount may be granted for individuals without a host institution and from less developed countries, upon application. Such application however will be handled case by case.