Deploying Rails

Deploying Rails will help you transform your deployment process from brittle chaos into something organized, understandable, and repeatable.

➤ Trek Glowacki

Independent developer

Covering such a wide range of useful topics such as deployment, configuration management, and monitoring, in addition to using industry-standard tools follow-ing professionally founded best practices, makes this book an indispensable resource for any Rails developer.

➤ Mitchell Hashimoto Lead developer, Vagrant

Targeted for the developer, Deploying Rails presents, in a clear and easily under-standable manner, a bevy of some of the less intuitive techniques it has taken me years to assemble as a professional. If you build Rails apps and have ever asked, “Where/how do I deploy this?” this book is for you.

➤ James Retterer

Deploying Rails

Automate, Deploy, Scale, Maintain,

and Sleep at Night

Anthony Burns

Tom Copeland

The Pragmatic Bookshelf

Programmers, LLC was aware of a trademark claim, the designations have been printed in initial capital letters or in all capitals. The Pragmatic Starter Kit, The Pragmatic Programmer, Pragmatic Programming, Pragmatic Bookshelf, PragProg and the linking g device are trade-marks of The Pragmatic Programmers, LLC.

Every precaution was taken in the preparation of this book. However, the publisher assumes no responsibility for errors or omissions, or for damages that may result from the use of information (including program listings) contained herein.

Our Pragmatic courses, workshops, and other products can help you and your team create better software and have more fun. For more information, as well as the latest Pragmatic titles, please visit us at http://pragprog.com.

The team that produced this book includes: Brian Hogan (editor)

Potomac Indexing, LLC (indexer) Kim Wimpsett (copyeditor) David Kelly (typesetter) Janet Furlow (producer) Juliet Benda (rights) Ellie Callahan (support)

Copyright © 2012 Pragmatic Programmers, LLC. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form, or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior consent of the publisher. Printed in the United States of America.

ISBN-13: 978-1-93435-695-1

Contents

Preface . . . ix

Acknowledgments . . . xv

1. Introduction . . . 1

1.1 Where Do We Host Our Rails Application? 1

1.2 Building Effective Teams with DevOps 5

1.3 Learning with MassiveApp 7

2. Getting Started with Vagrant . . . 9

Installing VirtualBox and Vagrant 10

2.1

2.2 Configuring Networks and Multiple Virtual Machines 18

2.3 Running Multiple VMs 21

2.4 Where to Go Next 24

2.5 Conclusion 25

2.6 For Future Reference 25

3. Rails on Puppet . . . 27

Understanding Puppet 27

3.1

3.2 Setting Up Puppet 28

3.3 Installing Apache with Puppet 33

3.4 Configuring MySQL with Puppet 44

3.5 Creating the MassiveApp Rails Directory Tree 47

3.6 Writing a Passenger Module 50

3.7 Managing Multiple Hosts with Puppet 54

3.8 Updating the Base Box 55

3.9 Where to Go Next 56

3.10 Conclusion 58

4. Basic Capistrano . . . 61

Setting Up Capistrano 62

4.1

4.2 Making It Work 65

4.3 Setting Up the Deploy 68

4.4 Pushing a Release 69

4.5 Exploring Roles, Tasks, and Hooks 76

4.6 Conclusion 80

5. Advanced Capistrano . . . 81

Deploying Faster by Creating Symlinks in Bulk 81

5.1

5.2 Uploading and Downloading Files 83

5.3 Restricting Tasks with Roles 85

5.4 Deploying to Multiple Environments with Multistage 87

5.5 Capturing and Streaming Remote Command Output 88

5.6 Running Commands with the Capistrano Shell 90

5.7 Conclusion 93

6. Monitoring with Nagios . . . 95

A MassiveApp to Monitor 96

6.1

6.2 Writing a Nagios Puppet Module 98

6.3 Monitoring Concepts in Nagios 105

6.4 Monitoring Local Resources 106

6.5 Monitoring Services 110

6.6 Monitoring Applications 121

6.7 Where to Go Next 125

6.8 Conclusion 126

6.9 For Future Reference 127

7. Collecting Metrics with Ganglia . . . 129

Setting Up a Metrics VM 130

7.1

7.2 Writing a Ganglia Puppet Module 131

7.3 Using Ganglia Plugins 140

7.4 Gathering Metrics with a Custom Gmetric Plugin 143

7.5 Producing Metrics with Ruby 146

7.6 Where to Go Next 148

7.7 Conclusion 149

7.8 For Future Reference 150

8. Maintaining the Application . . . 153

Managing Logs 153

8.1

8.3 Organizing Backups and Configuring MySQL Failover 160

8.4 Making Downtime Better 169

9. Running Rubies with RVM . . . 173

Installing RVM 174

9.1

9.2 Serving Applications with Passenger Standalone 177

9.3 Using Systemwide RVM 180

9.4 Watching Passenger Standalone with Monit 182

9.5 Contrasting Gemsets and Bundler 184

9.6 Conclusion 184

10. Special Topics . . . 185

Managing Crontab with Whenever 185

10.1

10.2 Backing Up Everything 188

10.3 Using Ruby Enterprise Edition 193

10.4 Securing sshd 196

10.5 Conclusion 197

A1. A Capistrano Case Study . . . 199

A1.1 Requires and Variables 199

A1.2 Hooks and Tasks 201

A2. Running on Unicorn and nginx . . . 205

Installing and Configuring nginx 206

A2.1

A2.2 Running MassiveApp on Unicorn 208

A2.3 Deploying to nginx and Unicorn 209

A2.4 Where to Go Next 210

Bibliography . . . 213

Preface

Ruby on Rails has taken the web application development world by storm. Those of us who have been writing web apps for a few years remember the good ol’ days when the leading contenders for web programming languages were PHP and Java, with Perl, Smalltalk, and even C++ as fringe choices. Either PHP or Java could get the job done, but millions of lines of legacy code attest to the difficulty of using either of those languages to deliver solid web applications that are easy to evolve.

But Ruby on Rails changed all that. Now thousands of developers around the world are writing and delivering high-quality web applications on a regular basis. Lots of people are programming in Ruby. And there are plenty of books, screencasts, and tutorials for almost every aspect of bringing a Rails applica-tion into being.

We say “almost every aspect” because there’s one crucial area in which Rails applications are not necessarily a joy; that area is deployment. The most ele-gant Rails application can be crippled by runtime environment issues that make adding new servers an adventure, unexpected downtime a regularity, scaling a difficult task, and frustration a constant. Good tools do exist for deploying, running, monitoring, and measuring Rails applications, but pulling them together into a coherent whole is no small effort.

In a sense, we as Rails developers are spoiled. Since Rails has such excellent conventions and practices, we expect deploying and running a Rails application to be a similarly smooth and easy path. And while there are a few standard components for which most Rails developers will reach when rolling out a new application, there are still plenty of choices to make and decisions that can affect an application’s stability.

Rails applications, and we’ll review and apply the practices and tools that helped us keep our consulting clients happy by making their Rails applications reliable, predictable, and, generally speaking, successful. When you finish reading this book, you’ll have a firm grasp on what’s needed to deploy your application and keep it running. You’ll also pick up valuable techniques and principles for constructing a production environment that watches for impending problems and alerts you before things go wrong.

Who Should Read This Book?

This book is for Rails developers who, while comfortable with coding in Ruby and using Rails conventions and best practices, may be less sure of how to get a completed Rails application deployed and running on a server. Just as you learned the Rails conventions for structuring an application’s code using REST and MVC, you’ll now learn how to keep your application faithfully serving hits, how to know when your application needs more capacity, and how to add new resources in a repeatable and efficient manner so you can get back to adding features and fixing bugs.

This book is also for system administrators who are running a Rails application in production for the first time or for those who have a Rails application or two up and running but would like to improve the runtime environment. You probably already have solid monitoring and metrics systems; this book will help you monitor and measure the important parts of your Rails applications. In addition, you may be familiar with Puppet, the open source system provi-sioning tool. If so, by the end of this book you’ll have a firm grasp on using Puppet, and you’ll have a solid set of Puppet manifests. Even if you’re already using Puppet, you may pick up a trick or two from the manifests that we’ve compiled.

What Is in the Book?

This book is centered around an example social networking application called MassiveApp. While MassiveApp may not have taken the world by storm just yet, we’re confident that it’s going to be a winner, and we want to build a great environment in which MassiveApp can grow and flourish. This book will take us through that journey.

We’ll start with Chapter 2, Getting Started with Vagrant, on page 9, where we’ll learn how to set up our own virtual server with Vagrant, an open source tool that makes it easy to configure and manage VirtualBox virtual machines.

In Chapter 3, Rails on Puppet, on page 27, we’ll get an introduction to what’s arguably the most popular open source server provisioning tool, Puppet. We’ll learn about Puppet’s goals, organization, built-in capabilities, and syntax, and we’ll build Puppet manifests for the various components of MassiveApp including Apache, MySQL, and the MassiveApp Rails directory tree and sup-porting files. This chapter will provide you with a solid grasp of the Puppet knowledge that you’ll be able to build on in later chapters and, more impor-tantly, in your applications.

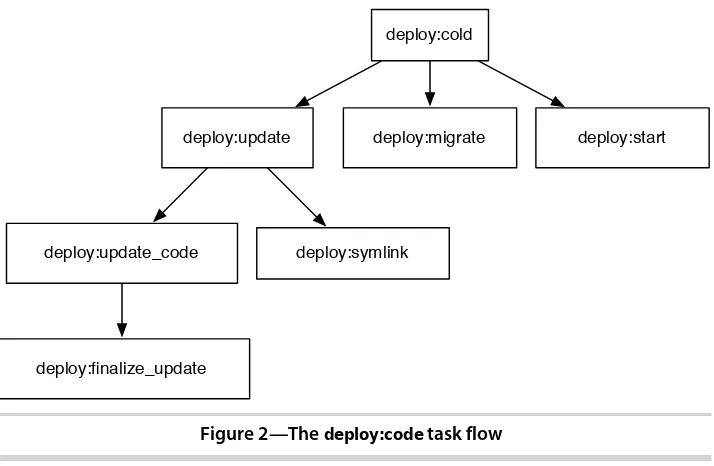

In Chapter 4, Basic Capistrano, on page 61, we’ll explore the premier Rails deployment utility, Capistrano. We’ll build a deployment file for MassiveApp, and we’ll describe how Capistrano features such as hooks, roles, and custom tasks can make Capistrano a linchpin in your Rails development strategy.

Chapter 5, Advanced Capistrano, on page 81 is a deeper dive into more advanced Capistrano topics. We’ll make deployments faster, we’ll use the Capistrano multistage extension to ease deploying to multiple environments, we’ll explore roles in greater depth, and we’ll look at capturing output from remote commands. Along the way we’ll explain more of the intricacies of Capistrano variables and roles. This chapter will get you even further down the road to Capistrano mastery.

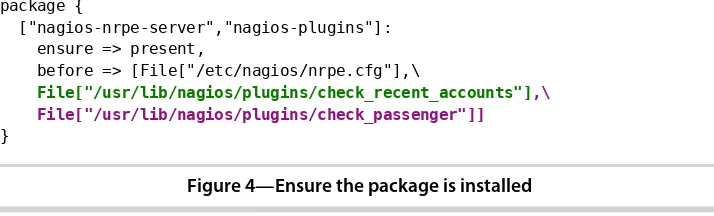

In Chapter 6, Monitoring with Nagios, on page 95, we’ll look at monitoring principles and how they apply to Rails applications. We’ll build a Nagios Puppet module that monitors system, service, and application-level thresholds. We’ll build a custom Nagios check that monitors Passenger memory process size, and we’ll build a custom Nagios check that’s specifically for checking aspects of MassiveApp’s data model.

we’ll explore the Ganglia plugin ecosystem. Then we’ll write a new Ganglia metric collection plugin for collecting MassiveApp user activity.

Chapter 8, Maintaining the Application, on page 153 discusses the ongoing care and feeding of a production Rails application. We’ll talk about performing backups, recovering from hardware failures, managing log files, and handling downtime...both scheduled and unscheduled. This chapter is devoted to items that might not arise during the first few days an application is deployed but will definitely come up as the application weathers the storms of user activity.

Chapter 9, Running Rubies with RVM, on page 173 covers the Ruby enVironment Manager (RVM). RVM is becoming more and more common as a Rails devel-opment tool, and it has its uses in the Rails deployment arena as well. We’ll cover a few common use cases for RVM as well as some tricky issues that arise because of how RVM modifies a program’s execution environment.

Chapter 10, Special Topics, on page 185 discusses a few topics that don’t fit nicely into any of the previous chapters but are nonetheless interesting parts of the Rails deployment ecosystem. We’ll sweep through the Rails technology stack starting at the application level and proceeding downward to the oper-ating system, hitting on various interesting ideas as we go. You don’t need to use these tools and techniques in every Rails deployment, but they’re good items to be familiar with.

Finally, we’ll wrap up with a few short appendixes. The first will cover a line-by-line review of a Capistrano deployment file, and the second will discuss deploying MassiveApp to an alternative technology stack consisting of nginx and Unicorn.

How to Read This Book

If you’re new to Rails deployment, you can read most of this book straight through. The exception would be Chapter 5, Advanced Capistrano, on page 81, which you can come back to once you’ve gotten your application deployed and have used the basic Capistrano functionality for a while.

If you’re a system administrator, you may want to read the Rails-specific parts of Chapter 6, Monitoring with Nagios, on page 95 and Chapter 7, Collecting Metrics with Ganglia, on page 129 to see how to hook monitoring and metrics tools up to a Rails application. You might also be interested in Chapter 8,

Maintaining the Application, on page 153 to see a few solutions to problems that come up with a typical Rails application. If you have a number of Rails applications running on a single server, you will also be interested in Chapter 9, Running Rubies with RVM, on page 173 to see how to isolate those applica-tions’ runtime environments.

Throughout this book we’ll have short “For Future Reference” sections that summarize the configuration files and scripts presented in the chapter. You can use these as quick-reference guides for using the tools that were dis-cussed; they’ll contain the final product without all the explanations.

Tools and Online Resources

Throughout the book we’ll be building a set of configuration files. Since we want to keep track of changes to those files, we’re storing them in a revision control system, and since Git is a popular revision control system, we’re using that. Git’s home page1 contains a variety of documentation options, and you’ll find some excellent practical exercises on http://gitready.com/. You’ll want to have Git installed to get the most out of the examples.

This book has a companion website2 where we post articles and interviews and anything else that we think would be interesting to folks who are deploying Rails applications. You can also follow us on Twitter at http://twitter.com/ deployingrails/.

The code examples in this book are organized by chapter and then by subject. For example, the chapter on Vagrant includes a sample Vagrantfile for running multiple virtual machines, and the path to the file is shown as vagrant/multi-ple_vms/Vagrantfile. In this case, all that chapter’s code examples are located in the vagrant directory, and this particular file is located in a multiple_vms subdi-rectory to differentiate it from other example files with the same name. As another example, the chapter on monitoring shows the path to an example file as monitoring/nagios_cfg_host_and_service/modules/nagios/files/conf.d/hosts/app.cfg. In this case, all the examples are in the monitoring directory, nagios_cfg_host_and_service is a subdirectory that provides some structure to the various examples in that

1. http://git-scm.com/

Acknowledgments

This book pulls together our interactions with so many people that it’s hard to list everyone who’s helped it come into being. Nonetheless, we’d like to recognize certain individuals in particular.

We’d like to thank the technical reviewers for their efforts. After we reread this text for the fiftieth time, our reviewers read it with fresh eyes and found issues, made pithy suggestions, and provided encouraging feedback. Alex Aguilar, Andy Glover, James Retterer, Jared Richardson, Jeff Holland, Matt Margolis, Mike Weber, Srdjan Pejic, and Trek Glowacki, thank you! Many readers provided feedback, discussions, and errata throughout the beta period; our thanks go out to them (especially Fabrizio Soppelsa, John Norman, Michael James, and Michael Wood) for nailing down many typos and missing pieces and helping us explore many informative avenues.

Many thanks to the professionals at the Pragmatic Bookshelf. From the initial discussions with Dave Thomas and Andy Hunt to the onboarding process with Susannah Pfalzer, we’ve been impressed with how dedicated, responsive, and experienced everyone has been. A particular thank-you goes to Brian Hogan, our technical editor, for his tireless shepherding of this book from a few sketchy chapter outlines to the finished product. Brian, you’ve provided feedback when we needed it, suggestions when we didn’t know we needed them, and the occasional firm nudge when we were floundering. Thanks for all your time, effort, and encouragement!

Anthony Burns

Foremost, I’d like to thank my loving wife, Ellie, for putting up with all the long nights spent locked in my office. For my part in this book, I could not have done it without the knowledge and experience of Tom Copeland to back me up every step of the way. I would also like to thank my wonderful parents, Anne and Tim, as well as Chad Fowler, Mark Gardner, Rich Kilmer, and the rest of the folks formerly from InfoEther for opening all of the doors for me that enabled me to help write this book. And lastly, without the shared motivations of my good friend Joshua Kurer, I would never have made it out to the East Coast to begin the journey that led me here today.

Tom Copeland

Thanks to my coauthor, Tony Burns, for his willingness to dive into new technologies and do them right. Using Vagrant for this book’s many exercises was an excellent idea and was entirely his doing.

Thanks to Chris Joyce, who got me started by publishing my first two books under the umbrella of Centennial Books. The next breakfast is on me!

Introduction

A great Rails application needs a great place to live. We have a variety of choices for hosting Rails applications, and in this chapter we’ll take a quick survey of that landscape.

Much of this book is about techniques and tools. Before diving in, though, let’s make some more strategic moves. One choice that we need to make is deciding where and how to host our application. We also want to think philosophically about how we’ll approach our deployment environment. Since the place where we’re deploying to affects how much we need to think about deployment technology, we’ll cover that first.

1.1

Where Do We Host Our Rails Application?

Alternatives for deploying a Rails application generally break down into either using shared hosting, via Heroku, or rolling your own environment at some level. Let’s take a look at those options.

Shared Hosting

The downsides are considerable, though. Shared hosting, as the name implies, means that the resources that are hosting the application are, well, shared. The costs may be low for a seemingly large amount of resources, such as disk space and bandwidth. However, once you start to use resources, the server your application is hosted on can often encounter bottlenecks. When another user’s application on the same server has a usage spike, your own application may respond more slowly or even lock up and stop responding, and there isn’t much you can do about it. Therefore, you can expect considerable vari-ation in applicvari-ation performance depending on what else is happening in the vicinity of your application’s server. This can make performance issues come and go randomly; a page that normally takes 100 milliseconds to load can suddenly spike to a load time of two to three seconds with no code changes. The support and response time is usually a bit rough since just a few opera-tions engineers will be stretched between many applicaopera-tions. Finally, although hardware issues are indeed someone else’s problem, that someone else will also schedule server and network downtime as needed. Those downtime windows may not match up with your plans.

That said, shared hosting does still have a place. It’s a good way to get started for an application that’s not too busy, and the initial costs are reasonable. If you’re using shared hosting, you can skip ahead to the Capistrano-related chapters in this book.

Heroku

Heroku is a popular Rails hosting provider. It deserves a dedicated discussion simply because the Heroku team has taken great pains to ensure that deploying a Rails application to Heroku is virtually painless. Once you have an account in Heroku’s system, deploying a Rails application is as simple as pushing code to a particular Git remote. Heroku takes care of configuring and managing your entire infrastructure. Even when an application needs to scale, Heroku continues to fit the bill since it handles the usual required tasks such as configuring a load balancer. For a small application or a team with limited system administration expertise, Heroku is an excellent choice.

There are other limitations, some of which can be worked around using Heroku’s “add-ons,” but generally speaking, by outsourcing your hosting to Heroku, you’re choosing to give up some flexibility in exchange for having someone else worry about maintaining your application environment. There are some practical issues, such as the lack of access to logs, and finally, some businesses may have security requirements or concerns that prevent them from putting their data and source code on servers they don’t own. For those use cases, Heroku is out of the question.

Like shared hosting, Heroku may be the right choice for some. We choose not to focus on this deployment option in this book since the Heroku website has a large collection of up-to-date documentation devoted to that topic.

Rolling Your Own Environment

For those who have quickly growing applications and want more control, we come to the other option. You can build out your own environment. Rolling your own application’s environment means that you’re the system manager. You’re responsible for configuring the servers and installing the software that will be running the application. There’s some flexibility in these terms; what we’re addressing here is primarily the ability to do your own operating system administration. Let’s look at a few of the possibilities that setting up your own environment encompasses.

In the Cloud

Managed VMs with Engine Yard

Engine Yard’s platform-as-a-service offer lies somewhere between deploying to Heroku and running your own virtual server. Engine Yard provides a layer atop Amazon’s cloud offerings so that you have an automatically built set of virtual servers configured (via Chef) with a variety of tools and services. If needed, you can customize the Chef recipes used to build those servers, modify the deployment scripts, and even ssh directly into the instances. Engine Yard also provides support for troubleshooting application problems. The usual caveats apply; outsourcing to a platform implies some loss of flexibility and some increase in cost, but it’s another point on the deployment spectrum.

Dedicated Hosting

Getting closer to the metal, another option is to host your application on a dedicated machine with a provider like Rackspace or SoftLayer. With that choice, you can be sure you have physical hardware dedicated to your appli-cation. This option eliminates a whole class of performance irregularities. The up-front cost will be higher since you may need to sign a longer-term contract, but you also may need fewer servers since there’s no virtualization layer to slow things down. You’ll also need to keep track of your server age and upgrade hardware once every few years, but you’ll find that your account representative at your server provider will be happy to prompt you to do that. There’s still no need to stock spare parts since your provider takes care of the servers, and your provider will also handle the repair of and recovery from hardware failures such as bad disk drives, burned-out power supplies, and so on.

As with a cloud-based VPS solution, you’ll be responsible for all system administration above the basic operation system installation and the network configuration level. You’ll have more flexibility with your network setup, though, since your provider can physically group your machines on a dedicated switch or router; that usually won’t be an option for a cloud VM solution.

Colocation and Building Your Own Servers

the operational cost of hiring staff for your datacenter are considerations, and there’s always the fear of over or under-provisioning your capacity. But if you need more control than hosting with a provider, this is the way to go.

A Good Mix

We feel that a combination of physical servers at a server provider and some cloud servers provides a good mix of performance and price. The physical servers give you a solid base of operations, while the cloud VMs let you bring on temporary capacity as needed.

This book is targeted for those who are rolling their own environment in some regard, whether the environment is all in the cloud, all on dedicated servers, or a mix of the two. And if that’s your situation, you may already be familiar with the term DevOps, which we’ll discuss next.

1.2

Building Effective Teams with DevOps

While Ruby on Rails is sweeping through the web development world, a sim-ilar change is taking place in the system administration and operations world under the name DevOps. This term was coined by Patrick Debois to describe “an emerging set of principles, methods, and practices for communication, collaboration, and integration between software development (application/soft-ware engineering) and IT operations (systems administration/infrastructure) professionals.”1 In other words, software systems tend to run more smoothly when the people who are writing the code and the people who are running the servers are all working together.

This idea may seem either a truism or an impossible dream depending on your background. In some organizations, software is tossed over the wall from development to operations, and after a few disastrous releases, the operations team learns to despise the developers, demand meticulous documentation for each release, and require the ability to roll back a release at the first sign of trouble. In other organizations, developers are expected to understand some system administration basics, and operations team members are expected to do enough programming so that a backtrace isn’t complete gib-berish. Finally, in the ideal DevOps world, developers and operations teams share expertise, consult each other regularly, plan new features together, and generally work side by side to keep the technical side of the company moving forward and responding to the needs of the business.

One of the primary practical outcomes of the DevOps movement is a commit-ment to automation. Let’s see what that means for those of us who are developing and deploying Rails applications.

Automation Means Removing Busywork

Automation means that we’re identifying tasks that we do manually on a regular basis and then getting a computer to do those tasks instead. Rather than querying a database once a day to see how many users we have, we write a script that queries the database and sends us an email with the current user count. When we have four application servers to configure, we don’t type in all the package installation commands and edit all the configuration files on each server. Instead, we write scripts so that once we have one server perfectly configured, we can apply that same configuration to the other servers in no more time than it takes for the packages to be downloaded and installed. Rather than checking a web page occasionally to see whether an application is still responding, we write a script to connect to the application and send an email if the page load time exceeds some threshold.

Busywork doesn’t mean that the work being done is simple. That iptables

configuration may be the result of hours of skillful tuning and tweaking. It turns into busywork only when we have to type the same series of commands each time we have to roll it out to a new server. We want repeatability; we want to be able to roll a change out to dozens of servers in a few seconds with no fear of making typos or forgetting a step on each server. We’d also like to have records showing that we did roll it out to all the servers at a particular time, and we’d like to know that if need be, we can undo that change easily.

Automation Is a Continuum

Automation is like leveling up; we start small and try to keep improving. A shell script that uses curl to check a website is better than hitting the site manually, and a monitoring system that sends an email when a problem occurs and another when it’s fixed is better still. Having a wiki2 with a list of notes on how to build out a new mail server is an improvement over having no notes and trying to remember what we did last time, and having a system that can build a mail server with no manual intervention is even better. The idea is to make progress toward automation bit by bit; then we can look around after six months of steady progress and be surprised at how far we’ve come.

We want to automate problems as they manifest themselves. If we have one server and our user base tops out at five people, creating a hands-off server build process may not be our biggest bang for the buck. But if those five users are constantly asking for the status of their nightly batch jobs, building a dashboard that lets them see that information without our intervention may be the automation we need to add. This is the “Dev” in DevOps; writing code to solve problems is an available option.

So, we’re not saying that everything must be automated. There’s a point at which the time invested in automating rarely performed or particularly delicate tasks is no longer being repaid; as the saying goes, “Why do in an hour what you can automate in six months?” But having a goal of automating as much as possible is the right mind-set.

Automation: Attitude, Not Tools

Notice that in this automation discussion we haven’t mentioned any software package names. That’s not because we’re averse to using existing solutions to automate tasks but instead that the automation itself, rather than the particular technological means, is the focus. There are good tools and bad tools, and in later chapters we’ll recommend some tools that we use and like. But any automation is better than none.

Since we’re talking about attitude and emotions, let’s not neglect the fact that automation can simply make us happier. There’s nothing more tedious than coming to work and knowing that you have to perform a repetitive, error-prone task for hours on end. If we can take the time to turn that process into a script that does the job in ten minutes, we can then focus on making other parts of the environment better.

That’s the philosophical background. To apply all of these principles in the rest of this book, we’ll need an application that we can deploy to the servers that we’ll be configuring. Fortunately, we have such an application; read on to learn about MassiveApp.

1.3

Learning with MassiveApp

experience. In the meantime, we’ve built ourselves a fine application, and we want to deploy it into a solid environment.

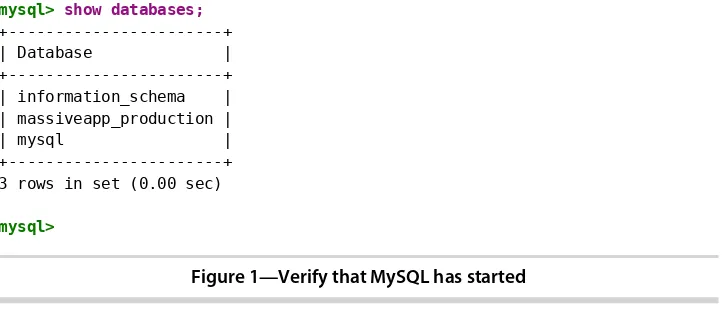

For the purposes of illustrating the tools and practices in this book, we’re fortunate that MassiveApp is also a fairly conventional Rails 3.2 application. It uses MySQL as its data store, implements some auditing requirements, and has several tables (accounts, shares, and bookmarks) that we expect to grow at a great rate. It’s a small application (as you can see in its Git repository3),

but we still want to start off with the right infrastructure pieces in place.

Since we’re building a Rails deployment environment, we also have a compan-ion repository that contains all our system configuratcompan-ion and Puppet scripts. This is located on GitHub as well.4 It has not only the finished configuration but also Git branches that we can use to build each chapter’s configuration from scratch.

Let’s set up those directories. First we’ll create a deployingrails directory and move into it.

$ mkdir ~/deployingrails $ cd ~/deployingrails

Now we can clone both repositories.

$ git clone git://github.com/deployingrails/massiveapp.git

Cloning into 'massiveapp'...

remote: Counting objects: 237, done.

remote: Compressing objects: 100% (176/176), done. remote: Total 237 (delta 93), reused 189 (delta 45) Receiving objects: 100% (237/237), 42.97 KiB, done. Resolving deltas: 100% (93/93), done.

$ git clone git://github.com/deployingrails/massiveapp_ops.git

«

very similar output»

We’ll be creating quite a few subdirectories in the deployingrails directory as we work to get MassiveApp deployed.

We’ve established some of the underlying principles of deployment and DevOps. Let’s move on to seeing how we can set up the first thing needed for successful deployments: our working environment. In the next chapter, we’ll explore two useful utilities that will let us practice our deployments and server configuration without ever leaving the comfort of our laptop.

3. https://github.com/deployingrails/massiveapp

Getting Started with Vagrant

In the previous chapter, we discussed choosing a hosting location and decided that rolling our own environment would be a good way to go. That being the case, knowing how to construct a home for MassiveApp is now at the top of our priority list. We don’t want to leap right into building out our actual production environment, though. Instead, we’d like to practice our build-out procedure first. Also, since MassiveApp will run on several computers in production, it would be handy to run it on several computers in a practice environment as well.

You may well have a couple of computers scattered about your basement, all whirring away happily. But these computers probably already have assigned roles, and rebuilding them just for the sake of running this book’s exercises is not a good use of your time. At work, you may have access to an entire farm of servers, but perhaps you don’t want to use those as a test bed; even simple experiments can be a little nerve-racking since an errant network configuration can result in getting locked out of a machine.

Another possibility is to fire up a few server instances in the cloud. By cloud

Instead, we’ll explore two tools: VirtualBox1 and Vagrant.2 These will let us run VM instances on our computer. To quote from the Vagrant website, Vagrant is “a tool for building and distributing virtualized development envi-ronments.” It lets you manage virtual machines using Oracle’s VirtualBox and gives you the ability to experiment freely with a variety of operating sys-tems without fear of damaging a “real” server.

Setting up these tools and configuring a few virtual machines will take a little time, so there is an initial investment here. But using these tools will allow us to set up a local copy of our production environment that we’ll use to develop our system configuration and test MassiveApp’s deployment. And as MassiveApp grows and we want to add more services, we’ll have a safe and convenient environment in which to try new systems.

2.1

Installing VirtualBox and Vagrant

First we need to install both Vagrant and VirtualBox.

Installing VirtualBox

Vagrant is primarily a driver for VirtualBox virtual machines, so VirtualBox is the first thing we’ll need to find and install. VirtualBox is an open source project and is licensed under the General Public License (GPL) version 2, which means that the source code is available for our perusal and we don’t have to worry too much about it disappearing. More importantly for our immediate purposes, installers are available for Windows, Linux, Macintosh, and Solaris hosts on the VirtualBox website.3 There’s also an excellent

installation guide for a variety of operating systems on the site.4 Armed with those resources, we can download and run the installer to get going. We’ll want to be sure to grab version 4.1 or newer, because current releases of Vagrant depend on having a recent VirtualBox in place.

Now that VirtualBox is installed, we can run it and poke around a bit; it’s an extremely useful tool by itself. In the VirtualBox interface, there are options to create virtual machines and configure them to use a certain amount of memory, to have a specific network configuration, to communicate with serial and USB ports, and so on. But now we’ll see a better way to manage our VirtualBox VMs.

1. http://virtualbox.org

2. http://vagrantup.com

3. http://www.virtualbox.org/wiki/Downloads

Installing Vagrant

The next step is to install Vagrant on our computer. The Vagrant developers have thoughtfully provided installers for a variety of systems, so let’s go to the download page.5 There we can grab the latest release (1.0.2 as of this writing) and install it. This is the preferred method of installation, but if you need to, you can also fall back to installing Vagrant as a plain old RubyGem.

Once we’ve installed Vagrant, we can verify that all is well by running the command-line tool, aptly named vagrant, with no arguments. We’ll see a burst of helpful information.

$ vagrant

Usage: vagrant [-v] [-h] command [<args>]

-v, --version Print the version and exit.

-h, --help Print this help.

Available subcommands: box

destroy halt init package provision reload resume ssh ssh-config status suspend up

«

and more»

We can also get more information on any command by running vagrant with the command name and the -h option.

$ vagrant box -h

Usage: vagrant box <command> [<args>]

Available subcommands: add

list remove repackage

Now that Vagrant is installed, we’ll use it to create a new VM on which to hone our Rails application deployment skills.

Creating a Virtual Machine with Vagrant

Vagrant has built-in support for creating Ubuntu 10.04 virtual machines, and we’ve found Ubuntu to be a solid and well-maintained distribution. Therefore, we’ll use that operating system for our first VM. The initial step is to add the VM definition; in Vagrant parlance, that’s a box, and we can add one with the vagrant box add command. Running this command will download a large file (the Ubuntu 10.04 64-bit image, for example, is 380MB), so wait to run it until you’re on a fast network, or be prepared for a long break.

$ vagrant box add lucid64 http://files.vagrantup.com/lucid64.box

[vagrant] Downloading with Vagrant::Downloaders::HTTP...

[vagrant] Downloading box: http://files.vagrantup.com/lucid64.box

«

a nice progress bar»

The name “lucid64” is derived from the Ubuntu release naming scheme. “lucid” refers to Ubuntu’s 10.04 release name of Lucid Lynx, and “64” refers to the 64-bit installation rather than the 32-bit image. We could give this box any name (for example, vagrant box add my_ubuntu http://files.vagrantup.com/lucid64.box

would name the box my_ubuntu), but we find that it’s easier to keep track of boxes if they have names related to the distribution.

Ubuntu is a popular distribution, but it’s definitely not the only option. A variety of other boxes are available in the unofficial Vagrant box index.6 Once the box is downloaded, we can create our first virtual machine. Let’s get into the deployingrails directory that we created in Section 1.3, Learning with MassiveApp, on page 7.

$ cd ~/deployingrails/ $ mkdir first_box $ cd first_box

Now we’ll run Vagrant’s init command to create a new Vagrant configuration file, which is appropriately named Vagrantfile.

$ vagrant init

A `Vagrantfile` has been placed in this directory. You are now ready to `vagrant up` your first virtual environment! Please read the comments in the Vagrantfile as well as documentation on `vagrantup.com` for more information on using Vagrant.

Where Am I?

One of the tricky bits about using virtual machines and showing scripting examples is displaying the location where the script is running. We’re going to use a shell prompt convention to make it clear where each command should be run.

If we use a plain shell prompt (for example, $), that will indicate that a command should be run on the host, in other words, on your laptop or desktop machine.

If a command is to be run inside a virtual machine guest, we’ll use a shell prompt with the name of the VM (for example, vm $). In some situations, we’ll have more than one VM running at once; for those cases, we’ll use a prompt with an appropriate VM guest name such as nagios $ or app $.

Let’s open Vagrantfile in a text editor such as TextMate or perhaps Vim. Don’t worry about preserving the contents; we can always generate a new file with another vagrant init. We’ll change the file to contain the following bit of Ruby code, which sets our first VM’s box to be the one we just downloaded. Since we named that box lucid64, we need to use the same name here.

introduction/Vagrantfile

Vagrant::Config.run do |config| config.vm.box = "lucid64"

end

That’s the bare minimum to get a VM running. We’ll save the file and then start our new VM by running vagrant up in our first_box directory.

$ vagrant up

[default] Importing base box 'lucid64'...

[default] Matching MAC address for NAT networking... [default] Clearing any previously set forwarded ports... [default] Forwarding ports...

[default] -- 22 => 2222 (adapter 1)

[default] Creating shared folders metadata...

[default] Clearing any previously set network interfaces... [default] Booting VM...

[default] Waiting for VM to boot. This can take a few minutes. [default] VM booted and ready for use!

[default] Mounting shared folders... [default] -- v-root: /vagrant

Despite the warning that “This can take a few minutes,” this entire process took just around a minute on a moderately powered laptop. And at the end of that startup interval, we can connect into our new VM using ssh.

$ vagrant ssh

VirtualBox Guest Additions

When firing up a new VirtualBox instance, you may get a warning about the “guest additions” not matching the installed version of VirtualBox. The guest additions make working with a VM easier; for example, they allow you to change the guest window size. They are included with VirtualBox as an ISO image within the installation package, so to install the latest version, you can connect into your VM and run these commands:

vm $ sudo apt-get install dkms -y

vm $ sudo /dev/cdrom/VBoxLinuxAdditions-x86.run

If you’re running Mac OS X and you’re not able to find the ISO image, look in the Applications directory and copy it over if it’s there; then mount, install, and restart the guest.

$ cd ~/deployingrails/first_box/

$ cp /Applications/VirtualBox.app/Contents/MacOS/VBoxGuestAdditions.iso .

$ vagrant ssh

vm $ mkdir vbox

vm $ sudo mount -o loop /vagrant/VBoxGuestAdditions.iso vbox/ vm $ sudo vbox/VBoxLinuxAdditions.run

Verifying archive integrity... All good.

Uncompressing VirtualBox 4.1.12 Guest Additions for Linux...

«and more output» vm $ exit

$ vagrant reload

Alternatively, you can download the guest additions from the VirtualBox site.

vm $ mkdir vbox

vm $ wget --continue --output-document \ vbox/VBoxGuestAdditions_4.1.12.iso\

http://download.virtualbox.org/virtualbox/4.1.12/VBoxGuestAdditions_4.1.12.iso vm $ sudo mount -o loop vbox/VBoxGuestAdditions_4.1.12.iso vbox/

«continue as above»

This is all the more reason to build a base box; you won’t want to have to fiddle with this too often.

Take a moment to poke around. You’ll find that you have a fully functional Linux host that can access the network, mount drives, stop and start services, and do all the usual things that you’d do with a server.

When we create a VM using Vagrant, under the covers Vagrant is invoking the appropriate VirtualBox APIs to drive the creation of the VM. This results in a new subdirectory of ~/VirtualBox VMs/ with the virtual machine image, some log files, and various other bits that VirtualBox uses to keep track of VMs. We can delete the Vagrantfile and the VM will still exist, or we can delete the VM (whether in the VirtualBox user interface or with Vagrant) and the

and the VM as being connected, though; that way, we can completely manage the VM with Vagrant.

Working with a Vagrant Instance

We can manage this VM using Vagrant as well. As we saw in the vagrant com-mand output in Installing Vagrant, on page 11, we can suspend the virtual machine, resume it, halt it, or outright destroy it. And since creating new instances is straightforward, there’s no reason to keep an instance around if we’re not using it.

We connected into the VM without typing a password. This seems mysterious, but remember the “Forwarding ports” message that was printed when we ran

vagrant up? That was reporting that Vagrant had successfully forwarded port 2222 on our host computer to port 22 on the virtual machine. To show that there’s nothing too mysterious going on, we can bypass Vagrant’s vagrant ssh port-forwarding whizziness and ssh into the virtual machine. We’re adding several ssh options (StrictHostKeyChecking and UserKnownHostsFile) that vagrant ssh adds by default, and we can enter vagrant when prompted for a password.

$ ssh -p 2222 \

-o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null vagrant@localhost

Warning: Permanently added '[localhost]:2222' (RSA) to the list of known hosts. vagrant@localhost's password:

Last login: Thu Feb 2 00:01:58 2012 from 10.0.2.2 vm $

Vagrant includes a “not so private” private key that lets vagrant ssh connect without a password. We can use this outside of Vagrant by referencing it with the -i option.

$ ssh -p 2222 \

-o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null \ -i ~/.vagrant.d/insecure_private_key vagrant@localhost

Warning: Permanently added '[localhost]:2222' (RSA) to the list of known hosts. Last login: Thu Feb 2 00:03:34 2012 from 10.0.2.2

vm $

The Vagrant site refers to this as an “insecure” key pair, and since it’s dis-tributed with Vagrant, we don’t recommend using it for anything else. But it does help avoid typing in a password when logging in. We’ll use this forwarded port and this insecure private key extensively in our Capistrano exercises.

Building a Custom Base Box

We used the stock Ubuntu 10.04 64-bit base box to build our first virtual machine. Going forward, though, we’ll want the latest version of Ruby to be installed on our virtual machines. So, now we’ll take that base box, customize it by installing Ruby 1.9.3, and then build a new base box from which we’ll create other VMs throughout this book. By creating our own customized base box, we’ll save a few steps when creating new VMs; for example, we won’t have to download and compile Ruby 1.9.3 each time.

Let’s create a new virtual machine using the same Vagrantfile as before. First we’ll destroy the old one just to make sure we’re starting fresh; we’ll use the

force option so that we’re not prompted for confirmation. $ cd ~/deployingrails/first_box

$ vagrant destroy --force

[default] Forcing shutdown of VM...

[default] Destroying VM and associated drives...

This time we have a basic Vagrantfile in that directory, so we don’t even need to run vagrant init. For this VM we won’t have to download the base box, so creating it will take only a minute or so. We’ll execute vagrant up to get the VM created and running.

$ vagrant up $ vagrant ssh

vm $

Our VM is started and we’re connected to it, so let’s use the Ubuntu package manager, apt-get, to fetch the latest Ubuntu packages and install some devel-opment libraries. Having these in place will let us compile Ruby from source and install Passenger.

vm $ sudo apt-get update -y

vm $ sudo apt-get install build-essential zlib1g-dev libssl-dev libreadline-dev \ git-core curl libyaml-dev libcurl4-dev libsqlite3-dev apache2-dev -y

Now we’ll remove the Ruby installation that came with the lucid64 base box.

vm $ sudo rm -rf /opt/vagrant_ruby

Next we’ll download Ruby 1.9.3 using curl. The --remote-name flag tells curl to save the file on the local machine with the same filename as the server pro-vides.

vm $ curl --remote-name http://ftp.ruby-lang.org/pub/ruby/1.9/ruby-1.9.3-p194.tar.gz

vm $ tar zxf ruby-1.9.3-p194.tar.gz vm $ cd ruby-1.9.3-p194/

vm $ ./configure

«

lots of output»

vm $ make«

and more output»

vm $ sudo make install«

still more output»

We’ll verify that Ruby is installed by running a quick version check.

vm $ ruby -v

ruby 1.9.3p194 (2012-04-20 revision 35410) [x86_64-linux]

Next we’ll verify the default gems are in place.

vm $ gem list

*** LOCAL GEMS ***

bigdecimal (1.1.0) io-console (0.3) json (1.5.4) minitest (2.5.1) rake (0.9.2.2) rdoc (3.9.4)

We’ve set up our VM the way we want it, so let’s log out and package up this VM as a new base box.

vm $ exit

$ vagrant package

[vagrant] Attempting graceful shutdown of linux... [vagrant] Cleaning previously set shared folders... [vagrant] Creating temporary directory for export... [vagrant] Exporting VM...

[default] Compressing package to: [some path]/package.box

This created a package.box file in our current directory. We’ll rename it and add it to our box list with the vagrant box add command.

$ vagrant box add lucid64_with_ruby193 package.box

[vagrant] Downloading with Vagrant::Downloaders::File... [vagrant] Copying box to temporary location...

[vagrant] Extracting box... [vagrant] Verifying box...

[vagrant] Cleaning up downloaded box...

$ vagrant box list

lucid32 lucid64

lucid64_with_ruby193

Our new box definition is there, so we’re in good shape. Let’s create a new directory on our host machine and create a Vagrantfile that references our new base box by passing the box name to vagrant init.

$ mkdir ~/deployingrails/vagrant_testbox $ cd ~/deployingrails/vagrant_testbox $ vagrant init lucid64_with_ruby193

A `Vagrantfile` has been placed in this directory. You are now ready to `vagrant up` your first virtual environment! Please read the comments in the Vagrantfile as well as documentation on `vagrantup.com` for more information on using Vagrant.

Now we’ll fire up our new VM, ssh in, and verify that Ruby is there.

$ vagrant up

«

lots of output»

$ vagrant ssh

vm $ ruby -v

ruby 1.9.3p194 (2012-04-20 revision 35410) [x86_64-linux]

It looks like a success. We’ve taken the standard lucid64 base box, added some development libraries and the latest version of Ruby, and built a Vagrant base box so that other VMs we create will have our customizations already in place.

Installing a newer Ruby version isn’t the only change we can make as part of a base box. A favorite .vimrc, .inputrc, or shell profile that always gets copied onto servers are all good candidates for building into a customized base box. Anything that prevents post-VM creation busywork is fair game.

Next we’ll build another VM that uses other useful Vagrant configuration options.

2.2

Configuring Networks and Multiple Virtual Machines

Our previous Vagrantfile was pretty simple; we needed only one line to specify our custom base box. Let’s build another VM and see what other customiza-tions Vagrant has to offer. Here’s our starter file:

vagrant/Vagrantfile

Vagrant::Config.run do |config| config.vm.box = "lucid64"

Setting VM Options

The Vagrantfile is written in Ruby; as with many other Ruby-based tools, Vagrant provides a simple domain-specific language (DSL) for configuring VMs. The outermost construct is the Vagrant::Config.run method call. This yields a Vagrant:: Config::Top object instance that we can use to set configuration information. We can set any VirtualBox option; we can get a comprehensive list of the available options by running the VirtualBox management utility.

$ VBoxManage --help | grep -A 10 modifyvm

VBoxManage modifyvm <uuid|name> [--name <name>]

[--ostype <ostype>]

[--memory <memorysize in MB>]

«

and more options»

Our example is setting the host name and the memory limit by calling con-fig.vm.customize and passing in an array containing the command that we’re invoking on the VirtualBox management utility, followed by the configuration items’ names and values.

vagrant/with_options/Vagrantfile

config.vm.customize ["modifyvm", :id, "--name", "app", "--memory", "512"]

We’ve found that it’s a good practice to specify the name and memory limit for all but the most trivial VMs. Most of the other items in the customize array we probably won’t need to worry about since they’re low-level things such as whether the VM supports 3D acceleration; VirtualBox will detect these values on its own. The VirtualBox documentation7 is a good source of information on the settings for customizing a VM.

Setting Up the Network

Our VM will be much more useful if the host machine can access services running on the guest. Vagrant provides the ability to forward ports to the guest so we can (for example) browse to localhost:4567 and have those HTTP requests forwarded to port 80 on the guest. We can also set up a network for the VM that makes it accessible to the host via a statically assigned IP address. We can then assign an alias in the host’s /etc/hosts file so we can access our VM by a domain name in a browser address bar, by ssh, or by other methods.

First let’s add a setting, host_name, to specify the host name that Vagrant will assign to the VM. This will also be important later for Puppet, which will check the host name when determining which parts of our configuration to run.

vagrant/with_options/Vagrantfile

config.vm.host_name = "app"

We want to be able to connect into the VM with ssh, and we’d also like to browse into the VM once we get MassiveApp deployed there. Thus, we’ll add several instances of another setting, forward_port. This setting specifies the port on the VM to forward requests to (port 80) and the port on the host to forward (port 4567). With this in place, browsing to port 4567 on the host machine will let us view whatever the VM is serving up on port 80. For forwarding the

ssh port, we include the :auto option, which tells Vagrant that if the port isn’t available, it should search for another available port. Whenever we add new

forward_port directives, we need to restart the VM via vagrant reload. Let’s add those directives.

vagrant/with_options/Vagrantfile

config.vm.forward_port 22, 2222, :auto => true config.vm.forward_port 80, 4567

The next setting, network, specifies that this VM will use a host-only network so that this VM won’t appear as a device on the network at large. This setting also specifies the VM’s IP address on the host-only network. We want to avoid using common router-assigned subnets such as 127.0.0.2 or 192.168.*, because they may clash with existing or future subnets that a router assigns. The Vagrant documentation recommends the 33.33.* subnet, and we’ve found that works well. Keep in mind that when multiple Vagrant VMs are running, they will be able to communicate as long as they are in the same subnet. So, a VM with the IP address 33.33.12.34 will be able to talk to one at 33.33.12.56 but not at 33.44.12.34. Let’s add a network setting to our Vagrantfile.

vagrant/with_options/Vagrantfile

config.vm.network :hostonly, "33.33.13.37"

Like with the port forwarding settings, changing the network requires a VM restart.

Sharing Folders

vagrant/with_options/Vagrantfile

config.vm.share_folder "hosttmp", "/hosttmp", "/tmp"

This greatly simplifies moving data back and forth between the VM and the host system, and we’ll use this feature throughout our configuration exercises.

2.3

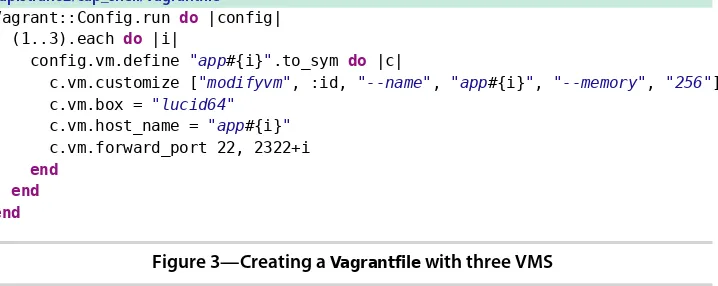

Running Multiple VMs

Vagrant enables us to run multiple guest VMs on a single host. This is handy for configuring database replication between servers, building software firewall rules, tweaking monitoring checks, or almost anything for which it’s helpful to have more than one host for proper testing. Let’s give it a try now; first we need a new directory to hold our new VM.

$ mkdir ~/deployingrails/multiple_vms $ cd ~/deployingrails/multiple_vms

Now we’ll need a Vagrantfile. The syntax to define two separate configurations within our main configuration is to call the define method for each with a dif-ferent name.

Vagrant::Config.run do |config|

config.vm.define :app do |app_config|

end

config.vm.define :db do |db_config|

end end

We’ll want to set vm.name and a memory size for each VM.

Vagrant::Config.run do |config|

config.vm.define :app do |app_config|

app_config.vm.customize ["modifyvm", :id, "--name", "app", "--memory", "512"]

end

config.vm.define :db do |db_config|

db_config.vm.customize ["modifyvm", :id, "--name", "db", "--memory", "512"]

end end

We’ll use the same box name for each VM, but we don’t need to forward port 80 to the db VM, and we need to assign each VM a separate IP address. Let’s add these settings to complete our Vagrantfile.

vagrant/multiple_vms/Vagrantfile

Vagrant::Config.run do |config|

config.vm.define :app do |app_config|

app_config.vm.customize ["modifyvm", :id, "--name", "app", "--memory", "512"] app_config.vm.box = "lucid64_with_ruby193"

Instances Need Unique Names

If you attempt to start more than one VM with the same name (in other words, the same modifyvm --name value), you’ll get an error along the lines of “VBoxManage: error: Could not rename the directory.” The fix is to either choose a different instance name for the new VM or, if the old VM is a leftover from previous efforts, just destroy the old VM. Note that halting the old VM is not sufficient; it needs to be destroyed.

app_config.vm.forward_port 22, 2222, :auto => true app_config.vm.forward_port 80, 4567

app_config.vm.network :hostonly, "33.33.13.37"

end

config.vm.define :db do |db_config|

db_config.vm.customize ["modifyvm", :id, "--name", "db", "--memory", "512"] db_config.vm.box = "lucid64_with_ruby193"

db_config.vm.host_name = "db"

db_config.vm.forward_port 22, 2222, :auto => true db_config.vm.network :hostonly, "33.33.13.38"

end end

Our VMs are defined, so we can start them both with vagrant up. The output for each VM is prefixed with the particular VM’s name.

$ vagrant up

[app] Importing base box 'lucid64_with_ruby193'... [app] Matching MAC address for NAT networking... [app] Clearing any previously set forwarded ports... [app] Forwarding ports...

[app] -- 22 => 2222 (adapter 1) [app] -- 80 => 4567 (adapter 1)

[app] Creating shared folders metadata...

[app] Clearing any previously set network interfaces... [app] Preparing network interfaces based on configuration... [app] Running any VM customizations...

[app] Booting VM...

[app] Waiting for VM to boot. This can take a few minutes. [app] VM booted and ready for use!

[app] Configuring and enabling network interfaces... [app] Setting host name...

[app] Mounting shared folders... [app] -- v-root: /vagrant

[db] Importing base box 'lucid64_with_ruby193'... [db] Matching MAC address for NAT networking... [db] Clearing any previously set forwarded ports...

[db] Fixed port collision for 22 => 2222. Now on port 2200. [db] Fixed port collision for 22 => 2222. Now on port 2201. [db] Forwarding ports...

[db] Creating shared folders metadata...

[db] Clearing any previously set network interfaces... [db] Preparing network interfaces based on configuration... [db] Running any VM customizations...

[db] Booting VM...

[db] Waiting for VM to boot. This can take a few minutes. [db] VM booted and ready for use!

[db] Configuring and enabling network interfaces... [db] Setting host name...

[db] Mounting shared folders... [db] -- v-root: /vagrant

We can connect into each VM using our usual vagrant ssh, but this time we’ll also need to specify the VM name.

$ vagrant ssh app

Last login: Wed Dec 21 19:47:36 2011 from 10.0.2.2 app $ hostname

app app $ exit

$ vagrant ssh db

Last login: Thu Dec 22 21:19:54 2011 from 10.0.2.2 db $ hostname

db

Generally, when we apply any Vagrant command to one VM in a multiple-VM cluster, we need to specify the VM name. For a few commands (for example,

vagrant halt), this is optional, and we can act on all VMs by not specifying a host name.

To verify inter-VM communications, let’s connect from db to app via ssh using the vagrant account and a password of vagrant.

db $ ssh 33.33.13.37

The authenticity of host '33.33.13.37 (33.33.13.37)' can't be established. RSA key fingerprint is ed:d8:51:8c:ed:37:b3:37:2a:0f:28:1f:2f:1a:52:8a. Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '33.33.13.37' (RSA) to the list of known hosts. [email protected]'s password:

Last login: Thu Dec 22 21:19:41 2011 from 10.0.2.2 app $

Finally, we can shut down and destroy both VMs with vagrant destroy.

$ vagrant destroy --force

[db] Forcing shutdown of VM...

[db] Destroying VM and associated drives... [app] Forcing shutdown of VM...

We used two VMs in this example, but Vagrant can handle as many VMs as you need up to the resource limits of your computer. So, that ten-node Hadoop cluster on your laptop is finally a reality.

2.4

Where to Go Next

We’ve covered a fair bit of Vagrant functionality in this chapter, but there are a few interesting areas that are worth exploring once you’re comfortable with this chapter’s contents: using Vagrant’s bridged network capabilities and using the Vagrant Puppet provisioner.

Bridged Networking

We’ve discussed Vagrant’s “host-only” network feature (which hides the VM from the host computer’s network) in Setting Up the Network, on page 19. Another option is bridged networking, which causes the VM to be accessible by other devices on the network. When the VM boots, it gets an IP address from the network’s DHCP server, so it’s as visible as any other device on the network.

This is a straightforward feature, so there’s not too much detail to learn, but it’s worth experimenting with so that it’s in your toolbox. For example, with bridged networking, a guest VM can serve up a wiki, an internal build tool, or a code analysis tool. Co-workers on the same network can browse in and manage the guest as needed, all without disturbing the host computer’s ability to restart a web or database service. It’s a great feature to be aware of.

The Puppet Provisioner

We’ll spend the next chapter, and much of the rest of this book, discussing Puppet and its uses. But to glance ahead, Vagrant provides a provisioning

2.5

Conclusion

In this chapter, we covered the following:

• Installing VirtualBox in order to create virtual machines

• Setting up Vagrant to automate the process of creating and managing VMs with VirtualBox

• Creating a customized Vagrant base box to make new box setup faster • Examining a few of the features that Vagrant offers for configuring VMs

In the next chapter, we’ll introduce Puppet, a system administration tool that will help us configure the virtual machines that we can now build quickly and safely. We’ll learn the syntax of Puppet’s DSL and get our Rails stack up and running along the way.

2.6

For Future Reference

Creating a VM

To create a new VM using Vagrant, simply run the following commands:

$ mkdir newdir && cd newdir

$ vagrant init lucid64 && vagrant up

Creating a Custom Base Box

To cut down on initial box setup, create a customized base box.

$ mkdir newdir && cd newdir $ vagrant init lucid64 && vagrant up

# Now 'vagrant ssh' and make your changes, then log out of the VM

$ vagrant package

$ vagrant box add your_new_base_box_name package.box

A Complete Vagrantfile

Here’s a Vagrantfile that uses a custom base box, shares a folder, forwards an additional port, and uses a private host-only network:

vagrant/with_options/Vagrantfile

Vagrant::Config.run do |config|

config.vm.customize ["modifyvm", :id, "--name", "app", "--memory", "512"] config.vm.box = "lucid64_with_ruby193"

config.vm.host_name = "app"

config.vm.forward_port 22, 2222, :auto => true config.vm.forward_port 80, 4567

config.vm.network :hostonly, "33.33.13.37"

config.vm.share_folder "hosttmp", "/hosttmp", "/tmp"

Rails on Puppet

Now that we know how to create a virtual machine, we need to know how to configure it to run MassiveApp. We could start creating directories and installing packages manually, but that would be error-prone, and we’d need to repeat all those steps the next time we built out a server. Even if we put our build-out process on a wiki or in a text file, someone else looking at that documentation has no way of knowing whether it’s the current configuration or even whether the notes were accurate to begin with. Besides, manually typing in commands is hardly in keeping with the DevOps philosophy. We need some automation instead.

One software package that enables automated server configuration is Puppet.1

Puppet is both a language and a set of tools that allows us to configure our servers with the packages, services, and files that they need. Commercial support is available for Puppet, and also a variety of tutorials, conference presentations, and other learning materials are available online. It’s a popular tool, it’s been around for a few years, and we’ve found it to be effective, so it’s our favorite server automation utility.

3.1

Understanding Puppet

Puppet automates server provisioning by formalizing a server’s configuration into manifests. Puppet’s manifests are text files that contain declarations written in Puppet’s domain-specific language (DSL). When we’ve defined our configuration using this DSL, Puppet will ensure that the machine’s files, packages, and services match the settings we’ve specified.

To use Puppet effectively, though, we still have to know how to configure a server; Puppet will just do what we tell it. If, for example, our Puppet manifests don’t contain the appropriate declarations to start a service on system boot, Puppet won’t ensure that the service is set up that way. In other words, system administration knowledge is still useful. The beauty of Puppet is that the knowledge we have about systems administration can be captured, formalized, and applied over and over. We get to continue doing the interesting parts of system administration, that is, understanding, architecting, and tuning ser-vices. Meanwhile, Puppet automates away the boring parts, such as editing configuration files and manually running package installation commands.

In this chapter, we’ll install and configure Puppet and then write Puppet manifests that we can use to build out virtual machines on which to run MassiveApp. By the end of this chapter, not only will we have a server ready for receiving a MassiveApp deploy, but we’ll also have the Puppet configuration necessary to quickly build out more servers. And we’ll have gained the general Puppet knowledge needed to create additional Puppet manifests as we encounter other tools in later chapters.

3.2

Setting Up Puppet

We’ll accumulate a variety of scripts, files, and Puppet manifests while developing a server’s configuration. We also need a place to keep documenta-tion on more complex operadocumenta-tions procedures. That’s a bunch of files and data, and we don’t want to lose any of it. So, as with everything else when it comes to development, putting these things in version control is the first key step in creating a maintainable piece of our system.

We cloned this book’s massiveapp_ops GitHub repository in Section 1.3, Learning with MassiveApp, on page 7, and if we list the branches in that repository, we see that it contains an empty branch.

$ cd ~/deployingrails/massiveapp_ops/ $ git branch -a

empty * master

without_ganglia without_nagios

remotes/origin/HEAD -> origin/master remotes/origin/empty

remotes/origin/master

Puppet Alternatives: Chef

Chefa is a popular alternative to Puppet. It’s also open source, and it can be run on an in-house server the same as we’re running Puppet. Alternatively, it’s supported by OpsCode, which provides the same functionality in a software-as-a-service solution.

There is a variety of terminology and design differences, but generally Chef has the same primary focus as Puppet: automating the configura