
Journal of Education for Business

ISSN: 0883-2323 (Print) 1940-3356 (Online) Journal homepage: http://www.tandfonline.com/loi/vjeb20

Developing a Forensic Approach to Process Improvement: The Relationship Between Curriculum and Impact in Frontline Operator Education

Simon Croom & Alan Betts

To cite this article: Simon Croom & Alan Betts (2011) Developing a Forensic Approach to Process Improvement: The Relationship Between Curriculum and Impact in Frontline Operator Education, Journal of Education for Business, 86:6, 311-316, DOI: 10.1080/08832323.2010.520758

To link to this article: http://dx.doi.org/10.1080/08832323.2010.520758

Published online: 29 Aug 2011.


Copyright © Taylor & Francis Group, LLC. ISSN: 0883-2323 print / 1940-3356 online. DOI: 10.1080/08832323.2010.520758


Simon Croom

University of San Diego, San Diego, California, USA

Alan Betts

HT2 Ltd., Oxford, UK

The authors present a comparative study of 2 in-company educational programs aimed at developing frontline operator capabilities in forensic methods. They discuss the relationship between the application of various forensic tools and conceptual techniques, the process (i.e., curriculum) for developing employee knowledge and capability, and the impact on process improvement. The authors conclude that the use of e-learning via online learning management support and associated media has a positive impact on learner retention and application of concepts and techniques.

Keywords: curriculum design, e-learning, education, financial service operations, process improvement

In this article we examine the impact of online-mediated learning. Specifically, the study focuses on curriculum effectiveness in a postexperience learner environment and involves a study of company-sponsored development programs. Such programs have cohorts of learners with a wider variety of learner capability, prior knowledge, and learning experiences than most university courses. Consequently, a major challenge in curriculum design is to accommodate this variety in the caliber of learners while ensuring a minimum level of educational and learning outcomes.

A core consideration in adult learning is to embrace learner experiences within the curriculum design. Illeris (2003) contended that all learning implies an integration of external interaction processes (e.g., the social and workplace environmental conditions faced by the learner) and internal psychological processes (i.e., an individual’s behavioral and cognitive processes), and further stated that many learning theories deal with only one of these processes. Others (e.g., Stevenson, 2002) have noted that the separation of the external and internal is problematic. The relationship between the external and internal reflects the action research approach to learning (Rowley, 2003), which is best explained by Kolb’s (1984) learning cycle and reinforced in Nonaka and Takeuchi’s (1995) seminal work.

Correspondence should be addressed to Simon Croom, University of San Diego, Supply Chain Management Institute, 5998 Alcala Park, San Diego, CA 92110, USA. E-mail: [email protected]

Knowles (1984a, 1984b) developed a theory of andragogy specifically to address postexperience adult learning, emphasizing that adults are self-directed and expect to take responsibility for decisions. He made the following assumptions about the design of learning: adults a) need to know why they need to learn something, b) need to learn experientially, c) approach learning as problem solving, and d) learn best when the topic is of immediate value. In practical terms, andragogy means that instruction for adults needs to focus more on the process and less on the content being taught (Eskerod, 2010).

The literature thus emphasizes that the balance between theoretical learning and reflection matters for learning outcomes. This balance is critical in ensuring that the learner is able to continue to apply what was learned (Buckley & Caple, 1995) and to avoid postclass dismay (Rossett, 1997) arising from the fear of being unable to use the knowledge acquired.

With the advent of e-learning and learning management systems (LMS), new opportunities have been presented to educators. Crocetti (2002) discussed some of the benefits of integrating learning processes with corporate processes in order to deliver valuable individual learning outcomes and direct corporate benefits, drawing a strong relationship between effective learning management systems and sustainable organizational learning. There has, however, been relatively little research measuring the impact of computer-assisted learning systems (or e-learning) on learning outcomes (Ćukušić, Alfirević, Granić, & Garača, 2010; Lim, Lee, & Nam, 2007; McGorry, 2003; Meyer, 2003).

In this article we undertake an analysis of adult learning and offer some redress to this lack of empirical validation of e-learning and LMS.

Process Improvement

Our curricula focused on development programs concerned with process improvements for frontline operators (operatives) and their management. The development and application of skills in a range of analytical tools, techniques, and methods by operatives has been found to have a significant impact on process performance (Imai, 1986; Johnson, 2000; LoBue, 2002; Olve, Roy, & Wetter, 1999).¹

Learning curve effects have been well researched since Wright’s 1936 publication, although much of the subsequent literature in this area has focused on autonomous learning (i.e., improvement through repetition; Cabral & Riordan, 1994; Spence, 1981; Womer, 1979). Deming (1982) emphasized process knowledge, Fine (1986) modeled the impact of investments in quality learning, and, germane to this article, Grant and Gnyawali (1996) provided a brief discussion of the contribution of forensic methods to effective organizational learning in delivering process improvement. Bohn (1994) further expanded on this with an evolutionary stage model of process knowledge. The importance of learning to strategic and operational improvement has further been examined through discussion of the development of learning networks (incorporating a culture of training, application, and improvement; Figueiredo, 2002; Ramcharamdas, 1994; Upton & Kim, 1998). Forensic tools based on industrial engineering, operational research, and work-study methods have been widely applied to manufacturing operations improvement (Bal, 1998; Grünberg, 2003).
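Wright’s (1936) learning-curve effect is commonly written as Y = a·x^b, where Y is the cumulative average time (or cost) per unit over the first x units, a is the time for the first unit, and b = log2(r) for a learning rate r (the proportion retained at each doubling of cumulative output). The minimal sketch below illustrates that relationship only; the function name, the 80% rate, and the 100-unit starting time are assumed example values, not figures from this study.

```python
import math


def cumulative_average_time(first_unit_time: float, units: int, learning_rate: float) -> float:
    """Cumulative average time (or cost) per unit after `units` units, Wright-style.

    learning_rate is the proportion retained at each doubling of cumulative
    output, e.g. 0.8 for an "80% curve" (an assumed example value).
    """
    if units < 1:
        raise ValueError("units must be at least 1")
    if not 0.0 < learning_rate <= 1.0:
        raise ValueError("learning_rate must be in (0, 1]")
    b = math.log2(learning_rate)  # b < 0 whenever learning_rate < 1
    return first_unit_time * units ** b


if __name__ == "__main__":
    # Each doubling of cumulative output multiplies the average time by 0.8.
    for x in (1, 2, 4, 8):
        print(x, round(cumulative_average_time(100.0, x, 0.8), 2))
```

With r = 0.8, each doubling of cumulative output cuts the cumulative average time by 20%, which is the repetition-driven (autonomous) improvement described above.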

METHOD

The programs studied adopted a strong action learning approach, the benefits of which have been identified by Hale, Wade, and Collier (2003). We also addressed the impact of online-mediated learning. Alavi, Wheeler, and Valacich (1995) compared desktop video conferencing in a number of teaching contexts, Moore and Thompson (1997) studied distributed technology-mediated learning, Van Solingen, Berghout, Kusters, and Trienekens (2000) developed a conceptual model of the enabling factors for learning, and Alavi and Gallupe (2003) summarized their view of the literature on e-learning. Comparative studies of the impact of computer-assisted learning had not produced any conclusive evidence of significant differences between learning media.

Our objectives in this article were a) to identify the impact of a range of forensic tools and methods in terms of learner application, and b) to compare alternative curricula for developing forensic capability, as demonstrated through action learning projects, specifically examining the impact of e-learning.

To evaluate the impact of curriculum on outcomes, we needed adequate control of other variables that may affect learning outcomes, such as prior learning, learning technology, assessment, delivery mechanisms, and performance measurement (Baker & O’Neil, 1987; Entwistle, 1988; Gagné, 1971; Kelly, 1989; Neagley, Ross, & Evans, 1967). The target programs were thus selected because a) both had similar subject content; b) the participating organizations were of a similar size and scope of operation, were from the same industrial sector, and included participants of similar managerial level; and c) both groups had comparable levels of prior knowledge on entering the programs.

By constraining certain variables (subject content, industry and operational context, level and prior capability of learners) and by measuring the performance of participants, we were able to establish the validity of comparative case analysis as a method for analyzing the impact of curriculum on performance. Drawing on Firestone’s (1993) view of case-to-case transfer as one of three forms of generalization, and on Stake’s (1988) distinction between deductive and inductive generalization in support of case study methods in educational research, we also consider case studies a valuable method for conducting comparative analysis and for positing generalizable propositions from such analysis.

The unit of analysis for this study was a cohort (i.e., a single group of learners following the same course of study). Typically, a cohort would consist of 15–20 participants. In this study we had a total of 38 cohorts, 22 from Organization A and 16 from Organization B.

Data gathering involved a review of each cohort’s project activities and their written submissions at three key stages of the project: problem identification, data collection and analysis, and final interpretation and recommendations. All cohorts used an online discussion forum, which was a primary link between the tutors and the participants. All communications were included in the analysis. Written reports, Microsoft PowerPoint presentations, spreadsheets, and occasionally other digital media were submitted and assessed. Only Organization B had full online learning support, including multimedia and interactive online tutorials.

First, analysis of the data involved recording exactly which forensic methods were applied and assessing the accuracy and extent of their use through tutor evaluation. A list of the tools used by each cohort was tabulated in the first instance and then summarized by organization. Second, all project submissions were assessed for level of accuracy, appropriateness of application, and consistency of interpretation by two experienced teachers of operations management. One assessor had been involved in delivering the programs; the other had not. Both teachers marked every submission, using their agreed rating system. Last, the use of individual forensic methods was assessed to identify any difference in capabilities between cohorts from the two organizations.

TABLE 1

Content of Program Curriculum: Tools and Methods Included

| Organization A | Organization B |
|---|---|
| Layout diagrams | Layout diagrams |
| Process flow charts | Process flow charts |
| Workflow analysis | Workflow analysis |
| Volume-variety matrix | Volume-variety matrix |
| SPC control charts | SPC control charts |
| Experimental design | Experimental design |
| Capacity utilization | Capacity utilization |
| Throughput efficiency | Throughput efficiency, supply chain mapping, ServQual (adapted) gap model |
| Process capability | Process capability |
| Causal maps | Causal maps, service blueprinting |
| Polar/radar representation of strategic imperatives | Polar/radar representation of strategic imperatives |
| Importance-performance matrix | Importance-performance matrix |
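Both assessors marked every submission, and the authors report ensuring interrater agreement, but the text does not name the statistic used. As one common option, the sketch below computes unweighted Cohen’s kappa for two raters scoring the same items on an ordinal scale such as the 4-point scale used to rate each method; the choice of kappa, the function name, and the example ratings are all assumptions for illustration.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the identical rating.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal counts, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one and the same category for every item
    return (p_observed - p_expected) / (1.0 - p_expected)


if __name__ == "__main__":
    # Hypothetical 1-4 ratings of six project submissions by two assessors.
    assessor_1 = [4, 3, 3, 2, 4, 1]
    assessor_2 = [4, 3, 2, 2, 4, 1]
    print(round(cohens_kappa(assessor_1, assessor_2), 3))
```

Because the scale is ordinal, a weighted kappa (which penalizes a 1-versus-4 disagreement more than a 3-versus-4 one) may fit better; the unweighted form is shown here for brevity.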

Analysis: Forensic Tools

We found that participants’ prior knowledge of many basic methods in the curriculum was cursory or nonexistent. Componation and Farrington (2000) found that the more commonly used tools were those that were easy to understand and apply. Their article outlined a range of methods, including typical layout and flow methods, problem-solving techniques, and quality impact tools. These tools are similar to those included in the present study (see Table 1).

The difference in the range of tools included in the programs for each organization was a result of differences in the curriculum design employed.

Elements of Curriculum Design

The term curriculum is defined here as all the planned experiences provided by the institution to assist the learners in attaining the designated learning outcomes to the best of their abilities (Neagley et al., 1967). Thus, curriculum embodies the total experience, process, and application of learning.

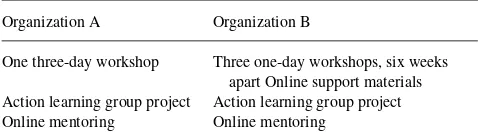

Curriculum design also incorporated e-learning options (Kellner et al., 2008). Organization A’s program included a three-day workshop, online mentoring, and a terminal group action learning project. Organization B followed a mixed mode of three one-day workshops (six weeks apart), interactive online-learning support materials, online mentoring, and a terminal group action learning project. Thus, the two programs differed in terms of the scheduling of the workshops and the provision of online learning support materials for Organization B (see Table 2).

TABLE 2

Learning Experiences Within the Two Curricula

| Organization A | Organization B |
|---|---|
| One three-day workshop | Three one-day workshops, six weeks apart |
|  | Online support materials |
| Action learning group project | Action learning group project |
| Online mentoring | Online mentoring |

Our method for evaluating which elements of the content were most effective was to apply Componation and Farrington’s (2000) approach of observing and monitoring which tools and methods participants applied in their action learning projects. Extensive reporting and feedback were central to the way these projects were conducted, thus providing us with data on what had been done (Moen & Norman, 2006). We evaluated impact in terms of scale of improvement (cost, time, utilization, speed, and quality). Online mentoring enabled a two-way dialogue through which participants were guided in the use of relevant methods. Each cohort submitted a final written report, and both organizations were given the same report brief and assessment criteria.

RESULTS

We used the terminal group project submissions as the basis for our data analysis. The remit for both groups of participants was similar: to undertake an analysis of a process and propose improvements as a direct result of their investigation. We were able to identify both the methods employed and the consequent improvements for each of the projects undertaken.

In Table 3 we summarize the frequency distribution of the forensic tools used.
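Table 3 reports, for each organization, the share of cohorts that applied each forensic method. A minimal sketch of that tabulation, assuming each cohort’s record is simply the set of tools it applied and that the denominator is the organization’s number of cohorts, might look like this; the function name and the sample records are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def usage_by_organization(
    cohorts: Iterable[Tuple[str, Set[str]]]
) -> Dict[str, Dict[str, float]]:
    """Percentage of each organization's cohorts that applied each tool.

    `cohorts` yields (organization, tools_used_by_that_cohort) pairs.
    """
    cohort_totals: Dict[str, int] = defaultdict(int)
    tool_counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for org, tools in cohorts:
        cohort_totals[org] += 1
        for tool in tools:  # a set, so each tool counts at most once per cohort
            tool_counts[org][tool] += 1
    return {
        org: {tool: 100.0 * n / total for tool, n in tool_counts[org].items()}
        for org, total in cohort_totals.items()
    }


if __name__ == "__main__":
    # Hypothetical records; the study's cohort-level data are not reproduced here.
    records = [
        ("A", {"Volume-variety matrix", "Process flow charts"}),
        ("A", {"Volume-variety matrix"}),
        ("B", {"Volume-variety matrix", "ServQual (adapted) gap model"}),
    ]
    for org, table in sorted(usage_by_organization(records).items()):
        print(org, table)
```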

DISCUSSION

Evaluation

For many participants the use of quantitative approaches was particularly challenging. In evaluating participants’ ability in forensic techniques, we assessed three stages of each group’s investigation project. We found a significant difference between the groups: the average score for Organization A was 7% (or one grade level) lower than that of Organization B. The standard deviation of grades for both groups was similar (A = 2.78, B = 2.66). Thus, our assessment was that Organization B participants demonstrated greater technical ability in the use of a range of forensic methods.

TABLE 3

Forensic Methods: Frequency of Use, by Cohort

| Method | Organization A | Organization B |
|---|---|---|
| Volume-variety matrix | 90% | 97% |
| Polar/radar representation of strategic imperatives | 75% | 87% |
| Importance-performance matrix | 53% | 82% |
| Process flow charts | 48% | 67% |
| Process capability | 18% | 17% |
| Workflow analysis | 8% | 8% |
| Capacity utilization | 4% | 13% |
| Throughput efficiency | 2% | 3% |
| Experimental design | see note | see note |
| ServQual (adapted) gap model |  | 38% |
| Supply chain mapping |  | 21% |
| Layout diagrams | 15% | 27% |
| Service blueprinting |  | 2% |

Note. Experimental design: 1% (organization not identified).

We found three significant differences between the two organizations when we evaluated the recommendations and outcome of each group’s project:

1. All of Organization B’s projects had clear evaluation of the financial impact of recommendations, and linked improvements to customer service. For Organization A, only 25% of the projects had clear financial data associated with improvements.

2. The link between existing performance metrics, process changes, and performance improvements on nonfinancial criteria was significantly different between the two organizations. Again, all of Organization B’s projects provided clear associations between improvements and performance, whereas only 72% of Organization A’s proposals contained such information.

3. The clarity of argument applied to the proposed improvements was significantly different between the two organizations, partly reflected by the grades awarded and further reinforced by the extent to which each project’s recommendations had been implemented. Differences in management receptivity to improvement projects between the two organizations (Hung, Yang, Lien, McLean, & Kuo, 2009) could account for this; however, all group projects were supported by a sponsoring manager, and the majority of projects were initiated by management. We therefore contended that the differences in progress could reflect the degree of clarity in the technical arguments. To test this proposition, we spoke to a small sample of sponsoring managers in both organizations in order to identify other factors preventing implementation. Although this was an opportunistic process and our findings here therefore lack statistical rigor, we concluded that both organizations faced similar levels of exogenous inhibitors (lack of funding, impending technological changes, and constraints through work practice and existing systems).

To quantify levels of competence in the execution of forensic methods, we assessed two key facets of evaluation. First, was the method used correctly? Second, did the application of the method provide benefit? We developed a 4-point Likert-type semantic scale ranging from 1 (inadequate use of the method) to 4 (advanced application of the method). The mean score for each method employed was then calculated.
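The scoring step above, in which each application of a method is rated from 1 to 4 and a mean is taken per method, can be sketched as follows. The function name and the example ratings are hypothetical; only the 1–4 anchors come from the text.

```python
from statistics import mean
from typing import Dict, List

SCALE_MIN, SCALE_MAX = 1, 4  # 1 = inadequate use of the method, 4 = advanced application


def mean_method_scores(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Mean 1-4 rating for each forensic method, validating every rating first."""
    means: Dict[str, float] = {}
    for method, scores in ratings.items():
        if not scores:
            raise ValueError(f"no ratings recorded for {method!r}")
        out_of_range = [s for s in scores if not SCALE_MIN <= s <= SCALE_MAX]
        if out_of_range:
            raise ValueError(
                f"ratings {out_of_range} for {method!r} fall outside {SCALE_MIN}-{SCALE_MAX}"
            )
        means[method] = mean(scores)
    return means


if __name__ == "__main__":
    # Hypothetical ratings for one organization; Table 4 reports such means to one decimal place.
    example = {"Volume-variety matrix": [4, 3, 4, 3, 4], "Layout diagrams": [3, 2, 3, 2]}
    for method, m in mean_method_scores(example).items():
        print(f"{method}: {m:.1f}")
```

Rejecting out-of-range ratings before averaging keeps a single mistyped score from silently shifting a method’s mean.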

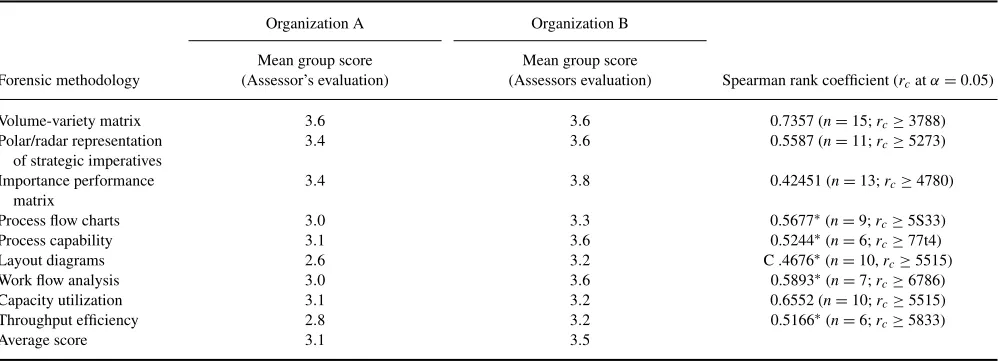

Table 4 provides a contingency table containing the mean scores achieved by participants for the forensic methodologies common to both case organizations, together with the Spearman rank coefficient we calculated for each, shown in column 4.

We can reject the null hypothesis that the performance of the two organizations’ participants is mutually independent when the computed test statistic rs is greater than or equal to the critical value of the Spearman test statistic for an alpha level of .05. The critical value is shown in parentheses for each forensic methodology according to sample size (n). We found that the null hypothesis could not be rejected for six of the forensic methods (importance-performance analysis, process flow charting, process capability analysis, layout diagrams, workflow analysis, and throughput efficiency analysis). In other words, there is some statistically significant difference between the performance of Organizations A and B in their demonstration and application of these methods.

TABLE 4

Spearman Rank Coefficient Analysis of the Two Case Organizations’ Performance

| Forensic methodology | Organization A: mean group score (assessors’ evaluation) | Organization B: mean group score (assessors’ evaluation) | Spearman rank coefficient (critical value rc at α = .05) |
|---|---|---|---|
| Volume-variety matrix | 3.6 | 3.6 | 0.7357 (n = 15; rc ≥ .3788) |
| Polar/radar representation of strategic imperatives | 3.4 | 3.6 | 0.5587 (n = 11; rc ≥ .5273) |
| Importance-performance matrix | 3.4 | 3.8 | 0.42451 (n = 13; rc ≥ .4780) |
| Process flow charts | 3.0 | 3.3 | 0.5677∗ (n = 9; rc ≥ .5833) |
| Process capability | 3.1 | 3.6 | 0.5244∗ (n = 6; rc ≥ .7714) |
| Layout diagrams | 2.6 | 3.2 | 0.4676∗ (n = 10; rc ≥ .5515) |
| Workflow analysis | 3.0 | 3.6 | 0.5893∗ (n = 7; rc ≥ .6786) |
| Capacity utilization | 3.1 | 3.2 | 0.6552 (n = 10; rc ≥ .5515) |
| Throughput efficiency | 2.8 | 3.2 | 0.5166∗ (n = 6; rc ≥ .5833) |
| Average score | 3.1 | 3.5 |  |
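The test described above compares a computed Spearman coefficient with a tabulated critical value. A minimal sketch, assuming paired observations for the two organizations (the article does not state exactly how the n pairs behind Table 4 were formed) and average ranks for ties, is shown below. The function names are hypothetical, the critical values must still come from a published table, and the two decision checks simply reuse figures from Table 4.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n in ascending order, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rank correlation is undefined for a constant sequence")
    return cov / (var_x * var_y) ** 0.5


def rejects_independence(rs: float, critical_value: float) -> bool:
    """Decision rule as stated in the text: reject independence when rs >= critical value."""
    return rs >= critical_value


if __name__ == "__main__":
    print(round(spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]), 4))  # 0.8
    print(rejects_independence(0.7357, 0.3788))  # True  (Volume-variety matrix, Table 4)
    print(rejects_independence(0.5677, 0.5833))  # False (Process flow charts, Table 4)
```

Computing rs as the Pearson correlation of the ranks gives the same value as 1 − 6Σd²/(n(n² − 1)) when there are no ties, and it remains correct when ties occur.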

Of the range of tools and methods we included in the programs, the six methods for which independence was identified were also considered more technically demanding than the others. Given Componation and Farrington’s (2000) finding that simpler techniques seem to be adopted more frequently, we believe there are strong indications supporting our conclusion that the significant differences between the two organizations’ participant performance in these six techniques may be attributable to differences in curriculum (e.g., Kyndt, Dochy, & Nijs, 2009).

Our research design did face some limitations. First, as we were undertaking analysis with corporate clients, the scheduling of the courses (the duration of the face-to-face program for Organization A and the interval between the one-day workshops for Organization B) was prescribed by the client. Second, we were unable to set up control groups in either organization to allow us to control for individual factors, such as the online elements of Organization B’s curriculum. A major concern is the potential difference between the two organizations in the time allowed for workers to assimilate the course content, tools, and techniques. Spacing Organization B’s workshops six weeks apart would have had an impact on learning, but because this was an integral element of the blended design, it was difficult to separate the impact of the time between workshops from that of the e-learning support provided between them. By using postcourse action learning group projects, we provided similar periods of assimilation, reflection, and application, although we accept that the duration of each organization’s program may have had some influence on the outcomes. Third, although we ensured interrater agreement for project assessment, it might have been valuable to use multiple-choice tests of participants to evaluate individual technical capability relating to the forensic techniques employed.

CONCLUSION

The literature has shown that many frontline operatives are resistant to the use of forensic methods (Bal, 1998; Componation & Farrington, 2000) and do not demonstrate an aptitude to utilize such tools unless given technical instruction (Corner, 2002). The collection of learning experiences for developing such technical competence is critical to the effectiveness of educational and training programs (Neagley et al., 1967), even though some authors have claimed that there is no one right way to develop process improvement competencies (Upton & Kim, 1998).

From our study of two development programs containing ostensibly similar technical content we found a significant difference in learning outcomes, which we ascribed to the curriculum differences between the programs. We controlled for other potentially influencing variables, including industry sector, prior knowledge of learners, and organizational context (size, structure, culture). We examined contrasts between Organization A, where cohorts of learners were exposed to a three-day offsite workshop followed by an action learning project, and Organization B, where learners were exposed to three one-day offsite workshops, six weeks apart, supported by online learning support and followed by an action learning project. The method of evaluating the learning outcomes in both cases was identical.

It is our conclusion that the level of technical competence demonstrated by participants was greater where the curriculum included online learning support and workshops scheduled over a longer timescale (see also Alonso, López, Manrique, & Viñes, 2005; Ruiz, Mintzer, & Leipzig, 2006). We also concluded that the effectiveness of the application of learned skills in action learning situations was greater under such circumstances.

The three significant elements of the curriculum that contributed to these benefits were the provision of intensive structured support in the form of online tutorials supporting core techniques, the incorporation of project-based coaching, and the involvement of an action-oriented approach to learning.

NOTE

1. In this article we use the term forensic to describe the particularly logical and robust approach that is required for the application of process improvement tools and methods.

REFERENCES

Alavi, M., & Gallupe, R. B. (2003). Using information technology in learning: Case studies in business and management education programs. Academy of Management Learning & Education, 2, 139–153.

Alavi, M., Wheeler, B., & Valacich, J. (1995). Using IT to reengineer business education: An exploratory investigation of collaborative telelearning. MIS Quarterly, 19, 293–311.

Alonso, F., López, G., Manrique, D., & Viñes, J. (2005). An instructional model for web-based e-learning education with a blended process approach. British Journal of Educational Technology, 36, 217–235.

Baker, E. L., & O’Neil, H. F. (1987). Assessing instructional outcomes. In R. M. Gagné (Ed.), Instructional technology: Foundations (pp. 343–377). Mahwah, NJ: Erlbaum.


Bal, J. (1998). Process analysis tools for process improvement. The TQM Magazine, 110, 342–354.

Bohn, R. E. (1994). Measuring and managing technical knowledge. Sloan Management Review, 61–73.

Buckley, R., & Caple, C. (1995). The theory and practice of training (3rd ed.). London, UK: Kogan Page.

Cabral, L. M. B., & Riordan, M. H. (1994). The learning curve, market dominance and predatory pricing. Econometrica, 62, 1115–1135.

Componation, P. J., & Farrington, P. A. (2000). Identification of effective problem-solving tools to support continuous process improvement teams. Engineering Management Journal, 12(1), 23–30.

Corner, P. D. (2002). An integrative model for teaching quantitative research design. Journal of Management Education, 26, 671–692.

Crocetti, C. (2002). Corporate learning: A knowledge management perspective. Internet and Higher Education, 4, 271–285.

Ćukušić, M., Alfirević, A., Granić, A., & Garača, Z. (2010). E-learning process management and the e-learning performance: Results of a European empirical study. Computers and Education, 55, 554–565.

Deming, W. E. (1982). Quality, productivity and competitive position. Cambridge, MA: MIT Center for Advanced Engineering.

Entwistle, N. (1988). Styles of learning and teaching: An integrated outline of educational psychology. London, UK: David Fulton.

Eskerod, P. (2010). Action learning for further developing project management competencies: A case study from an engineering consultancy company. International Journal of Project Management, 28, 352–360.

Figueiredo, P. N. (2002). Learning processes features and technological capability accumulation: Explaining inter-firm differences. Technovation, 22, 685–698.

Fine, C. H. (1986). Quality improvement and learning in productive systems. Management Science, 10, 1302–1315.

Firestone, W. A. (1993). Alternative arguments for generalising from data as applied to qualitative research. Educational Researcher, 22, 16–23.

Gagné, R. M. (1971). Learning research and its implications for independent learning. In R. A. Weisgerber (Ed.), Perspectives in individualized learning. Itasca, IL: Peacock.

Grant, J. H., & Gnyawali, D. R. (1996). Strategic process improvement through organizational learning. Strategy & Leadership, 24, 28–32.

Grünberg, T. (2003). A review of improvement methods in manufacturing operations. Work Study, 52(2), 89–93.

Hale, R., Wade, R., & Collier, D. (2003). Matching business education to the change agenda. Training Journal, Supp. 1(April), 4–7.

Hung, R. Y. Y., Yang, B., Lien, B. Y.-H., McLean, G., & Kuo, Y.-M. (2009). Dynamic capability: Impact of process alignment and organizational learning culture on performance. Journal of World Business, 45, 285–294.

Illeris, K. (2003). Towards a contemporary and comprehensive theory of learning. International Journal of Lifelong Learning, 22, 396–406.

Imai, M. (1986). Kaizen: The key to Japan’s competitive success. New York, NY: McGraw-Hill.

Johnson, M. D. (2000). Linking operational and environmental improvement through employee involvement. International Journal of Operations & Production Management, 20, 148–165.

Kellner, A., Teichert, V., Hagemann, M., Ćukušić, M., Granić, A., & Pagden, A. (2008). Deliverable D7.2: Report of validation results. UNITE report. Oxford, UK: Elsevier Science Ltd.

Kelly, A. V. (1989). The curriculum: Theory & practice (3rd ed.). London, UK: Paul Chapman.

Knowles, M. (1984a). The adult learner: A neglected species (3rd ed.). Houston, TX: Gulf.

Knowles, M. (1984b). Andragogy in action. San Francisco, CA: Jossey-Bass.

Kolb, D. (1984). Experiential learning. Englewood Cliffs, NJ: Prentice-Hall.

Kyndt, E., Dochy, F., & Nijs, H. (2009). Learning conditions for non-formal and informal workplace learning. Journal for Workplace Learning, 21, S369–S383.

Lim, H., Lee, S.-G., & Nam, K. (2007). Validating e-learning factors affecting training effectiveness. International Journal of Information Management, 27, 22–35.

LoBue, R. (2002). Team self-assessment: Problem solving for small groups. Journal of Workplace Learning, 14, 286–297.

McGorry, S. Y. (2003). Measuring quality in online programs. The Internet and Higher Education, 6, 159–177.

Meyer, K. (2003). The web’s impact on student learning. In J. Hirschbuhl & J. Kelley (Eds.), Computers in education (pp. 166–169). New York, NY: McGraw-Hill.

Moen, R., & Norman, C. (2006). Evolution of the PDSA cycle. Retrieved from http://deming.ces.clemson.edu/pub/den/deming_pdsa.htm

Moore, M., & Thompson, M. (1997). The effects of distance learning (ACSDE Research Monograph Series, Vol. 15). State College, PA: American Center for the Study of Distance Education.

Neagley, R., Ross, L., & Evans, N. (1967). Handbook for effective curriculum development. Englewood Cliffs, NJ: Prentice-Hall.

Nonaka, I., & Takeuchi, H. (1995). The knowledge-creating company: How Japanese companies create the dynamics of innovation. New York, NY: Oxford University Press.

Olve, N., Roy, J., & Wetter, M. (1999). Performance drivers: A practical guide to using the balanced scorecard. Chichester, UK: Wiley.

Ramcharamdas, E. (1994). Xerox creates a continuous learning environment for business transformation. Planning Review, 22, 34–38.

Rossett, A. (1997). That was a great class, but.... Training and Development, 51, 18–25.

Rowley, J. (2003). Action research: An approach to student work based learning. Education & Training, 45, 131–138.

Ruiz, J. G., Mintzer, M. J., & Leipzig, R. M. (2006). The impact of e-learning in medical education. Academic Medicine, 81, 207–212.

Spence, A. M. (1981). The learning curve and competition. Bell Journal of Economics, 13(1), 20–35.

Stake, R. E. (1988). Case study methods in educational research: Seeking sweet water. In R. M. Jaeger (Ed.), Complementary methods for research in education (pp. 253–300). Washington, DC: American Educational Research Association.

Stevenson, J. (2002). Concepts of workplace knowledge. International Journal of Education Research, 37, 1–15.

Upton, D. M., & Kim, B. (1998). Alternative methods of learning and process improvement in manufacturing. Journal of Operations Management, 16(1), 1–20.

Van Solingen, R., Berghout, E., Kusters, R., & Trienekens, J. (2000). From process improvement to people improvement: Enabling learning in software development. Information and Software Technology, 42, 965–971.

Womer, K. W. (1979). Learning curves, production rates and program costs. Management Science, 25, 312–319.

Wright, T. P. (1936). Factors affecting the costs of airplanes. Journal of the Aeronautical Society, 3, 122–128.