Bachelor of Information Systems Engineering (Honours) Information Systems Engineering Faculty of Information and Communication Technology (Kampar campus), UTAR.

Visually impaired people are at risk when crossing the road, particularly when no other pedestrians are nearby to help them. In addition, most traffic lights in Malaysia do not have a pedestrian signaling system installed, and those that are installed lack maintenance.

This project therefore aims to develop an application that helps visually impaired people cross the road safely. Because most people, including visually impaired people, now own a smartphone, the project is implemented entirely on the smartphone. Object detection is one of its features: it recognizes surrounding objects to help the user cross the road.

In conclusion, this project uses TensorFlow to train a custom object detection model and implements it in a mobile application that helps guide visually impaired users.

Introduction

- Problem Statement and Motivation

- Objectives

- Project Scope and Direction

- Contributions

- Report Organization

This project therefore designs and develops an application that assists visually impaired people in crossing the road safely. It provides a high-pitched sound to notify visually impaired users that they can cross the road safely. Otherwise, it is more difficult for visually impaired people to recognize the road situation and their surroundings.

This project aims to greatly reduce the danger to visually impaired people when crossing the road and to overcome the lack of electronic travel aids at pedestrian crossings. It also aims to reduce the time users spend interacting with the application while it is assisting them. To help visually impaired users understand the application's output, spoken audio is used to communicate with them.

Visually impaired users simply open the app on their smartphone, and the smartphone automatically recognizes the environment. By using this app, visually impaired users can cross the road safely.

Literature Review

Review of Existing Application and System

- SeeLight

- Previous Application – Identify pedestrian crossing

- Pedestrian Crossing Aid Device

- Supersense

In this situation, visually impaired people are exposed to danger when crossing unsignalized pedestrian crossings. Because some vehicles are quieter than traditional gasoline-engine vehicles [5], this is a limitation of the application and a further drawback for visually impaired users. In addition, the app can alert drivers when a visually impaired user is crossing, so that drivers can slow down earlier and the user can cross the road safely.

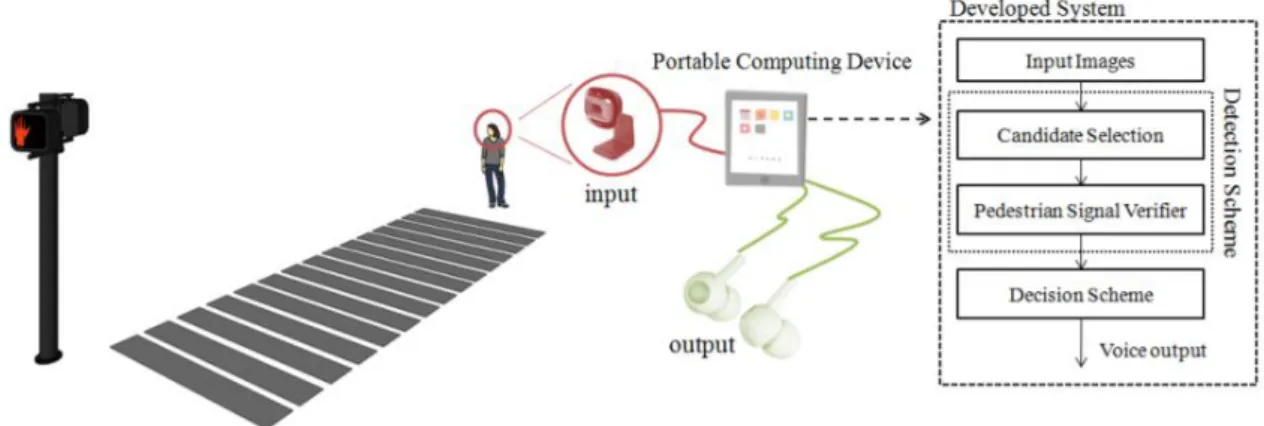

It directly addresses the problem of visually impaired people crossing the road by directing them to the pedestrian crossing. The app provides good assistance in areas that have a complete system of pedestrian crossings. This device can detect pedestrian signals and emit speech, allowing it to guide a visually impaired pedestrian who is standing in front of a crosswalk.

The vision of this application is to let visually impaired people use technology to experience a new generation of experience-based applications. Overall, the application is good at solving common problems that visually impaired people face in daily life.

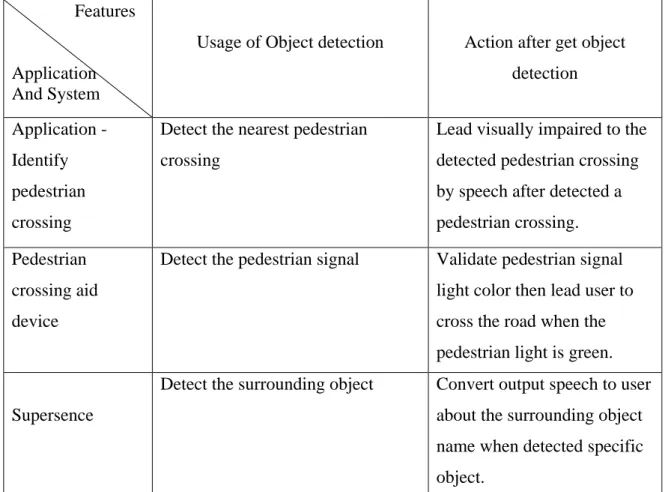

Comparison Analysis on usage of object detection

- Usage of Object Detection

- Analysis on Table 2.1

As Table 2.1 shows, the applications that use computer vision technology assist the visually impaired in various ways. The drawback of the app that guides users to the crosswalk is that it offers no further assistance once they have been brought there. Supersense provides a function to search for a specific object, such as traffic, but takes no further action after notifying the user that there is traffic in the area.

In contrast, this project focuses on taking action while visually impaired users are crossing the road.

System Design

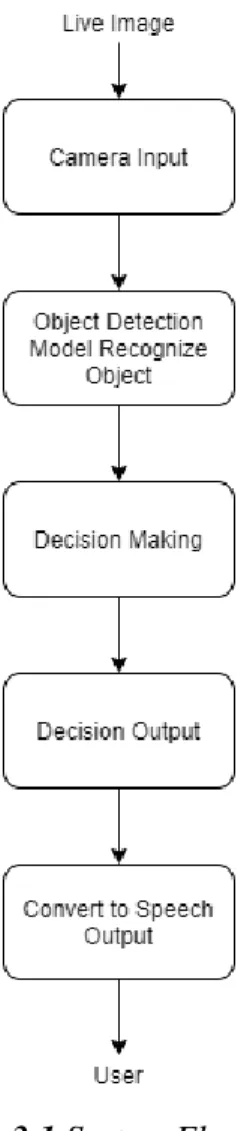

- Overall System Flow

- Training image detection method

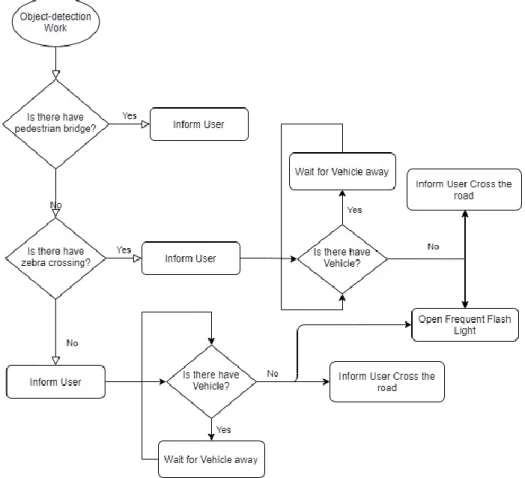

- Decision Schema (Flow Diagram)

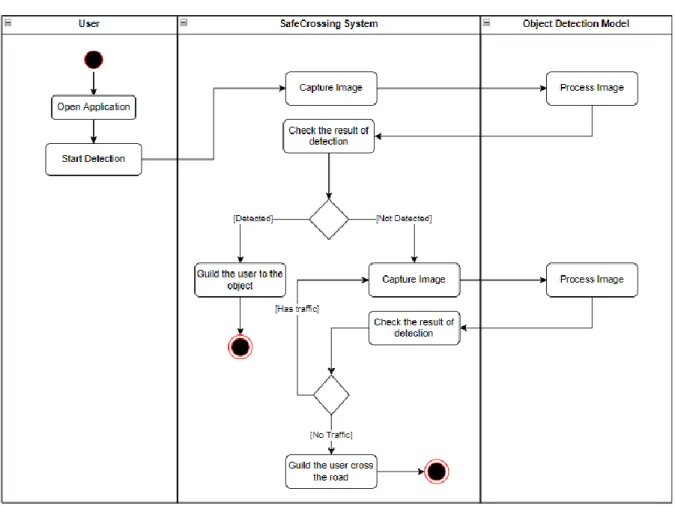

- System Activity Diagram

Object detection was chosen for this project because the project requires real-time detection and because object detection can find more than one object in an image. The trained object detection model starts working when the user is ready to cross. In the first step, the model detects the environment around the user.

If there is a pedestrian bridge, the app notifies the user to use the pedestrian bridge. If there is a crosswalk, the app notifies the user to cross at the crosswalk and guides the user toward it. When the user points the device in the direction of the pedestrian crossing, the application starts detecting whether there are vehicles in front of the user.

The app notifies the user to cross the road once the vehicle has left. Meanwhile, the app flashes the light repeatedly to alert oncoming traffic. Conditions are divided into two categories: those with pedestrian crossings and those without.

If a pedestrian bridge or crosswalk is detected, the app guides the user in the direction of that object. If neither is detected, the app continues capturing images and passing them to the model. If traffic is detected, the app keeps capturing images until no traffic is detected.
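The decision flow described above can be sketched as a small function. This is only an illustration under stated assumptions, not the project's actual code: the label strings (`pedestrian_bridge`, `crosswalk`, `car`, `motorcycle`), the returned action names, and the choice to prefer a bridge when both a bridge and a crosswalk are detected are all hypothetical.

```python
# Hypothetical label names; the trained model's real class names may differ.
# The tuple order encodes an assumed priority (bridge before crosswalk).
CROSSING_LABELS = ("pedestrian_bridge", "crosswalk")
VEHICLE_LABELS = {"car", "motorcycle"}


def next_action(detected_labels, facing_crossing):
    """Pick the next step from the labels detected in one camera frame."""
    labels = set(detected_labels)
    if not facing_crossing:
        # Stage 1: look for a place to cross and guide the user toward it.
        for label in CROSSING_LABELS:
            if label in labels:
                return "guide_to_" + label
        # Nothing found yet: capture another image and try again.
        return "keep_scanning"
    # Stage 2: the user faces the crossing, so wait while any vehicle is seen.
    if labels & VEHICLE_LABELS:
        return "wait_for_traffic"
    return "notify_cross"
```

Calling `next_action` once per captured frame reproduces the loop in the text: the app keeps scanning until a crossing is found, then keeps waiting until a frame contains no vehicle.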

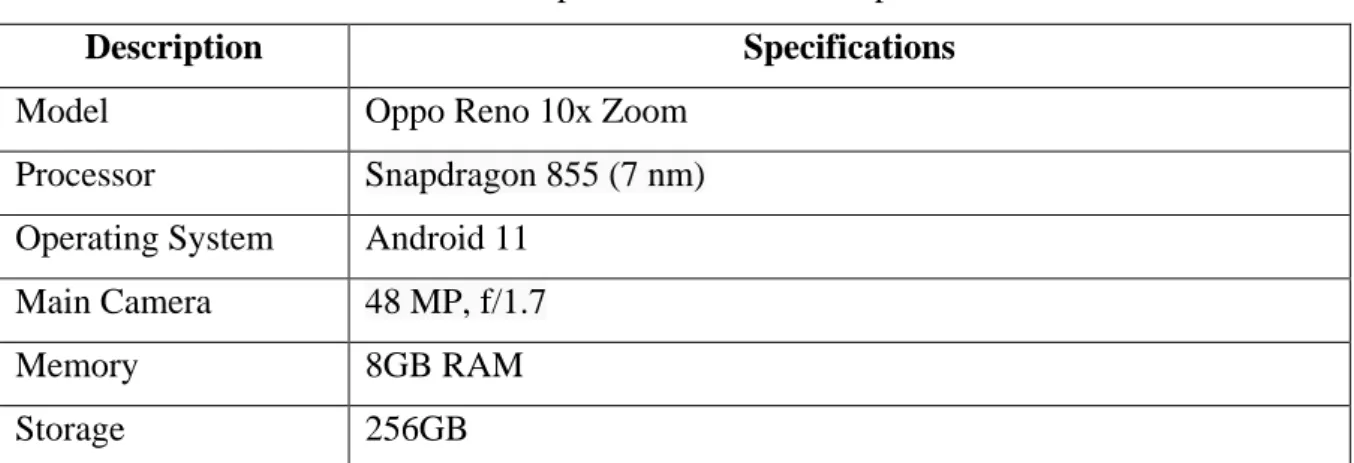

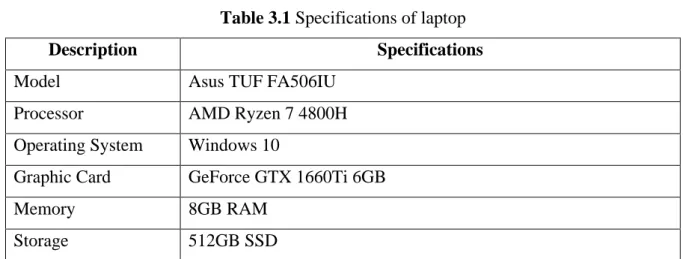

System Implementation

- Methodologies and General Work Procedures

- Tools to use Software

- User Requirements

- Object Detection Model

- Training and Test image Dataset

- Label Image Object on training dataset

- Run Training on Object Detection Model with Anaconda

- Convert to TensorFlow Lite Model

- Mobile Development

- Rebuild on TensorFlow Lite Application

- Modify on the Detect Image Function

- Application input and output

- Decision Making Function

- Guiding to Correct Direction

- Detect surrounding traffic

- Start Crossing Road

- Auto Increase Volume

- Application Design

- User Interface

- Application Logo Design

- Implementation Issues and Challenges

As a result, the customized object detection model detects objects more accurately. The remaining 20 percent of the collected images are used for testing after the model has been trained. Finally, the application interprets the output of the model, which is the class of object the model was trained to detect.
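A split like the one described, with 20 percent of the collected images held out for testing, can be sketched as follows. The function name, the fixed seed, and the file names are hypothetical; the report does not state how the images were actually divided.

```python
import random


def split_dataset(image_paths, test_fraction=0.2, seed=42):
    """Shuffle the images reproducibly and hold out a fraction for testing."""
    paths = sorted(image_paths)          # sort first so the result is repeatable
    random.Random(seed).shuffle(paths)   # seeded shuffle, independent of global state
    n_test = round(len(paths) * test_fraction)
    return paths[n_test:], paths[:n_test]  # (training set, test set)


# Example with 100 placeholder file names: 80 for training, 20 for testing.
train, test = split_dataset([f"img_{i:03d}.jpg" for i in range(100)])
```

Shuffling before splitting keeps images taken at the same location from all landing in one set, which matters when photographs are collected in batches.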

Once opened, the app can detect objects continuously, and the model returns the detection results for whatever is in front of the device's camera. In this project, however, detection runs only when the user wants to cross the road. The device vibrates when the user long-presses or double-clicks in the app to use this feature.

The user is allowed to move the device around so that the camera can capture the surroundings once detection begins. If detection is in progress and the user long-presses, the app plays the speech “Detection has been stopped.” together with a long vibration of the device. A timer was created to repeatedly detect the environment and retrieve the detection results.

If there is a pedestrian bridge or pedestrian crossing, the detected direction is passed to another function that guides the user toward the pedestrian bridge or pedestrian crossing. This function is called when the user is facing a pedestrian bridge, or when the user's surroundings have neither a pedestrian crossing nor a pedestrian bridge. If any car or motorcycle is detected, the app informs the user through speech.
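One way to turn a direction into a left or right instruction is sketched below. It assumes the user's current heading and the target direction are both available as compass bearings in degrees (0 to 360, increasing clockwise). The function names and the 10-degree tolerance are hypothetical and are not taken from the app.

```python
def signed_turn(current_deg, target_deg):
    """Smallest signed turn in degrees from the current to the target heading.

    Positive means clockwise (turn right), negative means turn left.
    The result lies in the range [-180, 180).
    """
    return (target_deg - current_deg + 180) % 360 - 180


def turn_instruction(current_deg, target_deg, tolerance_deg=10):
    """Return 'ahead', 'left', or 'right' for the guidance speech."""
    diff = signed_turn(current_deg, target_deg)
    if abs(diff) <= tolerance_deg:
        return "ahead"
    return "right" if diff > 0 else "left"
```

The modulo step handles the wrap-around at north: a user facing 350 degrees with a target at 10 degrees is told to turn right by 20 degrees rather than left by 340.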

This function turns on the flash while the camera is in preview (live view). When the user's device volume is low, it may be difficult to hear the device's media output, especially when the application is not in use. The app logo is designed to convey that the app is intended for outdoor use by visually impaired people.
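The auto-increase-volume behaviour mentioned above can be illustrated with a small helper that raises the volume only when it is below a minimum level. The 70 percent threshold and the function name are assumptions, since the report does not give the actual level. On Android, the current and maximum values would typically come from `AudioManager.getStreamVolume` and `AudioManager.getStreamMaxVolume`.

```python
def volume_to_set(current, max_volume, min_percent=70):
    """Return the volume index to apply so that speech stays audible.

    `min_percent` is an assumed threshold, not a value from the report.
    """
    # Smallest integer index that is at least min_percent of the maximum
    # (integer ceiling division avoids floating-point rounding).
    minimum = (max_volume * min_percent + 99) // 100
    # Never lower a volume the user has already set higher.
    return max(current, minimum)
```

With a 15-step volume scale, a device at level 3 would be raised to level 11, while a device already at level 13 would be left unchanged.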

To train a good model, the training images should be taken from the user's viewpoint, facing a crosswalk or a pedestrian bridge. However, some of the collected images are not from the point of view of a user carrying the smartphone.

System Testing and Evaluation

- Testing Case Without Pedestrian Crossing and Pedestrian Bridge

- Testing Case for Pedestrian Crossing Available

- Testing Case for Frequent Flashlight and Alarm Media

- Conclusion

- Project Review and Discussion

- Contributions

- Future Work

The demo tests the situation where there is a crosswalk but no traffic light, along with the input and output functions of the application. The case assumes that the user wants to cross the road and does not know whether there is a pedestrian crossing or pedestrian bridge nearby. In this test case, the user opens the application and double-clicks to start detection.

The app then detects the crosswalk and guides the user toward it. When the user is facing the crosswalk, the device vibrates. Next, the app detects the surrounding traffic and informs the user to cross the road when there is no traffic.

Additionally, the application's audio output is audible because the app automatically increases the system volume and the user's surroundings are quiet. This test also checks the app's frequent flash and alarm sound while the user crosses the road. The alarm sound serves the same purpose as the flashlight: it informs others that the user is crossing the road.

The objective of these functions is to reduce the risk to users while they are crossing the road. The goal of the project is for the smartphone to be the eyes of the visually impaired. Therefore, the main focus of the project is on detecting objects and on the functions of the application.

Additionally, the app is equipped with pre-trained speech media to guide the visually impaired user. This project tries to make full use of the device's functionality to increase user safety. In future work, the user's travel distance to the crosswalk could be calculated with a better algorithm, so that the app can be refined to provide a safer environment for visually impaired people when they are outside and crossing the road.

FINAL YEAR PROJECT WEEKLY REPORT

- WORK DONE

- WORK TO BE DONE

- PROBLEMS ENCOUNTERED

- SELF EVALUATION OF THE PROGRESS: The progress should be 40% complete

- PROBLEMS ENCOUNTERED: No problems encountered

- SELF EVALUATION OF THE PROGRESS: Mobile development is progressing well

- SELF EVALUATION OF THE PROGRESS: The progress of the application is done before week 8

- SELF EVALUATION OF THE PROGRESS: The application can be fully completed in week 11

- SELF EVALUATION OF THE PROGRESS

The compass is implemented, but calculating whether the target direction lies to the left or to the right of the current heading, in degrees, remains a problem. A road with a pedestrian crossing and little traffic is needed to record the demo video.

UNIVERSITI TUNKU ABDUL RAHMAN