One of the challenges in developing a recommendation system is that the system itself must record the user's profile accurately enough to determine their movie preferences.

Therefore, this study proposes an online movie recommender system that uses a convolutional neural network (CNN) model to recognize human emotions from facial images. Movies are recommended based on the user's captured emotion, which saves users the time they would otherwise spend manually going through all the movies to find the most suitable one.

Problem Statement and Motivation

Project Scope

Project Objectives

Innovation and Contribution

Background Information

LITERATURE REVIEW

- Previous Works

- Machine Learning in Emotion Detection

- Applications of Emotion Detection in Movie Recommendation System

- Comparison of Previous Studies

- Proposed Solution

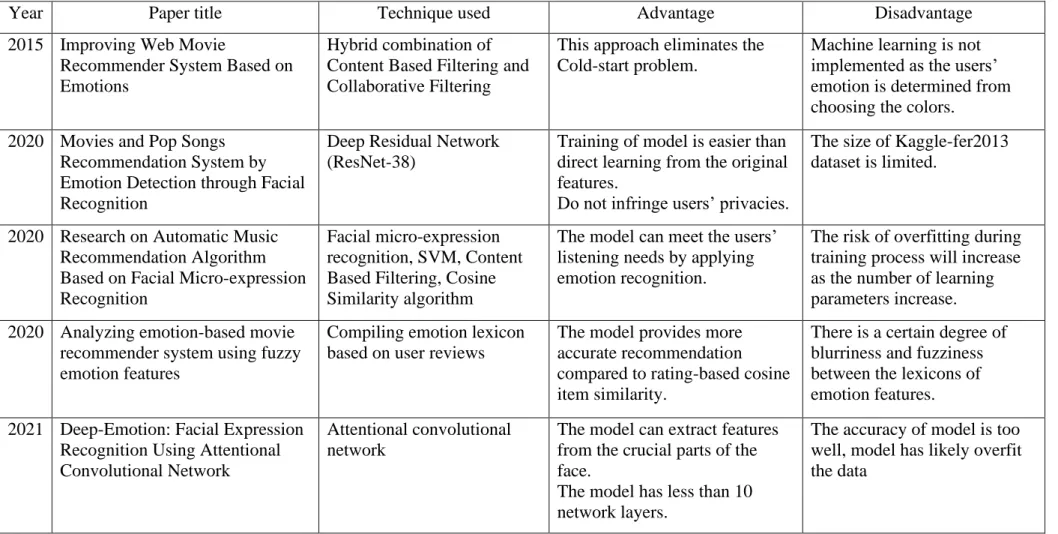

After all the review tokens have been read, the strength of each emotion reflects the review's emotion profile. In addition, there are four residual structures that prevent the error from getting worse as the model depth increases. The advantage of residual learning is that the model is easier to train than one that learns the mapping directly from the original features.
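The idea of residual learning mentioned above can be illustrated with a minimal sketch. It is not the reviewed model's code: the function names and the toy transforms are hypothetical, and plain Python lists stand in for feature maps. The block only shows the skip connection, where the layers learn a correction `F(x)` that is added back to the input `x`.

```python
def residual_block(x, transform):
    """Return transform(x) + x element-wise, i.e. a skip connection.

    `transform` is a hypothetical stand-in for the stacked layers F(x).
    """
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("transform must preserve the input length")
    return [f + xi for f, xi in zip(fx, x)]


def zero_transform(x):
    # If the layers learn nothing, the block simply passes x through.
    return [0.0 for _ in x]


def small_correction(x):
    # A toy F(x): a small adjustment on top of the identity path.
    return [0.5 * xi for xi in x]


identity_output = residual_block([1.0, 2.0], zero_transform)
corrected_output = residual_block([1.0, 2.0], small_correction)
```

Because the identity path is always available, a block whose layers output zeros still passes its input through unchanged, which is one common explanation for why deeper residual networks are easier to train.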

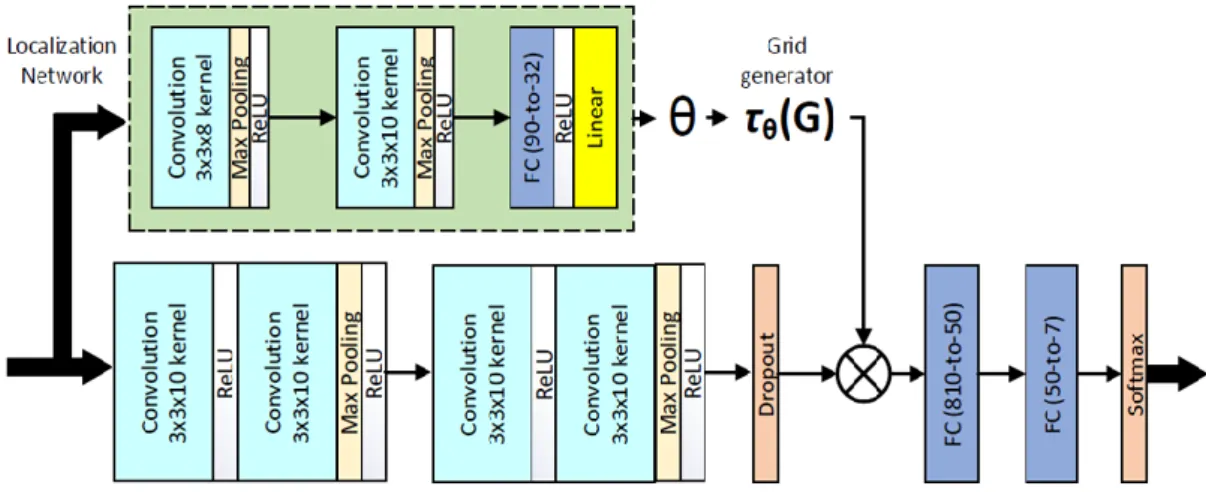

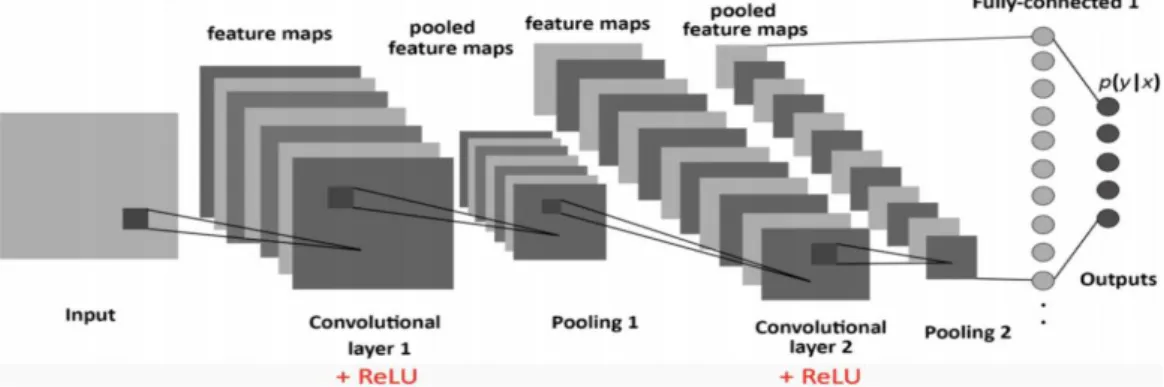

For feature extraction, the model has four convolutional layers, two of which are followed by a max-pooling layer and the ReLU activation function. The spatial transformer module is responsible for focusing on the parts of the face that have the most influence on the classifier's outcome. The advantage of using a convolutional attention network to emphasize the crucial parts of the face is that it can achieve promising results with a neural network of fewer than 10 layers.

However, the model's accuracy may be questioned, as the author does not test the trained model on a test set, and the unusually high accuracy suggests that the model may have overfitted. Pooling layers reduce the number of features and the dimensionality of the network without losing any of its characteristics [13].

![Figure 2.2 Structure of residual learning [8]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10216511.0/20.892.271.681.525.738/figure-structure-of-residual-learning.webp)

SYSTEM METHODOLOGY

Design Specifications

- Methodologies and General Work Procedures

- Requirements

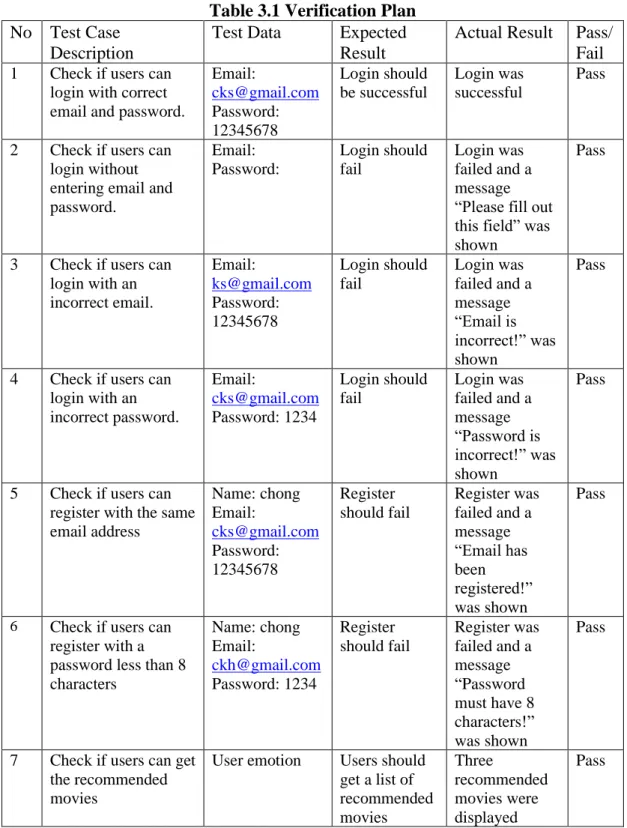

- Verification Plan

The system allows a new user to create/register a new account by completing the application form. The system allows a current/existing user to log into an account by entering a username and password. The system must validate the user's login by verifying correct user credentials.

The system must allow the user to turn on and off the peripheral unit (camera) of the personal device (laptop) to enable emotion detection. The system must be able to provide informative feedback by displaying success and error messages. The system must be able to capture the user's emotions from facial expressions in less than 10 seconds.

The system must be able to predict the user's emotions accurately so that appropriate movies are recommended. In addition, the web app is expected to recommend movies to users based on their emotions.

System Design

- Use Case Diagram

- CNN Architecture

- Data Dictionary Users Entity

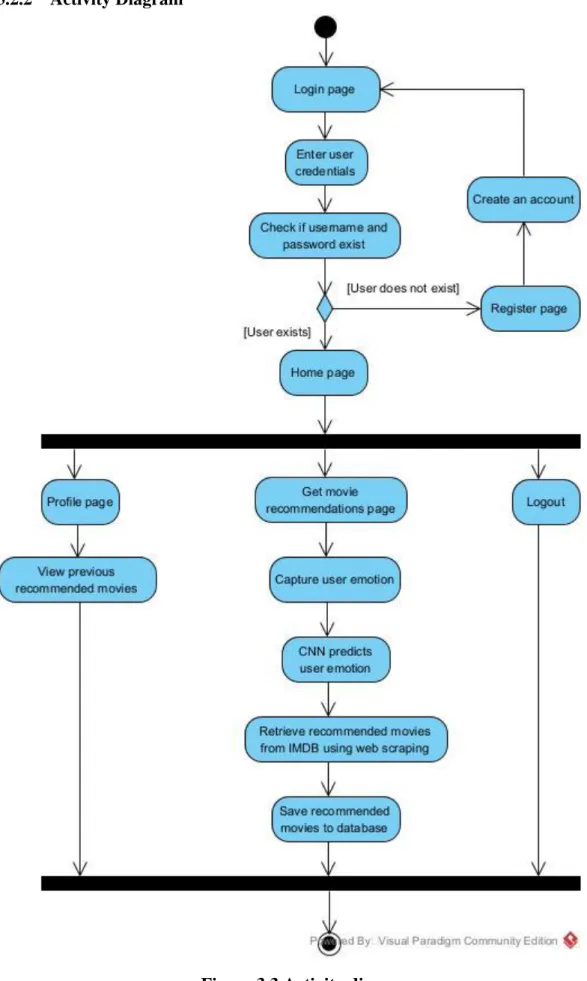

According to Figure 3.3, the system displays the login page when users access the web application. The system redirects them to the home page if both their username and password are in the database. On the other hand, if the user does not exist, they must register a new account.

After logging in, users can access their past movie recommendations by clicking on their profile. In addition, when users click on movie recommendations, the system captures the user's emotion and feeds it to the CNN model for prediction. After that, the system uses the predicted emotion to retrieve recommended movies from IMDB using web scraping.

The proposed emotion recognition model will consist of four convolutional layers with and 64 filters respectively. Then, the max-pooling layer is applied to reduce the dimensionality of the network by taking the highest value in each 2x2 window.

The login page has two fields, email and password, while the register page has three fields: name, email and password.
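The max-pooling step described for the CNN can be sketched in a few lines of plain Python. This is an illustration only, not the project's code: the function name is hypothetical, and the sketch assumes non-overlapping 2x2 windows (a stride of 2), dropping any leftover row or column.

```python
def max_pool_2x2(feature_map):
    """Keep the highest value in each non-overlapping 2x2 window."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - 1, 2):
        pooled_row = []
        for c in range(0, cols - 1, 2):
            window = (
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [7, 0, 3, 3],
    [1, 8, 2, 6],
]
pooled = max_pool_2x2(feature_map)  # 4x4 input becomes 2x2: [[4, 5], [8, 6]]
```

Each spatial dimension is halved, so the number of values passed to the next layer drops to a quarter while the strongest activation in every window is kept.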

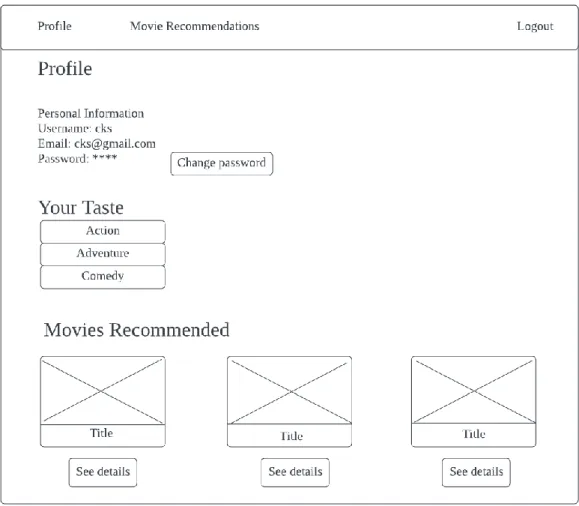

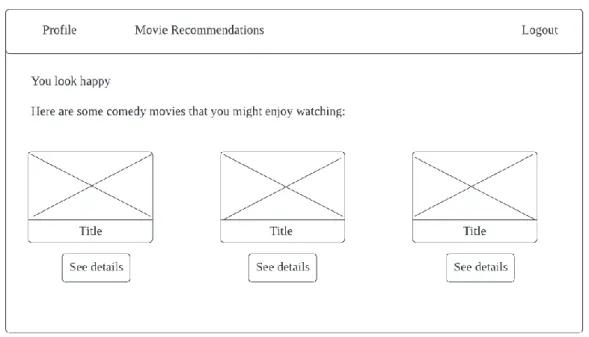

The user profile page has three sections: Personal Information, Your Taste and Recommended Movies. Based on the latest recommendations, the Your Taste section reflects the movie genre that users are most likely to watch. During emotion prediction, users must turn on their webcam so that the web application can capture the emotion on their face.

Additionally, the web app shows the movie genre that users are most likely to watch based on their emotions. Finally, three movie recommendations will be extracted from the IMDB website using web scraping and displayed on the screen where users can click on the view details button to learn more about the movies.
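The last step of the flow above, turning a predicted emotion into a genre and three displayed movies, might look like the sketch below. Everything in it is an assumption made for illustration: the emotion-to-genre mapping only borrows two pairings from the survey results reported later (happy with romance, angry with action), the in-memory `catalogue` with placeholder titles stands in for whatever the system retrieves from IMDB, and the function name is hypothetical.

```python
# Illustrative mapping only; the system's actual mapping is not given here.
EMOTION_TO_GENRE = {"happy": "romance", "angry": "action"}


def recommend(emotion, catalogue, limit=3):
    """Return (genre, titles) for a predicted emotion, at most `limit` titles."""
    genre = EMOTION_TO_GENRE.get(emotion)
    if genre is None:
        # Unknown emotion: no genre and no recommendations.
        return None, []
    titles = [movie["title"] for movie in catalogue if movie["genre"] == genre]
    return genre, titles[:limit]


# Placeholder catalogue; the titles are not real data.
catalogue = [
    {"title": "Movie A", "genre": "romance"},
    {"title": "Movie B", "genre": "action"},
    {"title": "Movie C", "genre": "romance"},
    {"title": "Movie D", "genre": "romance"},
    {"title": "Movie E", "genre": "romance"},
]
genre, titles = recommend("happy", catalogue)
```

Returning the genre alongside the titles lets the page show both the genre the user is likely to watch and the three recommended movies from a single call.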

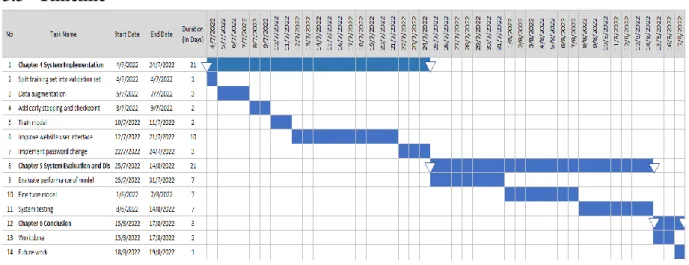

Timeline

SYSTEM IMPLEMENTATION

- Hardware Setup

- Software Setup

- Emotion Recognition Model

- Data Preprocessing on FER2013 Dataset

- Building the CNN

- Training the CNN

- Web Development

Python is used to develop the emotion recognition model because of its simplicity and access to many great libraries such as TensorFlow and Keras. In this project it is used to perform face detection by extracting the human face from an image. In this project it is used to implement user authentication and routing, connect the database, and integrate the emotion recognition model into the web app.

Werkzeug [22] is a library used to hash the user's password before storing it in the database to increase security. In addition, data augmentation is used to generate new images from the existing images by flipping and shifting them horizontally and vertically. The CNN model was developed based on the architecture described in the system design in Chapter 3.
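The password hashing mentioned above can be illustrated with a standard-library sketch. It is not Werkzeug's implementation and not the project's code: Werkzeug exposes `generate_password_hash` and `check_password_hash` in `werkzeug.security`, while the sketch below uses `hashlib.pbkdf2_hmac` to show the same general idea of salted, one-way hashing. The function names, storage format and iteration count are assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor, chosen only for illustration


def hash_password(password, salt=None):
    """Return 'salt$digest' (hex) so the plaintext is never stored."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS
    )
    return salt.hex() + "$" + digest.hex()


def verify_password(stored, candidate):
    """Re-hash the candidate with the stored salt and compare safely."""
    salt_hex, digest_hex = stored.split("$")
    actual = hashlib.pbkdf2_hmac(
        "sha256", candidate.encode("utf-8"), bytes.fromhex(salt_hex), ITERATIONS
    )
    return hmac.compare_digest(bytes.fromhex(digest_hex), actual)


stored = hash_password("correct horse")
```

At login, only the stored string and the submitted password are needed: `verify_password(stored, submitted)` returns `True` for the right password and `False` otherwise, so the database never holds the plaintext.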

Furthermore, categorical cross-entropy is used as the loss function for the output layer. The model is then trained on the training set and evaluated on the validation set for 100 epochs using an initial learning rate of 0.001. After training for 39 epochs, the model achieved an accuracy of 74.98%, which is an improvement over FYP 1, as the model needs only 39 epochs to reach about 70%.
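For reference, the categorical cross-entropy loss named above can be computed for a single sample as in the sketch below. This is a plain-Python illustration rather than the Keras implementation used in the project; the function name, the three-class example and the `eps` clamp are assumptions.

```python
import math


def categorical_cross_entropy(true_one_hot, predicted_probs, eps=1e-12):
    """Loss for one sample: -sum(t_i * log(p_i)) over all classes."""
    return -sum(
        t * math.log(max(p, eps))  # clamp to avoid log(0)
        for t, p in zip(true_one_hot, predicted_probs)
    )


# The true class is the second one; the model assigns it probability 0.7.
loss = categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1])
```

With a one-hot label, only the probability assigned to the true class contributes, so the loss here equals `-log(0.7)` and shrinks toward zero as that probability approaches 1.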

Next, the model is trained using various combinations of hyperparameters, including learning rates of 0.01 and 0.001, the Adam and SGD optimizers, and batch sizes of 64 and 128. The model is then evaluated on the validation set to determine the hyperparameters that give the most accurate predictions.

Bootstrap is used to create most of the components, such as the login and registration forms, cards and buttons.
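The hyperparameter search described above covers two learning rates, two optimizers and two sizes of 64 and 128, which gives eight combinations. The sketch below enumerates them and picks the best one; the function names are hypothetical, and `evaluate` stands for a training-and-validation run that returns validation accuracy, which is not reproduced here.

```python
from itertools import product

LEARNING_RATES = (0.01, 0.001)
OPTIMIZERS = ("adam", "sgd")
BATCH_SIZES = (64, 128)


def hyperparameter_grid():
    """List every combination of the three hyperparameters."""
    return [
        {"learning_rate": lr, "optimizer": opt, "batch_size": bs}
        for lr, opt, bs in product(LEARNING_RATES, OPTIMIZERS, BATCH_SIZES)
    ]


def select_best(grid, evaluate):
    """Return the configuration with the highest score from `evaluate`."""
    return max(grid, key=evaluate)


grid = hyperparameter_grid()  # 2 x 2 x 2 = 8 configurations
```

In practice `evaluate` would train the CNN with the given configuration and return its validation accuracy, so `select_best(grid, evaluate)` corresponds to choosing the setting that gives the most accurate predictions on the validation set.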

The web application is connected to the PostgreSQL database by setting the database URI and disabling tracking. With the database connection established, the database object created earlier is used to create two database tables: the user table and the movie table. Users can view their personal information, such as username and email, on the profile page.
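The database settings described above can be sketched as follows. The sketch assumes the project uses Flask-SQLAlchemy, which the text does not state: `SQLALCHEMY_DATABASE_URI` and `SQLALCHEMY_TRACK_MODIFICATIONS` are that extension's configuration keys, while the helper name and the credentials in the example are hypothetical. Only the standard library is used, so the block builds the settings without opening a connection.

```python
from urllib.parse import quote_plus


def build_database_config(user, password, host, port, name):
    """Build the two settings: the PostgreSQL URI and tracking disabled."""
    uri = (
        f"postgresql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{name}"
    )
    return {
        "SQLALCHEMY_DATABASE_URI": uri,
        # Disables modification tracking, which is not needed here.
        "SQLALCHEMY_TRACK_MODIFICATIONS": False,
    }


# Hypothetical credentials, for illustration only.
config = build_database_config("app", "p@ss", "localhost", 5432, "movies")
```

In a Flask application these values would typically be applied with `app.config.update(config)` before the database object is initialised; percent-encoding the password keeps characters such as `@` from breaking the URI.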

SYSTEM EVALUATION AND DISCUSSION

- Evaluating Model Performance

- Register

- Emotion Recognition

- Analysis

- Objectives Evaluation

- To study the existing methods and artificial intelligence techniques in building an emotion recognition model implemented into the web application

- To propose a movie recommendation system based on human emotion prediction

- To develop the proposed method in recommending relevant movies to user based on human emotion

- To evaluate the effectiveness of the proposed method in recommending relevant movies to the user

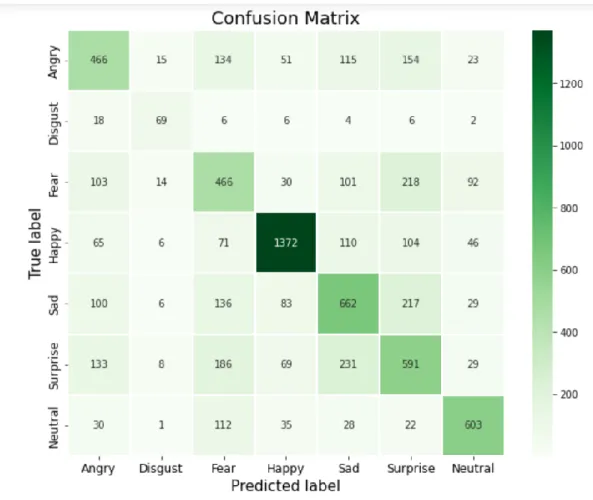

The overall result is quite good, as the emotion recognition model can recognize most of the six emotions other than disgust. Figure 5.4.1 shows that 43.3% of respondents watch movies for more than seven hours a week, while 10% spend only one to three hours watching movies. According to the responses, the respondents like to watch romantic movies when they feel happy.

As shown in Figure 5.4.6, 43.3% of respondents choose to watch family movies because these can help lighten their mood. Based on Figure 5.4.7, half of the respondents like to watch horror movies to experience a surprise. Figure 5.4.8 shows that when feeling angry, 43.3% of respondents choose to watch action movies because fast-paced scenes can help them reduce stress.

One of the challenges faced when implementing the CNN model is that the images in the FER2013 dataset are not evenly distributed across the emotion classes. In addition, as Figure 5.5.1 shows, some images in the FER2013 dataset could acceptably carry either of two labels. Another difficulty encountered during web development is the large number of movies, a total of 3,500 movies, 50 movies for each of the seven emotions, that must be manually entered into the database.

To develop the proposed method to recommend relevant movies to users based on human emotion. To evaluate the effectiveness of the proposed method in recommending relevant movies to the user. The model was tested on five people, myself and four others, to determine its performance in the real world.

Furthermore, the effectiveness of the model was calculated based on the result of emotion recognition.

CONCLUSION

APPENDIX

FINAL YEAR PROJECT WEEKLY REPORT

WORK DONE

WORK TO BE DONE

PROBLEMS ENCOUNTERED

SELF EVALUATION OF THE PROGRESS

POSTER

PLAGIARISM CHECK RESULT

Title of Final Year Project: EMOTION-BASED MOVIE RECOMMENDATION SYSTEM USING A CONVOLUTIONAL NEURAL NETWORK. The required parameters of originality and limits approved by UTAR are as follows: (i) the overall similarity index is 20% or below; (ii) matching of each individual cited source must be less than 3%; and (iii) matching of text in a continuous block must not exceed 8 words. Note: Parameters (i) – (ii) exclude citations, bibliography and text matches of fewer than 8 words.

Note: The supervisor/candidate(s) must provide the Faculty/Institute with an electronic copy of the complete originality report set. Based on the above results, I declare that I am satisfied with the originality of the final year project report submitted by my students as mentioned above. Form Title: Supervisor Comments on Originality Report Generated by Turnitin for Final Year Project Report Submission (for Undergraduate Programs).

UNIVERSITI TUNKU ABDUL RAHMAN

FACULTY OF INFORMATION & COMMUNICATION TECHNOLOGY (KAMPAR CAMPUS)

![Figure 2.1 Confusion matrix on the FER2013 dataset [6], where the accuracy of the model is approximately 62.10%](https://thumb-ap.123doks.com/thumbv2/azpdforg/10216511.0/18.892.314.676.752.1012/figure-shown-confusion-matrix-dataset-accuracy-result-approximately.webp)

![Figure 2.3 The important regions for detecting facial expressions [10]](https://thumb-ap.123doks.com/thumbv2/azpdforg/10216511.0/21.892.314.678.415.797/figure-important-regions-detecting-facial-expressions.webp)