FACIAL EMOTION RECOGNITION USING CONVOLUTIONAL NEURAL NETWORK.

BY

Shakiur Rahman Shakib ID: 172-15-10090

This Report Presented in Partial Fulfillment of the Requirements for the Degree of Bachelor of Science in Computer Science and Engineering

Supervised By Md Azizul Hakim

Senior Lecturer Department of CSE

Daffodil International University

Co-Supervised By Dewan Mamun Raza

Lecturer

Department of CSE Daffodil International University

DAFFODIL INTERNATIONAL UNIVERSITY

DHAKA, BANGLADESH

SEPTEMBER 2021

APPROVAL

The project titled “Facial Emotion Recognition Using Convolutional Neural Network”, submitted by Md Shakiur Rahman Shakib, ID: 172-15-10090, has been accepted in partial fulfilment of the requirements for the degree of B.Sc. in Computer Science and Engineering. The presentation was held on September 9, 2021.

BOARD OF EXAMINERS

Dr. Touhid Bhuiyan

Professor and Head Chairman Department of CSE

Faculty of Science & Information Technology Daffodil International University

Dr. Md. Ismail Jabiullah

Professor Internal Examiner Department of CSE

Faculty of Science & Information Technology Daffodil International University

Most. Hasna Hena

Assistant Professor Internal Examiner Department of CSE

Faculty of Science & Information Technology Daffodil International University

Dr. Dewan Md. Farid

Associate Professor External Examiner Department of Computer Science and Engineering

United International University

© Daffodil International University ii

DECLARATION

I hereby declare that I completed this project under the supervision of Md Azizul Hakim, Senior Lecturer, Department of CSE, Daffodil International University. I also declare that neither this project nor any part of it has been submitted elsewhere for the award of any degree or diploma.

Supervised by:

Md. Azizul Hakim Senior Lecturer Department of CSE

Daffodil International University

Submitted by:

Shakiur Rahman ID: 172-15-10090 Department of CSE

Daffodil International University

ACKNOWLEDGEMENT

First of all, I express my gratitude to the Most Merciful God for enabling me to successfully complete the project phase of the year.

I would like to thank my supervisor, Md Azizul Hakim, whose advice helped make this work a success. His support and guidance gave me the courage to complete the research accurately. He was the first to inspire me to do this work with CNN; he works extensively on CNN and provided all the resources and relevant information for this research. I also thank Dewan Mamun Raza, my co-supervisor, for supporting me in completing my work.

I am grateful to our department head, Professor Dr. Touhid Bhuiyan, for helping me to do research on emotion recognition. Moreover, I would like to thank the other faculty members and the staff of our department for their support. I sincerely thank the DIU Machine Learning Research Lab for providing excellent facilities.

I am also extremely grateful for the equipment that was made available for completing the research work.


ABSTRACT

Recognizing the emotion on a human face is easy for a person but difficult for an algorithm, which makes it a valuable and challenging task. Six emotion categories and a neutral class are recognized here: angry, disgusted, fearful, happy, sad, surprised, and neutral. All of the emotions are detected by AI. I work with the FER 2013 dataset. First the system detects a face, then it extracts the features, and finally it classifies the emotion. Facial expressions are observable manifestations of a person's affective state, cognitive activity, intention, personality, and psychopathology, and they play a communicative role in interpersonal relationships. Automatic facial expression recognition can be a useful feature in natural human-machine interfaces, as well as in behavioral science and therapeutic practice.

TABLE OF CONTENTS

CONTENTS

Board of examiners

Declaration

Acknowledgements

Abstract

List of Figures

List of Tables

List of Abbreviations

CHAPTER 1: INTRODUCTION

1.1 Introduction

1.2 Motivation

1.3 Rationale of the Study

1.4 Research Questions

1.5 Expected Output

1.6 Report Layout

CHAPTER 2: BACKGROUND STUDIES

2.1 Preliminaries

2.2 Related Works

2.3 Research Summary

2.4 Scope of the Problem

2.5 Challenges

CHAPTER 3: RESEARCH METHODOLOGY

3.1 Introduction

3.2 Research Subject and Instrumentation

3.3 Data Collection and Preprocessing

3.4 Statistical Analysis

3.5 Implementation Requirements

CHAPTER 4: EXPERIMENTAL RESULTS

4.1 Introduction

4.2 Experimental Results

4.3 Descriptive Analysis

4.4 Summary

CHAPTER 5: IMPACT ON SOCIETY

5.1 Impact on Society

5.2 Ethical Aspects

CHAPTER 6: CONCLUSION AND FUTURE WORK

6.1 Summary of the Study

6.2 Conclusions

6.3 Recommendations

6.4 Implications for Further Study

REFERENCES

LIST OF FIGURES

FIGURES

Figure 2.3.2: Process of real time face emotion detection.

Figure 2.3.3 to 2.3.7: Output accuracy of model.

Figure 4.2.1 to 4.2.2: Output total params.

Figure 4.2.3: Live image output.

Figure 4.2.4: Correct output of image's emotion.

Figure 4.2.5: Bar chart of recognition rate.

Figure 4.2.4: Pie chart of recognition.

LIST OF TABLES

TABLES

Table 3.1: Detailed statistics of the image collection.

Table 3.2: Separation of the entire dataset into training and testing sets.

ABBREVIATIONS

CNN: Convolutional Neural Network

FACS: Facial Action Coding System

FER: Facial Expression Recognition

GPU: Graphics Processing Unit

JAFFE: Japanese Female Facial Expressions

CHAPTER 1 Introduction

1.1 Introduction

About 6,500 languages are used all over the world. Every language is different, but facial expression is an important part of every one of them, and it is the most interesting language of all. Often we can look at people and easily understand what they feel from their faces.

When we see a crying or angry face, we easily understand that person's situation. A computer is a machine that works like a robot. Here, emotions are classified by computer programs.

Sometimes we use our facial expressions like a language.

Artificial intelligence makes our life easier. Through facial expressions we learn about people's moods, which makes it much easier to communicate with each other. There are many datasets for the facial expression problem. FER 2013 is one of them.

The FER 2013 dataset contains 35,887 images, all of them facial expressions: angry, disgusted, fearful, happy, sad, surprised, and neutral. I recognize those images using a CNN, with 60% of the images used for training, 20% for testing, and 20% for validation.

The CNN analyzes every image and gives us the result: it first recognizes the face in the image and then classifies it according to expression.
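The 60/20/20 split stated above can be turned into image counts with a few lines of Python. This is only a sketch of the arithmetic: the function name `split_counts` is hypothetical, and sending the rounding remainder to the validation set is an assumption, not something the project specifies.

```python
def split_counts(total, train_percent=60, test_percent=20):
    """Return (train, test, validation) image counts for `total` images.

    Integer arithmetic avoids floating-point rounding surprises; any
    remainder is assigned to the validation set (an assumption).
    """
    n_train = total * train_percent // 100
    n_test = total * test_percent // 100
    n_val = total - n_train - n_test
    return n_train, n_test, n_val


FER2013_IMAGES = 35887  # dataset size stated in the text
train, test, val = split_counts(FER2013_IMAGES)
print(f"train={train} test={test} validation={val}")
```

The three counts always add up to the dataset size, so no image is left out of the split.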


1.2 Motivation

Life is easier nowadays, and every technology makes it easier still. We use many kinds of applications that can detect emotion. It is interesting and exciting to see our facial expressions recognized live on camera.

People often cannot understand many languages, while machines handle them easily. On the other hand, people readily understand a person's emotion and expression, but machines cannot.

Here I try to make machines understand facial expressions easily. With a CNN it is possible to detect human emotion; the Convolutional Neural Network is one of the best ways to do so. When a person smiles, the corners of the lips move up, and when a person is sad, the corners of the lips turn down.

Day by day, AI-based work makes our life easier. Many people work on facial expressions, and I want to build a better system, one that can detect emotion easily and give correct results in a short time. There are many datasets, such as the JAFFE dataset and the FER 2013 dataset. I use the FER 2013 dataset here. The system first detects the face, then extracts the features, and finally classifies the expression.

1.3 Rationale of the Study

Every day new technology is invented that makes people's lives more enjoyable and comfortable.

What we could not imagine twenty years ago is now happening. In every society, facial expressions display a person's actual emotions. They are a kind of social language and are very important for social communication. A facial expression reflects psychological activity. Sometimes people explain a lot through facial expressions, so I think they are a very important part of our life. We use them every day.

In fact, we cannot imagine our daily life without facial expressions; without them a human would be like a robot or machine. Many methods have been developed and used for working with facial expressions, such as OpenCV with Haar cascades, OpenCV's deep learning-based detector, and Dlib's CNN.

CNN is a multi-layer network, and I use it here. The FER 2013 dataset is among the most popular for real-time facial expression recognition. Each of its images is 48*48 pixels.

1.4 Research Questions

● What is facial emotion recognition?

● What is CNN?

● How does CNN work?

● What are the benefits of artificial facial recognition?

● What is FER 2013?

● What is the impact of the research?

● What is the future of facial recognition?

● Is CNN the best way to do this project?

1.5 Expected Output

This is an algorithm-based face recognition project built on a Convolutional Neural Network.

I select some images of human faces. Every image is different, no two match each other, and every picture is taken from a specific angle. In this project I prepared a model that can classify 6 emotions of a human face. I input an image to the model, which then uses a CNN to identify the facial expression. In that way the CNN gives us the emotion of the face in the input image. The output then shows whether the picture expresses happiness, sadness, anger, fear, or neutrality.

I used the FER 2013 dataset, in which the faces have been automatically registered.

Every face image is 48*48 pixels. The dataset is split into 60% training, 20% testing, and 20% validation.

The main workflow of the model is: Face Detection => Feature Extraction => Emotion Classification.
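The three-stage workflow above can be sketched as a small Python function that chains the stages. Every stage below is a placeholder chosen only so the example runs: `crop_center`, `mean_intensity`, and `brightness_rule` are hypothetical stand-ins, not the detector, feature extractor, or CNN used in this project.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]  # grayscale image: rows of 0-255 pixel values


def recognize_emotion(
    image: Image,
    detect_face: Callable[[Image], Image],
    extract_features: Callable[[Image], Sequence[float]],
    classify: Callable[[Sequence[float]], str],
) -> str:
    """Run Face Detection => Feature Extraction => Emotion Classification."""
    face = detect_face(image)
    features = extract_features(face)
    return classify(features)


# Placeholder stages (assumptions, for illustration only).
def crop_center(image: Image) -> Image:
    """Stand-in face detector: keep the central half of the image."""
    h, w = len(image), len(image[0])
    return [row[w // 4 : w - w // 4] for row in image[h // 4 : h - h // 4]]


def mean_intensity(face: Image) -> List[float]:
    """Stand-in feature extractor: a single mean-brightness feature."""
    total = sum(sum(row) for row in face)
    return [total / (len(face) * len(face[0]))]


def brightness_rule(features: Sequence[float]) -> str:
    """Stand-in classifier: an arbitrary threshold, not a trained CNN."""
    return "happy" if features[0] > 127 else "sad"


image = [[200] * 8 for _ in range(8)]
print(recognize_emotion(image, crop_center, mean_intensity, brightness_rule))
```

Passing the stages as arguments keeps the pipeline itself unchanged when a stand-in is later replaced by a real face detector or a trained network.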


1.6 Report Layout

This facial emotion detection report contains six chapters, each covering a different part of the work, as follows.

Chapter 1

1.1 Introduction, 1.2 Motivation, 1.3 Rationale of the study, 1.4 Research questions, 1.5 Expected output, and 1.6 Report layout.

Chapter 2

This chapter covers the background studies: 2.1 Preliminaries, 2.2 Related works, 2.3 Research summary, 2.4 Scope of the problem, and 2.5 Challenges.

Chapter 3

This chapter discusses the methodology: 3.1 Introduction, 3.2 Research subject and instrumentation, 3.3 Data collection and preprocessing, 3.4 Statistical analysis, and 3.5 Implementation requirements.

Chapter 4

This chapter presents the research results and their discussion. The introduction, experimental results, descriptive analysis, and summary are its major points.

Chapter 5

This chapter explains the impact on society and the ethical aspects.

Chapter 6

The summary of the study, conclusions, recommendations, and implications for further study are the main points of chapter 6.

CHAPTER 2 Background

2.1 Preliminaries

In our society, we often look at a human face and describe the person's behavior very easily. I focus here on human emotion recognition, that is, the ability to recognize and understand human emotion [1]. In my project, I want to recognize emotion from an image of a person, so I picked images showing many emotions. When we see a picture, we easily understand what kind of emotion is on the face. I focus on a few emotions and on how to detect them in a dataset of faces. When five people's faces were used, they gave different expressions, which suggests that human personality differs in five ways [2].

2.2 Related Works

When I chose this topic for my final project, I found some papers that work with voice and text in addition to facial expressions [3]. They use emotion categories such as happiness, sadness, disgust, anger, fear, and surprise, and obtain their best output by combining image, voice, and text data. For identifying emotion, the Gabor filter works well. In one system every image is filtered with two Gabor filters before the output is produced, using the JAFFE dataset [4].

2.3 Research summary

Experiment 1:

My total process works in two different ways. In the first, real-time images are taken by webcam and given as input. In the second, the input is taken from the FER dataset.

Figure 2.3.2 Process of real time face emotion detection.

For the experiment I took some images from many different websites. I took around 200 images for experimental data.

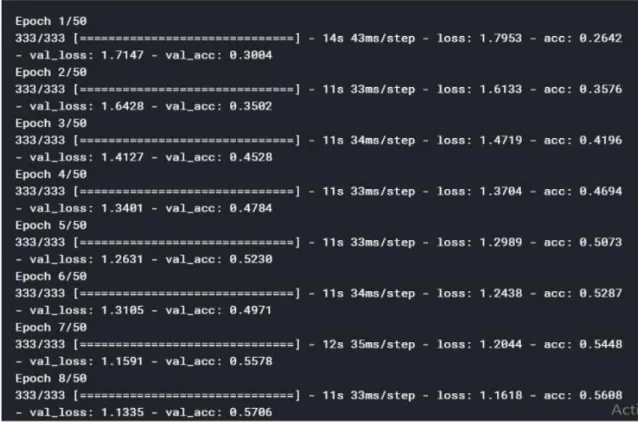

Figure 2.3.3 Output accuracy

Figure 2.3.4 Output accuracy

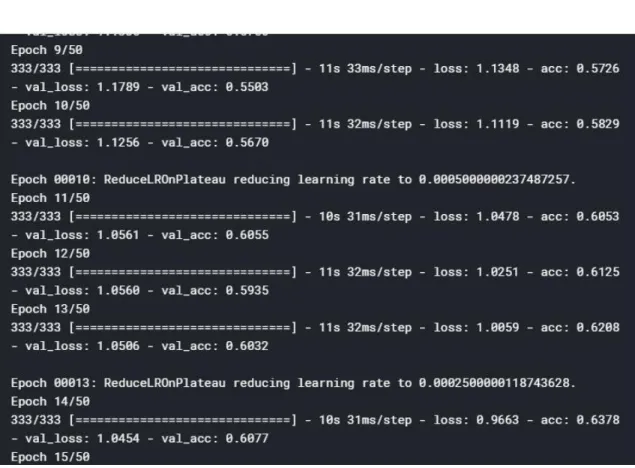

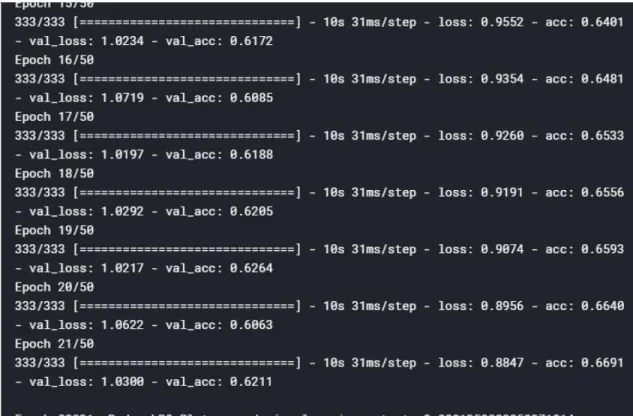

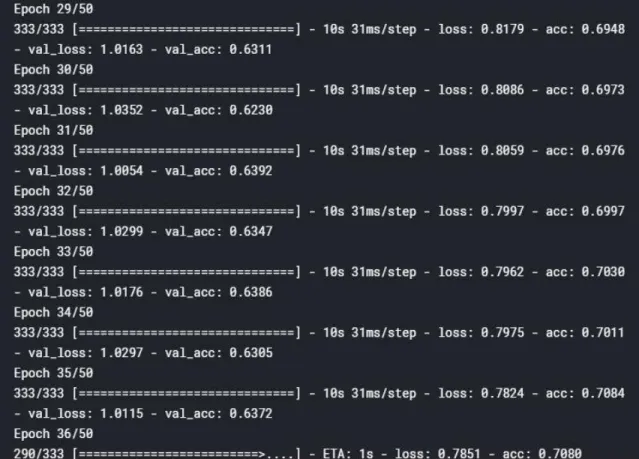

Figure 2.3.5 Output accuracy

Figure 2.3.6 Output accuracy.

Figure 2.3.7 Output accuracy

2.4 Scope of the problem

In this project I try facial emotion recognition on both live images and dataset images. Many people work on this problem every day and keep making improvements. The existing projects are quite difficult and work in different ways. I picked some live images for testing. Sometimes the system gives false results, which is a drawback of my project. I tried to solve this, but the fix did not work 100%. I used the FER 2013 dataset for testing. In the future I will try to solve the problem at the algorithm level. Human emotion recognition through face detection has been carried out successfully in several countries. However, little such work has yet been done in our own country [5].


2.5 Challenges

I verify the models by creating a vision system that uses the suggested CNN architecture to perform tasks such as face detection, gender classification, and emotion classification, as well as detecting a small number of objects, in one blended step. After presenting the basics of the training setup, I proceed to evaluate on standard benchmark sets. The faces have been automatically registered so that each face is more or less centered and occupies about the same amount of space in each image [6]. The input image size is 48*48 pixels.

● I faced some problems when working with custom datasets.

● Taking live images was difficult.

● Upkeep of the data was also challenging.
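To make the 48*48 input concrete, the sketch below parses one FER 2013 sample into a 48*48 grid scaled to the range 0 to 1. It assumes the commonly distributed CSV form of the dataset, in which each row stores the image as a space-separated string of 2304 grayscale values; the helper name `parse_pixels` is hypothetical, and the label order follows the list of expressions given in Chapter 1.

```python
# Class names in the order the expressions are listed in this report
# (assumed to match the integer labels 0-6 of the CSV file).
FER2013_LABELS = ["angry", "disgusted", "fearful", "happy",
                  "sad", "surprised", "neutral"]
SIZE = 48  # FER 2013 images are 48*48 pixels


def parse_pixels(pixel_string, size=SIZE):
    """Convert a space-separated pixel string into a size*size grid in [0, 1]."""
    values = [int(v) for v in pixel_string.split()]
    if len(values) != size * size:
        raise ValueError(f"expected {size * size} pixels, got {len(values)}")
    return [
        [values[r * size + c] / 255.0 for c in range(size)]
        for r in range(size)
    ]


# Synthetic mid-grey sample standing in for a real row of the dataset.
sample = " ".join(["128"] * (SIZE * SIZE))
image = parse_pixels(sample)
print(len(image), len(image[0]), FER2013_LABELS[3])
```

Scaling to the range 0 to 1 is a common preprocessing choice for CNN input; whether this project applied it is not stated in the report.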

CHAPTER 3 Methodology

3.1 Introduction

Facial recognition software can recognize facial expressions and emotional responses in the user's face. Face and emotion feature recognition is currently a very active area of research in computer vision, as several kinds of face detection applications are now in use, such as image database management systems and real-time face detection [7]. With facial emotion recognition, computers can respond better and users get better feedback. Research on emotion has increased hugely in the last 30 years. I work with 2D data.

In this chapter, I explain the internal method of my project: what kind of data I train and test on, and how much data I collected, each with a proper explanation. The facial expression recognition system uses Convolutional Neural Networks and is based on a Torch model [8]. The goal of an emotion detection system is to apply emotion-related knowledge in a way that improves human-computer interaction and the user's experience. In medical applications, such a learned method can be trained and applied to more serious problems, such as aggression detection and frustration detection. Expressions are detected by calculating the distances between numerous feature points. At this stage, the distances in the test photograph are compared with those in the neutral photograph.

3.2 Research Subject and Instrumentation

I work on my project with CNN. Human facial emotion detection is my main subject. My instruments were:

● Laptop.

● Journals and papers for reference.

● Web camera.

● Dataset images for testing.

● Internet.

● Accurate face images of people.

● Live images.

3.3 Data Collection

Without collecting data, this project would be impossible to finish; data is its main ingredient. All the image data is joined with metadata for the live images. Around 28 thousand images were collected from datasets and by camera [9]. For the best results, I need more data. The data covers 26 subjects, with 7 to 19 examples for each of 160 separate actions.

The 28 thousand color photos show both male and female faces. The images are of people aged fifteen to sixty and cover about two thousand separate cases.

Table 3.1: Image excerpt

| Category of image | Total images used |
| --- | --- |
| Happy | 52 |
| Sad | 74 |
| Angry | 124 |
| Fear | 58 |
| Disgust | 83 |
| Normal | 40 |

The dataset is built without 3D images [6]. It is practically impossible to use millions of images to reach a 100% correct result. I tested on the data I have and got a result that is pretty good.

In the future, if anyone is interested in working on facial emotion detection, my research project will help them get more accurate results.

In the table, all images are JPG files except those in the normal category. Image sizes differ in every category except normal, for which no size is recorded. I saved around 600 images in those categories.

Table 3.2: Training and testing.

| Emotion of face | No. of images | Train data | Test data |
| --- | --- | --- | --- |
| Happy | 170 | 75% | 25% |
| Sad | 100 | 75% | 25% |
| Angry | 130 | 75% | 25% |
| Fear | 100 | 75% | 25% |
| Disgust | 100 | 75% | 25% |
| Normal | 40 | 75% | 25% |
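As a rough illustration of what the 75%/25% split in Table 3.2 means in image counts, the following sketch computes the number of training and test images per class. The image totals are taken from the table; rounding the training count down is an assumption, and `train_test_counts` is a hypothetical helper name.

```python
# Images per class, as listed in Table 3.2.
IMAGES_PER_CLASS = {
    "happy": 170, "sad": 100, "angry": 130,
    "fear": 100, "disgust": 100, "normal": 40,
}


def train_test_counts(n_images, train_percent=75):
    """Return (train, test) counts; the training count is rounded down."""
    n_train = n_images * train_percent // 100
    return n_train, n_images - n_train


for emotion, n in IMAGES_PER_CLASS.items():
    n_train, n_test = train_test_counts(n)
    print(f"{emotion:8s} total={n:3d} train={n_train:3d} test={n_test:3d}")

print("all classes:", sum(IMAGES_PER_CLASS.values()))
```

Splitting each class separately keeps the class proportions the same in the training and test sets.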

3.4 Statistical Analysis

I have several original photos at a resolution of 750*570 pixels. In these photos the camera, image model, and rotation are all the same; only the facial expression differs [4]. I found another batch of photos on Google, with an average image size of around 340*450 pixels. Each image depicts different behavior, and each face shows a different emotion. The 3000 color photos depict 150 women and men. The photographs [8] exhibit a variety of facial expressions. Around 350 people are represented in the FER dataset, in 11 different poses. Every expression of human emotion is unique.


3.5 Implementation Requirements

It is hard to show all of this in a short time. The emotional state and the specific code must both be set out in order to produce reliable results [8]. I used the following algorithms to detect emotion.

Algorithm:

● Local binary pattern histogram (LBPH), as implemented in OpenCV, for real-time detection.

● Eigenfaces.

● Fisherfaces.
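To illustrate the idea behind the first algorithm in the list, here is a minimal pure-Python sketch of the basic local binary pattern and its histogram. It is not the OpenCV implementation used in the project: OpenCV's LBPH recognizer additionally splits the face into a grid of cells and concatenates the per-cell histograms, a step omitted here. The function names are hypothetical.

```python
# Offsets of the 8 neighbours, clockwise starting from the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, r, c):
    """8-bit LBP code: bit i is set when neighbour i >= the centre pixel."""
    center = image[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist


# Tiny 4x4 grayscale patch used only to exercise the functions.
patch = [
    [10, 10, 10, 10],
    [10, 50, 50, 10],
    [10, 50, 50, 10],
    [10, 10, 10, 10],
]
hist = lbp_histogram(patch)
print(sum(hist), [code for code, n in enumerate(hist) if n])
```

Two faces can then be compared by measuring how different their histograms are, which is how a histogram-based recognizer decides on the closest match.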

CHAPTER 4 Experimental Result

4.1 Introduction

Detecting emotion in images is not an easy process. I tried several different algorithms and trained them to recognize emotion from images [10]. I made a dataset of around 600 images for the test. For better results it is important to use a suitable algorithm. We used several methods to obtain a user-friendly output, since it is beneficial for the user to get an accurate emotion. We generated a customized dataset using kaggle.com. For image labelling, we tried to give each photo a suitable label based on a close interpretation of the picture.

4.2 Results

The above-mentioned CNN architecture for facial expression recognition was developed in Python.

Experiment 1

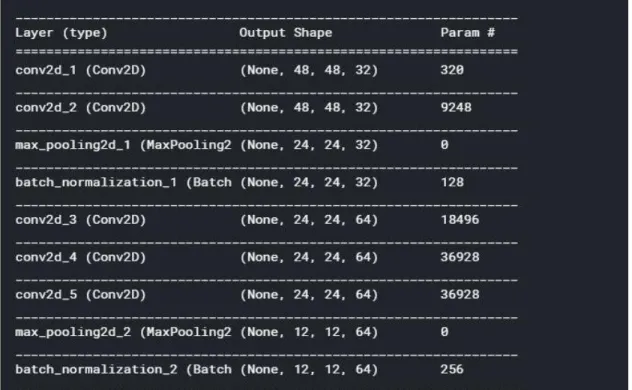

The classical model has 555063 training parameters. The improved model has 554615, which is 448 fewer (about 0.08%); this slightly shortens model training time and lowers computer resource use.

Figure 4.2.1: Output total params.

Figure 4.2.2: Output total params.

Figure 4.2.1 shows the total params, trainable params, and non-trainable params.

Experiment 2

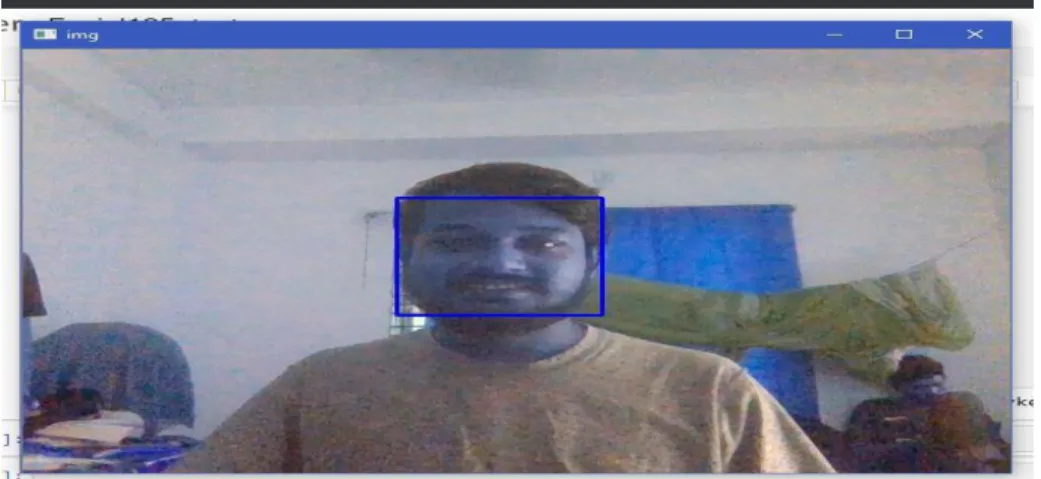

Figure 4.2.3: Live image output

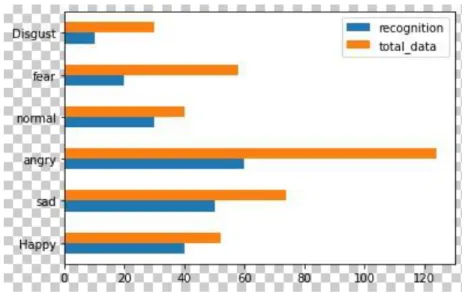

Figure 4.2.5: Bar chart of recognition rate.

In figure 4.2.5, the orange bars show the total number of images and the blue bars show the number recognized. The totals are happy 52, sad 74, angry 124, fear 58, disgust 83, and normal 40. My application recognized 40 happy, 50 sad, 60 angry, 30 normal, 22 fear, and 10 disgust images.
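The counts above can be converted into per-class and overall recognition rates with a short calculation. This sketch only restates the arithmetic on the numbers given in the text; the function names are hypothetical.

```python
# Image counts per class, as reported for figure 4.2.5.
TOTAL = {"happy": 52, "sad": 74, "angry": 124,
         "fear": 58, "disgust": 83, "normal": 40}
RECOGNIZED = {"happy": 40, "sad": 50, "angry": 60,
              "fear": 22, "disgust": 10, "normal": 30}


def recognition_rates(total, recognized):
    """Fraction of images recognized for each class."""
    return {emotion: recognized[emotion] / total[emotion] for emotion in total}


def overall_rate(total, recognized):
    """Fraction of all images recognized, pooled over the classes."""
    return sum(recognized.values()) / sum(total.values())


for emotion, rate in recognition_rates(TOTAL, RECOGNIZED).items():
    print(f"{emotion:8s} {rate:.1%}")
print(f"overall  {overall_rate(TOTAL, RECOGNIZED):.1%}")
```

Looking at the per-class rates alongside the overall figure shows which expressions the application handles well and which it struggles with.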

Figure 4.2.4: Pie chart of recognition.

4.3 Descriptive Analysis

This type of research mainly applies algorithms to images. The more data we check, the higher our emotion detection accuracy will be. To get a good output, I trained on 75% of my dataset's photos and tested on the remaining 25%. I used 600 unique images for this, as well as the FER 2013 dataset. The CNN classification described earlier works almost properly.

I have also presented my results, which show how the project executes and that it gives almost accurate results. Researchers are now trying to pin down the remaining problems and work on them. Some researchers use other datasets and also apply a Gabor filter.

Convolutional Neural Networks with deep learning have led to many new inventions.

4.4 Summary

A variety of limitations still exist, such as bands, facial hair, cosmetics, and aging. This document outlines the steps for evaluating emotion detection on a regular basis.

For checking purposes, I took some images and ran them through the system. Most of the time it gives results close to the truth. Those photos were not added to my dataset. It is beneficial for the user to get an accurate emotion. Customized datasets are usually built by the user, who can modify the images as needed. My training dataset has about six hundred images: one hundred and seventy happy, one hundred and thirty angry, and one hundred images for each of three other classes. It gives good performance. One hundred sixty trained pictures were categorized and 40 were uncategorized, which shows good accuracy.


CHAPTER 5 Impact on Society

5.1 Impact on society

Facial expressions can reveal a person's affective state, cognitive activity, intention, personality, and psychopathology. They also serve as a communication tool in interpersonal relationships. Natural human-machine interfaces, behavioral science, and therapeutic practice can all benefit from automatic facial expression recognition. A huge number of people now use technology in their daily life, and artificial intelligence has made our days more comfortable. Almost everyone has a smartphone, and most young people love to take selfies. By detecting facial emotion we can understand people's reactions and learn about their behavior, because people often use facial expressions when they communicate with each other. I try to understand emotion through a computer application. Live image capture is one of the best ways to train and test it.

With this kind of research project, people can easily have their emotions recognized by an application.

In the future this system may be used in offices and many other places to observe live behavior. Capturing images and recognizing facial behavior digitalizes part of our communication system, and that is impactful.

5.2 Ethical Aspects

Every research-based project has some ethical aspects. Mine are the following:

● I used all the data honestly.

● I captured the images and resized them correctly.

● When I selected this project, I studied similar projects to learn about the process.

● I tried to avoid plagiarism.

CHAPTER 6

Conclusion and Future Work

6.1 Summary of the Study

My whole project runs on Colab, and all the internal code is Python-based. I worked with a significant number of human faces and their actual behaviors, trying to find the accurate expression of each face. The scientific study of facial expression regards it as one of the strongest and earliest means of communicating emotions and intentions.

Overall, the research findings can help shape future research trends in this field. Human facial emotions play a significant role in person-to-person communication because emotions help us comprehend one another. Using facial expressions, people can communicate their moods or emotions, such as sadness, happiness, or anger. I wish to draw attention to modern methodologies and systems that will be of particular interest to facial image researchers.

6.2 Conclusion

A six-layer convolutional neural network architecture is used in the project to classify human facial expressions such as happy, sad, surprise, fear, anger, disgust, and neutral. The goal of this report is to chronicle and reflect on current improvements in human facial emotion recognition. I built and implemented a general architecture for producing real-time CNNs. Our proposed architectures were developed in a systematic manner to limit the number of parameters. We started by removing all fully connected layers and then used depth-wise separable convolutions to reduce the number of parameters in the remaining convolutional layers. We have demonstrated that our suggested multiclass classification models can be stacked while maintaining real-time inference. In particular, we've created a vision system that can recognize faces.
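The parameter saving from depth-wise separable convolutions can be illustrated with a short count. The layer sizes below (a 3*3 kernel mapping 64 channels to 128) are assumed for illustration and are not taken from the project's model; the count uses one bias per output channel, and the function names are hypothetical.

```python
def conv_params(kernel, c_in, c_out):
    """Parameters of a standard convolution: weights plus one bias per filter."""
    return kernel * kernel * c_in * c_out + c_out


def separable_conv_params(kernel, c_in, c_out):
    """Parameters of a depth-wise separable convolution.

    Depth-wise step: one kernel*kernel filter per input channel.
    Point-wise step: a 1*1 convolution mixing c_in channels into c_out,
    with one bias per output channel.
    """
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out + c_out
    return depthwise + pointwise


# Illustrative layer sizes (assumptions, not the project's actual layers).
standard = conv_params(3, 64, 128)
separable = separable_conv_params(3, 64, 128)
print(standard, separable, round(standard / separable, 1))
```

The saving grows with the number of output channels, which is why this substitution is a common way to shrink a CNN for real-time use.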

Ideal system:

● Automatic recognition of the human face; the accuracy of the project is 64%.

● Classify all the emotions automatically.

● Use a camera to take real-time images.

● Automatically detect faces in photos by algorithm.

● Train on 75% and test on 25% of all images.

● Burned faces are not supported.

● Also identify the age and appearance of people.

6.3 Recommendation

Face recognition should work on any image, regardless of age, skin color, and position. Anyone using the system should follow these recommendations:

● Faces with makeup do not work.

● Every face's eyes should be fully open.

● To reduce errors, run the system correctly.

● Use FER dataset images and color images.

● Photos should be in png or jpg format.

● Use the right image size and resolution.

● Work on the shortcomings of this project.

● Build the best possible model, one that gives more accurate output.

● Increase the training data.

6.4 Implications for Further Study

A person's affective state, cognitive activity, intention, personality, and psychopathology can all be revealed through facial expressions. In interpersonal relationships, they can also be used as a means of communication. Automatic facial expression recognition can help with natural human-machine interfaces, behavioral science, and therapeutic practice. Most importantly, this section indicates the direction of new research, which will help individuals who are new to research get started. In our research, we used one lakh data points to train models, and we hope to expand the dataset in the future so that our model can function more precisely and get better results.

Much of a person's most private information is associated with facial features. Facial analysis may be used in a variety of apps, including face identification and age estimation. Security applications, law enforcement systems, and attendance applications are typical examples of such apps.

In addition, such systems can be useful in finding a lost child. Current systems require a high level of test precision.


REFERENCES

[1] H. H. 2. Y. W. H. Y. 1. a. C. M. Yi Dong1 (B), "Personalized Emotion-Aware Video Streaming for the Elderly," 2018.

[2] S. G. H. Q. W. B. W. W. L. M. Z. K. Z. a. B. D. Shenglan Liu, "Multi-view Laplacian Eigenmaps Based on Bag-of-Neighbors For RGBD Human Emotion Recognition," 2018.

[3] Á.-G.-M. A.-C. José Carlos Castillo, "Emotion Detection and Regulation from Personal Assistant Robot in Smart Environment," 2018.

[4] Maryam Imani, "Fast Facial Emotion Recognition Using Convolutional Neural Networks and Gabor Filters," Tarbiat Modares University.

[5] F. Arafat, "Classification of chronic kidney disease (ckd) using data mining techniques," Daffodil International University, Dhaka, 2018.

[6] Z.-R. W. J. D. Yuanyuan Zhang, "Deep Fusion: An Attention Guided Factorized Bilinear Pooling for Audio-video Emotion Recognition," 2019.

[7] S. K. A. G. R. Ram Krishna Pandey, "Improving Facial Emotion Recognition Systems Using Gradient and Laplacian Images," 2019.

[8] Alizadeh, Shima, and Azar Fazel. "Convolutional Neural Networks for Facial Expression Recognition." Stanford University, 2016.

[9] R. Hossain, "Applying text analytics on music emotion recognition," Daffodil International University, Dhaka, 2017.

[10] A. S. M. &. K. S. D. &. A. S. &. P. S. 2, "A study on various methods used for video summarization and moving object detection for video surveillance applications," 2018.

APPENDICES

We experienced a number of issues and delays on a regular basis when we first started our inquiry. After we solved the programming and artificial intelligence problems, we learned about numerous techniques and technologies. Along the way, we had to learn new machine learning, deep learning, and Convolutional Neural Network (CNN) methodologies.
