Contents lists available at ScienceDirect

Biomedical Signal Processing and Control

journal homepage: www.elsevier.com/locate/bspc

Yawn analysis with mouth occlusion detection

Masrullizam Mat Ibrahim, John J. Soraghan, Lykourgos Petropoulakis, Gaetano Di Caterina∗

Department of Electronic and Electrical Engineering, University of Strathclyde, 204 George Street, G1 1XW Glasgow, United Kingdom

article info

Article history: Received 11 August 2014; Received in revised form 10 December 2014; Accepted 9 February 2015

abstract

One of the most common signs of tiredness or fatigue is yawning. Naturally, identification of fatigued individuals would be helped if yawning is detected. Existing techniques for yawn detection are centred on measuring the mouth opening. This approach, however, may fail if the mouth is occluded by the hand, as is frequently the case. The work presented in this paper focuses on a technique to detect yawning whilst also allowing for cases of occlusion. For measuring the mouth opening, a new technique which applies an adaptive colour region is introduced. For detecting yawning whilst the mouth is occluded, local binary pattern (LBP) features are used to also identify facial distortions during yawning. In this research, the Strathclyde Facial Fatigue (SFF) database, which contains genuine video footage of fatigued individuals, is used for training, testing and evaluation of the system.

© 2015 Elsevier Ltd. All rights reserved.

1. Introduction

Fatigue is usually perceived as the feeling of tiredness or drowsiness. In a work related context, fatigue is a state of mental and/or physical exhaustion that reduces the ability to perform work safely and effectively. Fatigue can result from a number of factors, including inadequate rest, excessive physical or mental activity, sleep pattern disturbances, or excessive stress. Many conditions which can affect individuals are considered directly related to fatigue, such as visual impairment, reduced hand–eye coordination, low motivation, poor concentration, slow reflexes, sluggish response or inability to concentrate. Fatigue is considered the largest contributor to road accidents, leading to loss of lives. Data for 2010 from the Department for Transport, UK [1], point to 1850 people killed and 22,660 seriously injured, with fatigue contributing to 20% of the total number of accidents [2]. US National Highway Traffic Safety Administration estimates suggest that approximately 100,000 accidents each year are caused by fatigue [3].

Fatigue is identifiable from human physiology, such as eye and mouth observations and brain activity, and by using electrocardiograms (ECG) measuring, for example, heart rate variability. Physical activities and human behaviour may also be used to identify fatigue [4–6]. In this paper yawning, as an indicator of fatigue, is detected and analysed from video sequences. Yawning is an involuntary action where the mouth opens wide and, for this reason, yawning detection research focuses on measuring and classifying this mouth opening. Frequently, however, this approach is thwarted by the common human reaction of covering the mouth with the hand during yawning. In this paper, we introduce a new approach to detect yawning by combining mouth opening measurements with a facial distortion (wrinkles) detection method. For mouth opening measurements, a new adaptive threshold for segmenting the mouth region is introduced. For yawning detection with the mouth covered, local binary pattern (LBP) features and a machine learning classifier are employed. In order to detect the wrinkles, the Sobel edge detector [30] is used. Unlike [32], where the covered mouth detection technique is applied to static images only, the yawn analysis approach proposed in this paper describes a complete system for yawn detection in video sequences.

In this research, genuine yawning fatigue video data is used for training, testing and evaluation. The data is from the Strathclyde Facial Fatigue (SFF) video database, which was explicitly created to aid this research, and which contains a series of facial videos of sleep deprived, fatigued individuals. The ethically approved sleep deprivation experiments were conducted at the University of Strathclyde, with the aid of twenty volunteers under controlled conditions. Each of the 20 volunteers was sleep deprived for periods of 0, 3, 5 and 8 h on separate occasions. During each session the participants' faces were recorded while they were carrying out a series of cognitive tasks.

∗ Corresponding author. Tel.: +44 (0)141 548 4458.

E-mail address: gaetano.di-caterina@strath.ac.uk (G. Di Caterina).

The remainder of this paper is organised as follows. Section 2 discusses the related work of this research, while Section 3 explains the SFF database. Section 4 discusses the overall system, while

http://dx.doi.org/10.1016/j.bspc.2015.02.006

of the mouth opening [7–9]. The algorithms used must therefore be able to differentiate between normal mouth opening and yawning. Yawn detection can be generally categorised into three approaches: feature based, appearance based, and model based. In the feature based method, the mouth opening can be measured by applying several features such as colour, edges and texture, which are able to describe the activities of the mouth. Commonly, there are two approaches to measuring the mouth opening, namely tracking the lip movement and quantifying the width of the mouth. Since the mouth is the widest facial component, colour is one of the prominent features used to distinguish the mouth region. Yao et al. [10] transform the RGB colour space to the Lab colour space in order to use the 'a' component, which is found to be acceptable for segmenting the lips. The lips represent the boundary of the mouth opening that is to be measured. The YCbCr colour space was chosen by Omidyeganeh et al. [11] for their yawning detection. In that work, the claim is that this colour space is able to indicate the mouth opening area, which is the darkest colour region, by setting a certain threshold. Yawning is detected by computing the ratio of the horizontal and vertical intensity projections of the mouth region. The mouth opening is detected as a yawn when this ratio is above a set threshold. Implementation using colour properties is easy but, to make the algorithm robust, several challenges must be addressed. Lip colour is different for everyone and, because lighting conditions vary, it is necessary to ensure that the threshold value adapts to the changes.

Edges are another mouth feature able to represent the shape of the mouth opening. Alioua et al. [12] extracted the edges from the differences between the lips region and the darkest region of the mouth. The width of the mouth is measured in consecutive frames, and a yawn is detected when the mouth is continuously opening widely more than a set number of times. When using edges to determine a yawn, the challenge is that the changes of the edges are proportional to the illumination changes, making it difficult to set the parameters of an edge detector. In order to locate the mouth boundary accurately, the active contour algorithm can be used, as implemented in [8]. However, the computational cost of this approach is very high. The mouth corners have also been employed by Xiao et al. [9] to detect yawning. The mouth corners can serve as reference points in order to track the mouth region. Yawning is classified based on the change of the texture in the corners of the mouth while the mouth is opened widely. Moreover, as in the case implemented for measuring eye activities, Jiménez-Pinto et al. [13] have detected yawning based upon salient points in the mouth region. These salient points, introduced by Shi and Tomasi [14], are able to describe the motion of the lips, and a yawn is determined by examining the motion in the mouth region. In [7,11] a yawn is detected based on a horizontal profile projection. The height of the mouth opening in the profile projection represents the yawn. However, this approach is inefficient for individuals with beards and moustaches.

In the appearance based method, statistical machine learning algorithms are applied, and distinctive features need to be extracted in order to train the algorithms. Lirong et al. [15] combined Haar-like features and a variance value in order to train their system to classify the lips using a support vector machine (SVM). With the additional integration of a Kalman filter, their algorithm is also able to track the lip region. Lingling et al. [16] also applied Haar-like features to yawn classification in three states: mouth closed, normal open, and wide open. The mouth region features are extracted based on the RGB colour properties. This approach requires a very large number of image datasets in order to obtain adequate results.

The model based approach requires that the mouth or lips are modelled first. An active shape model (ASM), for instance, requires a set of training images and, in every image, the important points that represent the structure of the mouth need to be marked. Then, the marked set of images is used for training, using a statistical algorithm to establish the shape model.

García et al. [18] and Anumas et al. [19] train the structure using ASM, and the yawn is measured based on the lips opening. Hachisuka et al. [20] employ an active appearance model (AAM), which annotates each essential facial point. For yawning detection, three points are defined to represent the opening of the mouth. In this approach a lot of images are required, since every person has a different facial structure.

3. Strathclyde Facial Fatigue (SFF) database

The Strathclyde Facial Fatigue database was developed in order to obtain genuine facial signs of fatigue. Most fatigue researchers have used their own datasets for assessing their developed systems. For example, Vural et al. [21] developed their fatigue database by using video footage from a simulated driving environment. The participants had to drive for over 3 h and their faces were recorded during that time. Fan et al. [22] recruited forty participants and recorded their faces for several hours in order to obtain facial signs of fatigue. Some researchers only test their algorithms on video footage of persons who pretend to experience fatigue symptoms. For example, researchers in [8,11,12] detected yawning based on the width of the mouth opening, and the algorithms were not tested on subjects experiencing natural yawning. The SFF database provides a genuine source of facial fatigue video footage from sleep deprivation experiments which involved twenty participants.

The sleep deprived volunteers were 10 male and 10 female, with ages ranging between 20 and 40 years old. Each volunteer had to perform a series of cognitive tasks during four separate experimental sessions. Prior to each session, the participants were sleep deprived for 0 h (no sleep deprivation), 3, 5 and 8 h under controlled conditions. The cognitive tasks were designed to test (a) simple attention, (b) sustained attention and (c) the working memory of the participants. These cognitive tasks are associated with sensitivity to sleep loss and are designed to accelerate fatigue signs. Fig. 1 shows some examples of video footage in the SFF database.

4. System overview

A block diagram of the new yawn analysis system is shown in Fig. 2. The system begins with an initialisation operation carried out in the first frame of the video. This consists of face acquisition and region initialisation algorithms. During face acquisition, the face, eye and mouth detection algorithms are performed sequentially before a region of interest initialisation algorithm is executed.

M.M. Ibrahim et al. / Biomedical Signal Processing and Control 18 (2015) 360–369

Fig. 1. Example images from video footage SFF.

Fig. 2. Yawn analysis system overview. The blocks shown are face acquisition (face detection, eye detection and mouth detection), regions of interest initialisation, and yawn analysis operations.

to be tracked in all the following frames. The FMR region is used in measuring the mouth opening and to detect when the mouth is covered, and the FDR is used in measuring the distortions during yawning.

4.1. Regionofinterestevaluation

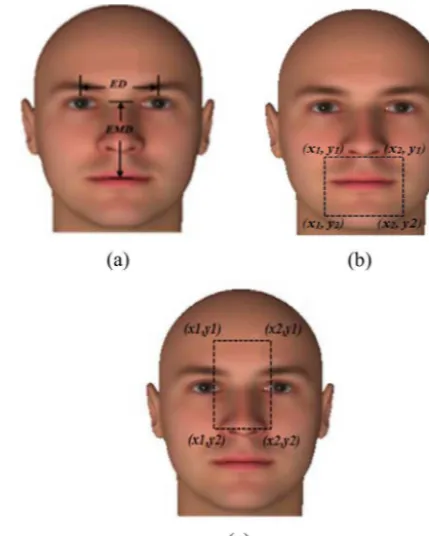

Asis indicatedin[32] afacial regionof interestneedstobe establishedfirst.Fig.3depictsafaceacquisitionoperationwhere theeyesandmoutharesequentiallydetectedusingaViolaJones technique[23,24].Trainingisperformedthroughacascade clas-sifierappliedtoeverysub-window intheinputimagein order toincreasedetectionperformanceandreducecomputationtime. TheSFF video footageprovided thelargenumber of face, eyes and mouthdata neededtotrain the Haar-likefeatures [25,26]. Inthepresentmethod,thefaceregionisfirstdetectedandthen, theeyesandmouthareasareidentifiedwithinit.Theacquisition ofthedistinctive distances betweenthesefacialcomponents is effectedthroughanthropometricmeasurements,thusaccounting forhumanfacialvariability.Thisinformationisthenusedinthe formationofthetwofollowingregionsofinterest.

4.1.1. Focused mouth region (FMR)

This is formed based upon the detected locations of the eyes and mouth. The distance between the centres of the eyes (ED), and the distance between the centre of the mouth and the mid-point between the eyes (EMD), are obtained (Fig. 3(a)). The ratio of these distances, $R_{EM}$, is then computed as:

$$R_{EM} = \frac{ED}{EMD} \tag{1}$$

Fig. 3. (a) Anthropometric measurement; (b) focused mouth region (FMR); (c) focused distortion region (FDR).

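From this information the coordinates of the FMR (Fig. 3(b)) are empirically defined as:

$$\begin{bmatrix} x_1 \\ y_1 \end{bmatrix} = \begin{bmatrix} x_R \\ y_R + 0.75\,EMD \end{bmatrix} \tag{2}$$

$$\begin{bmatrix} x_2 \\ y_2 \end{bmatrix} = \begin{bmatrix} x_L \\ y_L + 0.75\,EMD + 0.8\,ED \end{bmatrix} \tag{3}$$

where $(x_R, y_R)$ and $(x_L, y_L)$ are the centre points of the right eye iris and left eye iris, respectively. The FMR depends on the location and distance of the eyes. When the face moves forward the eye distance increases and so does the FMR. Conversely, the FMR reduces following a backward move.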
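As an illustration of Eqs. (1)–(3), the following minimal sketch computes ED, EMD, the ratio REM and the two FMR corner points from three landmark positions. The function name, the tuple layout and the use of image coordinates with y increasing downwards are assumptions made for this example and are not taken from the paper.

```python
import math

def focused_mouth_region(right_eye, left_eye, mouth):
    """Return (ED, EMD, REM, (x1, y1), (x2, y2)) following Eqs. (1)-(3).

    Points are (x, y) tuples in image coordinates (y grows downwards);
    this coordinate convention is an assumption of the sketch.
    """
    xr, yr = right_eye
    xl, yl = left_eye
    # ED: distance between the centres of the two eyes
    ed = math.hypot(xl - xr, yl - yr)
    # EMD: distance from the mouth centre to the mid-point between the eyes
    mid_x, mid_y = (xr + xl) / 2, (yr + yl) / 2
    emd = math.hypot(mouth[0] - mid_x, mouth[1] - mid_y)
    rem = ed / emd                                   # Eq. (1)
    corner_1 = (xr, yr + 0.75 * emd)                 # Eq. (2)
    corner_2 = (xl, yl + 0.75 * emd + 0.8 * ed)      # Eq. (3)
    return ed, emd, rem, corner_1, corner_2
```

For example, with eyes at (100, 100) and (160, 100) and the mouth at (130, 180), ED is 60 and EMD is 80, so the FMR spans from (100, 160) to (160, 208). Because both corners scale with ED and EMD, the region grows and shrinks with the apparent face size, as described above.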

4.1.2. Focused distortion region (FDR)

The focused distortion region (FDR) is a region of the face where changes of facial distortion occur. The region which is most likely to undergo distortions during yawning was identified based on experiments conducted using the SFF database. The coordinates of the FDR (Fig. 3(c)) are empirically defined as:

$$\begin{bmatrix} x_1 \\ y_1 \end{bmatrix} = \begin{bmatrix} x_R \\ y_R - 0.75\,EMD \end{bmatrix} \tag{4}$$

$$\begin{bmatrix} x_2 \\ y_2 \end{bmatrix} = \begin{bmatrix} x_L \\ y_L + 0.75\,EMD \end{bmatrix} \tag{5}$$

The threshold values 0.75 and 0.8 used in Eqs. (2)–(5) are based on observation tests undertaken on the SFF database.
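A short sketch of Eqs. (4) and (5) follows. It assumes that EMD has already been measured and that points are (x, y) tuples in image coordinates; the function name is hypothetical.

```python
def focused_distortion_region(right_eye, left_eye, emd):
    """Return the two FDR corner points following Eqs. (4) and (5)."""
    xr, yr = right_eye
    xl, yl = left_eye
    corner_1 = (xr, yr - 0.75 * emd)   # Eq. (4): 0.75 EMD above the right eye
    corner_2 = (xl, yl + 0.75 * emd)   # Eq. (5): 0.75 EMD below the left eye
    return corner_1, corner_2
```

With the eyes at (100, 100) and (160, 100) and EMD equal to 80, the FDR spans from (100, 40) to (160, 160), that is, a band of height 1.5 EMD centred on the eye line.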

4.2. Yawn analysis operations

Fig. 4. A linear transform that remaps the intensity levels of the input image between $G'_{min}$ and $G'_{max}$.

Fig. 5. (a) Enhanced image histogram; (b) cumulative histogram.

opening measurement, covered mouth detection, and distortion detection.

4.2.1. Mouth opening measurement

Yawning is an involuntary action that causes a person to open the mouth widely and breathe in. The technique in this paper detects yawning based upon the widest area of the darkest region between the lips. As previously discussed, when the applied techniques are based on intensities and colour values, the challenge is to obtain an accurate threshold value which will adapt to different skin and lip colouration, as well as to different lighting conditions. The proposed algorithm applies a new adaptive threshold in order to meet this challenge.

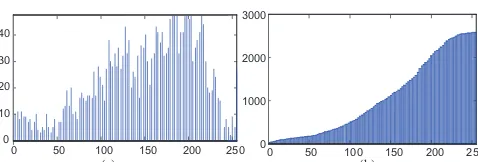

The adaptive threshold value is calculated during the initialisation operation after the FMR is formed. Due to the dependence of the method on the intensity values of the region, it is necessary to improve the face image by enhancing the contrast between the darkest and the brightest facial regions. The enhancement is implemented by transforming the histogram of the image to spread the colour values evenly, but without changing the shape of the input histogram. This is computed using the following transformation [27]:

$$g'(x,y) = \frac{G'_{max} - G'_{min}}{G_{max} - G_{min}}\,\bigl[g(x,y) - G_{min}\bigr] + G'_{min} \tag{6}$$

The linear transformation illustrated in Fig. 4 stretches the histogram of the input image into the desired output intensity range between $G'_{min}$ and $G'_{max}$, where $G_{min}$ and $G_{max}$ are the minimum and maximum input image intensities, respectively. From this enhanced histogram, illustrated in Fig. 5(a), the cumulative histogram $H_i$ is calculated as in Eq. (7), thus generating the running total of pixels over the histogram bins between 0 and 255, as illustrated in Fig. 5(b):

$$H_i = \sum_{j=1}^{i} h_j \tag{7}$$

where $h_j$ is the number of pixels in histogram bin $j$.

Fig. 6. Focused mouth region (FMR).

Fig. 7. FMR segmentation using adaptive threshold.
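The contrast stretch of Eq. (6) and the cumulative histogram of Eq. (7) can be sketched as follows. This is a minimal pure-Python illustration that assumes an 8-bit greyscale image stored as a list of rows; the function names, the rounding to integers and the 0-based bin indexing are choices made for the example.

```python
def stretch_contrast(image, out_min=0, out_max=255):
    """Linearly remap pixel intensities to [out_min, out_max] as in Eq. (6)."""
    flat = [p for row in image for p in row]
    g_min, g_max = min(flat), max(flat)
    if g_max == g_min:
        # A flat image has no contrast to stretch
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (g_max - g_min)
    return [[round(scale * (p - g_min) + out_min) for p in row] for row in image]

def cumulative_histogram(image, levels=256):
    """Return (hist, cum), where cum[i] is the sum of hist[0..i] (Eq. (7))."""
    hist = [0] * levels
    for row in image:
        for p in row:
            hist[p] += 1
    cum, running = [], 0
    for count in hist:
        running += count
        cum.append(running)
    return hist, cum
```

For a 2×2 image with intensities 50, 100, 150 and 200, the stretch maps the values to 0, 85, 170 and 255, and the last entry of the cumulative histogram equals the pixel count, 4.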

Consequently, the initial adaptive threshold is calculated by reference to the darkest area, as shown in Fig. 6. On average, the darkest area constitutes approximately 5%, or less, of the entire obtained region of interest. Based on this percentage, the darkest area in the histogram is situated between 0 and $X$ intensity levels. The intensity value $X$ can be obtained by selecting the first 5% of the total number of pixels contained in the enhanced histogram (Fig. 5(a)) and reading the corresponding right-most intensity value from the x-axis.

Then, the adaptive threshold value $T_a$ is calculated as follows:

$$T_a = \frac{X - P_{min}}{P_{max} - P_{min}} \tag{8}$$

where $P_{max}$ and $P_{min}$ are the maximum and minimum pixel values, respectively.
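One possible reading of the 5% rule and of Eq. (8) is sketched below. It assumes the cumulative histogram is available as a list indexed by intensity level, and it takes X as the lowest level at which the running pixel count reaches the chosen fraction of all pixels. The function names and this interpretation of "right-most intensity value" are assumptions of the example.

```python
def darkest_level(cum_hist, fraction=0.05):
    """Return the intensity X below which roughly `fraction` of the pixels lie."""
    target = fraction * cum_hist[-1]      # cum_hist[-1] is the total pixel count
    for level, count in enumerate(cum_hist):
        if count >= target:
            return level
    return len(cum_hist) - 1

def adaptive_threshold(x, p_min=0, p_max=255):
    """Normalise X to the range [0, 1] as in Eq. (8)."""
    return (x - p_min) / (p_max - p_min)
```

With 200 pixels of which 20 sit at level 10 and the rest at level 200, the 5% target is reached at level 10, giving an initial threshold of 10/255.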

After the adaptive threshold $T_a$ is computed, the FMR is segmented and the mouth length $L_M$ is obtained, as illustrated in Fig. 7. The length $L_M$ must be within the range indicated in the following equation:

$$0.7\,ED < L_M < 0.9\,ED \tag{9}$$

If $L_M$ is outside this range, the value $T_a$ is increased or decreased accordingly, in steps of 0.001. Then the segmentation process is repeated until the value of $L_M$ is within the range in Eq. (9).
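The refinement loop around Eq. (9) might be sketched as follows. The segmentation step is abstracted as a caller-supplied function `measure_length`, which is hypothetical, and the sketch assumes that raising the threshold enlarges the segmented dark region. The iteration cap is also an addition for safety and is not part of the paper.

```python
def tune_threshold(ta, ed, measure_length, step=0.001, max_iter=2000):
    """Adjust Ta in steps of 0.001 until 0.7*ED < LM < 0.9*ED (Eq. (9)).

    `measure_length(ta)` is a hypothetical callback that segments the FMR
    with threshold `ta` and returns the mouth length LM in pixels.
    """
    low, high = 0.7 * ed, 0.9 * ed
    for _ in range(max_iter):
        lm = measure_length(ta)
        if lm <= low:
            ta = min(1.0, ta + step)    # region too short: admit brighter pixels
        elif lm >= high:
            ta = max(0.0, ta - step)    # region too long: keep only darker pixels
        else:
            return ta, lm
    raise RuntimeError("threshold did not converge within max_iter steps")
```

In practice `measure_length` would wrap the segmentation of the current FMR; any function that increases with the threshold can stand in for it when testing the loop.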

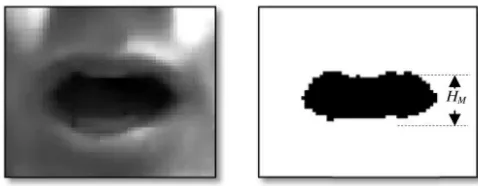

The adaptive threshold is applied to the subsequent frames for segmenting the darkest region between the lips. In order to determine yawning, the height of the mouth $H_M$, based on the darkest region in the FMR, is measured as shown in Fig. 8. Then, the Yawn Ratio ($R_Y$) of the height $H_M$ over the height of the FMR is computed as follows:

$$R_Y = \frac{H_M}{FMR_{height}} \tag{10}$$
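Eq. (10) can be illustrated with a small sketch that works on a binary mask of the FMR, where 1 marks a pixel segmented as part of the darkest region. Taking HM as the vertical extent of the rows containing dark pixels is an assumption of this example, as is the function name.

```python
def yawn_ratio(mask):
    """Return RY = HM / FMR height for a binary mask given as a list of rows."""
    dark_rows = [i for i, row in enumerate(mask) if any(row)]
    if not dark_rows:
        return 0.0                              # no dark region: mouth closed
    hm = dark_rows[-1] - dark_rows[0] + 1       # vertical extent of the dark region
    return hm / len(mask)
```

A mask with four rows in which the two middle rows contain dark pixels gives RY = 0.5.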


Fig. 8. The measurement of the height of the mouth opening.

Fig. 9. (a) Uncovered mouth; (b) covered mouth.

Fig. 10. Example of the basic LBP operator.

4.2.2. Coveredmouthdetection

Itisnotunusualtooccludethemouthwiththehandduring yawning.ThismeansthatinformationfromSection4.2.1canno longerbeusedtodetectyawning.Theapproachfirstintroducedin

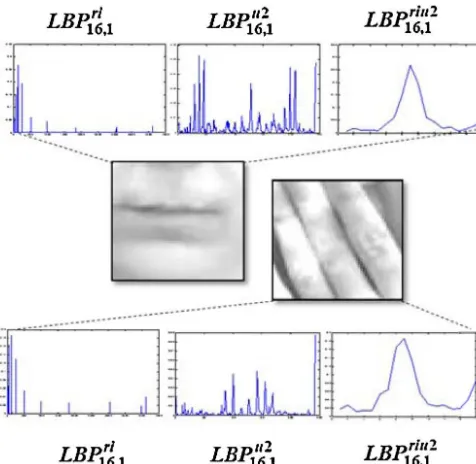

[32]onstillimagescanalsobeusedinvideosequencefootage.The approachinvolvesthattheFMRisexaminedtodeterminewhether theregioniscoveredornot.Fig.9displaysimagesofFMR(a)not coveredand(b)covered.Fromtheseimagesthedifferenttexture oftheFMRregionsisclearlyobviousinthetwocases.Inorderto differentiatebetweenthesetwocases,localbinarypatterns(LBP) areused toextractthe FMRtexturepattern. LBPfeatures have beenprovenhighlyaccuratedescriptorsoftexture,arerobustto themonotonicgrayscalechanges,aswellassimpletoimplement computationally[28].

Local binary patterns (LBP) were originally applied in texture analysis to indicate the discriminative features of texture. With reference to Fig. 10, the basic LBP code of the central pixel is obtained by subtracting its value from each of its neighbours and, if a negative number is obtained, it is substituted with a 0, else it is a 1.

The basic LBP operator is limited, since it represents a small scale feature structure which may be unable to capture large scale dominant features. In order to deal with different scales of texture, Ojala et al. [29] presented an extended LBP operator where the radius and the number of sampling points are increased. Fig. 11 shows the extended operator, where the notation $(P, R)$ represents a neighbourhood of $P$ sampling points on a circle of radius $R$.

Fig. 11. Example of extended LBP operator. (a) (8,1); (b) (16,2) and (c) (24,3) neighbourhoods.

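The LBP result can be formulated in decimal form as:

$$LBP_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(i_p - i_c)\,2^p \tag{11}$$

where $i_c$ and $i_p$ denote the grey level values of the central pixel and of the $P$ surrounding pixels in the circular neighbourhood with radius $R$, respectively. The function $s(x)$ is defined as:

$$s(x) = \begin{cases} 1, & \text{if } x \ge 0 \\ 0, & \text{if } x < 0 \end{cases} \tag{12}$$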
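For the basic 3×3 case, Eqs. (11) and (12) reduce to the short sketch below. The order in which the eight neighbours are visited (clockwise from the top-left pixel, with the first neighbour given weight 2^0) is an assumption of this example; any fixed order yields a valid LBP code, although the numeric value depends on it.

```python
def basic_lbp(patch):
    """Return (bits, code) for the centre pixel of a 3x3 patch, P = 8, R = 1."""
    centre = patch[1][1]
    # Neighbours visited clockwise from the top-left corner (assumed ordering)
    neighbours = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
                  patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    bits = [1 if n - centre >= 0 else 0 for n in neighbours]   # s(x), Eq. (12)
    code = sum(bit << p for p, bit in enumerate(bits))         # Eq. (11)
    return bits, code
```

For the patch [[6, 5, 2], [7, 6, 1], [9, 8, 7]] the centre value is 6, the thresholded neighbours are 1, 0, 0, 0, 1, 1, 1, 1, and the resulting code is 1 + 16 + 32 + 64 + 128 = 241.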

The LBP operator, as formulated in Eq. (11), is sensitive to rotation: if the image is rotated, the surrounding pixels in each neighbourhood move accordingly along the perimeter of the circle. In order to remove this effect, Ojala et al. [29] proposed a rotation invariant (ri) LBP as follows:

$$LBP^{ri}_{P,R} = \min\bigl\{ ROR(LBP_{P,R},\, i) \;\big|\; i = 0, 1, \ldots, P-1 \bigr\} \tag{13}$$

where $ROR(x, i)$ performs a circular bitwise right shift on the $P$-bit number $x$, $i$ times. A uniform LBP pattern (u2), $LBP^{u2}_{P,R}$, was also proposed. The LBP is called uniform when it contains a maximum of two bitwise transitions from 0 to 1 or vice versa. For example, 11111111 (0 transitions) and 00111100 (2 transitions) are both uniform, but 100110001 (4 transitions) and 01010011 (6 transitions) are not uniform. Another LBP operator, which is a combination of a rotation invariant pattern with a uniform pattern, is $LBP^{riu2}_{P,R}$. This operator is obtained by simply counting the 1's in uniform pattern codes. All non-uniform patterns are placed in a single bin. An example of LBP histograms of the three types of LBP operators for covered and uncovered mouth regions is shown in Fig. 12.

A classifier then uses the LBP features to detect mouth covering, as explained in Section 6.2.
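The three variants described above can be sketched with plain integer operations on a P-bit code. The helper names are chosen for this example, and assigning the label P + 1 to the single non-uniform bin follows the convention of Ojala et al. [29] rather than anything stated in this paper.

```python
def ror(x, i, p=8):
    """Circular bitwise right shift of the p-bit number x by i positions."""
    i %= p
    mask = (1 << p) - 1
    return ((x >> i) | (x << (p - i))) & mask

def lbp_ri(code, p=8):
    """Rotation invariant code: minimum over all circular shifts (Eq. (13))."""
    return min(ror(code, i, p) for i in range(p))

def transitions(code, p=8):
    """Number of circular 0/1 transitions in the p-bit code."""
    return bin(code ^ ror(code, 1, p)).count("1")

def lbp_riu2(code, p=8):
    """Count of 1 bits for uniform codes; one shared label for the rest."""
    if transitions(code, p) <= 2:
        return bin(code).count("1")
    return p + 1   # single bin for all non-uniform patterns (assumed label)
```

For instance, 00111100 has two transitions, so it is uniform, its rotation invariant code is 00001111 (15) and its riu2 label is 4, whereas 01010011 has six transitions and falls into the shared non-uniform bin.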

4.2.3. Distortions detection

Yawning cannot be concluded solely on the detection of a covered mouth region. It is possible that the mouth is covered accidentally or even intentionally for reasons unrelated to yawning. To minimise the possibility of false yawn detection, the metric of distortions in a specific region of the face, most likely to distort while yawning, is also used [32]. This region is identified based on observations and experiments conducted using the video footage from the SFF database.

The region most affected by distortion during yawning is the focused distortion region (FDR) (Section 4.1.2). From Fig. 13(b) and (c) it is obvious that there are significant visual differences in the FDR area between normal conditions and during yawning.

To evaluate the changes occurring in the FDR, edge detection is used to identify the distortions. Here any edge detector can be used. However, based on experiments carried out, the Sobel operator [30]

Fig. 12. LBP histogram for not covered mouth and covered mouth regions.

Fig. 13. (a) Focused distortion region (FDR); (b) normal condition in FDR; (c) yawning in FDR.

intensity gradient at each point in the region of interest. Then, it provides the direction of the largest possible increase from light to dark and the rate of change in the horizontal and vertical directions. The Sobel operator represents a partial derivative of $f(x,y)$ at the central point of a 3×3 area of pixels. The gradients for the horizontal $G_x$ and vertical $G_y$ directions for the region of interest are then computed as [31]:

$$G_x = \bigl[f(x+1, y-1) + 2f(x+1, y) + f(x+1, y+1)\bigr] - \bigl[f(x-1, y-1) + 2f(x-1, y) + f(x-1, y+1)\bigr] \tag{14}$$

$$G_y = \bigl[f(x-1, y+1) + 2f(x, y+1) + f(x+1, y+1)\bigr] - \bigl[f(x-1, y-1) + 2f(x, y-1) + f(x+1, y-1)\bigr] \tag{15}$$

The FDR distortions are detected based on the gradients in both directions, and the results are shown in Fig. 14. The number of edges detected under normal conditions, as illustrated in Fig. 14(a), is significantly different compared to yawning (Fig. 14(b)). In order to identify the distortion in the FDR during yawning, the sum $FDR_{SAD}$ of the absolute values obtained in Eq. (16) is used to compute the number of wrinkles in the region. The normalised $FDR_{SAD}$ is calculated in Eq. (17), where $W$ and $H$ denote the width and height of the FDR, respectively.

Fig. 14. FDR with input image and edges detected image. (a) Normal; (b) yawn.

Fig. 15. Plot of normalised $FDR_{SAD}$ values during yawning.

During yawning, the number of detected edges increases, as shown in Fig. 15.

$$FDR_{SAD} = \sum_{i,j} \bigl| I_1(i,j) - I_2(x+i, y+j) \bigr| \tag{16}$$

$$\text{Normalised } FDR_{SAD} = \frac{FDR_{SAD}}{255 \times W \times H} \tag{17}$$
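The Sobel gradients of Eqs. (14) and (15) and the normalisation of Eq. (17) can be sketched as below. The image is assumed to be a list of rows indexed as `f[y][x]`. Since the paper does not define the images I1 and I2 of Eq. (16), the second function simply sums the absolute values of an already computed edge image with values in 0..255, which is only one possible reading; both function names are hypothetical.

```python
def sobel_at(f, x, y):
    """Return (Gx, Gy) at an interior pixel (x, y), following Eqs. (14)-(15)."""
    gx = ((f[y - 1][x + 1] + 2 * f[y][x + 1] + f[y + 1][x + 1])
          - (f[y - 1][x - 1] + 2 * f[y][x - 1] + f[y + 1][x - 1]))
    gy = ((f[y + 1][x - 1] + 2 * f[y + 1][x] + f[y + 1][x + 1])
          - (f[y - 1][x - 1] + 2 * f[y - 1][x] + f[y - 1][x + 1]))
    return gx, gy

def normalised_edge_sum(edge_image):
    """Sum of absolute edge values divided by 255 * W * H (cf. Eq. (17))."""
    height, width = len(edge_image), len(edge_image[0])
    total = sum(abs(v) for row in edge_image for v in row)
    return total / (255 * width * height)
```

A vertical step edge such as [[0, 0, 10], [0, 0, 10], [0, 0, 10]] gives Gx = 40 and Gy = 0 at the centre pixel. The normalised sum lies between 0 and 1, so values from FDRs of different sizes can be compared against a common threshold.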

5. Yawn analysis

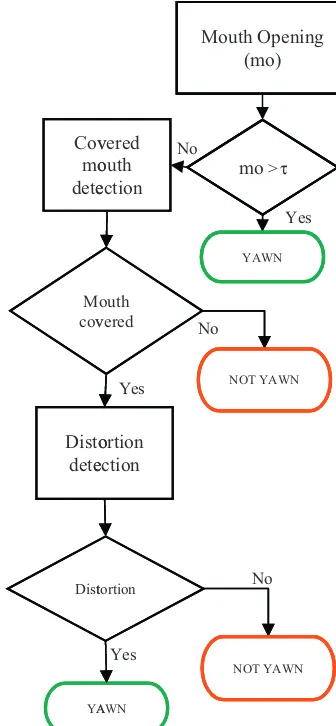

In general, the action of yawning can be categorised into three situations: (a) mouth not covered and wide open, (b) mouth wide open for a short time before it gets covered and (c) mouth totally covered with the mouth opening not visible. These situations are based on observations made from the video footage of the SFF database. A yawn analysis algorithm, as depicted in Fig. 16, was therefore developed to deal with these cases by introducing the aforementioned procedures, i.e. mouth opening measurement, mouth covered detection and distortions detection.

In this algorithm, the status of mouth opening, mouth covering and distortions is examined periodically. The chosen time frame for measurements is 30 s, a time interval reached by examining the SFF video data. This assumes that yawning does not occur twice within this time period.

The algorithm starts by first examining the mouth opening. Yawning is identified through the mouth opening for longer than a fixed interval, usually between 3 and 5 s. If this shows no yawning, then the mouth covered detection is triggered. The status of distortions in the FDR is only examined when mouth covering is detected.
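The decision order described above can be sketched as a short function. The two detectors are passed in as callables so that each is evaluated only when the previous stage requires it; the function name, the callable interface and the default of 3 s (the lower end of the 3 to 5 s interval mentioned above) are assumptions of this illustration.

```python
def is_yawn(open_seconds, is_mouth_covered, has_distortion, min_open_seconds=3.0):
    """Decide whether one analysis window contains a yawn.

    open_seconds     -- how long the mouth opening stayed above its threshold
    is_mouth_covered -- hypothetical callable returning True if the FMR is occluded
    has_distortion   -- hypothetical callable returning True if the FDR is distorted
    """
    if open_seconds >= min_open_seconds:
        return True                 # wide, uncovered mouth opening
    if not is_mouth_covered():
        return False                # mouth neither open long enough nor covered
    return has_distortion()         # covered mouth: rely on facial distortion
```

Passing the detectors as callables mirrors the text: the distortion check runs only after mouth covering has been detected, which avoids unnecessary computation on most frames.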


Fig. 16. Yawn analysis algorithm. The flowchart blocks are mouth opening (mo), covered mouth detection, mouth covered, distortion detection and distortion, with Yes/No branches leading to the outcomes YAWN and NOT YAWN.

6. Experimental results

The performance of the developed algorithms was tested by using the full SFF video footage. The results presented here were the outcome of substantial real-time experimentation using the video sequences from the whole of the database.

6.1. Mouth opening measurement

The mouth opening measurement algorithms are tested using the SFF database. In order to evaluate the accuracy of the mouth opening segmentation using the proposed adaptive threshold, the actual input image is compared with the segmented region of the mouth opening. Fig. 17 shows examples of mouth opening images paired with the output image of mouth opening segmentation. A total of 145 mouth opening images under varying lighting conditions were used for testing. From these tests, it was shown that the algorithm was able to segment the darkest region between the lips as required for detecting yawning.

As discussed in Section 4, yawning is determined by measuring the height of the segmented region of the mouth opening.

In order to obtain the applicable threshold value that represents yawning, 25 of the real yawning scenes from the SFF database were used. From the yawning scenes it was found that the minimum value of $R_Y$ which denotes yawning is 0.5. This ratio value is applied in mouth opening measurements during yawn analysis.

Fig. 17. Mouth opening segmentation images.

6.2. Mouth covered detection

In order to detect the covered mouth, the FMR is examined in every video frame. The LBP features are extracted from the region, and the machine learning algorithm decides the status. The classifiers tested, to determine their suitability for use in the analysis algorithm, comprised support vector machines (SVM) and neural networks (NN).

A total of 500 covered mouth images and 100 non-covered mouth images of size 50×55 pixels have been used to train the NN and SVM classifiers. The LBP operators used for feature extraction were: rotational invariant (ri), uniform (u2), and uniform rotational invariant (riu2). The radii of the regions examined were either 8, 16, or 24 pixels.

Classification was performed on 300 images. Four different kernel functions were tested for the SVM classifier and two different network layers for the NN. Specifically, these were SVM with (a) radial basis function (SVM-rbf), (b) linear (SVM-lin), (c) polynomial (SVM-pol), and (d) quadratic (SVM-quad) kernels. The neural networks tested had 5 (NN-5) and 10 neurons (NN-10), respectively, in their hidden layer.

It is usual to present such results using graphs of sensitivity (ordinate) against 1 − specificity (abscissa), where sensitivity and specificity are given, respectively, by:

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{18}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{19}$$

where $TP$ represents the number of true positives, $FP$ the number of false positives, $TN$ the number of true negatives, and $FN$ the number of false negatives. Sensitivity represents the ability of the developed algorithm to identify the covered mouth. The specificity relates to the ability of the algorithm to detect the non-covered mouth. For maximum accuracy and a minimum number of false positives, maximum values of sensitivity and specificity are required. The ROC curves from the results of the classifications are shown in Fig. 18.
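As a small worked illustration, the sketch below computes sensitivity and specificity from confusion-matrix counts using the standard definitions (true positive rate and true negative rate), together with the corresponding ROC point. The counts in the usage note are invented for the example and are not results from the paper.

```python
def sensitivity(tp, fn):
    """True positive rate: share of covered-mouth images detected as covered."""
    return tp / (tp + fn)

def specificity(tn, fp):
    """True negative rate: share of non-covered images detected as non-covered."""
    return tn / (tn + fp)

def roc_point(tp, fp, tn, fn):
    """Return (1 - specificity, sensitivity), the coordinates of one ROC point."""
    return 1 - specificity(tn, fp), sensitivity(tp, fn)
```

With hypothetical counts of 90 true positives, 10 false negatives, 80 true negatives and 20 false positives, the sensitivity is 0.9 and the specificity is 0.8, which corresponds to an ROC point near (0.2, 0.9).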

The legends shown in Fig. 18(a–c) indicate the classifiers used in each case and their respective outputs.

Fig. 18. ROC curves for LBP operator: (a) rotational invariant (ri); (b) rotational invariant with uniform pattern (riu2); (c) uniform pattern (u2) with zoom-in insert of upper left corner.

6.3. Yawnanalysis

Yawnanalysisisacombinationofmouthopeningmeasurement, mouthcovereddetection,anddistortionsdetection.

Theseoperationsareexaminedevery30s.Formouthopening measurements,thebestthresholdvaluethatrepresentsyawningis

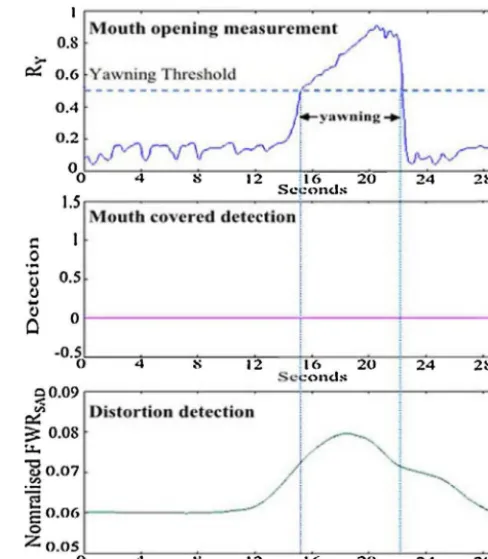

Fig.19.Plotsofyawningdetectionwithmouthnotoccluded.

0.5.BasedontheresultsobtainedandshowninFig.19,formouth coveredclassificationtheLBPuniformpattern(u2)withradius16 andaneuralnetworkclassifierwith5neuronsinthehiddenlayer arechosentodeterminethestatusofFMR.

Genuine yawning images from sequences of 30 video clips from the SFF database are used for evaluation of the algorithm's performance. These videos contain 10 yawning sequences with non-covered mouth scenes, 14 with covered mouth scenes and 6 scenes with both situations present. Examples of the detection results in each situation of yawning are shown in Figs. 19–21.

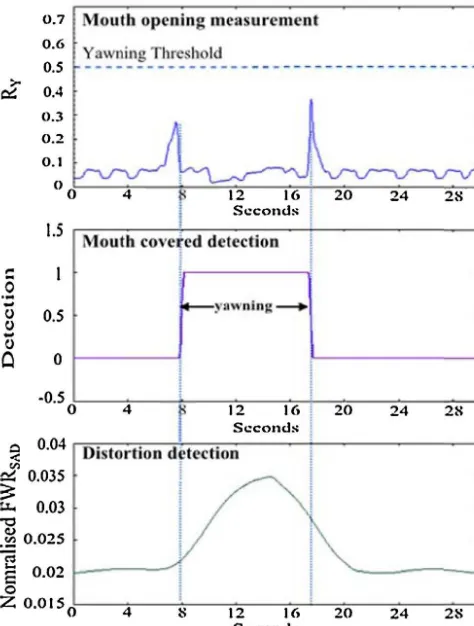

When the mouth is not occluded (Fig. 19), yawning is detected when the height of the mouth opening is equal to or greater than the threshold value. The yawning period is measured from the first intersection point between the threshold value and the ratio $R_Y$ of the mouth opening height to the FMR height. When the $R_Y$ value is below the threshold (Fig. 20), this could be due to the mouth being covered during yawning. In this case, the mouth covered detection part of the system is triggered. If mouth occlusion is detected, facial distortion during the period of occlusion is checked. Yawning is assumed to occur when distortion increases substantially within that period.

When the mouth is opened wide for a short period of time before it is occluded (Fig. 21), the value of $R_Y$ exceeds the threshold value for a short period before it drops quickly. In this case, the mouth occlusion detection will be triggered when the $R_Y$ value drops below the threshold. If occlusion is detected, then the period of yawning is measured from the first intersection of $R_Y$ with the threshold value until the end of the mouth occlusion detection.
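The timing rule for the non-occluded case can be illustrated with a sketch that scans a per-frame sequence of RY values and reports the intervals during which RY stays at or above the threshold. The per-frame list, the frame rate argument and the function name are assumptions of this example; extending an interval through a subsequent occlusion period would additionally need the output of the covered mouth detector.

```python
def yawn_intervals(ry_values, fps, threshold=0.5):
    """Return (start_s, end_s) intervals in seconds where RY >= threshold."""
    intervals, start = [], None
    for frame, ry in enumerate(ry_values):
        if ry >= threshold and start is None:
            start = frame                               # first crossing of the threshold
        elif ry < threshold and start is not None:
            intervals.append((start / fps, frame / fps))
            start = None
    if start is not None:                               # still open at the end of the clip
        intervals.append((start / fps, len(ry_values) / fps))
    return intervals
```

The duration of each interval can then be compared with the minimum mouth opening time to separate yawns from brief mouth openings such as speech.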


Fig. 20. Plots of yawning detection with mouth occluded.

Fig. 21. Plots of yawning detection with mouth initially not occluded but occluded subsequently.

7. Conclusion

A yawn analysis algorithm designed to deal with both occluded and uncovered mouth yawning situations was presented. This algorithm is a combination of techniques for mouth opening measurement, mouth covered detection and distortions detection. The mouth opening is measured based on the darkest region between the lips. The darkest region is segmented using an adaptive threshold which is introduced in this paper. For detecting mouth occlusion, an LBP uniform operator is applied to extract features of the mouth region, and the occlusion is evaluated using a neural network classifier. To ensure that the mouth occlusion is indeed due to yawning and no other reason, the wrinkles of specific regions on the face are measured. Yawning is assumed to have occurred when distortions in these regions are detected.

In the presented yawn analysis algorithm, the two regions FMR and FDR are monitored every 30 s. The analysis results produce the status of yawning, as well as the period of yawning. Genuine sequences of yawning from the SFF database video footage are used for training, testing and evaluating the performance of the developed algorithms. Based on the conducted experiments and results obtained, the overall developed system showed very encouraging performance.

Acknowledgements

This work was supported by the Scottish Sensor Systems Centre (SSSC), the Ministry for Higher Education of Malaysia and Universiti Teknikal Malaysia, Melaka (UTeM). The SFF database development was funded by a Bridging The Gap (BTG) grant from the University of Strathclyde.

The work which led to the development of the SFF database was in collaboration with the School of Psychological and Health Sciences, University of Strathclyde, the Glasgow Sleep Centre, University of Glasgow, and Brooks Bell Ltd.

References

[1] D. f. T. UK, "Reported Road Casualties in Great Britain: 2010 Annual Report", Department for Transport UK, United Kingdom, Statistical Release, 29 September 2011.

[2] P. Jackson, C. Hilditch, A. Holmes, N. Reed, N. Merat, L. Smith, Fatigue and Road Safety: A Critical Analysis of Recent Evidence, Department for Transport, London, 2011, p. 88.

[3] R. Resendes, K.H. Martin, Saving Lives Through Advanced Safety Technology, Federal Highway Administration, US, Washington, DC, 2003.

[4] Daimler, Drowsiness-Detection System Warns Drivers to Prevent Them Falling Asleep Momentarily, Daimler, 2012, Available: http://www.daimler.com/dccom/0-5-1210218-1-1210332-1-0-0-1210228-0-0-135-0-0-0-0-0-0-0-0.html.

[5] M. Michael Littner, C.A. Kushida, D. Dennis Bailey, R.B. Berry, D.G. Davila, M. Hirshkowitz, Practice parameters for the role of actigraphy in the study of sleep and circadian rhythms: an update for 2002, Sleep 26 (2003) 337.

[6] N. Mabbott, ARRB Pro-active fatigue management system, Road Trans. Res. 12 (2003) 91–95.

[7] Y. Lu, Z. Wang, Detecting driver yawning in successive images, in: Bioinformatics and Biomedical Engineering, 2007. ICBBE 2007. The 1st International Conference on, 2007, pp. 581–583.

[8] M. Mohanty, A. Mishra, A. Routray, A non-rigid motion estimation algorithm for yawn detection in human drivers, Int. J. Comput. Vision Rob. 1 (2009) 89–109.

[9] F. Xiao, Y. Bao-Cai, S. Yan-Feng, Yawning detection for monitoring driver fatigue, in: Machine Learning and Cybernetics, 2007 International Conference on, 2007, pp. 664–668.

[10] W. Yao, Y. Liang, M. Du, A real-time lip localization and tacking for lip reading, in: Advanced Computer Theory and Engineering (ICACTE), 2010 Third International Conference on, 2010, V6-363-V6-366.

[11] M. Omidyeganeh, A. Javadtalab, S. Shirmohammadi, Intelligent driver drowsiness detection through fusion of yawning and eye closure, in: Virtual Environments Human–Computer Interfaces and Measurement Systems (VECIMS), 2011 IEEE International Conference on, 2011, pp. 1–6.

ITSC’09. 12th International IEEE Conference on, 2009, pp. 1–6.

[17] Y. Yang, J. Sheng, W. Zhou, The monitoring method of driver's fatigue based on neural network, in: Mechatronics and Automation, 2007. ICMA 2007. International Conference on, 2007, pp. 3555–3559.

[18] H. García, A. Salazar, D. Alvarez, Á. Orozco, Driving fatigue detection using active shape models, Adv. Visual Comput. 6455 (2010) 171–180.

[19] S. Anumas, Kim Soo-chan, Driver fatigue monitoring system using video face images & physiological information, in: Biomedical Engineering International Conference (BMEiCON), 2011, 2012, pp. 125–130.

[20] S. Hachisuka, K. Ishida, T. Enya, M. Kamijo, Facial expression measurement for detecting driver drowsiness, Eng. Psychol. Cogn. Ergon. 6781 (2011) 135–144.

[21] E. Vural, M. Cetin, A. Ercil, G. Littlewort, M. Bartlett, J. Movellan, Drowsy driver detection through facial movement analysis, in: Human–Computer Interaction, 2007, pp. 6–18.

[22] X. Fan, B.-C. Yin, Y.-F. Sun, Dynamic human fatigue detection using feature-level fusion, in: Springer (Ed.), Image and Signal Processing, Springer, 2008, pp. 94–102.

[28] G. Zhao, M. Pietikainen, Dynamic texture recognition using local binary patterns with an application to facial expressions, in: Pattern Analysis and Machine Intelligence, IEEE Transactions on, 2007, pp. 915–928, 29.

[29] T. Ojala, M. Pietikainen, T. Maenpaa, Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, in: Pattern Analysis and Machine Intelligence, IEEE Transactions on, 2002, pp. 971–987, 24.

[30] R.O. Duda, P.E. Hart, Pattern Classification and Scene Analysis, vol. 3, Wiley, New York, NY, 1973.

[31] J.-Y. Zhang, Y. Chen, X.-x. Huang, Edge detection of images based on improved Sobel operator and genetic algorithms, in: Image Analysis and Signal Processing, 2009. IASP 2009. International Conference on, 2009, pp. 31–35.