A Neurally Controlled Computer Game Avatar With Humanlike Behavior

David Gamez, Zafeirios Fountas, Member, IEEE, and Andreas K. Fidjeland, Member, IEEE

Abstract—This paper describes the NeuroBot system, which uses a global workspace architecture, implemented in spiking neurons, to control an avatar within the Unreal Tournament 2004 (UT2004) computer game. This system is designed to display humanlike behavior within UT2004, which provides a good environment for comparing human and embodied AI behavior without the cost and difficulty of full humanoid robots. Using a biologically inspired approach, the architecture is loosely based on theories about the high-level control circuits in the brain, and it is the first neural implementation of a global workspace that has been embodied in a complex dynamic real-time environment. NeuroBot’s humanlike behavior was tested by competing in the 2011 BotPrize competition, in which human judges play UT2004 and rate the humanness of other avatars that are controlled by a human or a bot. NeuroBot came a close second, achieving a humanness rating of 36%, while the most human human reached 67%. We also developed a humanness metric that combines a number of statistical measures of an avatar’s behavior into a single number. In our experiments with this metric, NeuroBot was rated as 33% human, and the most human human achieved 73%.

Index Terms—Benchmarking, botprize, competitions, computer game, evaluation, global workspace, neural networks, serious games, spiking neural networks, Turing test.

I. INTRODUCTION

In 1950, Turing [1] proposed that an imitation game could be used to answer the question of whether a machine can think. In this game, a human judge engages in conversation with a human and a machine and tries to identify the human. The conversation is conducted over a text-only channel, such as a computer console interface, to ensure that the voice or body of the machine does not influence the judge’s decision. While Turing posed his original test as a way of answering the question of whether machines can think, it is now more typically seen as a way of evaluating the extent to which machines have achieved human-level intelligence.

Since the Turing test was proposed, there has been a substantial amount of work on programs (chatterbots) that attempt to pass the test by imitating the conversational behavior of humans. Many of these compete in the annual Loebner Prize competition [2]. None of these programs has passed the Turing test so far, and they often rely on conversational tricks for their apparent realism, for example, parrying the interlocutor with a question when they cannot process or understand the input [3].

Manuscript received February 19, 2012; revised July 16, 2012; accepted October 24, 2012. Date of publication November 20, 2012; date of current version March 13, 2013. This work was supported by the Engineering and Physical Sciences Research Council (EPSRC) under Grant EP/F033516/1.

D. Gamez was with Imperial College London, London SW7 2AZ, U.K. He is now with the University of Sussex, Brighton, East Sussex BN1 9RH, U.K.

Z. Fountas and A. K. Fidjeland are with Imperial College London, London SW7 2AZ, U.K. (e-mail: andreas.fi[email protected]).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCIAIG.2012.2228483

One reason why chatterbots have failed to pass the Turing test is that they are not embodied in the world. For example, a chatterbot can only respond to the question “Describe what you see on your left” if the programmers of the chatterbot put in the answer when they created the program. This lack of embodiment also leads to a constant need for dissimulation on the part of the chatterbot: when asked about its last illness, it has to make something up. There is also an emerging consensus within the AI community that many of the things that we do with our bodies, such as learning a new motor action, require and exhibit intelligence, and most AI research now focuses on intelligent actions in the world, rather than conversation.

This emphasis on embodied AI has led to a number of variations of the Turing test. In addition to its bronze medal for humanlike conversation, the Loebner Prize has a gold medal for a system that can imitate the body and voice of a human. Harnad [4] has put forward a T3 version of the Turing test, in which a system has to be indistinguishable from a human in its external behavior for a lifetime, and a T4 version in which a machine has to be indistinguishable in both external and internal function. The key challenge with these more advanced Turing tests is that it takes a tremendous amount of effort to produce and control a convincing robot body with similar degrees of freedom to the human body. Although some progress has been made with the production of robots that closely mimic the external appearance of humans [5], and people are starting to build robots that are closely modeled on the internal structure of the human body [6], these robots are available to very few researchers and the problems of controlling such robots in a humanlike manner are only just starting to be addressed.

A more realistic way of testing humanlike intelligence in an approximation to an embodied setting has been proposed by Hingston [7], who created the BotPrize competition [8]. In this competition, software programs (or bots) compete to provide the most humanlike control of avatars within the Unreal Tournament 2004 (UT2004) computer game environment. Human judges play the game and rate the humanness of other avatars, which might be controlled by a human or a bot, with the most humanlike bot winning the competition. If a bot became indistinguishable from the human players, then it might be considered to have human-level intelligence within the UT2004 environment according to Turing [1]. This type of work has practical applications, since it is more challenging and rewarding to play computer games against opponents that display humanlike behavior. Humanlike bot behavior can also be applied in


of the brain. The high-level coordination and control of our system is carried out using a global workspace architecture [9], a popular theory about conscious control in the brain that explains how a serial procession of conscious states could be produced by interactions between multiple parallel processes. This global workspace coordinates the interactions between specialized modules that operate in parallel and compete to gain access to the global workspace. Both the workspace and the specialized modules are implemented in biologically inspired circuits of spiking neurons, with CUDA hardware acceleration on a graphics card being used to simulate the network’s 20 000 neurons and 1.5 million connections in close to real time.

The humanness of NeuroBot’s behavior was tested by competing in the 2011 BotPrize competition and by participating in a conference panel session on the Turing test. These events showed that human behavior within UT2004 varies considerably and that human judgments are not a particularly reliable way of identifying human behavior within this environment. To explore this further, we developed a humanness metric, ᛗ,² that combines a number of statistical measures of an avatar’s behavior into a single number. This was used to compare NeuroBot with human subjects as well as with the Hunter bot supplied with the Pogamut bot toolkit for UT2004 [10]. In the future, ᛗ could be used to discriminate between human- and bot-controlled avatars, and to evolve systems with humanlike behavior.

The first part of this paper provides background information about the global workspace architecture and describes previous related work on spiking neural networks, robotics, and humanlike control of computer game avatars (Section II). Next, the architecture of our system is described, including the structure of the global workspace, the specialized parallel processors, and the spike conversion mechanisms (Section III). We then cover the implementation of our system (Section IV) and outline the methods that we used to evaluate the humanness of NeuroBot’s behavior (Section V). Finally, we conclude with a discussion and plans for future work.

II. BACKGROUND

A. Global Workspace

The global workspace architecture wasfirst put forward by Baars [9] as a model of consciousness in which separate parallel

1A download of the latest version of NeuroBot (binary and source code) is available here: http://www.doc.ic.ac.uk/zf509/neurobot.html. This includes a C++ wrapper for interfacing with GameBots.

2 (mannaz) means “man” in the runic alphabet.

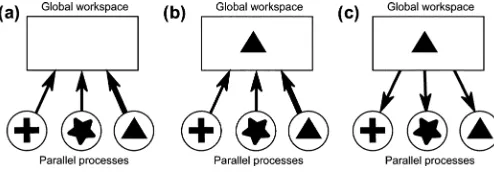

connection strengths from the parallel processes to the global workspace or by a saliency value attached to each parallel process. (b) The process on the right wins and its information (represented as a triangle) is copied into the global workspace. (c) Information in the global workspace is broadcast back to the par-allel processes and the cycle of competition and broadcast begins again. More information about the abstract global workspace architecture can be found in [9] and a detailed description of a neural implementation is available in [11].

processes are coordinated to produce a single stream of control. The basic idea is that the parallel processes compete to place their information in the global workspace. The winning infor-mation is then broadcast to all of the other processes, which ini-tiates another cycle of competition and broadcast (Fig. 1). Dif-ferent types of process are specialized for difDif-ferent tasks, such as analyzing perceptual information or carrying out actions, and processes can also form coalitions that work toward a common goal. These mechanisms enable global workspace theory to ac-count for the ability of consciousness to handle novel situations, its serial procession of states, and the transition of information between consciousness and unconsciousness.
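The competition-and-broadcast cycle can be illustrated with a minimal Python sketch. This is an abstract illustration only, not the spiking implementation used in NeuroBot: the `Process` structure, the `workspace_cycle` function, and the example processes and salience values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Process:
    """A specialized parallel process (hypothetical structure)."""
    name: str
    # Maps the previous broadcast to (salience, proposed content).
    propose: Callable[[Optional[str]], Tuple[float, str]]


def workspace_cycle(processes: List[Process], broadcast: Optional[str]):
    """Run one cycle: every process sees the last broadcast and proposes
    content with a salience value; the most salient proposal wins."""
    proposals = [(p.propose(broadcast), p.name) for p in processes]
    (salience, content), winner = max(proposals, key=lambda item: item[0][0])
    return winner, content


# Two toy processes with made-up salience values.
processes = [
    Process("see_enemy",
            lambda b: (0.1, "enemy ahead") if b == "enemy ahead"
            else (0.9, "enemy ahead")),
    Process("explore", lambda b: (0.5, "nothing of interest")),
]

history = []
content = None
for _ in range(3):
    # The winning content becomes the broadcast for the next cycle.
    winner, content = workspace_cycle(processes, content)
    history.append(winner)
```

Because each process reacts to the previous broadcast, control alternates between the two toy processes, giving a serial sequence of workspace states from parallel competitors.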

A number of neural models of the global workspace architecture have been built to investigate how it might be implemented in the brain. Dehaene et al. created a neural simulation to study how a global workspace and specialized processes interact during the Stroop task and the attentional blink [12]–[14], and a related model was constructed by Zylberberg et al. [15]. A brain-inspired cognitive architecture based on global workspace theory has been developed by Shanahan [16], which controlled a simulated wheeled robot, and Shanahan [11] built a global workspace model using simulated spiking neurons, which showed how a biologically plausible implementation of the global workspace architecture could move through a serial progression of stable states. More recently, Shanahan [17] hypothesized that neural synchronization could be the mechanism that implements a global workspace in the brain, and he is developing neural and oscillator models of how this could work.

successful software system based on a global workspace architecture is CERA–CRANIUM [21], which won the BotPrize in 2010 and the humanlike bots competition at the 2011 IEEE Congress on Evolutionary Computation. CERA–CRANIUM uses two global workspaces: the first receives simple percepts, aggregates them into more complex percepts, and passes them on to the second workspace. In the second workspace, the complex percepts are used to generate mission percepts that set the high-level goals of the system.

While programmatic implementations of the global workspace architecture have been used to solve challenging problems in real time, with the exception of [16], the neural implementations have typically been disembodied models that are designed to show how a global workspace architecture might be implemented in the brain. One aim of the research described in this paper is to close the gap between successful programmatic implementations and the models that have been developed in neuroscience: using spiking neurons to implement a global workspace that controls a system within a challenging real-time environment. Addressing this type of problem with a neural architecture will also help to clarify how a global workspace might be implemented in the brain.

B. Spiking Neural Networks and Robotics

The work described in this paper takes place against a background of previous research on the control of robots using biologically inspired neural networks. There has been a substantial amount of research in this area, with a particularly good example being the work of Krichmar et al. [22], who used a network of 90 000 rate-based neuronal units and 1.4 million connections to control a wheeled robot. This network included models of the visual system and hippocampus, and it used learning to solve the water maze task. The work that has been done on the control of robots using spiking neural networks includes a network with 18 000 neurons and 700 000 connections developed by Gamez [23], which controlled the eye movements of a virtual robot and used a learned association between motor output and visual input to reason about future actions. In other work, Bouganis and Shanahan [24] developed a spiking network that autonomously learned to control a robot arm after an initial period of motor babbling; there has been some research on the evolution of spiking neural networks to control robots [25], and a self-organizing spiking neural network for robot navigation has been developed by Nichols et al. [26]. As far as we are aware, none of the previous work in this area has used a global workspace implemented in spiking neurons to control a computer game avatar.

C. Humanlike Control of Computer Game Avatars

A variety of approaches have been used to produce humanlike behavior in computer games, such as UT2004 and Quake. For example, a substantial amount of research has been carried out on evolutionary approaches [27]–[32] and many groups have used machine learning to copy human behavior [33]–[37]. Other approaches include a self-organizing neural network with reinforcement learning developed by Wang et al. [38], and UT²’s hybrid system, which uses an evolved neural network to control combat behavior and replays prerecorded human paths when the bot gets stuck [39]. Of particular relevance to NeuroBot is the hierarchical learning-based controller developed by van Hoorn et al. [40]. In this system, the execution of different tasks is carried out by specialized low-level modules, and a behavior selector chooses which controller is in control of the bot. Both the specialized modules and the behavior selector are neural networks, which were developed using an evolutionary algorithm.

Related work on the evaluation of humanlike behavior in computer games has been carried out by Chang et al. [41], who developed a series of metrics to compare the behavior of human and artificial agents in the Social Ultimatum game. A trained neural gas has been used to distinguish between human- and bot-controlled avatars in UT2004 [37], and Pao et al. [42] developed a number of measures (including path entropy) for discriminating between human and bot paths in Quake 2.

III. NEURONAL GLOBAL WORKSPACE

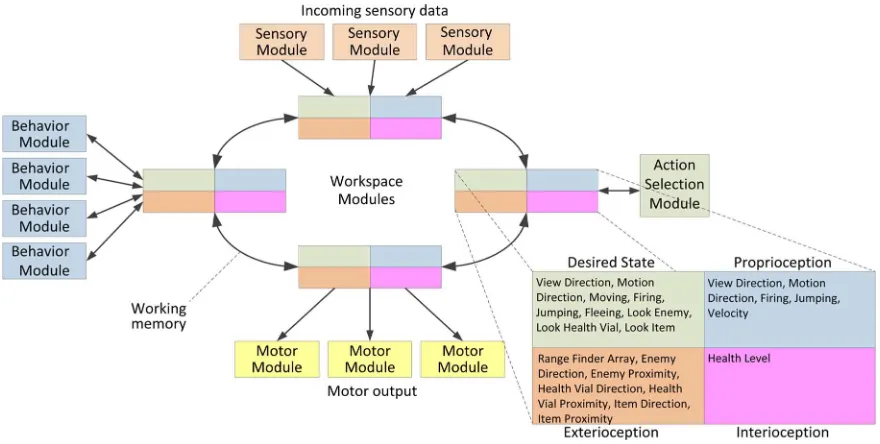

This section describes the global workspace architecture shown in Fig. 2, which enables NeuroBot to switch between different behaviors and deliver humanlike avatar control within UT2004. Details about the implementation of this architecture in a network of spiking neurons are given in Section IV. Because of space constraints, only a brief description is given of most of the specialized modules, with full details available in [43].

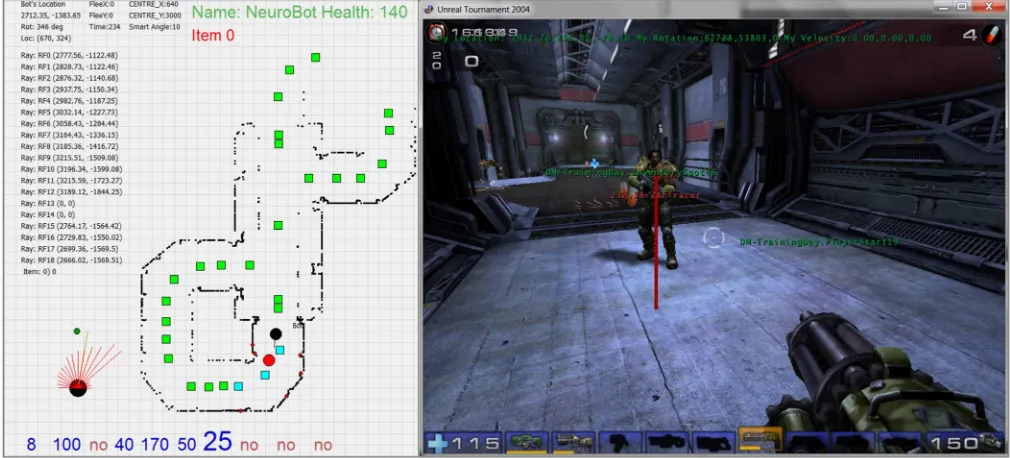

A. Sensory Input and Output

Instead of the rich visual interface provided to human players, bots have direct access to the avatar’s location, direction, and velocity, as well as the position of all currently visible items, players, and navigation points. These data are provided in an absolute 3-D coordinate frame, as shown in Fig. 3. Bots can query the spatial structure of the avatar’s immediate environment using range-finder data, and they also have access to proprioceptive data (crouching, standing, jumping, shooting) and interoceptive data (health, adrenaline level, armor), which are provided as scalar values. The avatar can be controlled by sending commands specifying its movement, rotation, shooting, and jumping.

To use these data within our system it is necessary to convert the data from the UT2004 environment into patterns of spikes that are passed to the neural network. To begin with, a number of preprocessing steps are applied to the input data. First, information about an enemy player or other object is converted into a direction and distance measurement that is relative to the avatar’s location. Second, a measure of pain is computed as the rate of decrease of health plus the number of hit points. Third, the velocity of the avatar is calculated and a series of predefined cast rays are converted into a simulated range-finder sensor. Fourth, each piece of information is transformed into a number in the range 0–1 that is multiplied by a weight.
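The first, second, and fourth preprocessing steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than NeuroBot's own code: the function names, the restriction to the horizontal plane, the use of degrees for the yaw angle, and the value ranges passed to `normalize` are all hypothetical.

```python
import math


def relative_bearing_and_distance(avatar_xy, avatar_yaw_deg, target_xy):
    """Convert an absolute target position into an egocentric bearing
    (degrees, relative to the view direction) and a distance.

    Assumption: only the horizontal plane is considered.
    """
    dx = target_xy[0] - avatar_xy[0]
    dy = target_xy[1] - avatar_xy[1]
    distance = math.hypot(dx, dy)
    absolute_deg = math.degrees(math.atan2(dy, dx))
    # Wrap the bearing into [-180, 180).
    bearing = (absolute_deg - avatar_yaw_deg + 180.0) % 360.0 - 180.0
    return bearing, distance


def pain(health_before, health_after, dt, hit_points):
    """Pain as the rate of decrease of health plus the number of hit points."""
    rate_of_decrease = max(0.0, (health_before - health_after) / dt)
    return rate_of_decrease + hit_points


def normalize(value, lo, hi, weight=1.0):
    """Map a value into the range 0-1 over an assumed [lo, hi] interval,
    then multiply it by a weight."""
    unit = min(1.0, max(0.0, (value - lo) / (hi - lo)))
    return unit * weight


bearing, distance = relative_bearing_and_distance((0.0, 0.0), 90.0, (0.0, 10.0))
```

In this sketch a target straight ahead of the avatar has a bearing of zero regardless of the avatar's absolute orientation, which is the property the egocentric representation relies on.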

Fig. 2. NeuroBot’s global workspace architecture.

temporal or spatial precision is required, a population of neurons is used to represent the encoded value. For example, spatial data about the avatar’s environment are represented using an egocentric 1-D neuron population vector in which each neuron represents a direction in a 360° view around the avatar, with the directions expressed relative to the current view direction, and the salience indicated by the firing rate [Fig. 4(a)].

Two different approaches were used to decode the spiking output from the network into scalar motor commands. Simple commands, such as shoot or jump, were extracted by calculating the average firing rate of the corresponding neurons over a small time window. The angles specifying motion and view directions were encoded by populations of neurons and extracted in the following way.

1) Find all contiguous groups of neurons of the population vector, where the firing rate over a small recent time window of each neuron within the group is above a threshold (half the overall average firing rate).

2) For each such group, create a new population vector that inherits only the firing rates of neurons within the contiguous group with rates above the threshold.

3) Calculate the second-order polynomial regression of each new population vector.

4) Calculate the angle where

An illustration of this algorithm is given in Fig. 4(b), which shows significant activity in four different areas of the population vector encoding the desired motion direction. This method enables our system to accurately identify the center of the most active group of motor output neurons, implementing a winner-takes-all mechanism that ignores small activity peaks in the rest of the population vector.
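The decoding steps can be sketched in Python as follows. The final step is an assumption: the angle is taken as the vertex of the fitted parabola with the highest peak, which is consistent with the winner-takes-all behavior described above. The names `fit_quadratic` and `decode_angle` are hypothetical, and the population is treated as a linear array, so a group that wraps around the ends of the vector is not merged.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c via the normal equations."""
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[4], s[3], s[2]], [s[3], s[2], s[1]], [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    d = det3(m)
    coeffs = []
    for col in range(3):  # Cramer's rule, one coefficient per column
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        coeffs.append(det3(mc) / d)
    return tuple(coeffs)


def decode_angle(rates, angles):
    """Return the angle at the center of the most active group, or None."""
    threshold = 0.5 * sum(rates) / len(rates)
    # Step 1: contiguous runs of neurons whose rate exceeds the threshold.
    groups, current = [], []
    for i, r in enumerate(rates):
        if r > threshold:
            current.append(i)
        elif current:
            groups.append(current)
            current = []
    if current:
        groups.append(current)

    best_angle, best_peak = None, float("-inf")
    for group in groups:
        if len(group) < 3:
            # Too few points for a quadratic: use the strongest neuron.
            i = max(group, key=lambda k: rates[k])
            angle, peak = angles[i], rates[i]
        else:
            # Steps 2 and 3: fit a parabola to this group's rates only.
            center = sum(angles[i] for i in group) / len(group)
            xs = [angles[i] - center for i in group]
            ys = [rates[i] for i in group]
            a, b, c = fit_quadratic(xs, ys)
            if a < 0:
                x_peak = min(max(-b / (2 * a), xs[0]), xs[-1])
            else:
                x_peak = xs[ys.index(max(ys))]
            angle = center + x_peak
            peak = a * x_peak ** 2 + b * x_peak + c
        # Step 4 (assumed): keep the group with the highest fitted peak.
        if peak > best_peak:
            best_angle, best_peak = angle, peak
    return best_angle


angles = list(range(-180, 180, 10))
rates = [0.0] * 36
rates[5:8] = [10.0, 20.0, 10.0]                    # small peak
rates[26:31] = [20.0, 60.0, 80.0, 60.0, 20.0]      # dominant peak
decoded = decode_angle(rates, angles)
```

Fitting a parabola to each group gives a sub-neuron estimate of the peak position, so the decoded angle is not restricted to the preferred directions of individual neurons.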

B. Global Workspace

The global workspace (Fig. 2) facilitates cooperation and competition between the behavior modules. In combination with the action selection module, it ensures that only a single behavior module, or a coherent coalition of behavior modules, is in control of the motor output. Based on the workspace model in [11], the architecture consists of four layers of neurons linked with topographic excitatory connections (to preserve patterns of activation) and diffuse inhibitory connections (to decay patterns of activation over time). The information in each layer is identical, and the four layers are used as a kind of working memory that enables information to reverberate while the modules compete to broadcast their information. Each workspace layer is divided into areas that represent four different types of globally broadcast information: 1) desired body state (the output data that are sent to control the next position of the avatar); 2) proprioceptive data (the avatar’s current position and movements); 3) exteroceptive data (the state of the observable environment); and 4) interoceptive data (the internal state of the avatar).

C. Behaviors

The behavior of NeuroBot is determined collectively by a number of specialized modules, which read sensory data from the global workspace and compete, either individually or as part of a coalition, to control the avatar. The competition is carried out by comparing the activity of the salience layers in each module, with the most salient module winning access to the workspace and being executed. The action selection module ensures that contradictory commands, such as jumping and not jumping, cannot be carried out. The design of many of the modules was inspired by Braitenberg vehicles [46], which use patterns of excitatory and inhibitory wiring to produce attraction and repulsion behaviors that have good tolerance to noise. The following paragraphs outline NeuroBot’s current behaviors.

a) Moving: This determines whether the avatar moves or

Fig. 3. Game environment. On the left is a map showing the data that are available to bots through the GameBots 2004 server (see Section IV-B). This includes the location of the avatar (black circle), other players (red circles), navigation points in view (cyan squares) and out of view (green squares), and wall locations gathered from ray tracing. On the right is the in-game view that is displayed to human players.

movement. This module contains a layer of neurons with a low level of activity, which send spikes to the salience layer.

b) Navigation: This module (Fig. 5) controls the avatar’s direction of motion, enabling it to follow walls and avoid collisions. A coalition between the moving and navigation modules can cause the avatar to move in a particular direction.

c) Exploration: In situations without any observable enemies or other interesting items, this module enables the avatar to exhibit exploratory behavior. The architecture here is similar to the navigation module, except that the activation of its salience layer is proportional to the activation of neurons representing the level of health, which avoids exploratory behaviors when the avatar’s health is low.

d) Firing: This module enables the avatar to attack or defend itself when threatened by enemies. Using similar mechanisms to the previous cases, the salience layer is activated only when the observed direction of the enemy is aligned with the current view. To achieve this, only the central neurons of the egocentric neuron population vector representing the direction of the enemy are connected to the inhibitory layer, and the activation of the salience layer is proportional to the enemy proximity and the level of health. Hence, if an enemy is close and the avatar has sufficient health, it will fire.

e) Jumping: The salience of this module increases when the moving module is active and there is low horizontal velocity, causing the avatar to jump over an obstacle or escape from a situation in which it is stuck. This module also increases in salience when an approaching enemy is observed. In this situation, jumping makes it harder for the enemy to hit the avatar.

f) Chasing: This module causes the avatar to follow enemies that try to flee, for example, due to falling health. The salience layer is activated when the avatar’s own health is good and an enemy is nearby or moving away, at which point the chasing module drives the desired direction of view toward the fleeing player and influences the desire to look in the direction of this player. The aggregate behavior depends on the salience of the moving and firing modules. For example, when this module is cooperating with the moving module, the avatar will start chasing the enemy. On the other hand, if the view direction is aligned with the direction of the enemy, then the salience of the firing module will increase and the avatar will start shooting.

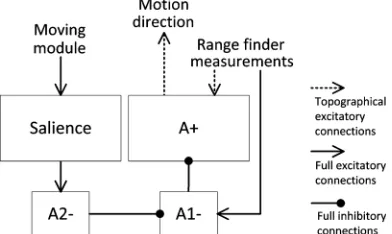

g) Fleeing: The fleeing module (Fig. 6) increases in salience when an enemy is near and the level of health is low. When it is active, it drives the motion direction in the opposite direction to the source of immediate danger.

h) Recuperation: The recuperation module activates when health is low and the location of health vials is known. When it is active, this module drives the view and motion direction toward observed health vials. This module usually forms a coalition with the fleeing and moving modules, resulting in an active fleeing behavior that looks for health vials while it tries to avoid the attacking enemy.

i) Item Collection: This controls the approach and collection of items. When an item is noticed, the salience layer becomes active and the module aligns the avatar’s view direction with the item. When this module forms a coalition with the moving and navigation modules, the avatar will approach and collect the target item.

j) U-Turn: This module enables the avatar to escape from situations in which it is surrounded by walls and cannot decide which direction to turn. This usually means that the avatar is trapped and an obvious approach is to reverse the direction of motion. This module is also activated when the avatar is being attacked by an unseen enemy.

D. Action Selection

Fig. 4. Encoding/decoding of spatial data using an egocentric 1-D population code. A vector of neurons represents a range of spatial directions with respect to the current direction of view. (a) Example of how firing activity in a neuron population encodes the presence of a wall to the right, where firing activity is inversely proportional to distance. (b) Example of decoding the value that represents the desired motion direction.

Fig. 5. Navigation module. Workspace neurons representing range-finder measurements are topographically connected to an excitatory layer A, which passes the pattern to the desired motion direction. This causes the avatar to move in the direction where the most empty space has been observed. The same workspace neurons also excite the inhibitory layer A1, which inhibits A. Thus, in a situation where the salience layer is not active, A is not able to send spikes back to the workspace node. The salience layer is activated when the moving module is also active, when it sends spikes to the inhibitory layer A2, which then inhibits A1. As a result, A1 becomes unable to stop A from sending the desired motion direction back to the workspace, and the entire module becomes active.

selecting modules based on the activity in their salience layers and preventing contradictory actions from being implemented simultaneously. The internal structure of this module consists of an excitatory input layer and an inhibitory layer of the same size. The input layer is connected to the inhibitory layer, and it receives connections from the workspace neurons that hold information about the desired state of the system. These workspace neurons also receive connections from the inhibitory layer, as shown in Fig. 7.

Fig. 6. Fleeing module. The enemy direction is diametrically connected to a layer of excitatory neurons A, which sends back to the workspace node the direction that the avatar should point in order to flee from the enemy. The enemy direction also inhibits the A layer through the inhibitory layer A1 to disable the broadcast of this vector in cases where the salience layer is not active. The level of activation of the salience layer also depends on enemy proximity and the level of health.

IV. IMPLEMENTATION

Fig. 7. Action selection module.

could learn are given in Section VI. A custom C++ interface was created to communicate with the GameBots 2004 server.

A. Spiking Neural Network

Spiking neural networks were selected for this system because they have a number of advantages over rate-based models [47], [48], including their efficient representation of information in the form of a temporal code [49] and their ability to use local spike-time-dependent plasticity learning rules to identify relationships between events [50]. It is also being increasingly recognized that precise spike timing plays an important role in the biological brain [51]–[53], and the implementation in our system of spiking neurons enabled us to take advantage of high-performance CUDA-accelerated spiking neural simulators that can model large numbers of neurons and connections in real time [54], [55]. The feasibility of this approach has been demonstrated by other global workspace implementations based on spiking neurons [11], [13]–[15], which provided a source of inspiration for the current system.

The neurons in the network were simulated using the Izhikevich model [56], which accurately reproduces biological neurons’ spiking response without modeling the ionic flows in detail. This model is computationally cheap and suitable for large-scale network implementations [57], [58]. The parameters in Izhikevich’s model can be set to reproduce the behavior of different types of neurons, which vary in terms of their response characteristics. In our simulation, we used two types of neurons, excitatory and inhibitory, which have different behaviors and connection characteristics.
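The Izhikevich model can be sketched in a few lines of Python. The update equations are those of the published model; the parameter sets used below are the commonly cited regular-spiking and fast-spiking values, and treating them as the excitatory and inhibitory types is an assumption, since the parameters used in NeuroBot are not given here. The function name, the constant input current, and the 1-ms step are also illustrative choices.

```python
def simulate_izhikevich(a, b, c, d, current, steps=1000, dt=1.0):
    """Simulate one Izhikevich neuron with constant input current.

    Model: v' = 0.04 v^2 + 5 v + 140 - u + I,  u' = a (b v - u),
    with the reset v <- c, u <- u + d whenever v reaches 30 mV.
    Returns the list of spike times in ms.
    """
    v = c          # membrane potential (mV)
    u = b * v      # recovery variable
    spikes = []
    for step in range(steps):
        # Two half-steps for v improve numerical stability.
        for _ in range(2):
            v += 0.5 * dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:
            spikes.append(step * dt)
            v = c
            u += d
    return spikes


# Commonly cited parameter sets (assumed here, not taken from NeuroBot).
REGULAR_SPIKING = dict(a=0.02, b=0.2, c=-65.0, d=8.0)   # excitatory-like
FAST_SPIKING = dict(a=0.1, b=0.2, c=-65.0, d=2.0)       # inhibitory-like

excitatory_spikes = simulate_izhikevich(current=10.0, **REGULAR_SPIKING)
inhibitory_spikes = simulate_izhikevich(current=10.0, **FAST_SPIKING)
resting_spikes = simulate_izhikevich(current=0.0, **REGULAR_SPIKING)
```

Each neuron needs only two state variables and a handful of arithmetic operations per step, which is what makes the model attractive for large networks simulated on parallel hardware.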

The spiking neural network was simulated using the NeMo spiking neural network simulator [54], [59], which was de-veloped by some of the authors of this paper and is primarily intended for computational neuroscience research. NeMo is a high-performance simulator that runs parallel simulations on graphics processing units (GPUs), which provide a high level of parallelism and very high memory bandwidth. The use of GPUs makes the simulator eminently suitable for implementing neu-rally based cognitive game characters infirst-person shooters

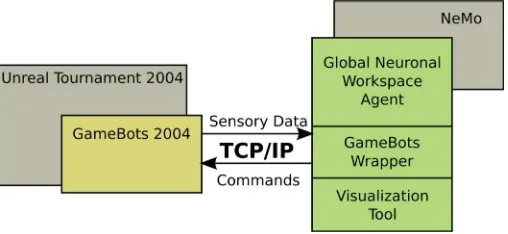

Fig. 8. System overview.

and similar games since the required parallel hardware is typically already present on the player’s computer.3

NeMo updates the state of the neural network in a discrete-time simulation with a 1-ms time step. While NeMo can simulate the NeuroBot network of 20 000 neurons and 1.5 million connections in real time on a high-end consumer graphics card, the NeuroBot network typically ran using a 5-ms time step, effectively simulating the network at a fifth of the normal speed. This was mainly due to the computing requirements of its other components. The parameters of the network were adjusted to produce the desired behavior at this update rate.

B. UT2004 Interface

Our system uses sockets to communicate with the GameBots 2004 server [60], which interfaces with UT2004 (Fig. 8). In the case of a bot connection, GameBots sends synchronous and asynchronous messages containing information about the state of the world, and it receives commands that control the avatar in its environment. To facilitate this communication, we created a C++ wrapper for GameBots 2004, which contains a syntactic

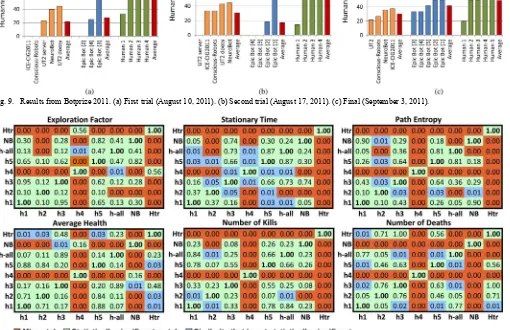

Fig. 9. Results from Botprize 2011. (a) First trial (August 10, 2011). (b) Second trial (August 17, 2011). (c) Final (September 3, 2011).

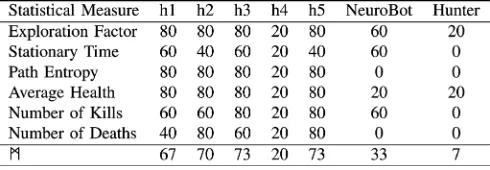

Fig. 10. Pairwise similarity betweenfive human subjects (h1–5), the average of the human subjects (h-all), NeuroBot (NB), and Hunter (Htr) for the different statistical measures. The numbers are the -values that were computed from the data recorded across the runs. A -value greater than 0.05 indicates that there is no statistically significant difference between the two sets of measurements.

analyzer that parses the GameBots messages and updates the attributes of the corresponding objects that form the system’s representation of the world. The system then converts the gath-ered information into spikes that are passed into the network, and spikes from the network are converted back into scalar vales that are sent to the GameBots server. The communication loop with GameBots is asynchronous and runs independently of the neural network update.

V. MEASUREMENTS OF NEUROBOT’S HUMANLIKE BEHAVIOR

The humanness of NeuroBot’s behavior was evaluated in three ways: by competing in the 2011 Botprize competition, by participating in a conference panel session, and by using statistical measures to compare the behavior of avatars controlled by humans and NeuroBot.

A. Human Judgements About NeuroBot’s Behavior

The humanlike behavior of NeuroBot was first evaluated by competing in the 2011 Botprize competition [8]. In this competition, human judges play the game and rate the humanness of other avatars, which might be controlled by a human or a bot, with the most humanlike bot winning the competition. The 2011 Botprize competition consisted of two trial rounds, which were mainly intended to establish that everything was working as expected, and the actual competition. We have included the results from the trials as well as the final competition to give a better idea about the variability.

The results shown in Fig. 9 indicate that NeuroBot was a highly ranked competitor, achieving first or second place in the trials and a close second in the final. In the last round of the

We also carried out an informal evaluation of NeuroBot at the 2011 SGAI International Conference on Artificial Intelligence as part of a panel session on the Turing test. An audience of around 50 conference delegates was shown an avatar controlled by NeuroBot on one randomly selected screen and an avatar controlled by a human on another randomly selected screen. At the end of each 5-min round the audience was asked to vote on which of the avatars was controlled by a human and which was controlled by a bot and to explain the reasons for their choice. In the first round, one avatar was controlled by an amateur human player, and the majority of the audience incorrectly believed that the NeuroBot avatar was being controlled by a human. In the second round, the other avatar was controlled by a skilled player and the majority of the audience correctly identified the human-controlled avatar. In the second case, many audience members reported that their decision was based on information supplied by the chair that the human player in the second round was highly skilled, which led them to select the most competent avatar. One audience member reported that his correct identification of the human player had been based on minor glitches in NeuroBot’s behavior.

These evaluations suggest that humans often make incorrect judgements about whether an avatar is being controlled by a human or a bot and they are often influenced by the skill level of the player, which is highly variable between humans. In the Botprize competition, there is a further problem that the humans are carrying out a double role in which they are supposed to be playing the game as normal players while they make judgements about the behavior of the bots. These roles are to some extent contradictory since accurate judgements are likely to depend on close observation of other players from a relatively safe place in a static position. This will tend to reduce the number of judgements that are made about judges’ humanness, and make human players behave differently from human judges.4

B. Statistical Measures of Humanlike Behavior

The variability of human judgements and human behavior led us to develop a number of statistical measures of avatars’ behavior in UT2004, which were used to compare the behavior of NeuroBot with humans and to explore the behavior differences between human players.

• Exploration factor (EF): While humans smoothly navigate around UT2004 using the rich visual interface, avatars guided by range-finder data are likely to get stuck on walls and corners and fail to explore large areas of the game environment; for example, a wall-following bot might endlessly circle a block or column. The EF measure was developed to quantify the extent to which an avatar visits all of the available space in its environment.

A GameBots map in UT2004 has predefined navigation points that are spread throughout the accessible areas and placed in strategic positions to help simple software bots navigate. The first stage in the calculation of EF is a Voronoi tessellation of the map, which divides it into navigation areas, which contain the points that are

4 Perhaps this is why the winning ICE–CIG2011 bot was based on human judges, not human players [37].

closest to each navigation point. EF is then averaged over a number of time periods as follows:

$$\mathrm{EF} = \frac{V}{N} \cdot \frac{1}{n} \sum_{i=1}^{n} \frac{v_i}{t_i + 1}$$

where $v_i$ is the number of different navigation areas visited in time period $i$; $t_i$ is the number of transitions between navigation areas in time period $i$ (this is incremented by 1 to ensure that EF lies between 0 and 1); $V$ is the number of different navigation areas visited in the entire testing period; $N$ is the total number of navigation areas in the map, which is the same as the number of navigation points; and $n$ is the number of time periods over which EF is calculated. In our experiments, each time period was 1 min in duration. EF has a minimum of 0 and a maximum of 1. If a player visits all of the navigation areas once, its exploration factor will be 1, which means that the avatar has followed the best possible path to explore the map. Contrariwise, if the avatar alternates between two navigation areas in a much larger map, then the total number of visited navigation areas will be 2 and the exploration factor will be close to 0. If the player visits more navigation areas, but most of the time alternates between two navigation areas, then the average over the time periods will tend toward 0, and the exploration factor will again be close to 0.

• Stationary time: It is likely that human-controlled avatars will exhibit characteristic patterns of resting and movement that will differentiate them from bots. The total amount of time that the avatar was stationary during each round was recorded to determine if this is a useful measure.

• Path entropy: It is likely that human players will exhibit more variability in their movements, being attracted toward salient features of their environment, such as doors, staircases, or objects, whereas bots are more likely to exhibit stereotyped movements that are linked to the game’s navigation points. Path entropy was measured by dividing the map into squares and recording the probability that the avatar would be in each square during each round [42]. The information entropy was then calculated using the standard formula

$$H = -\sum_{i} p_i \log p_i$$

where $p_i$ is the probability that the avatar is in square $i$.

Fig. 11. Typical paths recorded from (a) human, (b) NeuroBot, and (c) Hunter as well as the duration and location of each stop.

A number of other statistical measures were tried, which there was not space to present in this paper. These included average enemy distance, number of jumps, average wall distance, number of items taken, and shooting time. Visualization of the avatars’ paths and their stopping time was also used to compare the behavior of human- and NeuroBot-controlled avatars.
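To make the exploration factor and path entropy measures more concrete, the sketch below shows one way they might be computed from logged data. It is not the authors' implementation. It assumes that EF is the fraction of navigation areas visited over the whole test multiplied by the per-period average of distinct areas divided by transitions plus one (a multiplicative form inferred from the worked examples in the text), that the avatar's navigation area and position are sampled at regular intervals, and that entropy is measured in bits. The function names, the `cell_size` parameter, and the input layout are hypothetical.

```python
import math
from collections import Counter


def exploration_factor(periods, total_areas):
    """EF from per-period sequences of visited navigation-area ids.

    Assumed form: (areas visited overall / total areas) multiplied by the
    mean over periods of (distinct areas / (transitions + 1)).
    """
    ratios = []
    visited_overall = set()
    for areas in periods:
        distinct = len(set(areas))
        # A transition is a change of navigation area between samples.
        transitions = sum(1 for a, b in zip(areas, areas[1:]) if a != b)
        ratios.append(distinct / (transitions + 1))
        visited_overall.update(areas)
    coverage = len(visited_overall) / total_areas
    return coverage * sum(ratios) / len(ratios)


def path_entropy(positions, cell_size):
    """Shannon entropy (bits) of the avatar's occupancy of square grid cells."""
    cells = Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size))
        for x, y in positions
    )
    total = sum(cells.values())
    return -sum((n / total) * math.log2(n / total) for n in cells.values())


# Visiting each of four areas exactly once gives the maximum value.
ef_full = exploration_factor([[0, 1, 2, 3]], total_areas=4)       # 1.0
# Alternating between two areas of a 100-area map gives a value near 0.
ef_stuck = exploration_factor([[0, 1] * 50], total_areas=100)
# Equal time in four different squares gives 2 bits of path entropy.
h = path_entropy([(0.5, 0.5), (1.5, 0.5), (0.5, 1.5), (1.5, 1.5)], 1.0)
```

Under these assumptions, the two EF examples reproduce the limiting cases described in the text: a single efficient sweep of the map scores 1, while oscillation between two areas drives the score toward 0.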

To test these measures, we recorded data from avatars controlled by humans or bots within the UT2004 map shown in Fig. 11. Ten 5-min rounds were used to collect the data from each human player (h1–h5) and data from 35-min rounds was recorded for NeuroBot and the Hunter bot included with Pogamut. In each round either a human or one of the bots played against the Epic bot that is shipped with UT2004.5

Pairwise similarity between the data gathered from human and bot players was then computed using a standard two-tailed t-test for unequal variances.
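A two-tailed t-test for unequal variances is commonly known as Welch's t-test. The sketch below is not the authors' code; it shows how such a comparison could be computed with only the Python standard library, obtaining the p-value by numerical integration of the Student's t density (in practice a statistics package would normally be used). The function names and the sample data are illustrative assumptions.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def t_pdf(x, df):
    """Probability density of Student's t distribution."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value via Simpson integration of the density on [0, |t|].

    `steps` must be even for Simpson's rule.
    """
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    area = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return max(0.0, 1.0 - 2.0 * area)


# Made-up per-round values of one measure for two players.
player_a = [1, 2, 3, 4, 5]
player_b = [2, 3, 4, 5, 6]
t, df = welch_t(player_a, player_b)
p = two_tailed_p(t, df)
similar = p > 0.05  # no statistically significant difference at the 5% level
```

Note that the sketch does not guard against samples with zero variance, which would cause a division by zero.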

5 While the Epic bot that is included with UT2004 would have been a better target for comparison with NeuroBot, this was not possible in practice because of the limited amount of data that could be recorded from the Epic bot.

The first thing to note about the results shown in Fig. 10 is that human behavior is not a single unitary phenomenon; the movement patterns, health, kills, and deaths of the human-controlled avatars all varied significantly between players. In particular, h4 displayed a large amount of anomalous behavior compared with the rest of the humans and the bots, probably because she or he was the least skilled player. Some more specific comments about the results are as follows.

• EF: NeuroBot matched most of the human players on this measure, which suggests that it explores the game environment in a reasonably humanlike manner. Interestingly, both Hunter and h4 had higher exploration factors than NeuroBot and the other humans, which suggests that complete systematic exploration of an environment is not a typical human characteristic.

• Stationary time: NeuroBot matched a majority of humans on this measure, which exhibited a substantial amount of variability between humans. Hunter had a poor match to the human data because it was stationary for approximately four times longer than most human players.

• Path entropy: Hunter typically had a lower path entropy than the humans, whereas NeuroBot’s path entropy matched the majority of human players. These differences in path entropy are clearly visible in the path traces shown in Fig. 11.

• Average health: Hunter appears to conserve its health in a more humanlike way than NeuroBot, which had a higher average value than the human players.

• Number of kills: NeuroBot and the human players killed a similar number of other players, whereas Hunter typically killed two to three times more players. In this case, playing the game too well led to behavior that was nonhuman in character.

To get an overall idea of the extent to which NeuroBot and Hunter exhibited humanlike behavior within UT2004, we developed a humanness metric ᛗ (mannaz, meaning “man” in the runic alphabet), which measures the extent to which the behavior of an avatar matches the behavior of a population of humans, using a particular set of statistical measures. For a bot-controlled avatar, ᛗ is the average percentage of statistically significant matches between the bot and the sampled human players. ᛗ for a human-controlled avatar is the average percentage of statistically significant matches between the human and all of the sampled human players, this time including the human that is being measured, who is an example of a human player.
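The calculation of the humanness metric can be sketched as follows. This is an illustrative reading, not the authors' code: it assumes that a "match" means a pairwise p-value above 0.05 (the threshold given in the caption of Fig. 10), that the percentage of matched humans is computed per statistical measure, and that these percentages are then averaged over the measures. The function name, the input layout, and all p-values in the example are made up.

```python
def humanness(p_values, alpha=0.05):
    """Average over measures of the percentage of sampled humans matched.

    p_values maps a measure name to a list of p-values, one per sampled
    human; a p-value above alpha counts as a match.
    """
    per_measure = []
    for ps in p_values.values():
        matches = sum(1 for p in ps if p > alpha)
        per_measure.append(100.0 * matches / len(ps))
    return sum(per_measure) / len(per_measure)


# Hypothetical p-values for a bot compared with five human players.
bot_p_values = {
    "EF": [0.40, 0.20, 0.01, 0.60, 0.30],     # matches 4 of 5 humans
    "kills": [0.02, 0.50, 0.03, 0.001, 0.07]  # matches 2 of 5 humans
}
score = humanness(bot_p_values)  # (80% + 40%) / 2 = 60.0
```

For a human-controlled avatar, the same function could be applied to lists that also contain the comparison of that human with himself or herself, which always counts as a match.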

The results shown in Table I confirm the observation discussed earlier that the human player h4 behaved very differently than the other human players. Interestingly, the ᛗ-values are close to the results of the human judgements in the Botprize final, in which the most human human scored 67, the least human human scored 20, and NeuroBot scored 36.

VI. DISCUSSION AND FUTURE WORK

While the results from the Botprize final could have been improved by colocation of NeuroBot and the GameBots server, they still indicate that there is a significant gap between the average humanness rating of NeuroBot compared with the Epic bots and humans. One way of addressing this gap would be to add mechanisms to NeuroBot that could enable it to learn from its own mistakes and imitate avatars controlled by humans, an approach that has been successfully followed in other work (Section II-C). This learning could be implemented using genetic algorithms, or a spike-time-dependent plasticity (STDP) rule could be used to train a randomly wired module to perform a particular function. A second way of increasing NeuroBot’s humanness would be to extend its repertoire of behaviors. While NeuroBot can carry out a variety of actions, such as exploration, wall avoidance, and shooting, in a fairly humanlike manner, it lacks higher level strategies that are often used by humans, such as concealment, ambush, or sniping from a high position. Ideally, NeuroBot would learn these strategies by observing other players, although a theory of mind might be necessary to infer the purpose behind a strategy, for example, replaying a concealment maneuver will only work if other players do not observe the action.

Both the human judgements and the statistical measures illustrate that humanlike behavior within UT2004 is not a unitary phenomenon. Different people have different styles of play, people with divergent skill levels approach the game in distinct ways, and, within the Botprize competition, the judging task changes the human players’ behavior. This suggests that people who want to produce avatars with humanlike behavior will first have to decide what sort of human behavior they want to produce, for example, skilled or unskilled, standard player, or judge. This diversity of human behavior could be addressed in the Botprize competition by making the human judges into pure observers of the game and allowing only skilled human players to compete against the bots.6 The statistical approach outlined in Section V-B could also be used to address this issue by gathering a much larger sample of behavior from humans and classifying it according to different skill levels (perhaps using data about average health, deaths, and kills). Comparison between bots and humans with similar levels of skill would be likely to eliminate much of the variability. It would then be possible to calculate the humanness metric of a bot with a particular skill level. The metric ᛗ could then be used in a genetic algorithm to evolve a bot with a particular level of skill, and it could also be used to discriminate between human and software players, perhaps in situations where only humans are supposed to be competing.

6 One problem with this solution is that interaction is typically used in the Turing test to probe the full range of a system’s behavior and measure its response to dynamic input.

While humanlike behavior can be a valuable attribute in a computer game avatar, it is not necessarily an ideal benchmark for intelligent systems. As French [63] and Cullen [64] point out, the original Turing test and its variants are a rather anthropocentric measure of a particular type of culturally oriented human intelligence that is embodied in a unique way. It is possible that systems could achieve human level or superhuman intelligence in a completely different way from humans, without the cognitive structures that are associated with human intelligence. A further problem is that Turing test competitions like the Botprize set an upper limit on intelligent behavior: a superhuman or posthuman bot would presumably receive less than 100% for deviating from standard human behavior. An interesting way of addressing this concern would be to develop a method for measuring the intelligence of avatars within UT2004 that does not discriminate against systems that are intelligent in a nonhuman way. This could be approached using one of the universal intelligence measures that have been put forward, which are based on the complexity of the environment and the reward received by the agent as it interacts with the environment, processed across a variety of environments with different levels of complexity [61], [62], [65].7

To apply one of these universal intelligence measures within UT2004 a number of difficulties would have to be addressed. To begin with, the measures that have been proposed so far are fairly abstract and work would have to be done to adapt them to function within the rich dynamic environment of UT2004. Second, the current measures are based on the idea of summing across a number of different environments, whereas it seems plausible that a bot should be able to display intelligence within a single reasonably complex UT2004 environment.8 A final issue is that the data available to humans and bots are completely different, and so intelligence would have to be measured in a different way for bots and humans, or all players would have to be constrained to operate from the same interface.9 In spite of these difficulties, computer games do seem to present an ideal test bed for developing intelligence measures that are practical enough to be applied in the real world. It would be particularly interesting to see if humans were acting in a more intelligent way than bots within UT2004, and this type of measure could also be used to evolve more intelligent bots.

7 Insa-Cabrera et al. [66] have done some work comparing the results of these tests on humans and a reinforcement learning algorithm.

8 This is related to difficulties with defining an environment within the context of these measures. For example: is the sensor data the environment, or is the environment the sum of possible sensory data within a particular map? Does the environment change with the movement of each agent? And so on.

tion, and hardware simulators of spiking neurons on a field-programmable gate array (FPGA) [68] or custom chips [69] could be used to enable these devices to run in real time.

While the main focus of this work has been on using a brain-inspired neural network to produce humanlike behavior in a computer game avatar, the construction of embodied spiking neural models is also a good way of improving our understanding of the brain. In our current work, we are using the NeuroBot network as a test bed to explore how a system can be analyzed using graph theory measures of structural and effective connectivity to identify global workspace nodes [45]. Techniques developed on NeuroBot could then be applied to data recorded from the human brain to confirm or refute the hypothesis that some aspects of the brain’s functioning operate like a global workspace. We are also planning to use this system to explore how neural synchronization could implement a global workspace along the lines proposed by Shanahan [17]. In our current system, the broadcast information is the activation within a population of global workspace neurons; in the neural synchronization approach, the globally broadcast information is the information that is passed within a population of synchronized neurons. This would open up the possibility of a more biologically realistic and dynamic definition of the global workspace, and it would enable us to link our work to the extensive literature on the connection between neural synchronization and consciousness [70].

VII. CONCLUSION

This work has demonstrated that a network of 20 000 spiking neurons and 1.5 million connections organized using a global workspace architecture is an effective way of producing real-time humanlike behavior in a computer game avatar. This paper has also highlighted the variability of human behavior within UT2004 and explored how statistical measures, combined into our humanness metric ᛗ, could complement human judgements and provide an automatic way of evaluating humanlike behavior in computer games. Future applications of this system include developing more believable bots in computer games, modeling different aspects of the brain, and controlling robots in the real world.

ACKNOWLEDGMENT

The authors would like to thank the reviewers for their helpful comments.

REFERENCES

[1] A. Turing, “Computing machinery and intelligence,” Mind, vol. 59, pp. 433–460, 1950.

[7] P. Hingston, “A Turing test for computer game bots,” IEEE Trans. Comput. Intell. AI Games, vol. 1, no. 3, pp. 169–186, Sep. 2009.

[8] P. Hingston, Botprize Website [Online]. Available: http://botprize.org

[9] B. J. Baars, A Cognitive Theory of Consciousness. Cambridge, U.K.: Cambridge Univ. Press, 1988.

[10] J. Gemrot, R. Kadlec, M. Bída, O. Burkert, R. Píbil, J. Havlíček, L. Zemčák, J. Šimlovič, R. Vansa, M. Štolba, T. Plch, and C. Brom, “Pogamut 3 can assist developers in building AI (not only) for their videogame agents,” in Agents for Games and Simulations, ser. Lecture Notes in Computer Science. Berlin, Germany: Springer-Verlag, 2009, vol. 5920, pp. 1–15.

[11] M. P. Shanahan, “A spiking neuron model of cortical broadcast and competition,” Consciousness Cogn., vol. 17, no. 1, pp. 288–303, 2008.

[12] S. Dehaene, M. Kerszberg, and J. P. Changeux, “A neuronal model of a global workspace in effortful cognitive tasks,” Proc. Nat. Acad. Sci. USA, vol. 95, no. 24, pp. 14 529–14 534, 1998.

[13] S. Dehaene, C. Sergent, and J. P. Changeux, “A neuronal network model linking subjective reports and objective physiological data during conscious perception,” Proc. Nat. Acad. Sci. USA, vol. 100, no. 14, pp. 8520–8525, 2003.

[14] S. Dehaene and J. P. Changeux, “Ongoing spontaneous activity controls access to consciousness: A neuronal model for inattentional blindness,” PLoS Biol., vol. 3, no. 5, 2005, e141.

[15] A. Zylberberg, D. Fernández Slezak, P. R. Roelfsema, S. Dehaene, and M. Sigman, “The brain’s router: A cortical network model of serial processing in the primate brain,” PLoS Comput. Biol., vol. 6, no. 4, Apr. 2010, e1000765.

[16] M. P. Shanahan, “A cognitive architecture that combines internal simulation with a global workspace,” Consciousness Cogn., vol. 15, pp. 433–449, 2006.

[17] M. P. Shanahan, Embodiment and the Inner Life: Cognition and Consciousness in the Space of Possible Minds. Oxford, U.K.: Oxford Univ. Press, 2010.

[18] S. Franklin, “IDA—A conscious artifact?,” J. Consciousness Studies, vol. 10, no. 4–5, pp. 47–66, 2003.

[19] S. Franklin, B. J. Baars, U. Ramamurthy, and M. Ventura, “The role of consciousness in memory,” Brains Minds Media, vol. 2005, bmm150.

[20] T. Madl, B. J. Baars, and S. Franklin, “The timing of the cognitive cycle,” PLoS One, vol. 6, no. 4, 2011, DOI: 10.1371/journal.pone.0014803, e14803.

[21] R. Arrabales, A. Ledezma, and A. Sanchis, “Towards conscious-like behavior in computer game characters,” in Proc. Int. Conf. Comput. Intell. Games, 2009, pp. 217–224.

[22] J. L. Krichmar, D. A. Nitz, J. A. Gally, and G. M. Edelman, “Characterizing functional hippocampal pathways in a brain-based device as it solves a spatial memory task,” Proc. Nat. Acad. Sci. USA, vol. 102, no. 6, pp. 2111–2116, 2005.

[23] D. Gamez, “Information integration based predictions about the conscious states of a spiking neural network,” Consciousness Cogn., vol. 19, no. 1, pp. 294–310, 2010.

[24] A. Bouganis and M. Shanahan, “Training a spiking neural network to control a 4-DoF robotic arm based on spike timing-dependent plasticity,” in Proc. IEEE Int. Joint Conf. Neural Netw., 2010, pp. 4104–4111.

[25] H. Hagras, A. Pounds-Cornish, M. Colley, V. Callaghan, and G. Clarke, “Evolving spiking neural network controllers for autonomous robots,” in Proc. IEEE Int. Conf. Robot. Autom., 2004, vol. 5, pp. 4620–4626.

[26] E. Nichols, L. J. McDaid, and M. N. H. Siddique, “Case study on a self-organizing spiking neural network for robot navigation,” Int. J. Neural Syst., vol. 20, no. 6, pp. 501–508, 2010.

[28] A. Mora, M. Moreno, J. Merelo, P. Castillo, M. Arenas, and J. Laredo, “Evolving the cooperative behaviour in unreal™ bots,” in Proc. IEEE Symp. Comput. Intell. Games, Aug. 2010, pp. 241–248.

[29] M. Patrick, “Online evolution in unreal tournament 2004,” in Proc. IEEE Symp. Comput. Intell. Games, Aug. 2010, pp. 249–256.

[30] R. Graham, H. McCabe, and S. Sheridan, “Realistic agent movement in dynamic game environments,” in Proc. DiGRA Conf., 2005 [Online]. Available: http://www.digra.org/dl/display_html?chid=http://www.digra.org/dl/db/06278.14234.pdf

[31] I. Karpov, T. D’Silva, C. Varrichio, K. Stanley, and R. Miikkulainen, “Integration and evaluation of exploration-based learning in games,” in Proc. IEEE Symp. Comput. Intell. Games, May 2006, pp. 39–44.

[32] D. Cuadrado and Y. Saez, “Chuck Norris rocks!,” in Proc. IEEE Symp. Comput. Intell. Games, Sep. 2009, pp. 69–74.

[33] C. Bauckhage, C. Thurau, and G. Sagerer, “Learning human-like opponent behavior for interactive computer games,” in Pattern Recognition, ser. Lecture Notes in Computer Science. Berlin, Germany: Springer-Verlag, 2003, vol. 2781, pp. 148–155.

[34] S. Zanetti and A. E. Rhalibi, “Machine learning techniques for FPS in Q3,” in Proc. ACM SIGCHI Int. Conf. Adv. Comput. Entertain. Technol., 2004, pp. 239–244.

[35] C. Thurau, C. Bauckhage, and G. Sagerer, “Synthesizing movements for computer game characters,” in Pattern Recognition, ser. Lecture Notes in Computer Science. Berlin, Germany: Springer-Verlag, 2004, vol. 3175, pp. 179–186.

[36] C. Thurau, T. Paczian, and C. Bauckhage, “Is Bayesian imitation learning the route to believable gamebots?,” in Proc. GAME-ON North Amer., 2005, pp. 3–9.

[37] R. Thawonmas, S. Murakami, and T. Sato, “Believable judge bot that learns to select tactics and judge opponents,” in Proc. IEEE Conf. Comput. Intell. Games, Sep. 2011, pp. 345–349.

[38] D. Wang, B. Subagdja, A.-H. Tan, and G.-W. Ng, “Creating human-like autonomous players in real-time first person shooter computer games,” in Proc. Innovative Appl. Artif. Intell. Conf., 2009, pp. 173–178.

[39] J. Schrum, I. Karpov, and R. Miikkulainen, “UT^2: Human-like behavior via neuroevolution of combat behavior and replay of human traces,” in Proc. IEEE Conf. Comput. Intell. Games, Sep. 2011, pp. 329–336.

[40] N. van Hoorn, J. Togelius, and J. Schmidhuber, “Hierarchical controller learning in a first-person shooter,” in Proc. IEEE Symp. Comput. Intell. Games, Sep. 2009, pp. 294–301.

[41] Y.-H. Chang, R. Maheswaran, T. Levinboim, and V. Rajan, “Learning and evaluating human-like NPC behaviors in dynamic games,” in Proc. Artif. Intell. Interactive Digit. Entertain. Conf., 2011, pp. 8–13.

[42] H.-K. Pao, K.-T. Chen, and H.-C. Chen, “Game bot detection via avatar trajectory analysis,” IEEE Trans. Comput. Intell. AI Games, vol. 2, no. 3, pp. 162–175, Sep. 2010.

[43] Z. Fountas, “Spiking neural networks for human-like avatar control in a simulated environment,” M.Sc. thesis, Dept. Comput., Imperial College London, London, U.K., 2011.

[44] D. Gamez, R. Newcombe, O. Holland, and R. Knight, “Two simulation tools for biologically inspired virtual robotics,” in Proc. IEEE 5th Conf. Adv. Cybern. Syst., 2006, pp. 85–90.

[45] D. Gamez, A. K. Fidjeland, and E. Lazdins, “iSpike: A spiking neural interface for the iCub robot,” Bioinspirat. Biomimet., vol. 7, no. 2, 2012, DOI: 10.1088/1748-3182/7/2/025008.

[46] V. Braitenberg, Vehicles: Experiments in Synthetic Psychology. Cambridge, MA, USA: MIT Press, 1984.

[47] W. Maass, “Lower bounds for the computational power of networks of spiking neurons,” Neural Comput., vol. 8, no. 1, pp. 1–40, 1996.

[48] W. Maass, “Noisy spiking neurons with temporal coding have more computational power than sigmoidal neurons,” Adv. Neural Inf. Process. Syst., vol. 9, pp. 211–217, 1997.

[49] R. Van Rullen and S. Thorpe, “Rate coding versus temporal order coding: What the retinal ganglion cells tell the visual cortex,” Neural Comput., vol. 13, pp. 1255–1283, Jun. 2001.

[50] T. Masquelier and S. Thorpe, “Unsupervised learning of visual features through spike timing dependent plasticity,” PLoS Comput. Biol., vol. 3, no. 2, Feb. 2007, e31.

[51] W. Maass and C. M. Bishop, Eds., Pulsed Neural Networks. Cambridge, MA, USA: MIT Press, 1999.

[52] F. Rieke, Spikes: Exploring the Neural Code. Cambridge, MA, USA: MIT Press, 1997.

[53] T. Gollisch and M. Meister, “Rapid neural coding in the retina with relative spike latencies,” Science, vol. 319, no. 5866, pp. 1108–1111, 2008.

[54] A. K. Fidjeland and M. P. Shanahan, “Accelerated simulation of spiking neural networks using GPUs,” in Proc. IEEE Int. Joint Conf. Neural Netw., 2010, DOI: 10.1109/IJCNN.2010.5596678.

[55] A. Rast, F. Galluppi, S. Davies, L. Plana, C. Patterson, T. Sharp, D. Lester, and S. Furber, “Concurrent heterogeneous neural model simulation on real-time neuromimetic hardware,” Neural Netw., vol. 24, no. 9, pp. 961–978, 2011.

[56] E. M. Izhikevich, “Simple model of spiking neurons,” IEEE Trans. Neural Netw., vol. 14, no. 6, pp. 1569–1572, Nov. 2003.

[57] R. Ananthanarayanan, S. K. Esser, H. D. Simon, and D. S. Modha, “The cat is out of the bag: Cortical simulations with $10^9$ neurons, $10^{13}$ synapses,” in Proc. Conf. High Performance Comput. Netw. Storage Anal., 2009, DOI: 10.1145/1654059.1654124.

[58] E. Izhikevich and G. Edelman, “Large-scale model of mammalian thalamocortical systems,” Proc. Nat. Acad. Sci. USA, vol. 105, no. 9, pp. 3593–3598, 2008.

[59] A. K. Fidjeland, Nemo Website [Online]. Available: http://nemosim.sf.net

[60] R. Adobbati, A. N. Marshall, A. Scholer, S. Tejada, G. Kaminka, S. Schaffer, and C. Sollitto, “Gamebots: A 3D virtual world test-bed for multi-agent research,” in Proc. Int. Workshop Infrastructure Agents MAS Scalable MAS, 2001, vol. 45, pp. 47–52.

[61] S. Legg and M. Hutter, “Universal intelligence: A definition of machine intelligence,” Minds Mach., vol. 17, pp. 391–444, Dec. 2007.

[62] J. Hernández-Orallo and D. L. Dowe, “Measuring universal intelligence: Towards an anytime intelligence test,” Artif. Intell., vol. 174, pp. 1508–1539, Dec. 2010.

[63] R. M. French, “Subcognition and the limits of the Turing test,” Mind, vol. 99, pp. 53–65, 1990.

[64] J. Cullen, “Imitation versus communication: Testing for human-like intelligence,” Minds Mach., vol. 19, pp. 237–254, 2009.

[65] B. Hibbard, “Measuring agent intelligence via hierarchies of environments,” Artif. Gen. Intell., pp. 303–308, 2011.

[66] J. Insa-Cabrera, D. L. Dowe, S. España-Cubillo, M. V. Hernández-Lloreda, and J. Hernández-Orallo, “Comparing humans and AI agents,” Artif. Gen. Intell., pp. 122–132, 2011.

[67] C. Nehaniv and K. Dautenhahn, “The correspondence problem,” in Imitation in Animals and Artifacts, ser. Bradford Books; Complex Adaptive Systems, K. Dautenhahn and C. Nehaniv, Eds. Cambridge, MA, USA: MIT Press, 2002, pp. 41–61.

[68] L. P. Maguire, T. M. McGinnity, B. Glackin, A. Ghani, A. Belatreche, and J. Harkin, “Challenges for large-scale implementations of spiking neural networks on FPGAs,” Neurocomputing, vol. 71, no. 1–3, pp. 13–29, 2007.

[69] J. Schemmel, D. Brüderle, A. Grübl, M. Hock, K. Meier, and S. Millner, “A wafer-scale neuromorphic hardware system for large-scale neural modeling,” in Proc. IEEE Int. Conf. Circuits Syst., Jun. 2010, pp. 1947–1950.

[70] F. Varela, J. P. Lachaux, E. Rodriguez, and J. Martinerie, “The brainweb: Phase synchronization and large-scale integration,” Nat. Rev. Neurosci., vol. 2, no. 4, pp. 229–239, 2001.

David Gamez received the Ph.D. degrees in philosophy and computer science from the University of Essex, Colchester, U.K., in 2002 and 2008, respectively.